Andrew S. Morgan

Collision-inclusive Manipulation Planning for Occluded Object Grasping via Compliant Robot Motions

Dec 09, 2024

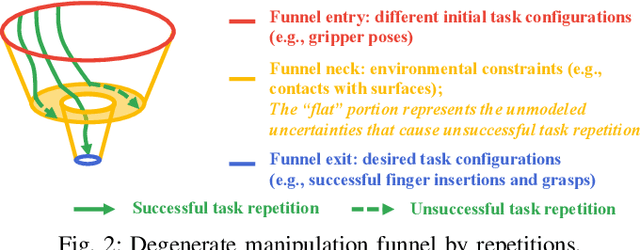

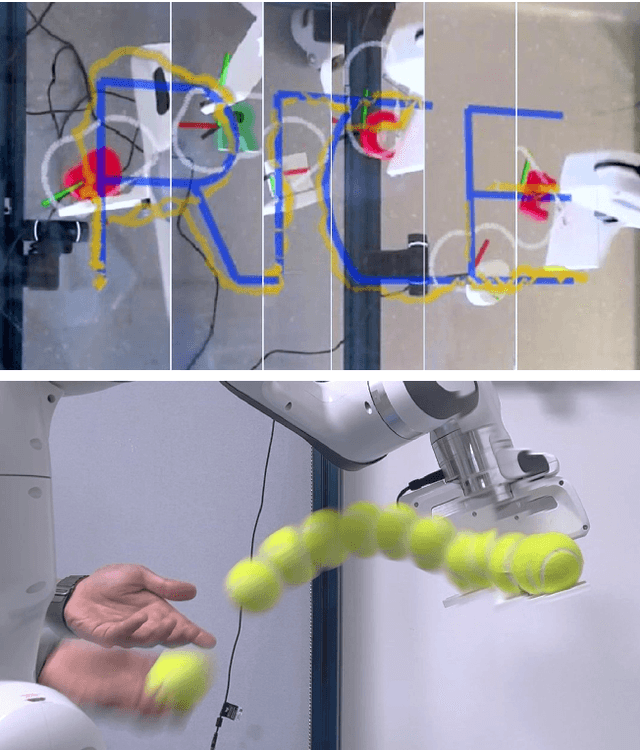

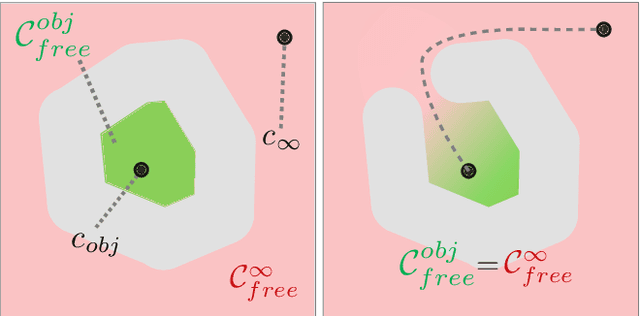

Abstract: Traditional robotic manipulation mostly focuses on collision-free tasks. In practice, however, many manipulation tasks (e.g., occluded object grasping) require the robot to intentionally collide with the environment to reach a desired task configuration. By enabling compliant robot motions, collisions between the robot and the environment are allowed and can thus be exploited, but more physical uncertainties are introduced. To address collision-rich problems such as occluded object grasping while handling the involved uncertainties, we propose a collision-inclusive planning framework that can transition the robot to a desired task configuration via roughly modeled collisions absorbed by Cartesian impedance control. By strategically exploiting the environmental constraints and exploring inside a manipulation funnel formed by task repetitions, our framework can effectively reduce physical and perception uncertainties. With real-world evaluations on both single-arm and dual-arm setups, we show that our framework is able to efficiently address various realistic occluded grasping problems where a feasible grasp does not initially exist.
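The abstract above relies on Cartesian impedance control to absorb collisions. As a rough illustration only, the textbook per-axis spring-damper law behind that idea can be sketched as follows. The function name `impedance_force`, the gains, and the poses are all hypothetical values chosen for this sketch; none of them come from the paper, and this is not the authors' controller.

```python
from typing import List, Sequence


def impedance_force(
    x_des: Sequence[float],
    x: Sequence[float],
    v_des: Sequence[float],
    v: Sequence[float],
    stiffness: Sequence[float],
    damping: Sequence[float],
) -> List[float]:
    """Per-axis spring-damper law: F = K * (x_des - x) + D * (v_des - v).

    Simplified sketch: translation only, diagonal gains, no orientation terms.
    """
    return [
        k * (xd - xi) + d * (vd - vi)
        for xd, xi, vd, vi, k, d in zip(x_des, x, v_des, v, stiffness, damping)
    ]


# Hypothetical scenario: a surface stops the end effector 1 cm above its
# commanded height, so the controller pushes down with a bounded force
# instead of fighting the contact rigidly.
force = impedance_force(
    x_des=[0.5, 0.0, 0.20],
    x=[0.5, 0.0, 0.21],
    v_des=[0.0, 0.0, 0.0],
    v=[0.0, 0.0, 0.0],
    stiffness=[500.0, 500.0, 200.0],  # N/m, assumed values
    damping=[40.0, 40.0, 20.0],       # N*s/m, assumed values
)
print(force)
```

Lower stiffness along an axis makes the robot yield more along that axis, which is why a position error caused by contact produces only a modest force rather than a fault.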

Caging in Time: A Framework for Robust Object Manipulation under Uncertainties and Limited Robot Perception

Oct 21, 2024

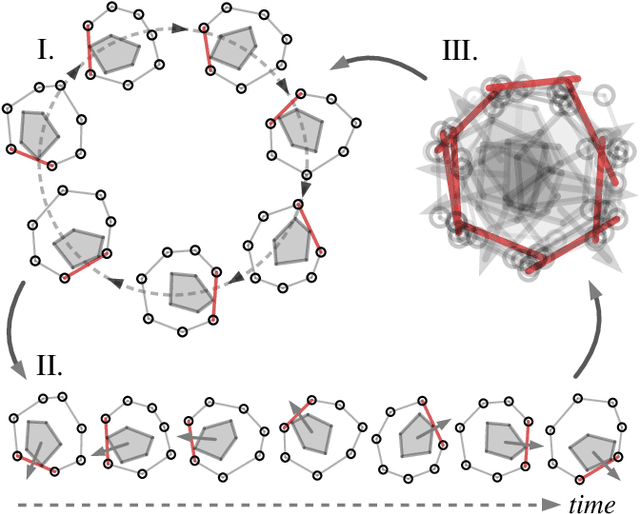

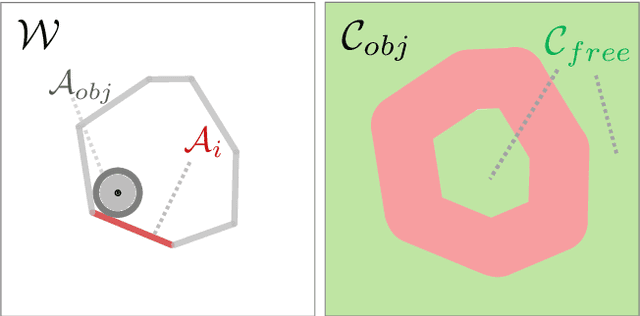

Abstract: Real-world object manipulation has been commonly challenged by physical uncertainties and perception limitations. Although caging configuration-based manipulation frameworks are an effective strategy and have successfully provided robust solutions, they are not broadly applicable due to their strict requirements on the availability of multiple robots, widely distributed contacts, or specific geometries of the robots or the objects. To this end, this work proposes a novel concept, termed Caging in Time, to allow caging configurations to be formed even if there is just one robot engaged in a task. This novel concept can be explained by the insight that even if a caging configuration is needed to constrain the motion of an object, only a small portion of the cage is actively manipulating at a time. As such, we can switch the configuration of the robot strategically so that by collapsing its configuration in time, we will see a cage formed and its necessary portion active whenever needed. We instantiate our Caging in Time theory on challenging quasistatic and dynamic manipulation tasks, showing that Caging in Time can be achieved in general state spaces including geometry-based and energy-based spaces. With extensive experiments, we show robust and accurate manipulation, in an open-loop manner, without requiring detailed knowledge of the object geometry or physical properties, nor real-time accurate feedback on the manipulation states. In addition to being an effective and robust open-loop manipulation solution, the proposed theory can be a supplementary strategy to other manipulation systems affected by uncertain or limited robot perception.

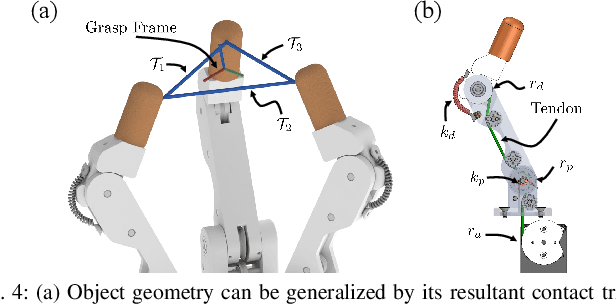

Towards Generalized Robot Assembly through Compliance-Enabled Contact Formations

Mar 09, 2023

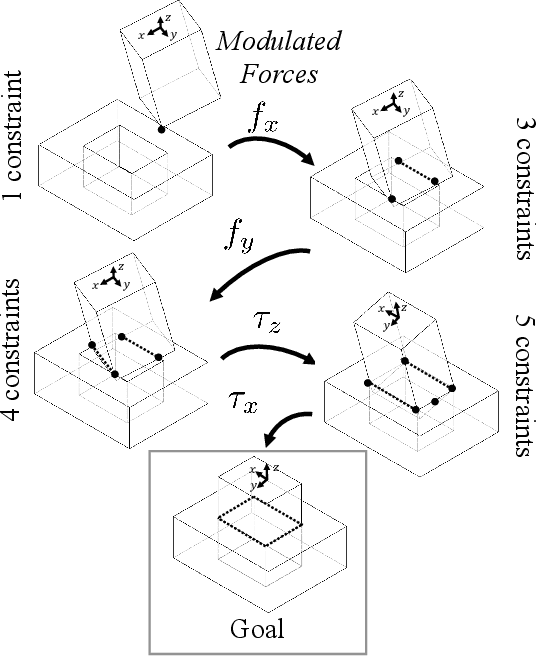

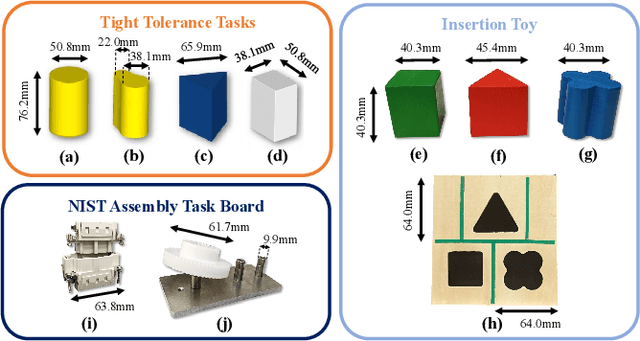

Abstract: Contact can be conceptualized as a set of constraints imposed on two bodies that are interacting with one another in some way. The nature of a contact, whether a point, line, or surface, dictates how these bodies are able to move with respect to one another given a force, and a set of contacts can provide either partial or full constraint on a body's motion. Decades of work have explored how to explicitly estimate the location of a contact and its dynamics, e.g., frictional properties, but investigated methods have been computationally expensive and there often exists significant uncertainty in the final calculation. This has affected further advancements in contact-rich tasks that are seemingly simple to humans, such as generalized peg-in-hole insertions. In this work, instead of explicitly estimating the individual contact dynamics between an object and its hole, we approach this problem by investigating compliance-enabled contact formations. More formally, contact formations are defined according to the constraints imposed on an object's available degrees-of-freedom. Rather than estimating individual contact positions, we abstract out this calculation to an implicit representation, allowing the robot to either acquire, maintain, or release constraints on the object during the insertion process, by monitoring forces enacted on the end effector through time. Using a compliant robot, our method is desirable in that we are able to complete industry-relevant insertion tasks of tolerances <0.25mm without prior knowledge of the exact hole location or its orientation. We showcase our method on more generalized insertion tasks, such as commercially available non-cylindrical objects and open world plug tasks.

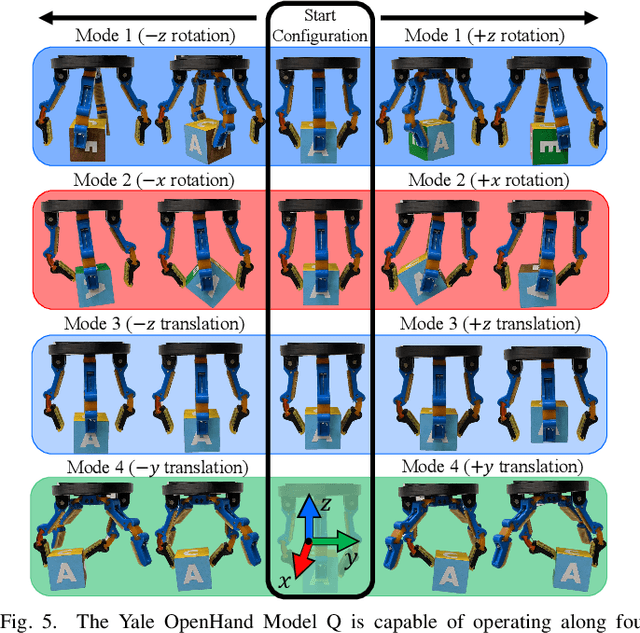

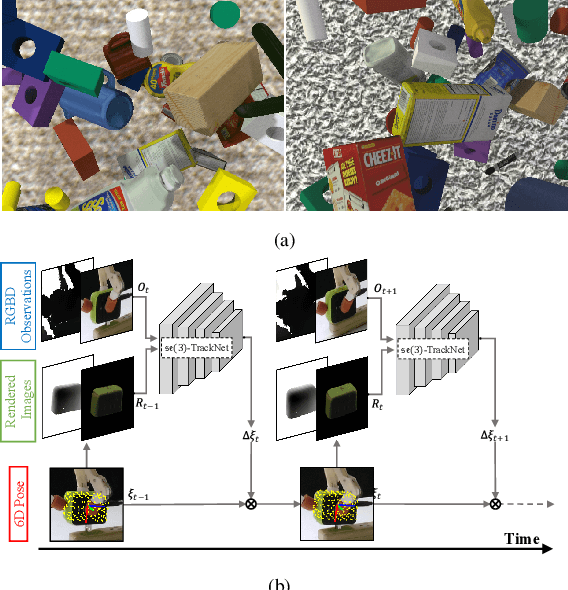

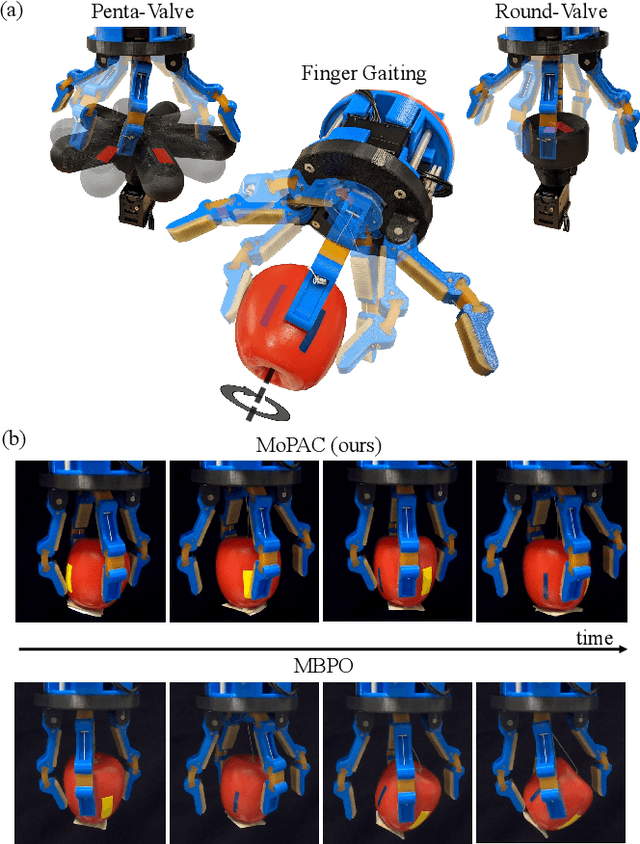

Complex In-Hand Manipulation via Compliance-Enabled Finger Gaiting and Multi-Modal Planning

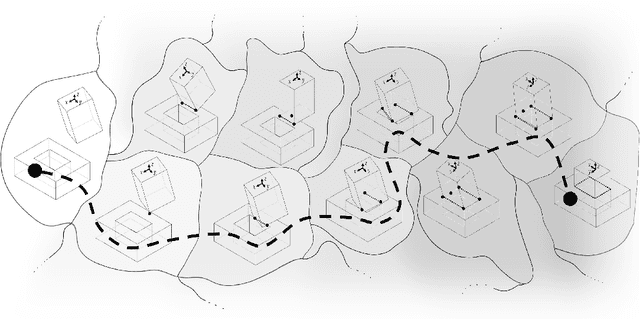

Jan 20, 2022

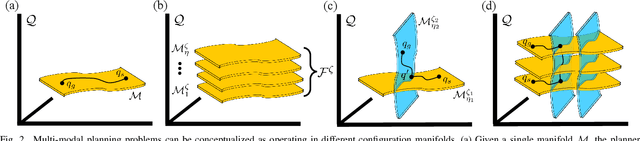

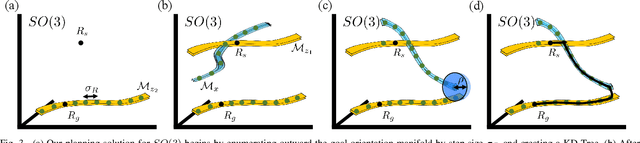

Abstract: Constraining contacts to remain fixed on an object during manipulation limits the potential workspace size, as motion is subject to the hand's kinematic topology. Finger gaiting is one way to alleviate such restraints. It allows contacts to be freely broken and remade so as to operate on different manipulation manifolds. This capability, however, has traditionally been difficult or impossible to practically realize. A finger gaiting system must simultaneously plan for and control forces on the object while maintaining stability during contact switching. This work alleviates the traditional requirement by taking advantage of system compliance, allowing the hand to more easily switch contacts while maintaining a stable grasp. Our method achieves complete SO(3) finger gaiting control of grasped objects against gravity by developing a manipulation planner that operates via orthogonal safe modes of a compliant, underactuated hand absent of tactile sensors or joint encoders. During manipulation, a low-latency 6D pose object tracker provides feedback via vision, allowing the planner to update its plan online so as to adaptively recover from trajectory deviations. The efficacy of this method is showcased by manipulating both convex and non-convex objects on a real robot. Its robustness is evaluated via perturbation rejection and long trajectory goals. To the best of the authors' knowledge, this is the first work that has autonomously achieved full SO(3) control of objects within-hand via finger gaiting and without a support surface, elucidating a valuable step towards realizing true robot in-hand manipulation capabilities.

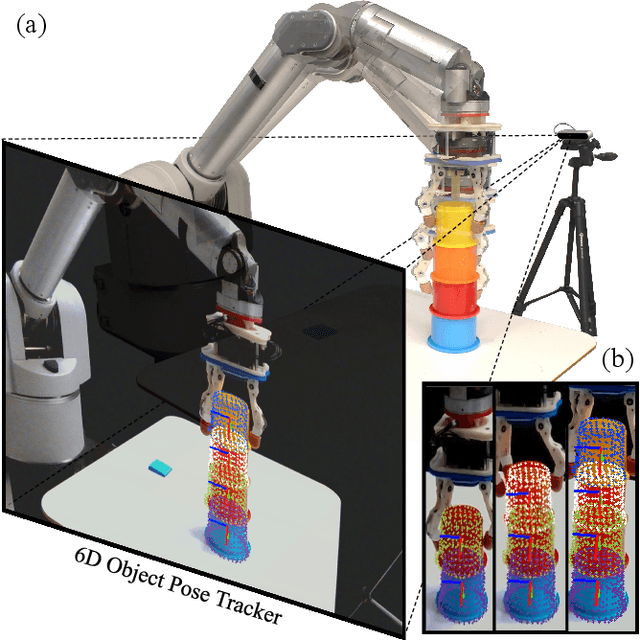

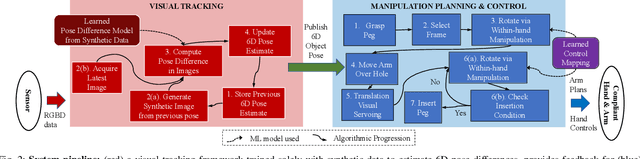

Vision-driven Compliant Manipulation for Reliable, High-Precision Assembly Tasks

Jun 26, 2021

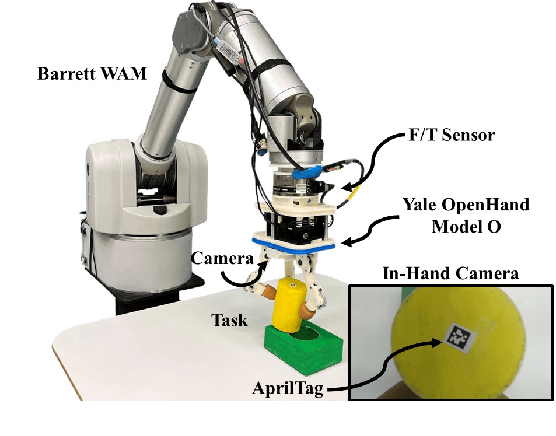

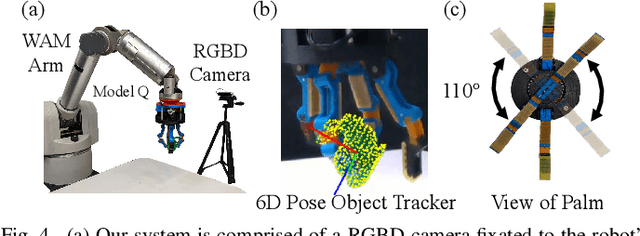

Abstract: Highly constrained manipulation tasks continue to be challenging for autonomous robots as they require high levels of precision, typically less than 1mm, which is often incompatible with what can be achieved by traditional perception systems. This paper demonstrates that the combination of state-of-the-art object tracking with passively adaptive mechanical hardware can be leveraged to complete precision manipulation tasks with tight, industrially-relevant tolerances (0.25mm). The proposed control method closes the loop through vision by tracking the relative 6D pose of objects in the relevant workspace. It adjusts the control reference of both the compliant manipulator and the hand to complete object insertion tasks via within-hand manipulation. Contrary to previous efforts for insertion, our method does not require expensive force sensors, precision manipulators, or time-consuming, online learning, which is data hungry. Instead, this effort leverages mechanical compliance and utilizes an object agnostic manipulation model of the hand learned offline, off-the-shelf motion planning, and an RGBD-based object tracker trained solely with synthetic data. These features allow the proposed system to easily generalize and transfer to new tasks and environments. This paper describes in detail the system components and showcases its efficacy with extensive experiments involving tight tolerance peg-in-hole insertion tasks of various geometries as well as open-world constrained placement tasks.

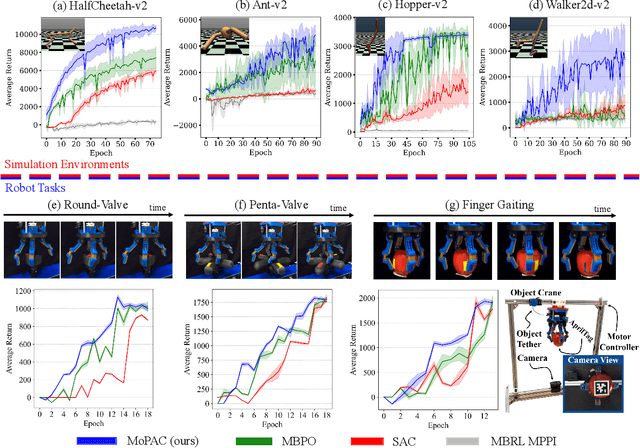

Model Predictive Actor-Critic: Accelerating Robot Skill Acquisition with Deep Reinforcement Learning

Mar 25, 2021

Abstract: Substantial advancements to model-based reinforcement learning algorithms have been impeded by the model-bias induced by the collected data, which generally hurts performance. Meanwhile, their inherent sample efficiency warrants utility for most robot applications, limiting potential damage to the robot and its environment during training. Inspired by information theoretic model predictive control and advances in deep reinforcement learning, we introduce Model Predictive Actor-Critic (MoPAC), a hybrid model-based/model-free method that combines model predictive rollouts with policy optimization so as to mitigate model bias. MoPAC leverages optimal trajectories to guide policy learning, but explores via its model-free method, allowing the algorithm to learn more expressive dynamics models. This combination guarantees optimal skill learning up to an approximation error and reduces necessary physical interaction with the environment, making it suitable for real-robot training. We provide extensive results showcasing how our proposed method generally outperforms the current state of the art and conclude by evaluating MoPAC for learning on a physical robotic hand performing valve rotation and finger gaiting, a task that requires grasping, manipulation, and then regrasping of an object.
