Gaotian Wang

Collision-inclusive Manipulation Planning for Occluded Object Grasping via Compliant Robot Motions

Dec 09, 2024

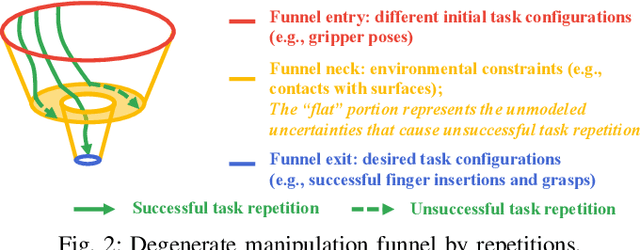

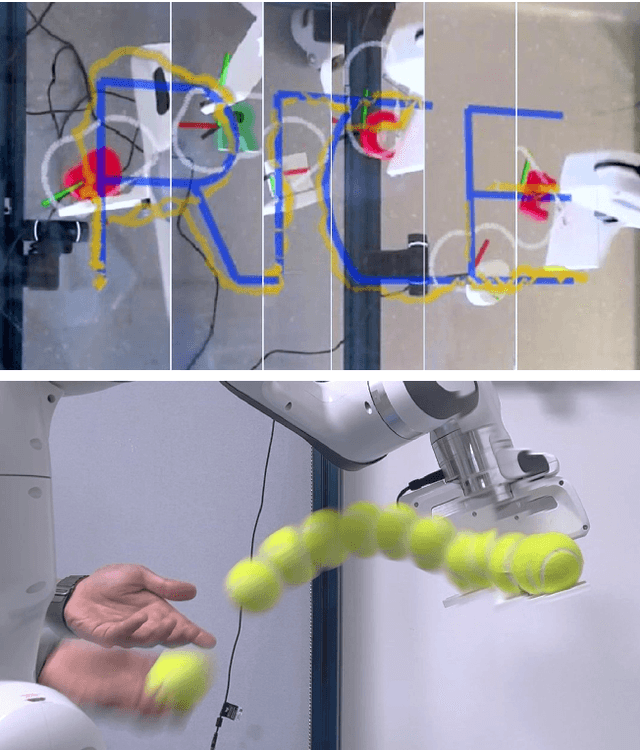

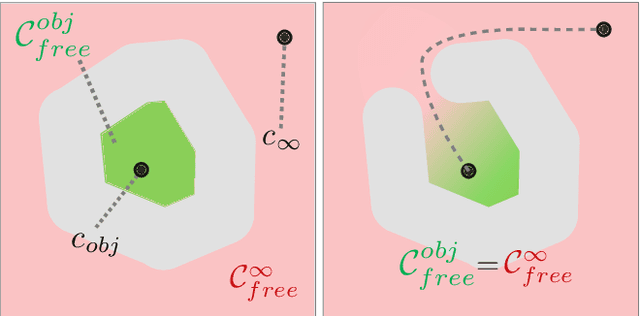

Abstract: Traditional robotic manipulation mostly focuses on collision-free tasks. In practice, however, many manipulation tasks (e.g., occluded object grasping) require the robot to intentionally collide with the environment to reach a desired task configuration. By enabling compliant robot motions, collisions between the robot and the environment are allowed and can thus be exploited, but more physical uncertainties are introduced. To address collision-rich problems such as occluded object grasping while handling the involved uncertainties, we propose a collision-inclusive planning framework that can transition the robot to a desired task configuration via roughly modeled collisions absorbed by Cartesian impedance control. By strategically exploiting the environmental constraints and exploring inside a manipulation funnel formed by task repetitions, our framework can effectively reduce physical and perception uncertainties. With real-world evaluations on both single-arm and dual-arm setups, we show that our framework is able to efficiently address various realistic occluded grasping problems where a feasible grasp does not initially exist.
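
As a rough illustration of how Cartesian impedance control can absorb a collision, the sketch below computes a spring-damper force that pulls the end effector toward a target it may never reach. It is not the controller from the paper: the function name `impedance_force`, the translation-only model, and the gain values are all assumptions made for this example.

```python
import numpy as np

def impedance_force(x_des, x, v_des, v, stiffness, damping):
    """Translation-only spring-damper law: F = K (x_des - x) + D (v_des - v).

    Hypothetical helper for illustration; gains are per-axis diagonal terms.
    """
    K = np.diag(stiffness)
    D = np.diag(damping)
    pos_err = np.asarray(x_des, dtype=float) - np.asarray(x, dtype=float)
    vel_err = np.asarray(v_des, dtype=float) - np.asarray(v, dtype=float)
    return K @ pos_err + D @ vel_err

# The target lies 1 cm beyond an obstacle along x and the robot is at rest,
# so the commanded force stays bounded by the stiffness instead of growing.
force = impedance_force(
    x_des=[0.51, 0.0, 0.3], x=[0.50, 0.0, 0.3],
    v_des=[0.0, 0.0, 0.0], v=[0.0, 0.0, 0.0],
    stiffness=[200.0, 200.0, 200.0],  # N/m, assumed values
    damping=[20.0, 20.0, 20.0],       # N*s/m, assumed values
)
print(force)
```

With a 1 cm error and a stiffness of 200 N/m, the contact force settles near 2 N along x, which is the sense in which a compliant controller lets a planned collision be tolerated rather than avoided.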

Caging in Time: A Framework for Robust Object Manipulation under Uncertainties and Limited Robot Perception

Oct 21, 2024

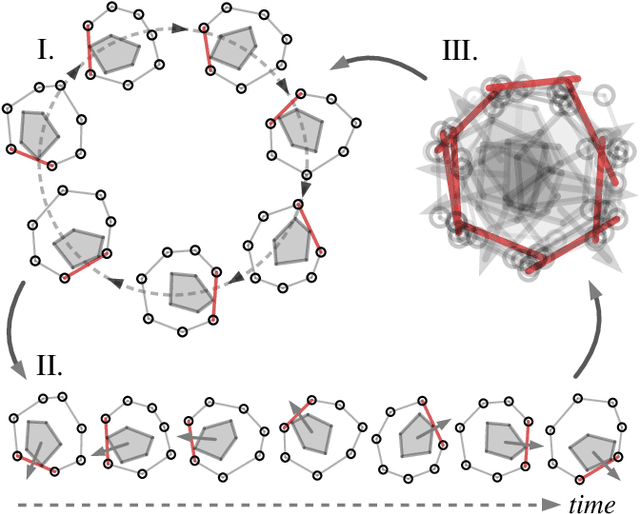

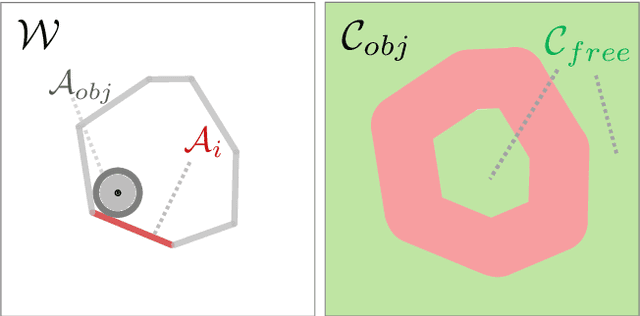

Abstract: Real-world object manipulation has been commonly challenged by physical uncertainties and perception limitations. While caging configuration-based manipulation frameworks are an effective strategy and have successfully provided robust solutions, they are not broadly applicable due to their strict requirements on the availability of multiple robots, widely distributed contacts, or specific geometries of the robots or the objects. To this end, this work proposes a novel concept, termed Caging in Time, to allow caging configurations to be formed even if there is just one robot engaged in a task. This novel concept can be explained by the insight that even if a caging configuration is needed to constrain the motion of an object, only a small portion of the cage is actively manipulating at a time. As such, we can switch the configuration of the robot strategically so that by collapsing its configuration in time, we will see a cage formed and its necessary portion active whenever needed. We instantiate our Caging in Time theory on challenging quasistatic and dynamic manipulation tasks, showing that Caging in Time can be achieved in general state spaces including geometry-based and energy-based spaces. With extensive experiments, we show robust and accurate manipulation, in an open-loop manner, without requiring detailed knowledge of the object geometry or physical properties, or real-time accurate feedback on the manipulation states. In addition to being an effective and robust open-loop manipulation solution, the proposed theory can be a supplementary strategy to other manipulation systems affected by uncertain or limited robot perception.

UNO Push: Unified Nonprehensile Object Pushing via Non-Parametric Estimation and Model Predictive Control

Mar 20, 2024

Abstract: Nonprehensile manipulation through precise pushing is an essential skill that has been commonly challenged by perception and physical uncertainties, such as those associated with contacts, object geometries, and physical properties. For this, we propose a unified framework that jointly addresses system modeling, action generation, and control. While most existing approaches either heavily rely on a priori system information for analytic modeling, or leverage a large dataset to learn dynamic models, our framework approximates a system transition function via non-parametric learning using only a small number of exploratory actions (ca. 10). The approximated function is then integrated with model predictive control to provide precise pushing manipulation. Furthermore, we show that the approximated system transition functions can be robustly transferred across novel objects while being updated online to continuously improve the manipulation accuracy. Through extensive experiments on a real robot platform with a set of novel objects and comparing against a state-of-the-art baseline, we show that the proposed unified framework is a lightweight and highly effective approach to enable precise pushing manipulation all by itself. Our evaluation results illustrate that the system can robustly ensure millimeter-level precision and can straightforwardly work on any novel object.
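
The pairing of a non-parametric transition estimate with model predictive control can be sketched in a few lines. The abstract does not say which estimator or optimizer is used, so this example swaps in Nadaraya-Watson kernel regression and a one-step, sampling-based planner. The toy dynamics, the bandwidth, and the names `predict` and `choose_action` are assumptions for illustration only.

```python
import numpy as np

def predict(action, actions, deltas, bandwidth=0.5):
    """Kernel-weighted average of observed displacements (Nadaraya-Watson)."""
    d2 = np.sum((actions - action) ** 2, axis=1)
    w = np.exp(-d2 / (2.0 * bandwidth ** 2))
    return w @ deltas / np.sum(w)

def choose_action(state, goal, actions, deltas, candidates):
    """One-step MPC: return the candidate whose predicted next state is nearest the goal."""
    costs = [np.linalg.norm(state + predict(a, actions, deltas) - goal)
             for a in candidates]
    return candidates[int(np.argmin(costs))]

rng = np.random.default_rng(0)
# Ten exploratory pushes on a toy system where the object moves by 0.8 * action.
actions = rng.uniform(-1.0, 1.0, size=(10, 2))
deltas = 0.8 * actions
# Candidate pushes sampled at random for the planner to score.
candidates = rng.uniform(-1.0, 1.0, size=(200, 2))
state, goal = np.zeros(2), np.array([0.4, -0.2])
best = choose_action(state, goal, actions, deltas, candidates)
print(best, predict(best, actions, deltas))
```

Appending each newly observed (action, displacement) pair to `actions` and `deltas` would be one simple way to update the estimate online, in the spirit of the online updating the abstract describes.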

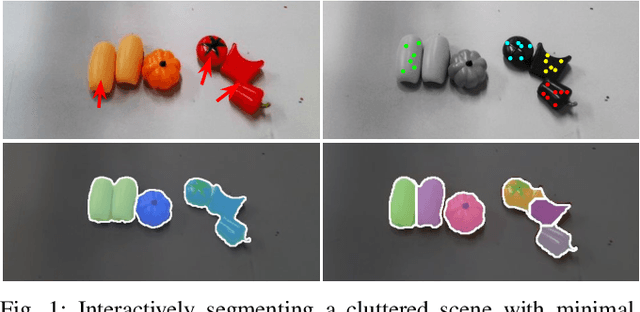

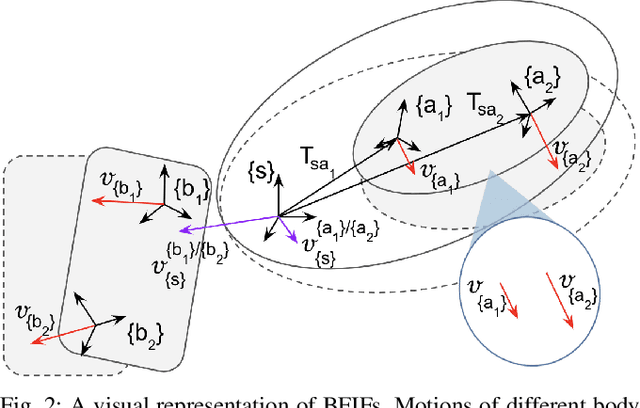

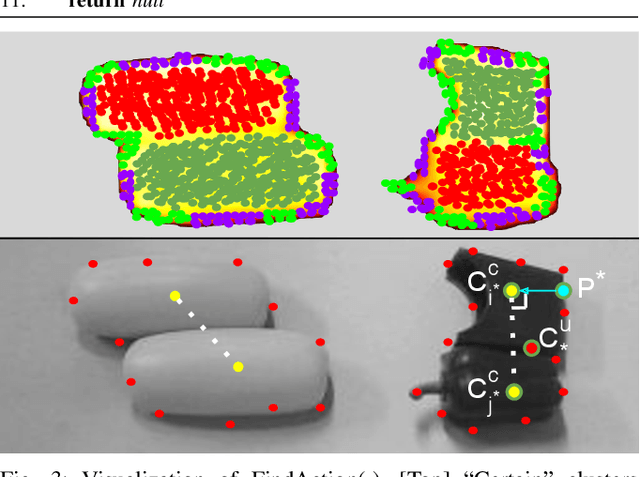

RISeg: Robot Interactive Object Segmentation via Body Frame-Invariant Features

Mar 04, 2024

Abstract: In order to successfully perform manipulation tasks in new environments, such as grasping, robots must be proficient in segmenting unseen objects from the background and/or other objects. Previous works perform unseen object instance segmentation (UOIS) by training deep neural networks on large-scale data to learn RGB/RGB-D feature embeddings, where cluttered environments often result in inaccurate segmentations. We build upon these methods and introduce a novel approach to correct inaccurate segmentation, such as under-segmentation, of static image-based UOIS masks by using robot interaction and a designed body frame-invariant feature. We demonstrate that the relative linear and rotational velocities of frames randomly attached to rigid bodies due to robot interactions can be used to identify objects and accumulate corrected object-level segmentation masks. By introducing motion to regions of segmentation uncertainty, we are able to drastically improve segmentation accuracy in an uncertainty-driven manner with minimal, non-disruptive interactions (ca. 2-3 per scene). We demonstrate the effectiveness of our proposed interactive perception pipeline in accurately segmenting cluttered scenes by achieving an average object segmentation accuracy rate of 80.7%, an increase of 28.2% when compared with other state-of-the-art UOIS methods.

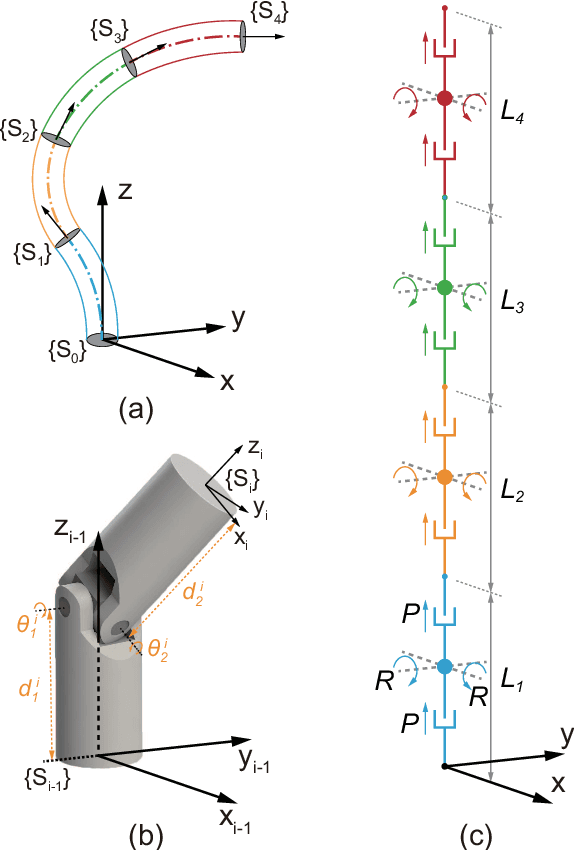

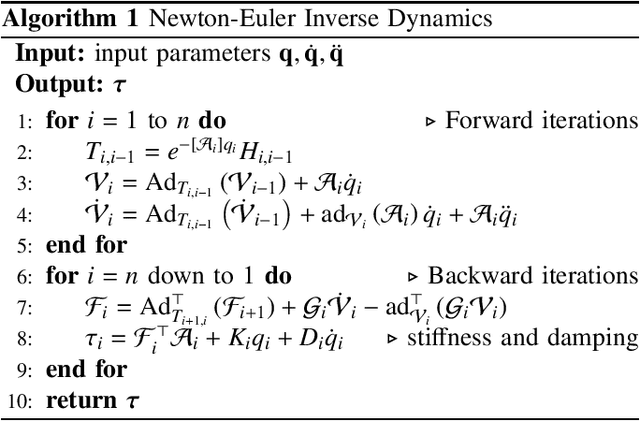

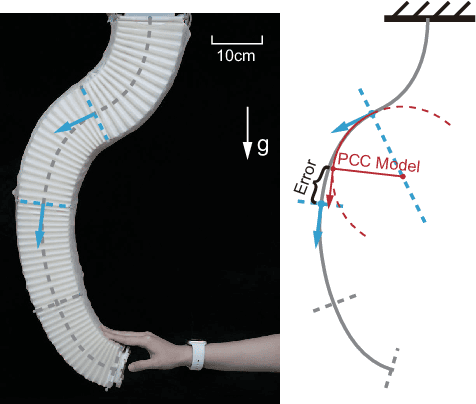

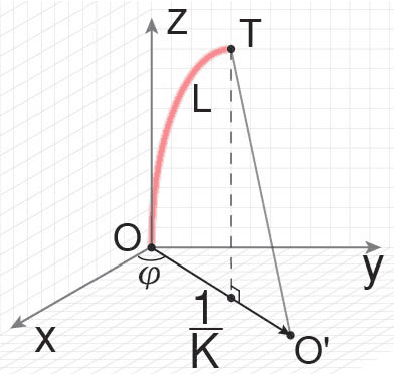

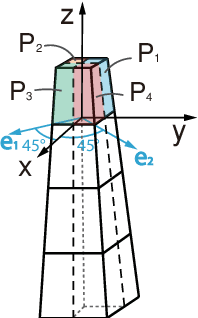

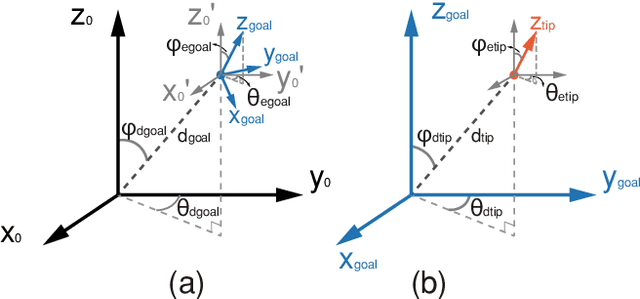

Dynamical Modeling and Control of Soft Robots with Non-constant Curvature Deformation

Mar 19, 2022

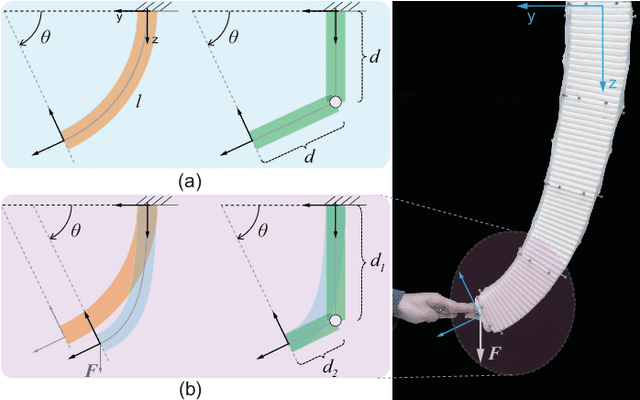

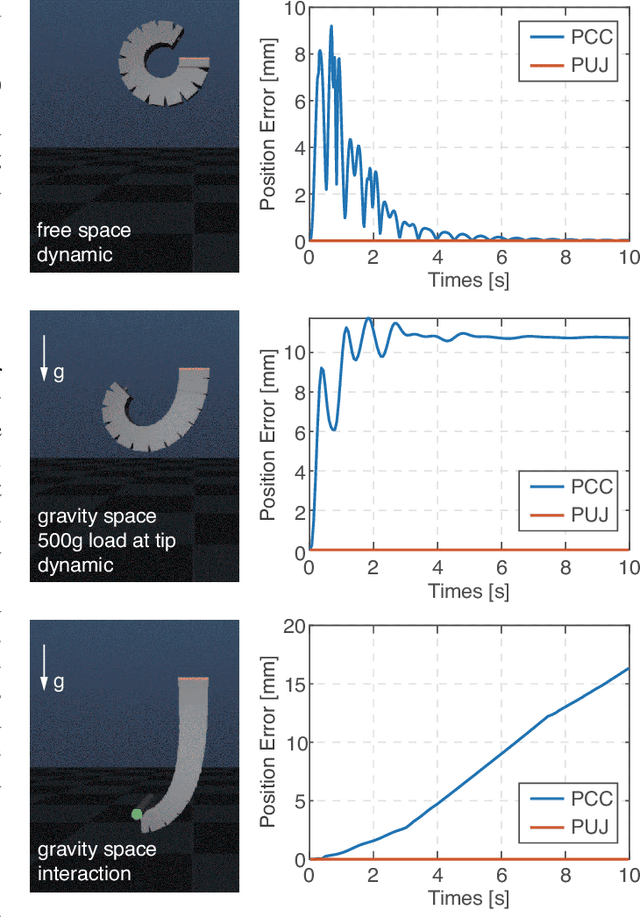

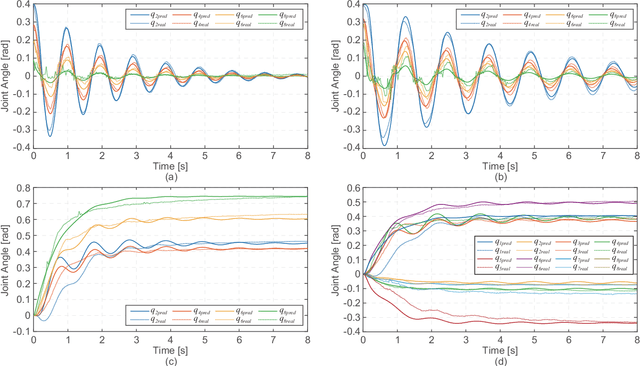

Abstract: The Piecewise Constant Curvature (PCC) model is the most widely used model in soft robotic modeling and control. However, the PCC model fails to accurately describe the deformation of soft robots when executing dynamic tasks or interacting with the environment. This paper presents a simple three-dimensional (3D) modeling method for a multi-segment soft robotic manipulator with non-constant curvature deformation. We devise kinematic and dynamical models for soft manipulators by modeling each segment of the manipulator as two stretchable links connected by a universal joint. Based on that, we present two controllers for dynamic trajectory tracking in configuration space and pose control in task space, respectively. Model accuracy is demonstrated with simulations and experimental data. The controllers are implemented on a four-segment soft robotic manipulator and validated in dynamic motions and pose control with unknown loads. The experimental results show that the dynamic controller enables stable reference trajectory tracking at speeds up to 7 m/s.

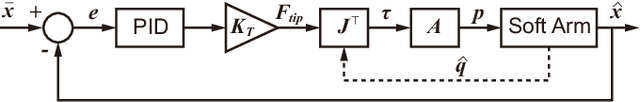

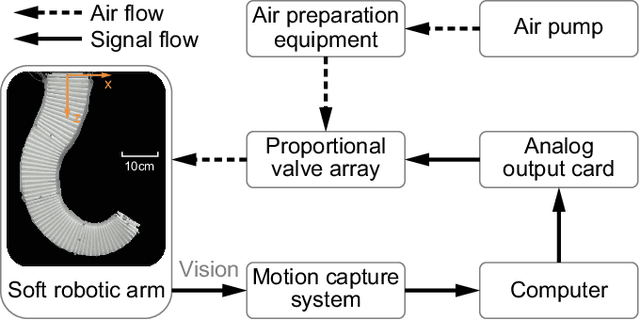

Control of a Soft Robotic Arm Using a Piecewise Universal Joint Model

Jan 05, 2022

Abstract: The 'infinite' passive degrees of freedom of soft robotic arms render their control especially challenging. In this paper, we leverage a previously developed model, which draws an equivalence between the soft arm and a series of universal joints, to design two closed-loop controllers: a configuration space controller for trajectory tracking and a task space controller for position control of the end effector. Extensive experiments and simulations on a four-segment soft arm attest to substantial improvement in terms of: a) superior tracking accuracy of the configuration space controller and b) reduced settling time and steady-state error of the task space controller. The task space controller is also verified to be effective in the presence of interactions between the soft arm and the environment.

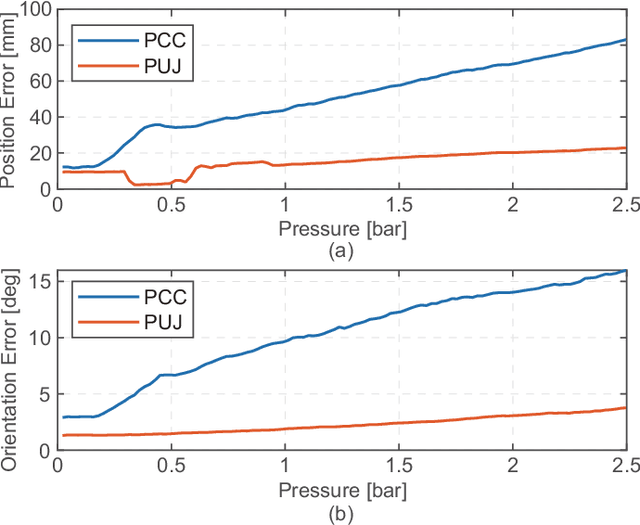

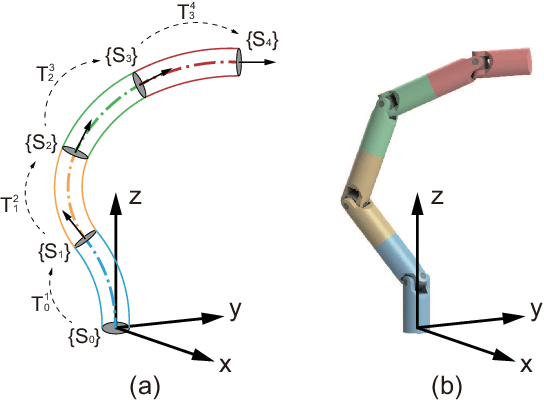

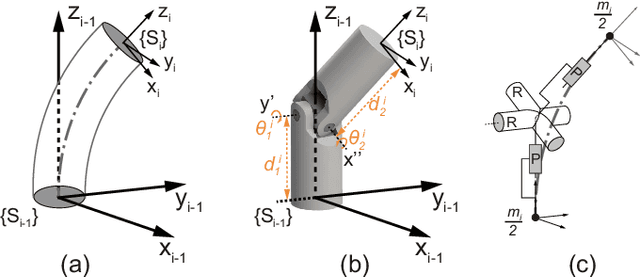

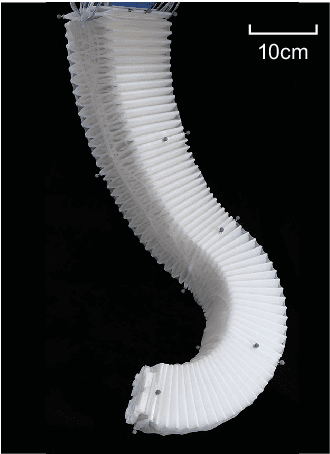

Unified Kinematic and Dynamical Modeling of a Soft Robotic Arm by a Piecewise Universal Joint Model

Sep 14, 2021

Abstract: The compliance of soft robotic arms renders the development of accurate kinematic and dynamical models especially challenging. The most widely used model in soft robotic kinematics assumes Piecewise Constant Curvature (PCC). However, PCC fails to effectively handle external forces, or even the influence of gravity, since the robot does not deform with a constant curvature under these conditions. In this paper, we establish three-dimensional (3D) modeling of a multi-segment soft robotic arm under the less restrictive assumption that each segment of the arm is deformed on a plane without twisting. We devise a kinematic and dynamical model for the soft arm by deriving equivalence to a serial universal joint robot. Numerous experiments on the real robot platform along with simulations attest to the modeling accuracy of our approach in 3D motion with load. The maximum position/rotation error of the proposed model is verified to be 6.7x/4.6x lower than that of the PCC model when gravity and external forces are considered.

A Q-learning Control Method for a Soft Robotic Arm Utilizing Training Data from a Rough Simulator

Sep 13, 2021

Abstract: Controlling a soft robot is challenging, and reinforcement learning methods have been applied to this problem with promising results. However, due to their poor sample efficiency, reinforcement learning methods require a large collection of training data, which limits their applications. In this paper, we propose a Q-learning controller for a physical soft robot, in which pre-trained models using data from a rough simulator are applied to improve the performance of the controller. We implement the method on our soft robot, the Honeycomb Pneumatic Network (HPN) arm. The experiments show that using pre-trained models not only reduces the amount of real-world training data required, but also greatly improves the controller's accuracy and convergence rate.
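
The idea of seeding a Q-learning controller with simulator data can be sketched with a tabular update. The abstract does not state the paper's state and action encoding or its function approximator, so the table sizes, the learning rate, the discount factor, and the name `q_update` are assumptions chosen only to keep the example small.

```python
import numpy as np

def q_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """Standard Q-learning step: move Q[s, a] toward r + gamma * max_a' Q[s_next, a']."""
    target = r + gamma * np.max(Q[s_next])
    Q[s, a] += alpha * (target - Q[s, a])
    return Q

# Stage 1: pre-train on transitions from a rough simulator (toy data).
Q_sim = np.zeros((3, 2))  # 3 states x 2 actions, assumed sizes
q_update(Q_sim, s=0, a=1, r=1.0, s_next=1)

# Stage 2: start the real-robot table from the pre-trained values
# instead of zeros, then keep learning from real transitions.
Q_real = Q_sim.copy()
q_update(Q_real, s=0, a=1, r=1.0, s_next=1)
print(Q_sim[0, 1], Q_real[0, 1])
```

Starting `Q_real` from `Q_sim` means the first real-world updates refine an existing estimate rather than learning from scratch, which is the mechanism by which pre-training could reduce the amount of real-world data needed.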
