Quentin Bateux

Improving the accuracy of automated labeling of specimen images datasets via a confidence-based process

Nov 15, 2024

Abstract: The digitization of natural history collections over the past three decades has unlocked a treasure trove of specimen imagery and metadata. There is great interest in making this data more useful by labeling it with additional trait data. Modern deep learning techniques built on convolutional neural networks (CNNs) and similar architectures show particular promise for reducing the amount of manual labeling required from human experts, making the process much faster and less expensive. However, in most cases the accuracy of these approaches, typically in the range of 80-85%, is too low for the automatic labels to be used reliably. In this paper, we present and validate an approach that can greatly improve this accuracy by examining the confidence the network has in each generated label and rejecting labels whose confidence falls below a user-defined threshold. We demonstrate that a naive model with 86% initial accuracy can reach over 95% accuracy (rejecting about 40% of the labels) or over 99% accuracy (rejecting about 65%) as higher confidence thresholds are selected. This gives the flexibility to adapt existing models to the statistical requirements of various types of research and has the potential to move these automatic labeling approaches from being unusably inaccurate to being an invaluable new tool. After validating the approach in several ways, we annotate the reproductive state of a large dataset of over 600,000 herbarium specimens. The analysis of the results points to under-investigated correlations as well as general alignment with known trends. By sharing this new dataset alongside this work, we want to allow ecologists to gather insights for their own research questions at their chosen point on the accuracy/coverage trade-off.
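The confidence-based rejection step can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes the network's confidence is the top softmax probability, and the helper names (`softmax`, `filter_by_confidence`, `accuracy_coverage`) and the toy scores are hypothetical. Raising the threshold trades coverage (the fraction of labels kept) for accuracy on the labels that remain.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one vector of class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def filter_by_confidence(
    logits_batch: Sequence[Sequence[float]], threshold: float
) -> List[Tuple[int, int, float]]:
    """Keep (sample index, predicted label, confidence) where confidence >= threshold.

    Confidence is assumed (hypothetically) to be the top softmax probability.
    """
    kept = []
    for i, logits in enumerate(logits_batch):
        probs = softmax(logits)
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            kept.append((i, label, probs[label]))
    return kept


def accuracy_coverage(
    logits_batch: Sequence[Sequence[float]],
    true_labels: Sequence[int],
    threshold: float,
) -> Tuple[float, float]:
    """Return accuracy on the accepted labels and the fraction of labels accepted."""
    kept = filter_by_confidence(logits_batch, threshold)
    coverage = len(kept) / len(logits_batch)
    if not kept:
        return float("nan"), coverage  # nothing accepted: accuracy is undefined
    correct = sum(1 for i, label, _ in kept if label == true_labels[i])
    return correct / len(kept), coverage


if __name__ == "__main__":
    # Hypothetical two-class scores (e.g., 0 = not reproductive, 1 = reproductive).
    logits = [[4.0, 0.0], [0.2, 0.0], [0.0, 3.0], [1.0, 0.9]]
    labels = [0, 1, 1, 1]
    for t in (0.0, 0.9, 0.99):
        acc, cov = accuracy_coverage(logits, labels, t)
        print(f"threshold={t:.2f} accuracy={acc:.2f} coverage={cov:.2f}")
```

On this toy batch, accepting everything gives 50% accuracy at full coverage, while a 0.9 threshold keeps half of the predictions and both kept predictions are correct: the same accuracy/coverage trade-off in miniature.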

Towards Generalized Robot Assembly through Compliance-Enabled Contact Formations

Mar 09, 2023

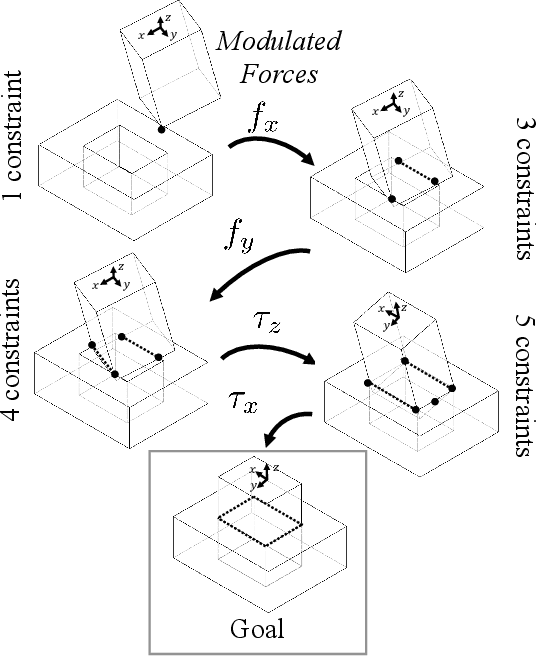

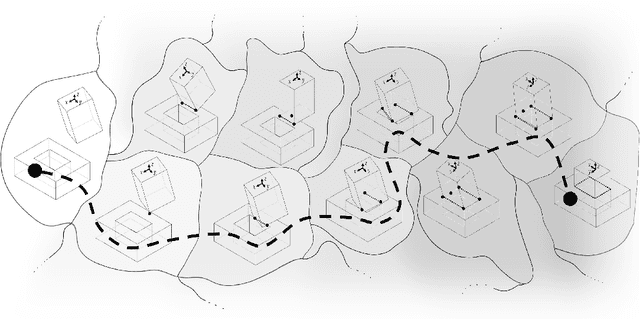

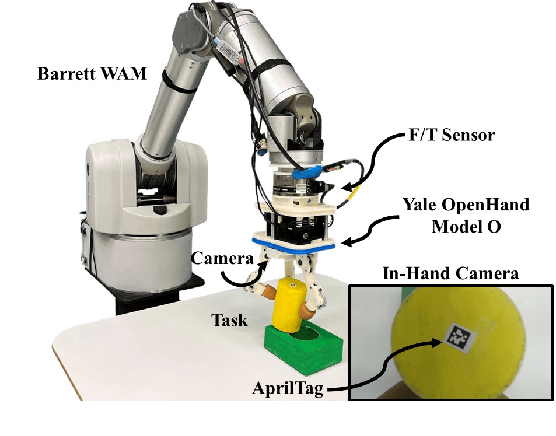

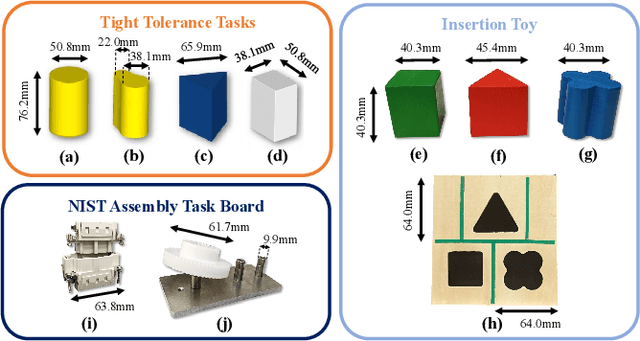

Abstract: Contact can be conceptualized as a set of constraints imposed on two bodies that are interacting with one another. The nature of a contact, whether a point, line, or surface, dictates how these bodies can move with respect to one another under an applied force, and a set of contacts can partially or fully constrain a body's motion. Decades of work have explored how to explicitly estimate the location of a contact and its dynamics (e.g., frictional properties), but the methods investigated have been computationally expensive, and significant uncertainty often remains in the final estimate. This has hindered progress on contact-rich tasks that seem simple to humans, such as generalized peg-in-hole insertion. In this work, instead of explicitly estimating the individual contact dynamics between an object and its hole, we approach the problem by investigating compliance-enabled contact formations. More formally, contact formations are defined by the constraints imposed on an object's available degrees of freedom. Rather than estimating individual contact positions, we abstract this calculation into an implicit representation, allowing the robot to acquire, maintain, or release constraints on the object during the insertion process by monitoring the forces acting on the end effector over time. Using a compliant robot, our method completes industry-relevant insertion tasks with tolerances below 0.25 mm without prior knowledge of the exact hole location or orientation. We also showcase our method on more generalized insertion tasks, such as commercially available non-cylindrical objects and open-world plug tasks.
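To make the acquire/maintain/release vocabulary concrete, here is a deliberately crude, hypothetical heuristic. It is not the method described in the abstract: it simply treats a "formation" as the set of wrench axes carrying a sustained reaction load over a short time window, then compares consecutive windows. The wrench layout, thresholds, and function names (`constrained_axes`, `formation_change`) are all assumptions for illustration.

```python
from statistics import mean
from typing import Dict, FrozenSet, Sequence

# Hypothetical wrench layout: forces (N) then torques (N*m) in the end-effector frame.
AXES = ("fx", "fy", "fz", "tx", "ty", "tz")


def constrained_axes(
    window: Sequence[Sequence[float]],
    force_thresh: float = 5.0,
    torque_thresh: float = 0.5,
) -> FrozenSet[str]:
    """Label an axis as constrained if its mean absolute reaction over the
    window exceeds a (hypothetical) threshold."""
    constrained = set()
    for i, axis in enumerate(AXES):
        thresh = force_thresh if i < 3 else torque_thresh
        if mean(abs(sample[i]) for sample in window) > thresh:
            constrained.add(axis)
    return frozenset(constrained)


def formation_change(
    prev: FrozenSet[str], curr: FrozenSet[str]
) -> Dict[str, FrozenSet[str]]:
    """Report which constraints were acquired, released, or maintained
    between two consecutive windows."""
    return {
        "acquired": curr - prev,
        "released": prev - curr,
        "maintained": prev & curr,
    }


if __name__ == "__main__":
    # Hypothetical readings: free motion, then pressing the object onto a surface.
    free_space = [(0.1, -0.2, 0.3, 0.01, 0.0, 0.02)] * 5
    on_surface = [(0.2, 0.1, -12.0, 0.05, 0.6, 0.0)] * 5
    before = constrained_axes(free_space)
    after = constrained_axes(on_surface)
    print(formation_change(before, after))
```

A real system would have to account for sensor noise, friction, and the commanded motion; the sketch only shows constraints being tracked as sets that change over time.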

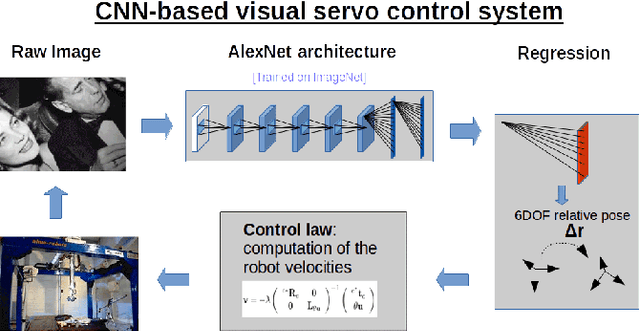

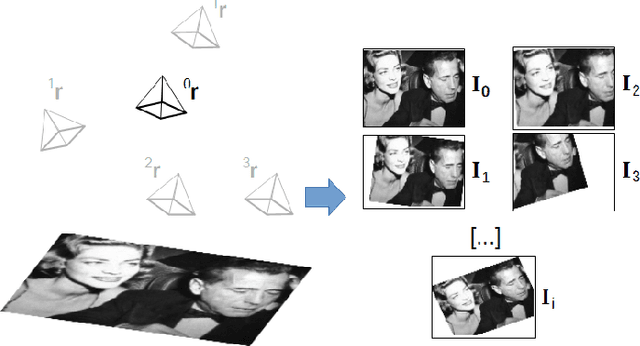

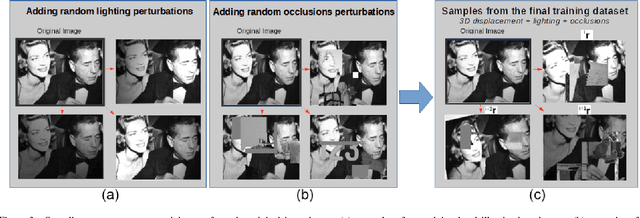

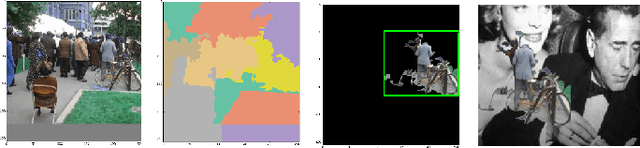

Visual Servoing from Deep Neural Networks

Jun 07, 2017

Abstract: We present a deep neural network-based method to perform high-precision, robust, and real-time 6-DOF visual servoing. The paper describes how to create a dataset simulating various perturbations (occlusions and lighting conditions) from a single real-world image of the scene. A convolutional neural network is fine-tuned on this dataset to estimate the relative pose between two images of the same scene. The output of the network is then employed in a visual servoing control scheme. The method converges robustly even in difficult real-world settings with strong lighting variations and occlusions. A positioning error of less than one millimeter is obtained in experiments with a 6-DOF robot.
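One common way to use a relative-pose estimate in a visual servoing loop is a proportional position-based visual servoing (PBVS) law, sketched below under stated assumptions: the network output is taken to be the desired camera pose expressed in the current camera frame (translation `t` and axis-angle rotation `theta_u`), and the function names, gain, and time step are hypothetical rather than taken from the paper.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pbvs_command(
    t: Sequence[float], theta_u: Sequence[float], gain: float = 0.5
) -> Tuple[Vec3, Vec3]:
    """Proportional pose-based visual servoing law.

    t       -- translation (m) of the desired camera frame, in the current camera frame
    theta_u -- axis-angle vector (rad) of the rotation from the current to the desired frame
    Returns the camera linear velocity (m/s) and angular velocity (rad/s),
    both expressed in the current camera frame.
    """
    v = (gain * t[0], gain * t[1], gain * t[2])
    omega = (gain * theta_u[0], gain * theta_u[1], gain * theta_u[2])
    return v, omega


def simulate_translation(
    t0: Sequence[float], gain: float = 0.5, dt: float = 0.1, steps: int = 100
) -> Tuple[float, ...]:
    """Euler-integrate a pure-translation case: moving the camera by v * dt
    shrinks the remaining offset by a factor (1 - gain * dt) per step."""
    t = tuple(t0)
    for _ in range(steps):
        v = pbvs_command(t, (0.0, 0.0, 0.0), gain)[0]
        t = tuple(ti - vi * dt for ti, vi in zip(t, v))
    return t


if __name__ == "__main__":
    # Hypothetical network output: desired pose 10 cm ahead along the optical axis.
    final = simulate_translation((0.0, 0.0, 0.1))
    print(f"remaining offset: {math.hypot(*final) * 1000:.3f} mm")
```

With the default gain and time step, the offset shrinks by 5% per iteration, so a 10 cm initial error falls to roughly 0.6 mm after 100 steps. In a real loop, `t` and `theta_u` would be re-estimated from each new image rather than integrated.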
