Zhiqiang Zheng

Leveraging Semantic Graphs for Efficient and Robust LiDAR SLAM

Mar 14, 2025

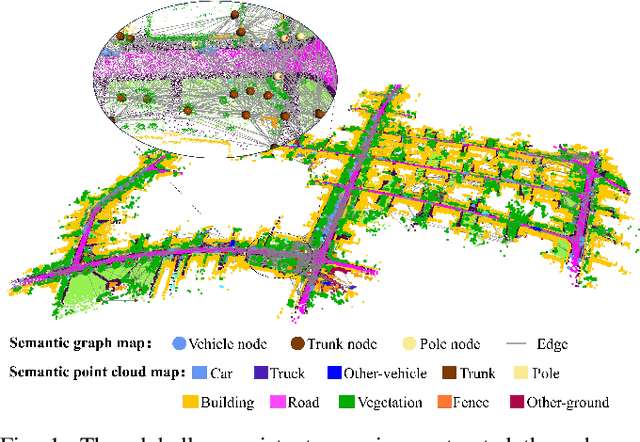

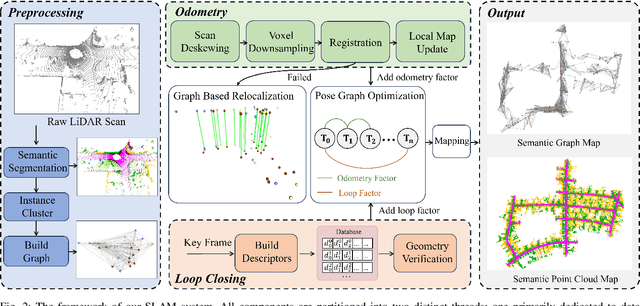

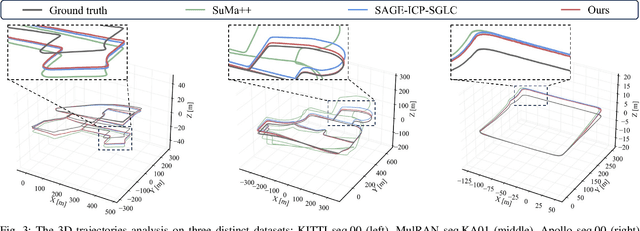

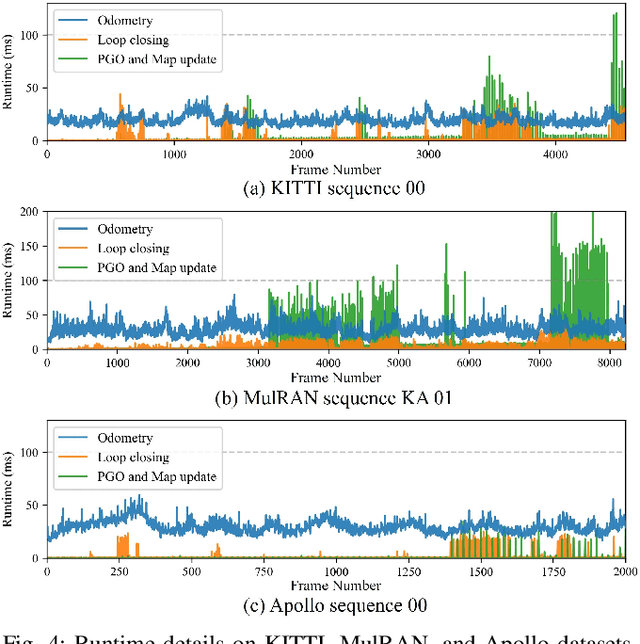

Abstract: Accurate and robust simultaneous localization and mapping (SLAM) is crucial for autonomous mobile systems, typically achieved by leveraging the geometric features of the environment. Incorporating semantics provides a richer scene representation that not only enhances localization accuracy in SLAM but also enables advanced cognitive functionalities for downstream navigation and planning tasks. Existing point-wise semantic LiDAR SLAM methods often suffer from poor efficiency and generalization, making them less robust in diverse real-world scenarios. In this paper, we propose a semantic graph-enhanced SLAM framework, named SG-SLAM, which effectively leverages the geometric, semantic, and topological characteristics inherent in environmental structures. The semantic graph serves as a fundamental component that facilitates critical functionalities of SLAM, including robust relocalization during odometry failures, accurate loop closing, and semantic graph map construction. Our method employs a dual-threaded architecture, with one thread dedicated to online odometry and relocalization, while the other handles loop closure, pose graph optimization, and map update. This design enables our method to operate in real time and generate globally consistent semantic graph maps and point cloud maps. We extensively evaluate our method across the KITTI, MulRAN, and Apollo datasets, and the results demonstrate its superiority compared to state-of-the-art methods. Our method has been released at https://github.com/nubot-nudt/SG-SLAM.
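The dual-threaded split described in this abstract can be illustrated with a minimal sketch. It is not the SG-SLAM implementation: the function name `run_pipeline`, the `keyframe_every` parameter, and the placeholder "pose" and "optimization" steps are all assumptions made for illustration. It only shows one thread producing poses and keyframes while a second thread consumes keyframes for back-end work.

```python
import queue
import threading


def run_pipeline(scans, keyframe_every=2):
    """Run a toy front end and back end in two threads (illustrative only)."""
    keyframes = queue.Queue()  # hand-off channel between the two threads
    poses = []                 # written by the front-end thread only
    optimized = []             # written by the back-end thread only

    def front_end():
        # Stand-in for online odometry and relocalization.
        for i, _scan in enumerate(scans):
            poses.append(("pose", i))
            if i % keyframe_every == 0:
                keyframes.put(i)
        keyframes.put(None)  # sentinel: no more keyframes

    def back_end():
        # Stand-in for loop closure, pose graph optimization, and map update.
        while True:
            keyframe = keyframes.get()
            if keyframe is None:
                break
            optimized.append(keyframe)

    threads = [threading.Thread(target=front_end),
               threading.Thread(target=back_end)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return poses, optimized
```

The queue decouples the two rates: the front end never waits on the slower back-end work, which is one common way to keep odometry running in real time.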

A Novel Multi-Gait Strategy for Stable and Efficient Quadruped Robot Locomotion

Oct 12, 2024

Abstract: Taking inspiration from the natural gait transition mechanism of quadrupeds, devising a good gait transition strategy is important for quadruped robots to achieve energy-efficient locomotion on various terrains and at various velocities. While previous studies have recognized that gait patterns linked to velocities impact two key factors, the Cost of Transport (CoT) and the stability of robot locomotion, only a limited number of studies have effectively combined these factors to design a mechanism that ensures both efficiency and stability in quadruped robot locomotion. In this paper, we propose a multi-gait selection and transition strategy to achieve stable and efficient locomotion across different terrains. Our strategy starts by establishing a gait mapping that considers both CoT and locomotion stability to guide the gait selection process during locomotion. Then, we achieve timely gait switching by introducing affine transformations for gait parameters and a finite state machine designed to define the switching order. We conducted comprehensive experiments with our strategy under changing terrains and velocities, and the results indicate that it outperforms baseline methods in achieving efficient and stable locomotion simultaneously, as measured by CoT and stability.
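To make the selection criterion concrete, the sketch below uses the standard dimensionless definition of Cost of Transport, CoT = P / (m g v), and picks the lowest-CoT gait among those that pass a stability threshold. The gait names, the stability score, and the `min_stability` threshold are hypothetical and are not taken from the paper; the affine parameter transformations and the finite state machine are not modeled here.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2


def cost_of_transport(power_w, mass_kg, speed_mps):
    """Dimensionless Cost of Transport: P / (m * g * v)."""
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    return power_w / (mass_kg * G * speed_mps)


@dataclass
class GaitSample:
    gait: str         # hypothetical gait name
    power_w: float    # average power measured at the target speed
    stability: float  # hypothetical score in [0, 1], higher is more stable


def select_gait(samples, mass_kg, speed_mps, min_stability=0.6):
    """Return the lowest-CoT gait among those meeting the stability threshold."""
    stable = [s for s in samples if s.stability >= min_stability]
    if not stable:
        # No gait is stable enough: fall back to the most stable one.
        return max(samples, key=lambda s: s.stability).gait
    best = min(stable,
               key=lambda s: cost_of_transport(s.power_w, mass_kg, speed_mps))
    return best.gait
```

Treating stability as a hard constraint and CoT as the objective is only one way to combine the two factors; a weighted score would be an equally plausible reading of the abstract.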

SGLC: Semantic Graph-Guided Coarse-Fine-Refine Full Loop Closing for LiDAR SLAM

Jul 11, 2024

Abstract: Loop closing is a crucial component in SLAM that helps eliminate accumulated errors through two main steps: loop detection and loop pose correction. The first step determines whether loop closing should be performed, while the second estimates the 6-DoF pose to correct odometry drift. Current methods mostly focus on developing robust descriptors for loop closure detection, often neglecting loop pose estimation. A few methods that do include pose estimation either suffer from low accuracy or incur high computational costs. To tackle this problem, we introduce SGLC, a real-time semantic graph-guided full loop closing method, with robust loop closure detection and 6-DoF pose estimation capabilities. SGLC takes into account the distinct characteristics of foreground and background points. For foreground instances, it builds a semantic graph that not only abstracts point cloud representation for fast descriptor generation and matching but also guides the subsequent loop verification and initial pose estimation. Background points, meanwhile, are exploited to provide more geometric features for scan-wise descriptor construction and stable planar information for further pose refinement. Loop pose estimation employs a coarse-fine-refine registration scheme that considers the alignment of both instance points and background points, offering high efficiency and accuracy. We evaluate the loop closing performance of SGLC through extensive experiments on the KITTI and KITTI-360 datasets, demonstrating its superiority over existing state-of-the-art methods. Additionally, we integrate SGLC into a SLAM system, eliminating accumulated errors and improving overall SLAM performance. The implementation of SGLC will be released at https://github.com/nubot-nudt/SGLC.
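As a rough illustration of what abstracting foreground instances into a graph can look like, the sketch below connects instance centroids that lie within a distance threshold. The input layout, the function name `build_semantic_graph`, and the `max_edge_len` value are assumptions for this example; the abstract does not specify how SGLC defines its nodes, edges, or descriptors.

```python
import math
from itertools import combinations


def build_semantic_graph(instances, max_edge_len=10.0):
    """Build an undirected graph over instance centroids.

    instances: list of (label, (x, y, z)) tuples, one per foreground instance.
    Returns a dict mapping node index -> list of (neighbor index, distance).
    """
    graph = {i: [] for i in range(len(instances))}
    for i, j in combinations(range(len(instances)), 2):
        distance = math.dist(instances[i][1], instances[j][1])
        if distance <= max_edge_len:
            # Edges are stored in both directions because the graph is undirected.
            graph[i].append((j, distance))
            graph[j].append((i, distance))
    return graph
```

A graph like this has far fewer elements than the raw point cloud, which is why descriptors computed over it can be generated and matched quickly.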

SegNet4D: Effective and Efficient 4D LiDAR Semantic Segmentation in Autonomous Driving Environments

Jun 24, 2024

Abstract: 4D LiDAR semantic segmentation, also referred to as multi-scan semantic segmentation, plays a crucial role in enhancing the environmental understanding capabilities of autonomous vehicles. It entails identifying the semantic category of each point in the LiDAR scan and distinguishing whether it is dynamic, a critical aspect in downstream tasks such as path planning and autonomous navigation. Existing methods for 4D semantic segmentation often rely on computationally intensive 4D convolutions for multi-scan input, resulting in poor real-time performance. In this article, we introduce SegNet4D, a novel real-time multi-scan semantic segmentation method leveraging a projection-based approach for fast motion feature encoding, showcasing outstanding performance. SegNet4D treats 4D semantic segmentation as two distinct tasks: single-scan semantic segmentation and moving object segmentation, each addressed by a dedicated head. These results are then fused in the proposed motion-semantic fusion module to achieve comprehensive multi-scan semantic segmentation. In addition, we propose extracting instance information from the current scan and incorporating it into the network for instance-aware segmentation. Our approach exhibits state-of-the-art performance across multiple datasets and stands out as a real-time multi-scan semantic segmentation method. The implementation of SegNet4D will be made available at https://github.com/nubot-nudt/SegNet4D.
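The decomposition into a semantic label plus a moving flag per point can be shown with a deliberately simple rule-based merge. This is a stand-in for the learned motion-semantic fusion module described in the abstract, not a reproduction of it. The label IDs, `MOVING_OFFSET`, and `MOVABLE_CLASSES` are hypothetical values chosen for the example.

```python
# Hypothetical label scheme, chosen only for this example.
MOVING_OFFSET = 100       # moving variant of class c is encoded as c + 100
MOVABLE_CLASSES = {1, 2}  # e.g. 1 = car, 2 = person (assumed IDs)


def fuse_labels(semantic, moving):
    """Merge per-point semantic classes with per-point moving flags.

    semantic: list of int class IDs from a single-scan segmentation head.
    moving:   list of bool flags from a moving object segmentation head.
    """
    if len(semantic) != len(moving):
        raise ValueError("both inputs must have one entry per point")
    fused = []
    for cls, is_moving in zip(semantic, moving):
        if is_moving and cls in MOVABLE_CLASSES:
            fused.append(cls + MOVING_OFFSET)
        else:
            # Static points, and "moving" predictions on classes that
            # cannot move (e.g. road), keep their semantic label.
            fused.append(cls)
    return fused
```

A fixed rule like this cannot resolve disagreements between the two heads, which is the kind of case a learned fusion module is meant to handle.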

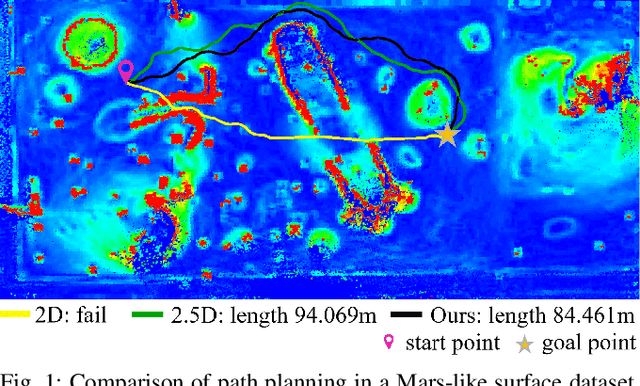

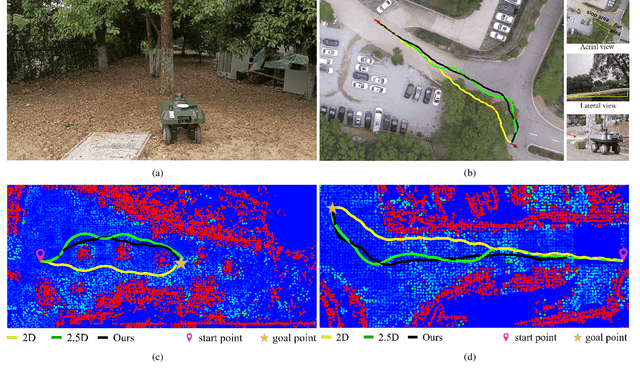

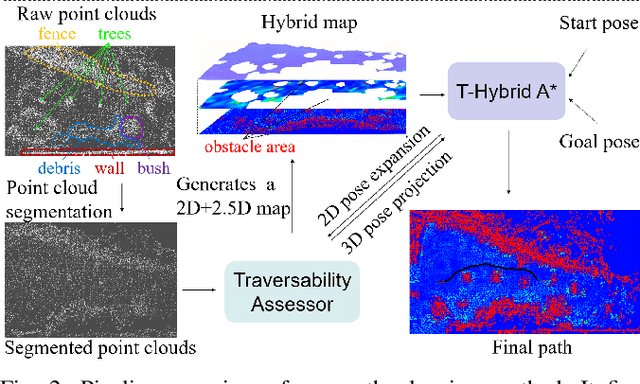

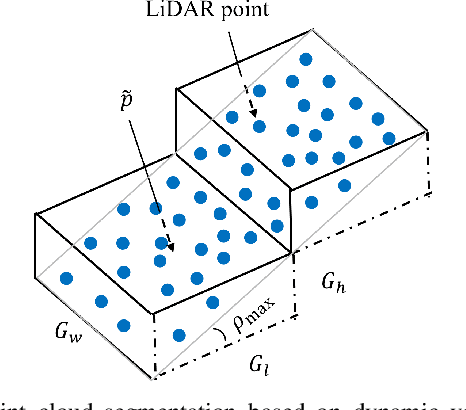

Hybrid Map-Based Path Planning for Robot Navigation in Unstructured Environments

Mar 09, 2023

Abstract: Fast and accurate path planning is important for ground robots to achieve safe and efficient autonomous navigation in unstructured outdoor environments. However, most existing methods exploiting either 2D or 2.5D maps struggle to balance efficiency and safety for ground robots navigating in such challenging scenarios. In this paper, we propose a novel hybrid map representation by fusing a 2D grid and a 2.5D digital elevation map. Based on it, a novel path planning method is proposed, which considers the robot poses during traversability estimation. By doing so, our method explicitly takes safety as a planning constraint, enabling robots to navigate unstructured environments smoothly. The proposed approach has been evaluated on both simulated datasets and a real robot platform. The experimental results demonstrate the efficiency and effectiveness of the proposed method. Compared to state-of-the-art baseline methods, the proposed approach consistently generates safer and easier paths for the robot in different unstructured outdoor environments. The implementation of our method is publicly available at https://github.com/nubot-nudt/T-Hybrid-planner.

ElC-OIS: Ellipsoidal Clustering for Open-World Instance Segmentation on LiDAR Data

Mar 08, 2023

Abstract: Open-world Instance Segmentation (OIS) is a challenging task that aims to accurately segment every object instance appearing in the current observation, regardless of whether these instances have been labeled in the training set. This is important for safety-critical applications such as robust autonomous navigation. In this paper, we present a flexible and effective OIS framework for LiDAR point clouds that can accurately segment both known and unknown instances (i.e., seen and unseen instance categories during training). It first identifies points belonging to known classes and removes the background by leveraging closed-set panoptic segmentation networks. Then, we propose a novel ellipsoidal clustering method that is better suited to the characteristics of LiDAR scans and allows precise segmentation of unknown instances. Furthermore, a diffuse searching method is proposed to handle the common over-segmentation problem present in the known instances. With the combination of these techniques, we are able to achieve accurate segmentation for both known and unknown instances. We evaluated our method on the SemanticKITTI open-world LiDAR instance segmentation dataset. The experimental results suggest that it outperforms current state-of-the-art methods, especially with a 10.0% improvement in association quality. The source code of our method will be publicly available at https://github.com/nubot-nudt/ElC-OIS.
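One way to picture clustering with an ellipsoidal neighborhood is the brute-force region-growing sketch below, where two points are neighbors when one lies inside an axis-aligned ellipsoid centered on the other. The fixed `axes` values and the function name are assumptions; the abstract does not state how ElC-OIS sizes or orients its ellipsoids, so this should not be read as the paper's algorithm.

```python
from collections import deque


def ellipsoidal_clusters(points, axes=(1.0, 1.0, 0.5)):
    """Label points by region growing with an ellipsoidal neighborhood.

    points: list of (x, y, z) tuples.
    axes:   hypothetical semi-axis lengths (a, b, c) in metres.
    Returns one integer cluster label per point.
    """
    a, b, c = axes

    def near(p, q):
        # True when q lies inside the ellipsoid centered on p.
        return (((p[0] - q[0]) / a) ** 2
                + ((p[1] - q[1]) / b) ** 2
                + ((p[2] - q[2]) / c) ** 2) <= 1.0

    labels = [-1] * len(points)
    next_label = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = next_label
        frontier = deque([seed])
        while frontier:
            i = frontier.popleft()
            for j in range(len(points)):
                if labels[j] == -1 and near(points[i], points[j]):
                    labels[j] = next_label
                    frontier.append(j)
        next_label += 1
    return labels
```

With a shorter vertical axis, points separated mainly in height are split while points spread horizontally are merged. The pairwise search is O(n²), so a spatial index would be needed for real scans.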

InsMOS: Instance-Aware Moving Object Segmentation in LiDAR Data

Mar 07, 2023

Abstract: Identifying moving objects is a crucial capability for autonomous navigation, consistent map generation, and future trajectory prediction of objects. In this paper, we propose a novel network that addresses the challenge of segmenting moving objects in 3D LiDAR scans. Our approach not only predicts point-wise moving labels but also detects instance information of main traffic participants. Such a design helps determine which instances are actually moving and which ones are temporarily static in the current scene. Our method exploits a sequence of point clouds as input and quantizes them into 4D voxels. We use 4D sparse convolutions to extract motion features from the 4D voxels and inject them into the current scan. Then, we extract spatio-temporal features from the current scan for instance detection and feature fusion. Finally, we design an upsample fusion module to output point-wise labels by fusing the spatio-temporal features and predicted instance information. We evaluated our approach on the LiDAR-MOS benchmark based on SemanticKITTI and achieved better moving object segmentation performance compared to state-of-the-art methods, demonstrating the effectiveness of our approach in integrating instance information for moving object segmentation. Furthermore, our method shows superior performance on the Apollo dataset with a pre-trained model on SemanticKITTI, indicating that our method generalizes well in different scenes. The code and pre-trained models of our method will be released at https://github.com/nubot-nudt/InsMOS.
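The quantization of a scan sequence into 4D voxels can be sketched as follows. The example assumes the scans are already aligned to a common coordinate frame, uses the scan index as the time coordinate, and picks an arbitrary `voxel_size`; none of these details come from the abstract, and the sparse convolution stage is not shown.

```python
import math


def voxelize_4d(scans, voxel_size=0.5):
    """Quantize a sequence of scans into a set of occupied 4D voxels.

    scans: list of scans, each a list of (x, y, z) points in a shared frame.
    Returns a set of (t, ix, iy, iz) integer indices, with t the scan index.
    """
    occupied = set()
    for t, scan in enumerate(scans):
        for x, y, z in scan:
            occupied.add((
                t,
                math.floor(x / voxel_size),
                math.floor(y / voxel_size),
                math.floor(z / voxel_size),
            ))
    return occupied
```

Storing only the occupied indices gives the sparse representation that sparse convolution libraries typically operate on. A voxel occupied at one time index but not at the next is a simple cue that something moved.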
