Zhenyi Wang

CoRe: Context-Robust Remasking for Diffusion Language Models

Feb 04, 2026

Abstract:Standard decoding in Masked Diffusion Models (MDMs) is hindered by context rigidity: tokens are retained based on transient high confidence, often ignoring that early predictions lack full context. This creates cascade effects where initial inconsistencies misguide the remaining generation. Existing revision strategies attempt to mitigate this by relying on static confidence scores, but these signals are inherently myopic; inconsistent tokens can appear confident to the model itself. We propose Context-Robust Remasking (CoRe), a training-free framework for inference-time revision. Rather than trusting static token probabilities, CoRe identifies context-brittle tokens by probing their sensitivity to targeted masked-context perturbations. We formalize revision as a robust optimization objective over context shifts and efficiently approximate this objective to prioritize unstable tokens for revision. On LLaDA-8B-Base, CoRe delivers consistent improvements across reasoning and code benchmarks, outperforming compute-matched baselines and improving MBPP by up to 9.2 percentage points.
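
The revision step described above can be illustrated with a minimal sketch. It assumes a hypothetical scorer `prob_fn(context, i, token)` that returns the model's probability of `token` at masked position `i`. It also swaps CoRe's targeted perturbations for randomly sampled context maskings and approximates the worst case over context shifts by the largest observed confidence drop. It is an illustration under those assumptions, not the authors' implementation.

```python
import random
from typing import Callable, List, Sequence

MASK = "<mask>"

# Hypothetical scorer: probability that position i holds `token`,
# given a context in which position i (and possibly others) is masked.
ProbFn = Callable[[Sequence[str], int, str], float]


def brittleness(tokens: List[str], prob_fn: ProbFn, n_probes: int = 16,
                mask_frac: float = 0.3, seed: int = 0) -> List[float]:
    """Worst observed confidence drop of each token under random context maskings."""
    rng = random.Random(seed)
    n = len(tokens)
    scores = []
    for i in range(n):
        ctx = list(tokens)
        ctx[i] = MASK
        base = prob_fn(ctx, i, tokens[i])
        others = [j for j in range(n) if j != i]
        k = max(1, int(mask_frac * len(others)))
        worst = 0.0
        for _ in range(n_probes):
            probe = list(ctx)
            for j in rng.sample(others, min(k, len(others))):
                probe[j] = MASK
            # Max over sampled shifts stands in for the inner worst case.
            worst = max(worst, base - prob_fn(probe, i, tokens[i]))
        scores.append(worst)
    return scores


def remask_brittle(tokens: List[str], prob_fn: ProbFn, budget: int) -> List[str]:
    """Re-mask the `budget` tokens whose confidence is least robust to context shifts."""
    scores = brittleness(tokens, prob_fn)
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    chosen = set(ranked[:budget])
    return [MASK if i in chosen else t for i, t in enumerate(tokens)]


def toy_prob(ctx: Sequence[str], i: int, token: str) -> float:
    """Hypothetical toy scorer: smoothed agreement with visible neighbours."""
    nbrs = [ctx[j] for j in (i - 1, i + 1) if 0 <= j < len(ctx) and ctx[j] != MASK]
    return (1 + sum(t == token for t in nbrs)) / (2 + len(nbrs))


revised = remask_brittle(list("aabaa"), toy_prob, budget=2)
```

The re-masked positions would then be re-predicted by the diffusion model in later denoising steps; that outer loop is not shown.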

Medical SAM3: A Foundation Model for Universal Prompt-Driven Medical Image Segmentation

Jan 15, 2026

Abstract:Promptable segmentation foundation models such as SAM3 have demonstrated strong generalization capabilities through interactive and concept-based prompting. However, their direct applicability to medical image segmentation remains limited by severe domain shifts, the absence of privileged spatial prompts, and the need to reason over complex anatomical and volumetric structures. Here we present Medical SAM3, a foundation model for universal prompt-driven medical image segmentation, obtained by fully fine-tuning SAM3 on large-scale, heterogeneous 2D and 3D medical imaging datasets with paired segmentation masks and text prompts. Through a systematic analysis of vanilla SAM3, we observe that its performance degrades substantially on medical data, with its apparent competitiveness largely relying on strong geometric priors such as ground-truth-derived bounding boxes. These findings motivate full model adaptation beyond prompt engineering alone. By fine-tuning SAM3's model parameters on 33 datasets spanning 10 medical imaging modalities, Medical SAM3 acquires robust domain-specific representations while preserving prompt-driven flexibility. Extensive experiments across organs, imaging modalities, and dimensionalities demonstrate consistent and significant performance gains, particularly in challenging scenarios characterized by semantic ambiguity, complex morphology, and long-range 3D context. Our results establish Medical SAM3 as a universal, text-guided segmentation foundation model for medical imaging and highlight the importance of holistic model adaptation for achieving robust prompt-driven segmentation under severe domain shift. Code and model will be made available at https://github.com/AIM-Research-Lab/Medical-SAM3.

RAIR: A Rule-Aware Benchmark Uniting Challenging Long-Tail and Visual Salience Subset for E-commerce Relevance Assessment

Dec 31, 2025

Abstract:Search relevance plays a central role in web e-commerce. While large language models (LLMs) have shown strong results on relevance tasks, existing benchmarks lack sufficient complexity for comprehensive model assessment, resulting in an absence of standardized relevance evaluation metrics across the industry. To address this limitation, we propose the Rule-Aware benchmark with Image for Relevance assessment (RAIR), a Chinese dataset derived from real-world scenarios. RAIR establishes a standardized framework for relevance assessment and provides a set of universal rules, which form the foundation for standardized evaluation. Additionally, RAIR analyzes the essential capabilities required of current relevance models and introduces a comprehensive dataset consisting of three subsets: (1) a general subset with industry-balanced sampling to evaluate fundamental model competencies; (2) a long-tail hard subset focusing on challenging cases to assess performance limits; (3) a visual salience subset for evaluating multimodal understanding capabilities. We conducted experiments on RAIR using 14 open- and closed-source models. The results demonstrate that RAIR presents sufficient challenges even for GPT-5, which achieved the best performance. RAIR data are now available, serving as an industry benchmark for relevance assessment while providing new insights into general LLM and Visual Language Model (VLM) evaluation.

Dynamic Feedback Engines: Layer-Wise Control for Self-Regulating Continual Learning

Dec 25, 2025

Abstract:Continual learning aims to acquire new tasks while preserving performance on previously learned ones, but most methods struggle with catastrophic forgetting. Existing approaches typically treat all layers uniformly, often trading stability for plasticity or vice versa. However, different layers naturally exhibit varying levels of uncertainty (entropy) when classifying tasks. High-entropy layers tend to underfit by failing to capture task-specific patterns, while low-entropy layers risk overfitting by becoming overly confident and specialized. To address this imbalance, we propose an entropy-aware continual learning method that employs a dynamic feedback mechanism to regulate each layer based on its entropy. Specifically, our approach reduces entropy in high-entropy layers to mitigate underfitting and increases entropy in overly confident layers to alleviate overfitting. This adaptive regulation encourages the model to converge to wider local minima, which have been shown to improve generalization. Our method is general and can be seamlessly integrated with both replay- and regularization-based approaches. Experiments on various datasets demonstrate substantial performance gains over state-of-the-art continual learning baselines.
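
A minimal sketch of the layer-wise feedback idea, assuming each layer exposes a predictive distribution (for example through a hypothetical auxiliary classifier head) and that "high" and "low" entropy are judged against two hand-picked thresholds, `low` and `high`. The sign of each layer's entropy term flips depending on which side of the band the layer falls. In a real training loop these terms would be computed on differentiable tensors and added to the task loss; plain floats are used here only to show the control logic.

```python
import math
from typing import List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def feedback_weights(layer_entropies: Sequence[float], low: float, high: float,
                     strength: float = 0.1) -> List[float]:
    """Per-layer weight on the entropy term added to the loss.

    +strength: minimising the loss lowers entropy (layer is underfitting).
    -strength: minimising the loss raises entropy (layer is over-confident).
    0.0:       layer is inside the target band and left alone.
    """
    weights = []
    for h in layer_entropies:
        if h > high:
            weights.append(strength)
        elif h < low:
            weights.append(-strength)
        else:
            weights.append(0.0)
    return weights


def entropy_feedback_term(layer_probs: Sequence[Sequence[float]], low: float,
                          high: float, strength: float = 0.1) -> float:
    """Regulariser to add to the task loss; thresholds are hypothetical hyperparameters."""
    hs = [entropy(p) for p in layer_probs]
    ws = feedback_weights(hs, low, high, strength)
    return sum(w * h for w, h in zip(ws, hs))
```

Using a dead band between `low` and `high` keeps well-calibrated layers untouched, so only the layers at either extreme receive feedback.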

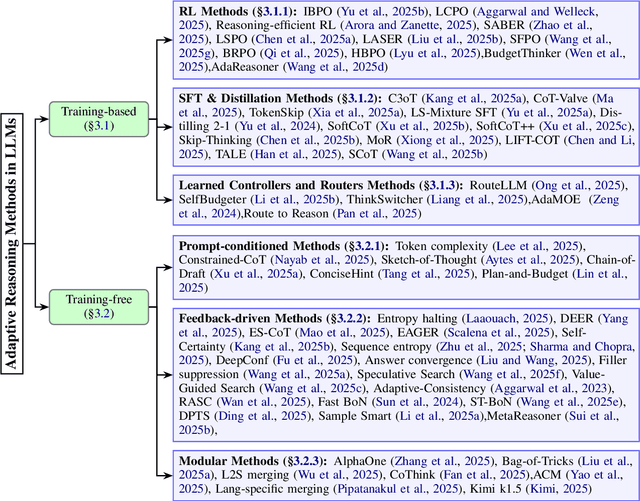

From Efficiency to Adaptivity: A Deeper Look at Adaptive Reasoning in Large Language Models

Nov 13, 2025

Abstract:Recent advances in large language models (LLMs) have made reasoning a central benchmark for evaluating intelligence. While prior surveys focus on efficiency by examining how to shorten reasoning chains or reduce computation, this view overlooks a fundamental challenge: current LLMs apply uniform reasoning strategies regardless of task complexity, generating long traces for trivial problems while failing to extend reasoning for difficult tasks. This survey reframes reasoning through the lens of adaptivity: the capability to allocate reasoning effort based on input characteristics such as difficulty and uncertainty. We make three contributions. First, we formalize deductive, inductive, and abductive reasoning within the LLM context, connecting these classical cognitive paradigms with their algorithmic realizations. Second, we formalize adaptive reasoning as a control-augmented policy optimization problem balancing task performance with computational cost, distinguishing learned policies from inference-time control mechanisms. Third, we propose a systematic taxonomy organizing existing methods into training-based approaches that internalize adaptivity through reinforcement learning, supervised fine-tuning, and learned controllers, and training-free approaches that achieve adaptivity through prompt conditioning, feedback-driven halting, and modular composition. This framework clarifies how different mechanisms realize adaptive reasoning in practice and enables systematic comparison across diverse strategies. We conclude by identifying open challenges in self-evaluation, meta-reasoning, and human-aligned reasoning control.
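
One generic way to write a cost-aware objective of the kind described above, under assumptions not taken from the survey itself: a policy $\pi$ produces a reasoning trace $z$ and an answer $y$ for input $x$, $R$ scores task performance, $C$ measures computation (for example, the number of reasoning tokens), and $\lambda \ge 0$ sets the trade-off. Adaptivity then means the optimal trace length can differ across inputs, since the marginal reward of extra reasoning depends on difficulty.

```latex
\max_{\pi}\; \mathbb{E}_{x \sim \mathcal{D}}\,
  \mathbb{E}_{(z, y) \sim \pi(\cdot \mid x)}
  \big[\, R(x, y) - \lambda\, C(z) \,\big],
  \qquad \lambda \ge 0
```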

Adaptive Defense against Harmful Fine-Tuning for Large Language Models via Bayesian Data Scheduler

Oct 31, 2025

Abstract:Harmful fine-tuning poses critical safety risks to fine-tuning-as-a-service for large language models. Existing defense strategies preemptively build robustness via attack simulation but suffer from fundamental limitations: (i) the infeasibility of extending attack simulations beyond bounded threat models due to the inherent difficulty of anticipating unknown attacks, and (ii) limited adaptability to varying attack settings, as simulation fails to capture their variability and complexity. To address these challenges, we propose Bayesian Data Scheduler (BDS), an adaptive tuning-stage defense strategy with no need for attack simulation. BDS formulates harmful fine-tuning defense as a Bayesian inference problem, learning the posterior distribution of each data point's safety attribute, conditioned on the fine-tuning and alignment datasets. The fine-tuning process is then constrained by weighting data with their safety attributes sampled from the posterior, thus mitigating the influence of harmful data. By leveraging the post hoc nature of Bayesian inference, the posterior is conditioned on the fine-tuning dataset, enabling BDS to tailor its defense to the specific dataset, thereby achieving adaptive defense. Furthermore, we introduce a neural scheduler based on amortized Bayesian learning, enabling efficient transfer to new data without retraining. Comprehensive results across diverse attack and defense settings demonstrate the state-of-the-art performance of our approach. Code is available at https://github.com/Egg-Hu/Bayesian-Data-Scheduler.
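
The weighting step can be sketched as follows, assuming (hypothetically) that each example's safety attribute has a Beta posterior with already-inferred parameters `alpha` and `beta`. The sketch covers only "sample one weight per example, then weight the loss"; the posterior inference itself and the amortized neural scheduler are not shown.

```python
import random
from typing import List, Sequence


def sample_safety_weights(alphas: Sequence[float], betas: Sequence[float],
                          rng: random.Random) -> List[float]:
    """Draw one safety attribute in [0, 1] per example from its (assumed) Beta posterior."""
    return [rng.betavariate(a, b) for a, b in zip(alphas, betas)]


def weighted_loss(losses: Sequence[float], weights: Sequence[float]) -> float:
    """Normalised weighted average, so likely-harmful examples contribute little."""
    total = sum(weights)
    if total == 0:
        return 0.0
    return sum(w * l for w, l in zip(weights, losses)) / total


rng = random.Random(0)
# Example 0 looks safe (posterior mass near 1); example 1 looks harmful (near 0).
w = sample_safety_weights([50.0, 1.0], [1.0, 50.0], rng)
step_loss = weighted_loss([0.7, 2.3], w)
```

Sampling the weights, rather than using posterior means, keeps some uncertainty in the schedule; re-drawing them each step is one natural choice.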

Sparse Model Inversion: Efficient Inversion of Vision Transformers for Data-Free Applications

Oct 31, 2025

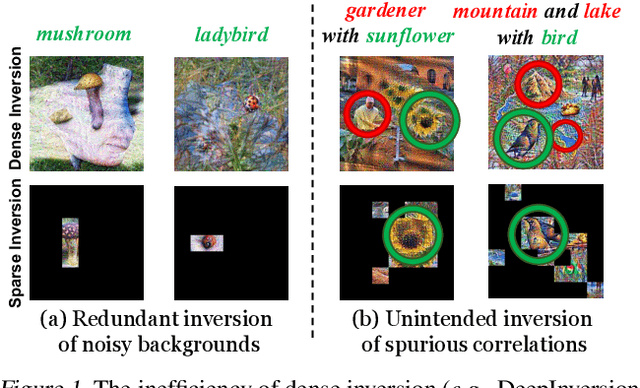

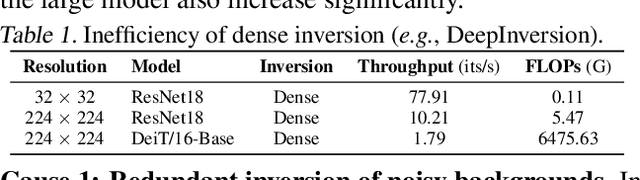

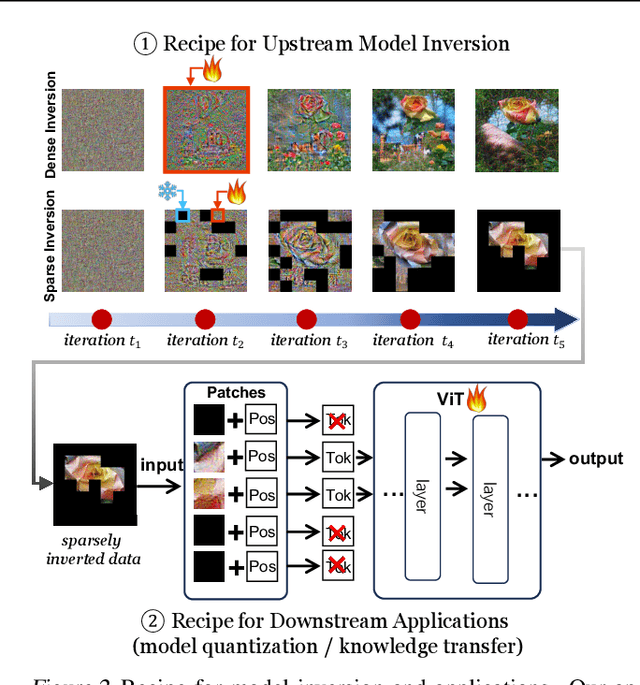

Abstract:Model inversion, which aims to reconstruct the original training data from pre-trained discriminative models, is especially useful when the original training data is unavailable due to privacy, usage rights, or size constraints. However, existing dense inversion methods attempt to reconstruct the entire image area, making them extremely inefficient when inverting high-resolution images from large-scale Vision Transformers (ViTs). We further identify two underlying causes of this inefficiency: the redundant inversion of noisy backgrounds and the unintended inversion of spurious correlations, a phenomenon we term "hallucination" in model inversion. To address these limitations, we propose a novel sparse model inversion strategy that serves as a plug-and-play extension to speed up existing dense inversion methods without modifying their original loss functions. Specifically, we selectively invert semantic foregrounds while stopping the inversion of noisy backgrounds and potential spurious correlations. Through both theoretical and empirical studies, we validate the efficacy of our approach in achieving significant inversion acceleration (up to 3.79× faster) while maintaining comparable or even enhanced downstream performance in data-free model quantization and data-free knowledge transfer. Code is available at https://github.com/Egg-Hu/SMI.
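
The "stop inverting the background" idea can be sketched as progressive patch pruning. This sketch assumes a per-patch foreground score (for example, hypothetically, the class-token attention a ViT assigns to each patch) and a fixed keep ratio per stage; only patches still in the active set would continue to receive gradient updates. The scores below are placeholders, not model outputs.

```python
from typing import List, Sequence


def sparsify(active: Sequence[int], scores: Sequence[float],
             keep_ratio: float) -> List[int]:
    """Keep the highest-scoring fraction of the currently active patch indices."""
    k = max(1, round(keep_ratio * len(active)))
    kept = sorted(active, key=lambda i: scores[i], reverse=True)[:k]
    return sorted(kept)


# A 224x224 input split into 16x16 patches gives a 14x14 = 196-patch grid.
num_patches = 196
scores = [((i * 37) % 101) / 100 for i in range(num_patches)]  # placeholder scores
active = list(range(num_patches))
for _ in range(2):  # two pruning stages
    active = sparsify(active, scores, keep_ratio=0.5)
```

Because pruned patches are never re-activated, the per-step cost shrinks with the active set; recomputing scores between stages would be a natural refinement.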

Model-Agnostic Gender Bias Control for Text-to-Image Generation via Sparse Autoencoder

Jul 28, 2025

Abstract:Text-to-image (T2I) diffusion models often exhibit gender bias, particularly by generating stereotypical associations between professions and gendered subjects. This paper presents SAE Debias, a lightweight and model-agnostic framework for mitigating such bias in T2I generation. Unlike prior approaches that rely on CLIP-based filtering or prompt engineering, which often require model-specific adjustments and offer limited control, SAE Debias operates directly within the feature space without retraining or architectural modifications. By leveraging a k-sparse autoencoder pre-trained on a gender bias dataset, the method identifies gender-relevant directions within the sparse latent space, capturing professional stereotypes. Specifically, a biased direction per profession is constructed from sparse latents and suppressed during inference to steer generations toward more gender-balanced outputs. Trained only once, the sparse autoencoder provides a reusable debiasing direction, offering effective control and interpretable insight into biased subspaces. Extensive evaluations across multiple T2I models, including Stable Diffusion 1.4, 1.5, 2.1, and SDXL, demonstrate that SAE Debias substantially reduces gender bias while preserving generation quality. To the best of our knowledge, this is the first work to apply sparse autoencoders for identifying and intervening in gender bias within T2I models. These findings contribute toward building socially responsible generative AI, providing an interpretable and model-agnostic tool to support fairness in text-to-image generation.
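
A minimal sketch of the suppression step in a sparse latent space. It assumes the latents already come from a hypothetical top-k sparse encoder (only the top-k selection is shown), and it builds each profession's biased direction as a difference of mean latents between two hypothetical prompt groups. That construction is one plausible choice, not necessarily the paper's exact recipe.

```python
import math
from typing import List, Sequence

Vec = List[float]


def topk_sparsify(z: Sequence[float], k: int) -> Vec:
    """Keep the k largest activations and zero the rest (k-sparse latent)."""
    keep = set(sorted(range(len(z)), key=lambda i: z[i], reverse=True)[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(z)]


def mean_vec(vs: Sequence[Sequence[float]]) -> Vec:
    return [sum(col) / len(vs) for col in zip(*vs)]


def bias_direction(group_a: Sequence[Sequence[float]],
                   group_b: Sequence[Sequence[float]]) -> Vec:
    """Unit vector from the group-b mean latent to the group-a mean latent."""
    d = [a - b for a, b in zip(mean_vec(group_a), mean_vec(group_b))]
    norm = math.sqrt(sum(x * x for x in d)) or 1.0
    return [x / norm for x in d]


def suppress(z: Sequence[float], d: Sequence[float], strength: float = 1.0) -> Vec:
    """Remove (strength=1) or damp the component of z along unit direction d."""
    proj = sum(zi * di for zi, di in zip(z, d))
    return [zi - strength * proj * di for zi, di in zip(z, d)]
```

In a full pipeline the edited latent would be decoded back into the model's feature space before generation; that decoder is not shown.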

SurgeryV2: Bridging the Gap Between Model Merging and Multi-Task Learning with Deep Representation Surgery

Oct 18, 2024

Abstract:Model merging-based multitask learning (MTL) offers a promising approach for performing MTL by merging multiple expert models without requiring access to raw training data. However, in this paper, we examine the merged model's representation distribution and uncover a critical issue of "representation bias". This bias arises from a significant distribution gap between the representations of the merged and expert models, leading to suboptimal performance of the merged MTL model. To address this challenge, we first propose a representation surgery solution called Surgery. Surgery is a lightweight, task-specific module that aligns the final layer representations of the merged model with those of the expert models, effectively alleviating bias and improving the merged model's performance. Despite these improvements, a performance gap remains compared to the traditional MTL method. Further analysis reveals that representation bias phenomena exist at each layer of the merged model, and aligning representations only in the last layer is insufficient for fully reducing systemic bias because biases introduced at each layer can accumulate and interact in complex ways. To tackle this, we then propose a more comprehensive solution, deep representation surgery (also called SurgeryV2), which mitigates representation bias across all layers, and thus bridges the performance gap between model merging-based MTL and traditional MTL. Finally, we design an unsupervised optimization objective to optimize both the Surgery and SurgeryV2 modules. Our experimental results show that incorporating these modules into state-of-the-art (SOTA) model merging schemes leads to significant performance gains. Notably, our SurgeryV2 scheme reaches almost the same level as individual expert models or the traditional MTL model. The code is available at https://github.com/EnnengYang/SurgeryV2.
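
The alignment idea can be illustrated with a deliberately simplified module: a per-task bias vector subtracted from the merged model's representation, fitted on unlabeled inputs by minimising the squared distance to the expert's representation, which has a closed-form solution. The actual Surgery module is a learned lightweight network with its own unsupervised objective; the bias-only form and the L2 loss here are assumptions chosen to keep the sketch short.

```python
from typing import List, Sequence

Vec = List[float]


def fit_bias_surgery(merged: Sequence[Sequence[float]],
                     expert: Sequence[Sequence[float]]) -> Vec:
    """Closed-form b minimising mean ||(z_merged - b) - z_expert||^2.

    Needs no labels: only representations of the same unlabeled inputs
    from the merged model and from the task's expert model.
    """
    n = len(merged)
    return [sum(m[j] - e[j] for m, e in zip(merged, expert)) / n
            for j in range(len(merged[0]))]


def apply_surgery(z: Sequence[float], b: Sequence[float]) -> Vec:
    """Shift a merged representation toward the expert's representation space."""
    return [zi - bi for zi, bi in zip(z, b)]


def mean_sq_gap(merged: Sequence[Sequence[float]],
                expert: Sequence[Sequence[float]]) -> float:
    """Average squared L2 distance between paired representations."""
    return sum(sum((m - e) ** 2 for m, e in zip(zm, ze))
               for zm, ze in zip(merged, expert)) / len(merged)
```

Fitting such a correction at every layer, instead of only the last one, is a rough analogue of the layer-wise idea behind SurgeryV2.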

Large Language Model Enabled Semantic Communication Systems

Jul 19, 2024

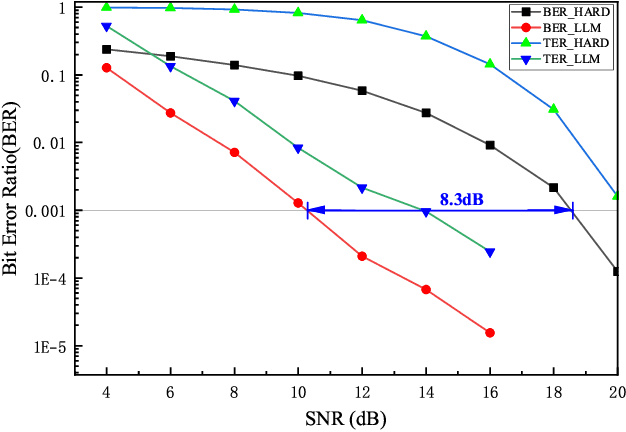

Abstract:Large language models (LLMs) have recently demonstrated state-of-the-art performance across various natural language processing (NLP) tasks, achieving near-human levels in multiple language understanding challenges and aligning closely with the core principles of semantic communication. Inspired by LLMs' advancements in semantic processing, we propose an innovative LLM-enabled semantic communication system framework, named LLM-SC, that applies LLMs directly to the physical layer coding and decoding for the first time. By analyzing the relationship between the training process of LLMs and the optimization objectives of semantic communication, we propose training a semantic encoder through LLMs' tokenizer training and establishing a semantic knowledge base via the LLMs' unsupervised pre-training process. This knowledge base aids in constructing the optimal decoder by providing the prior probability of the transmitted language sequence. Based on this foundation, we derive the optimal decoding criterion for the receiver and introduce the beam search algorithm to further reduce the complexity. Furthermore, we assert that existing LLMs can be employed directly for LLM-SC without additional re-training or fine-tuning. Simulation results demonstrate that LLM-SC outperforms classical DeepSC at signal-to-noise ratios (SNR) exceeding 3 dB, enabling error-free transmission of semantic information under high SNR, which is unattainable by DeepSC. In addition to semantic-level performance, LLM-SC demonstrates compatibility with technical-level performance, achieving approximately 8 dB coding gain for a bit error ratio (BER) of $10^{-3}$ without any channel coding while maintaining the same joint source-channel coding rate as traditional communication systems.
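
The decoding rule can be sketched as beam search over token sequences that adds an LLM prior to the channel log-likelihood. This toy version assumes each token maps to a short BPSK-style codeword sent over an AWGN channel with known noise level `sigma`, and it uses a hypothetical hand-written `toy_lm` in place of a real LLM; the codebook, channel model, and scores are illustrative only.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

LogPrior = Callable[[Tuple[str, ...], str], float]


def channel_loglik(received: Sequence[float], codeword: Sequence[float],
                   sigma: float) -> float:
    """Gaussian log-likelihood up to an additive constant."""
    return -sum((r - c) ** 2 for r, c in zip(received, codeword)) / (2 * sigma ** 2)


def beam_decode(blocks: Sequence[Sequence[float]],
                codebook: Dict[str, Sequence[float]],
                log_prior: LogPrior, sigma: float, beam_width: int = 3) -> List[str]:
    """Approximate MAP decoding: argmax of log P_LM(tokens) + log p(received | tokens)."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    for block in blocks:
        candidates = [
            (prefix + (tok,), score + log_prior(prefix, tok)
             + channel_loglik(block, cw, sigma))
            for prefix, score in beams
            for tok, cw in codebook.items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return list(beams[0][0])


codebook = {"the": (1.0, 1.0), "cat": (1.0, -1.0), "sat": (-1.0, 1.0)}


def toy_lm(prefix: Tuple[str, ...], tok: str) -> float:
    """Hypothetical prior: 'cat' is likely after 'the', otherwise uniform."""
    if prefix[-1:] == ("the",):
        return math.log(0.8 if tok == "cat" else 0.1)
    return math.log(1 / 3)


# The second block (1, 0) is equally far from "the" and "cat"; the prior breaks the tie.
decoded = beam_decode([(1.0, 1.0), (1.0, 0.0)], codebook, toy_lm, sigma=0.5)
```

Exhaustive MAP search grows exponentially with sequence length, which is why a fixed beam width is used to bound the number of hypotheses kept per step.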