Zheng Jiang

SSTODE: Ocean-Atmosphere Physics-Informed Neural ODEs for Sea Surface Temperature Prediction

Nov 07, 2025

Abstract:Sea Surface Temperature (SST) is crucial for understanding upper-ocean thermal dynamics and ocean-atmosphere interactions, which have profound economic and social impacts. While data-driven models show promise in SST prediction, their black-box nature often limits interpretability and overlooks key physical processes. Recently, physics-informed neural networks have been gaining momentum but struggle with complex ocean-atmosphere dynamics due to 1) inadequate characterization of seawater movement (e.g., coastal upwelling) and 2) insufficient integration of external SST drivers (e.g., turbulent heat fluxes). To address these challenges, we propose SSTODE, a physics-informed Neural Ordinary Differential Equations (Neural ODEs) framework for SST prediction. First, we derive ODEs from fluid transport principles, incorporating both advection and diffusion to model ocean spatiotemporal dynamics. Through variational optimization, we recover a latent velocity field that explicitly governs the temporal dynamics of SST. Building upon these ODEs, we introduce an Energy Exchanges Integrator (EEI), inspired by ocean heat budget equations, to account for external forcing factors. Thus, the variations in the components of these factors provide deeper insights into SST dynamics. Extensive experiments demonstrate that SSTODE achieves state-of-the-art performance on global and regional SST forecasting benchmarks. Furthermore, SSTODE visually reveals the impact of advection dynamics, thermal diffusion patterns, and diurnal heating-cooling cycles on SST evolution. These findings demonstrate the model's interpretability and physical consistency.
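
As a rough illustration of the advection-diffusion dynamics the abstract refers to, the sketch below evaluates dT/dt = -u dT/dx + kappa d2T/dx2 + Q on a periodic 1D grid and advances it with one forward-Euler step. This is not the SSTODE implementation: the 1D grid, the function names, the fixed velocity `velocity`, the diffusivity `kappa`, and the source term `forcing` (standing in for external heat exchange) are all assumptions made for illustration, and no neural network is involved.

```python
def advection_diffusion_rhs(temps, velocity, kappa, dx, forcing=None):
    """Return dT/dt = -u * dT/dx + kappa * d2T/dx2 + Q on a periodic 1D grid.

    All names here are hypothetical; the velocity is given, not learned.
    """
    n = len(temps)
    if forcing is None:
        forcing = [0.0] * n  # no external heating or cooling
    rhs = []
    for i in range(n):
        left = temps[(i - 1) % n]   # periodic neighbour to the left
        right = temps[(i + 1) % n]  # periodic neighbour to the right
        gradient = (right - left) / (2.0 * dx)                    # central difference
        laplacian = (right - 2.0 * temps[i] + left) / (dx * dx)   # second difference
        rhs.append(-velocity[i] * gradient + kappa * laplacian + forcing[i])
    return rhs


def euler_step(temps, velocity, kappa, dx, dt, forcing=None):
    """Advance the temperature field by one forward-Euler step of size dt."""
    rhs = advection_diffusion_rhs(temps, velocity, kappa, dx, forcing)
    return [t + dt * r for t, r in zip(temps, rhs)]


# Usage: a warm anomaly in still water spreads out while total heat is conserved.
field = [10.0, 10.0, 12.0, 10.0, 10.0]
still_water = [0.0] * len(field)
next_field = euler_step(field, still_water, kappa=0.1, dx=1.0, dt=0.1)
```

In a Neural ODE setting the right-hand side would be handed to an ODE solver and the velocity field would be inferred from data; forward Euler is used here only to keep the example dependency-free.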

HaDM-ST: Histology-Assisted Differential Modeling for Spatial Transcriptomics Generation

Aug 10, 2025

Abstract:Spatial transcriptomics (ST) reveals spatial heterogeneity of gene expression, yet its resolution is limited by current platforms. Recent methods enhance resolution via H&E-stained histology, but three major challenges persist: (1) isolating expression-relevant features from visually complex H&E images; (2) achieving spatially precise multimodal alignment in diffusion-based frameworks; and (3) modeling gene-specific variation across expression channels. We propose HaDM-ST (Histology-assisted Differential Modeling for ST Generation), a high-resolution ST generation framework conditioned on H&E images and low-resolution ST. HaDM-ST includes: (i) a semantic distillation network to extract predictive cues from H&E; (ii) a spatial alignment module enforcing pixel-wise correspondence with low-resolution ST; and (iii) a channel-aware adversarial learner for fine-grained gene-level modeling. Experiments on 200 genes across diverse tissues and species show HaDM-ST consistently outperforms prior methods, enhancing spatial fidelity and gene-level coherence in high-resolution ST predictions.

FSD-BEV: Foreground Self-Distillation for Multi-view 3D Object Detection

Jul 14, 2024

Abstract:Although multi-view 3D object detection based on the Bird's-Eye-View (BEV) paradigm has garnered widespread attention as an economical and deployment-friendly perception solution for autonomous driving, there is still a performance gap compared to LiDAR-based methods. In recent years, several cross-modal distillation methods have been proposed to transfer beneficial information from teacher models to student models, with the aim of enhancing performance. However, these methods face discrepancies in feature distribution originating from different data modalities and network structures, which make knowledge transfer exceptionally challenging. In this paper, we propose a Foreground Self-Distillation (FSD) scheme that effectively avoids the issue of distribution discrepancies, maintaining remarkable distillation effects without the need for pre-trained teacher models or cumbersome distillation strategies. Additionally, we design two Point Cloud Intensification (PCI) strategies to compensate for the sparsity of point clouds by frame combination and pseudo point assignment. Finally, we develop a Multi-Scale Foreground Enhancement (MSFE) module to extract and fuse multi-scale foreground features using a predicted elliptical Gaussian heatmap, further improving the model's performance. We integrate all the above innovations into a unified framework named FSD-BEV. Extensive experiments on the nuScenes dataset show that FSD-BEV achieves state-of-the-art performance, highlighting its effectiveness. The code and models are available at: https://github.com/CocoBoom/fsd-bev.
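
To make the idea of an elliptical Gaussian heatmap concrete, here is a minimal sketch that renders one axis-aligned elliptical Gaussian on a small grid. The abstract does not say how FSD-BEV predicts or parameterizes its heatmaps, so the function name, the axis-aligned form, and the parameters `sigma_x` and `sigma_y` are assumptions for illustration only.

```python
import math


def elliptical_gaussian_heatmap(height, width, center_x, center_y, sigma_x, sigma_y):
    """Render an axis-aligned elliptical Gaussian with peak value 1.0 at the center.

    Hypothetical helper: different spreads along x and y give the elliptical shape.
    """
    heatmap = []
    for y in range(height):
        row = []
        for x in range(width):
            dx = (x - center_x) / sigma_x  # normalized offset along x
            dy = (y - center_y) / sigma_y  # normalized offset along y
            row.append(math.exp(-0.5 * (dx * dx + dy * dy)))
        heatmap.append(row)
    return heatmap


# Usage: an object that is wider than it is tall gets a wider response along x.
heatmap = elliptical_gaussian_heatmap(7, 9, center_x=4, center_y=3, sigma_x=2.0, sigma_y=1.0)
```

A map like this could be used to weight foreground features more heavily than background ones, which is the role the abstract assigns to the MSFE module.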

Sensing Mutual Information with Random Signals in Gaussian Channels

Nov 13, 2023

Abstract:Sensing performance is typically evaluated by classical metrics, such as the Cramér-Rao bound and the signal-to-clutter-plus-noise ratio. The recent development of the integrated sensing and communication (ISAC) framework has motivated efforts to unify the metric for sensing and communication, where researchers have proposed to utilize mutual information (MI) to measure the sensing performance with deterministic signals. However, the need to communicate in ISAC systems necessitates the use of random signals for sensing applications, and a closed-form evaluation of the sensing mutual information (SMI) with random signals is not yet available in the literature. This paper investigates the achievable performance and precoder design for sensing applications with random signals. For that purpose, we first derive the closed-form expression for the SMI with random signals by utilizing random matrix theory. The result reveals some interesting physical insights regarding the relation between the SMI with deterministic and random signals. The derived SMI is then utilized to optimize the precoder by leveraging a manifold-based optimization approach. The effectiveness of the proposed methods is validated by simulation results.

Dialog-to-Actions: Building Task-Oriented Dialogue System via Action-Level Generation

Apr 03, 2023

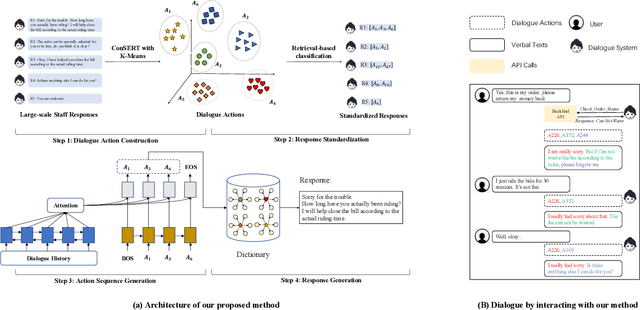

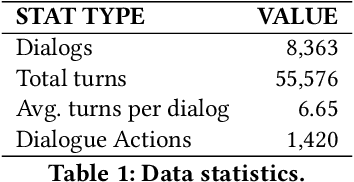

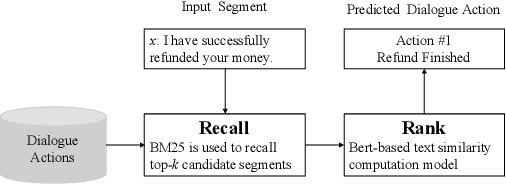

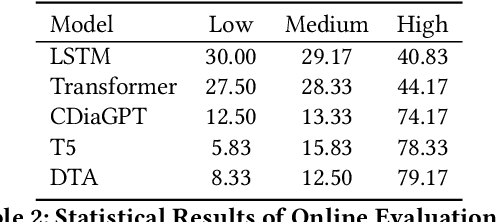

Abstract:End-to-end generation-based approaches have been investigated and applied in task-oriented dialogue systems. However, in industrial scenarios, existing methods face bottlenecks in controllability (e.g., domain-inconsistent responses and repetition) and efficiency (e.g., long computation time). In this paper, we propose a task-oriented dialogue system via action-level generation. Specifically, we first construct dialogue actions from large-scale dialogues and represent each natural language (NL) response as a sequence of dialogue actions. Further, we train a Sequence-to-Sequence model which takes the dialogue history as input and outputs a sequence of dialogue actions. The generated dialogue actions are transformed into verbal responses. Experimental results show that our lightweight method achieves competitive performance and has the advantage of controllability and efficiency.
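
The last step of that pipeline, turning generated dialogue actions into a verbal response, can be sketched as a simple template lookup. The action names, the templates, and the `render_actions` helper below are hypothetical and are not taken from the paper; the Sequence-to-Sequence model is not shown, and its predicted action sequence is supplied by hand.

```python
# Hypothetical action inventory; the paper constructs its actions from large-scale dialogues.
ACTION_TEMPLATES = {
    "greet": "Hello!",
    "ask_order_id": "Could you share your order number?",
    "confirm_refund": "Your refund has been submitted.",
}


def render_actions(actions, templates=ACTION_TEMPLATES):
    """Turn a sequence of dialogue actions into one verbal response.

    Unknown actions are rejected rather than rendered, so the output can only
    contain text that was written into the templates.
    """
    unknown = [action for action in actions if action not in templates]
    if unknown:
        raise KeyError(f"no template for actions: {unknown}")
    return " ".join(templates[action] for action in actions)


# Usage: pretend the model predicted these two actions from the dialogue history.
predicted_actions = ["greet", "ask_order_id"]
response = render_actions(predicted_actions)
```

Because the response is assembled only from known templates, this kind of design illustrates why generating actions instead of free text can make a system easier to control.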

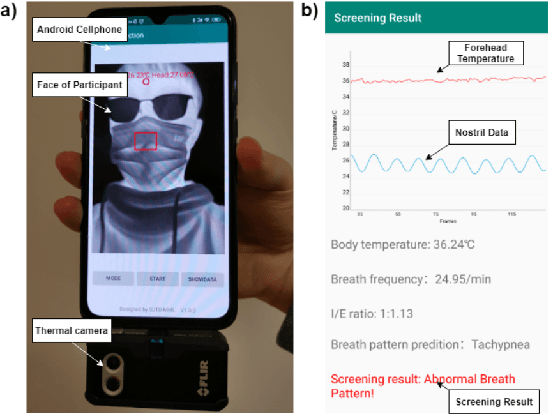

Combining Visible Light and Infrared Imaging for Efficient Detection of Respiratory Infections such as COVID-19 on Portable Device

Apr 15, 2020

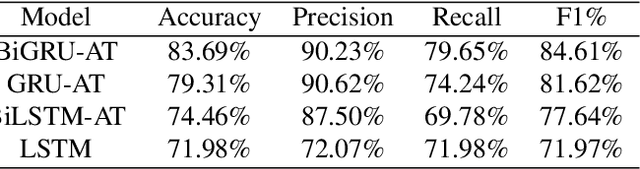

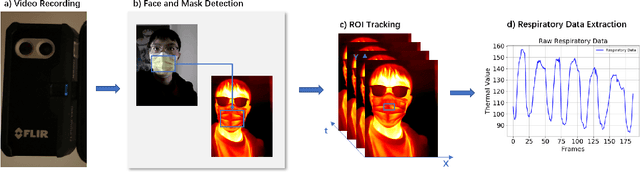

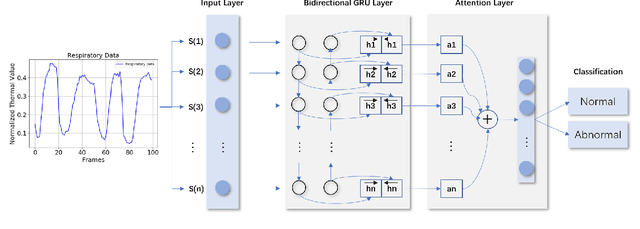

Abstract:Coronavirus Disease 2019 (COVID-19) has become a serious global epidemic in the past few months and caused huge loss to human society worldwide. For such a large-scale epidemic, early detection and isolation of potential virus carriers is essential to curb the spread of the epidemic. Recent studies have shown that one important feature of COVID-19 is the abnormal respiratory status caused by viral infections. During the epidemic, many people tend to wear masks to reduce the risk of getting sick. Therefore, in this paper, we propose a portable non-contact method to screen the health condition of people wearing masks through analysis of their respiratory characteristics. The device mainly consists of a FLIR One thermal camera and an Android phone. This may help identify potential COVID-19 patients in practical scenarios such as pre-inspection in schools and hospitals. In this work, we perform the health screening by combining the RGB and thermal videos obtained from the dual-mode camera with a deep learning architecture. We first develop a respiratory data capture technique for people wearing masks by using face recognition. Then, a bidirectional GRU neural network with an attention mechanism is applied to the respiratory data to obtain the health screening result. The results of validation experiments show that our model can identify respiratory health status with an accuracy of 83.7% on the real-world dataset. The abnormal respiratory data and part of the normal respiratory data were collected from Ruijin Hospital Affiliated to The Shanghai Jiao Tong University Medical School. Other normal respiratory data were obtained from healthy people around our researchers. This work demonstrates that the proposed portable and intelligent health screening device can be used as a pre-scan method for respiratory infections, which may help fight the current COVID-19 epidemic.
