Zeshi Yang

TokenHSI: Unified Synthesis of Physical Human-Scene Interactions through Task Tokenization

Mar 25, 2025

Abstract:Synthesizing diverse and physically plausible Human-Scene Interactions (HSI) is pivotal for both computer animation and embodied AI. Despite encouraging progress, current methods mainly focus on developing separate controllers, each specialized for a specific interaction task. This significantly hinders the ability to tackle a wide variety of challenging HSI tasks that require the integration of multiple skills, e.g., sitting down while carrying an object. To address this issue, we present TokenHSI, a single, unified transformer-based policy capable of multi-skill unification and flexible adaptation. The key insight is to model the humanoid proprioception as a separate shared token and combine it with distinct task tokens via a masking mechanism. Such a unified policy enables effective knowledge sharing across skills, thereby facilitating the multi-task training. Moreover, our policy architecture supports variable length inputs, enabling flexible adaptation of learned skills to new scenarios. By training additional task tokenizers, we can not only modify the geometries of interaction targets but also coordinate multiple skills to address complex tasks. The experiments demonstrate that our approach can significantly improve versatility, adaptability, and extensibility in various HSI tasks. Website: https://liangpan99.github.io/TokenHSI/
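The idea of a shared proprioception token combined with task tokens through a mask can be illustrated with a toy single-head attention step. This is only a sketch under stated assumptions, not the TokenHSI architecture: the token values, the task names, and the helpers `masked_attention` and `task_mask` are hypothetical, and no learned tokenizers or transformer layers are involved.

```python
import math

def masked_attention(query, keys, values, mask):
    """Single-head scaled dot-product attention that ignores masked-out tokens."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # Softmax over active tokens only; masked tokens receive exactly zero weight.
    top = max(s for s, m in zip(scores, mask) if m)
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(values[0])
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, out

def task_mask(active_tasks, num_tasks=3):
    """The proprioception token (index 0) is always active; task tokens are optional."""
    return [True] + [(i in active_tasks) for i in range(num_tasks)]

# Hypothetical 4-dimensional token embeddings (made-up numbers).
tokens = [
    [1.0, 0.0, 0.5, 0.2],  # shared proprioception token
    [0.3, 0.8, 0.1, 0.0],  # task token 0, e.g. "sit"
    [0.9, 0.1, 0.4, 0.7],  # task token 1, e.g. "carry"
    [0.2, 0.2, 0.9, 0.1],  # task token 2, e.g. "follow"
]

# Single-skill setting: only task 0 is visible to the proprioception query.
weights_sit, out_sit = masked_attention(tokens[0], tokens, tokens, task_mask({0}))
# Composite setting: tasks 0 and 1 are visible at the same time.
weights_both, out_both = masked_attention(tokens[0], tokens, tokens, task_mask({0, 1}))
```

Because the mask only changes which tokens take part in the softmax, the same function handles one active task or several, which is the property the abstract relies on when it mentions variable length inputs.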

Learning based 2D Irregular Shape Packing

Sep 19, 2023

Abstract:2D irregular shape packing is a necessary step to arrange UV patches of a 3D model within a texture atlas for memory-efficient appearance rendering in computer graphics. Being a joint, combinatorial decision-making problem involving all patch positions and orientations, this problem has well-known NP-hard complexity. Prior solutions either assume a heuristic packing order or modify the upstream mesh cut and UV mapping to simplify the problem, which either limits the packing ratio or incurs robustness or generality issues. Instead, we introduce a learning-assisted 2D irregular shape packing method that achieves a high packing quality with minimal requirements from the input. Our method iteratively selects and groups subsets of UV patches into near-rectangular super patches, essentially reducing the problem to bin-packing, based on which a joint optimization is employed to further improve the packing ratio. In order to efficiently deal with large problem instances with hundreds of patches, we train deep neural policies to predict nearly rectangular patch subsets and determine their relative poses, leading to linear time scaling with the number of patches. We demonstrate the effectiveness of our method on three datasets for UV packing, where our method achieves a higher packing ratio over several widely used baselines with competitive computational speed.
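Once patches are grouped into near-rectangular super patches, the remaining step resembles rectangle bin-packing, and the packing ratio is the covered area divided by the atlas area used. The sketch below uses a plain shelf heuristic as a stand-in; it is not the learned policy or the joint optimization from the paper, and the rectangle sizes and the names `shelf_pack` and `packing_ratio` are hypothetical.

```python
def shelf_pack(rects, bin_width):
    """Place (width, height) rectangles on horizontal shelves, tallest first."""
    placements = []
    x = y = shelf_height = 0.0
    for w, h in sorted(rects, key=lambda r: r[1], reverse=True):
        if w > bin_width:
            raise ValueError("rectangle is wider than the bin")
        if x + w > bin_width:
            # Current shelf is full: open a new shelf above it.
            y += shelf_height
            x = 0.0
            shelf_height = 0.0
        placements.append((x, y, w, h))
        x += w
        shelf_height = max(shelf_height, h)
    return placements, y + shelf_height

def packing_ratio(rects, bin_width, used_height):
    """Fraction of the used atlas area that is covered by rectangles."""
    covered = sum(w * h for w, h in rects)
    return covered / (bin_width * used_height)

# Hypothetical super patches, already approximated by their bounding rectangles.
rects = [(4, 3), (3, 3), (5, 2), (2, 2), (3, 1)]
placements, height = shelf_pack(rects, bin_width=8)
ratio = packing_ratio(rects, 8, height)
print(f"used height = {height}, packing ratio = {ratio:.3f}")
```

A greedy shelf heuristic fixes the packing order in advance, which is the kind of limitation the abstract attributes to prior heuristic solutions.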

Learning to Use Chopsticks in Diverse Styles

May 28, 2022

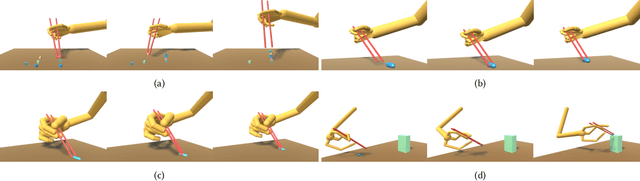

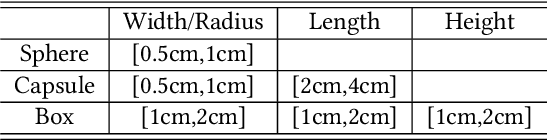

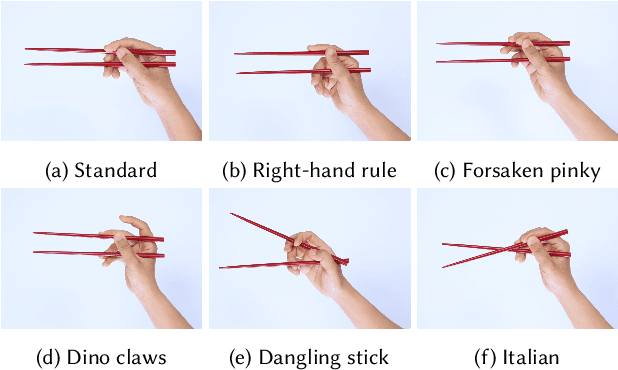

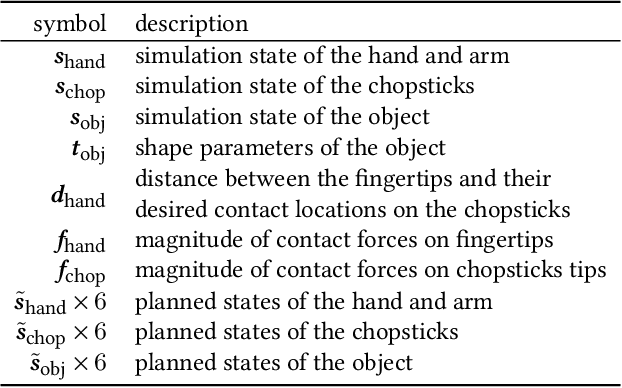

Abstract:Learning dexterous manipulation skills is a long-standing challenge in computer graphics and robotics, especially when the task involves complex and delicate interactions between the hands, tools and objects. In this paper, we focus on chopsticks-based object relocation tasks, which are common yet demanding. The key to successful chopsticks skills is steady gripping of the sticks that also supports delicate maneuvers. We automatically discover physically valid chopsticks holding poses by Bayesian Optimization (BO) and Deep Reinforcement Learning (DRL), which works for multiple gripping styles and hand morphologies without the need of example data. Given as input the discovered gripping poses and desired objects to be moved, we build physics-based hand controllers to accomplish relocation tasks in two stages. First, kinematic trajectories are synthesized for the chopsticks and hand in a motion planning stage. The key components of our motion planner include a grasping model to select suitable chopsticks configurations for grasping the object, and a trajectory optimization module to generate collision-free chopsticks trajectories. Then we train physics-based hand controllers through DRL again to track the desired kinematic trajectories produced by the motion planner. We demonstrate the capabilities of our framework by relocating objects of various shapes and sizes, in diverse gripping styles and holding positions for multiple hand morphologies. Our system achieves faster learning speed and better control robustness, when compared to vanilla systems that attempt to learn chopstick-based skills without a gripping pose optimization module and/or without a kinematic motion planner.

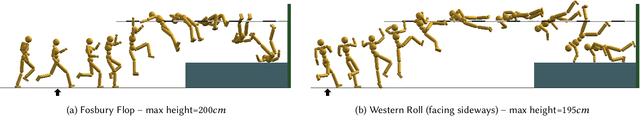

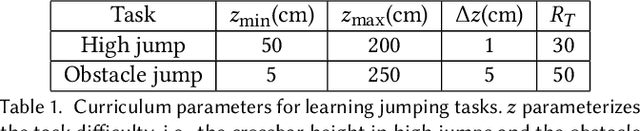

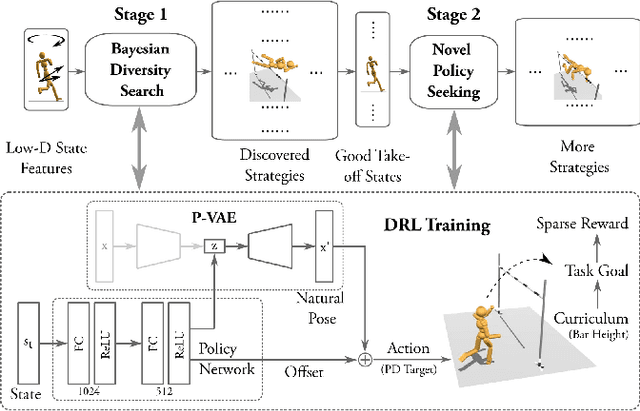

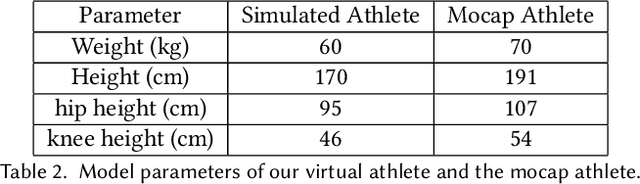

Discovering Diverse Athletic Jumping Strategies

May 02, 2021

Abstract:We present a framework that enables the discovery of diverse and natural-looking motion strategies for athletic skills such as the high jump. The strategies are realized as control policies for physics-based characters. Given a task objective and an initial character configuration, the combination of physics simulation and deep reinforcement learning (DRL) provides a suitable starting point for automatic control policy training. To facilitate the learning of realistic human motions, we propose a Pose Variational Autoencoder (P-VAE) to constrain the actions to a subspace of natural poses. In contrast to motion imitation methods, a rich variety of novel strategies can naturally emerge by exploring initial character states through a sample-efficient Bayesian diversity search (BDS) algorithm. A second stage of optimization that encourages novel policies can further enrich the unique strategies discovered. Our method allows for the discovery of diverse and novel strategies for athletic jumping motions such as high jumps and obstacle jumps with no motion examples and less reward engineering than prior work.

* 17 pages; SIGGRAPH 2021

Efficient Hyperparameter Optimization for Physics-based Character Animation

Apr 26, 2021

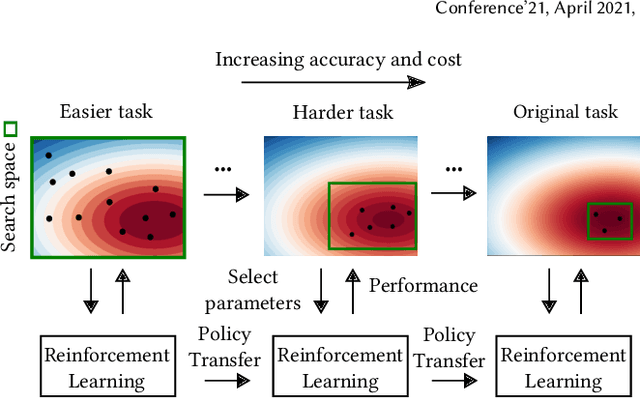

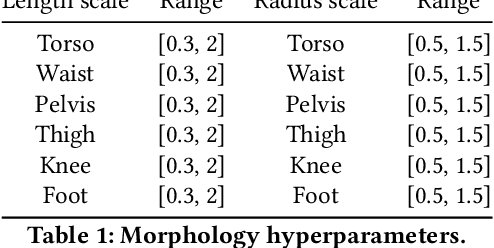

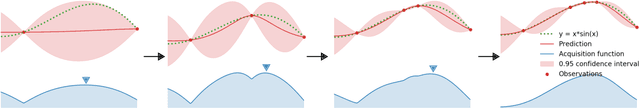

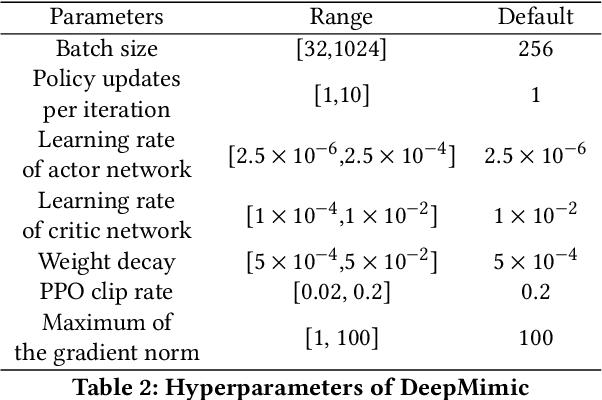

Abstract:Physics-based character animation has seen significant advances in recent years with the adoption of Deep Reinforcement Learning (DRL). However, DRL-based learning methods are usually computationally expensive and their performance crucially depends on the choice of hyperparameters. Tuning hyperparameters for these methods often requires repetitive training of control policies, which is even more computationally prohibitive. In this work, we propose a novel Curriculum-based Multi-Fidelity Bayesian Optimization framework (CMFBO) for efficient hyperparameter optimization of DRL-based character control systems. Using curriculum-based task difficulty as fidelity criterion, our method improves searching efficiency by gradually pruning search space through evaluation on easier motor skill tasks. We evaluate our method on two physics-based character control tasks: character morphology optimization and hyperparameter tuning of DeepMimic. Our algorithm significantly outperforms state-of-the-art hyperparameter optimization methods applicable for physics-based character animation. In particular, we show that hyperparameters optimized through our algorithm result in at least 5x efficiency gain comparing to author-released settings in DeepMimic.
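The pruning idea, where cheap evaluations on easier tasks narrow the search before harder tasks are attempted, can be sketched with a successive-halving-style loop. This is a simplified stand-in and not CMFBO itself: it has no Bayesian surrogate model, the scoring function `toy_score` is invented, and the candidate values and the name `prune_by_curriculum` are hypothetical.

```python
def prune_by_curriculum(candidates, evaluate, levels, keep_fraction=0.5):
    """Evaluate candidates on increasingly hard levels, keeping the best fraction each time."""
    survivors = list(candidates)
    history = []
    for level in levels:
        ranked = sorted(survivors, key=lambda c: evaluate(c, level), reverse=True)
        keep = max(1, int(len(ranked) * keep_fraction))
        survivors = ranked[:keep]
        history.append((level, list(survivors)))
    return survivors, history

def toy_score(value, level):
    # Hypothetical objective: best at value = 0.3, with harder levels
    # penalizing the distance from the optimum more strongly.
    return -level * (value - 0.3) ** 2

# Hypothetical hyperparameter candidates; levels 1..3 stand for easy to hard tasks.
candidates = [0.05, 0.1, 0.2, 0.3, 0.4, 0.6, 0.8, 1.0]
best, history = prune_by_curriculum(candidates, toy_score, levels=[1, 2, 3])
print(best)
```

Only the survivors of the easy levels are ever evaluated on the hardest level, which is where the efficiency gain of a curriculum-as-fidelity scheme comes from.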

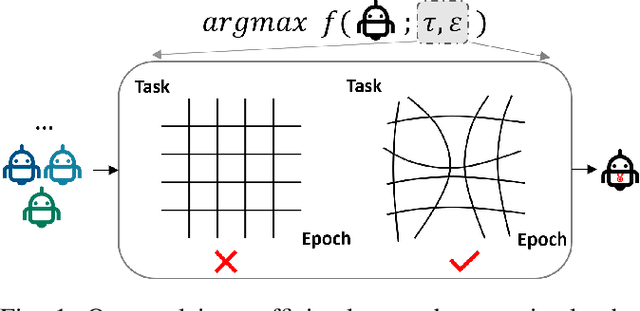

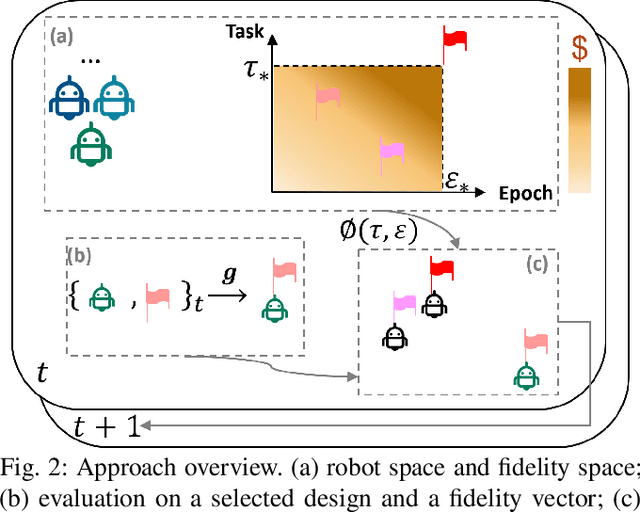

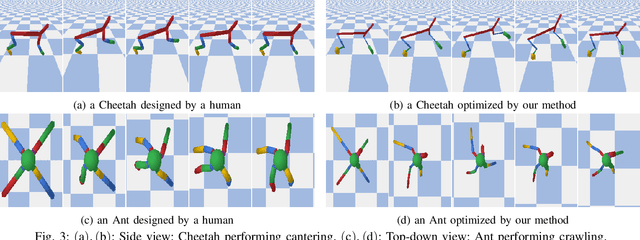

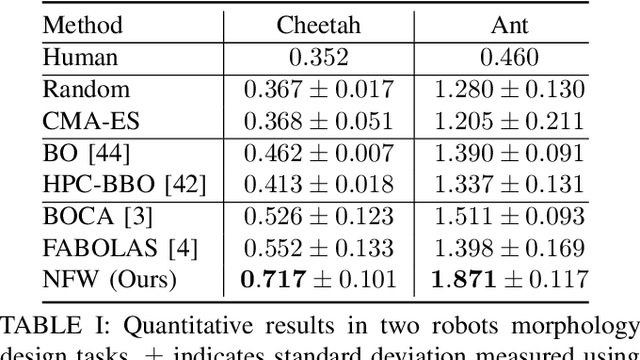

Neural fidelity warping for efficient robot morphology design

Dec 09, 2020

Abstract:We consider the problem of optimizing a robot morphology to achieve the best performance for a target task, under computational resource limitations. The evaluation process for each morphological design involves learning a controller for the design, which can consume substantial time and computational resources. To address the challenge of expensive robot morphology evaluation, we present a continuous multi-fidelity Bayesian Optimization framework that efficiently utilizes computational resources via low-fidelity evaluations. We identify the problem of non-stationarity over fidelity space. Our proposed fidelity warping mechanism can learn representations of learning epochs and tasks to model non-stationary covariances between continuous fidelity evaluations which prove challenging for off-the-shelf stationary kernels. Various experiments demonstrate that our method can utilize the low-fidelity evaluations to efficiently search for the optimal robot morphology, outperforming state-of-the-art methods.

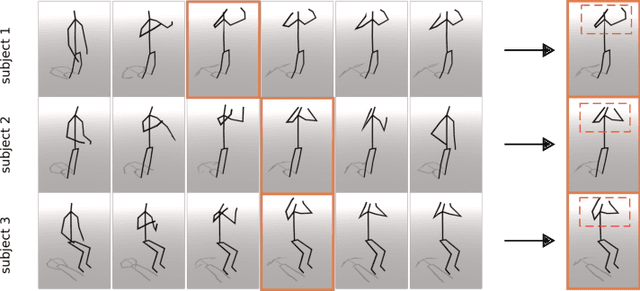

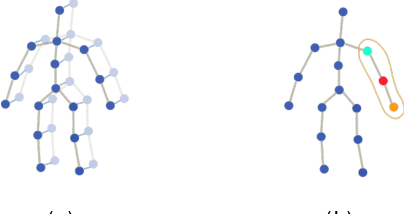

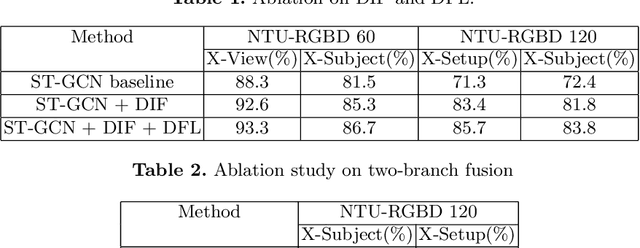

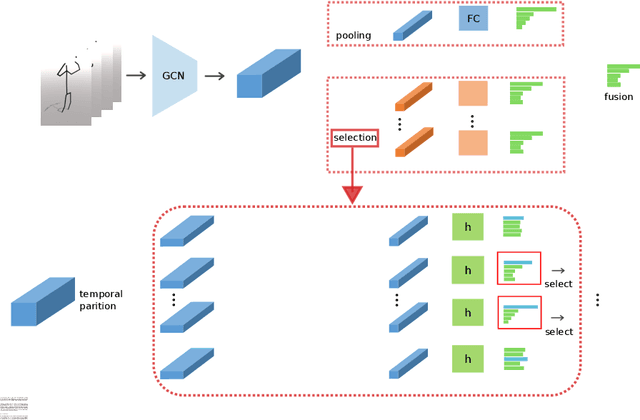

Improving Skeleton-based Action Recognition with Robust Spatial and Temporal Features

Aug 01, 2020

Abstract:Recently, skeleton-based action recognition has made significant progress in the computer vision community. Most state-of-the-art algorithms are based on Graph Convolutional Networks (GCN), and aim at improving the network structure of the backbone GCN layers. In this paper, we propose a novel mechanism to learn more robust discriminative features in space and time. More specifically, we add a Discriminative Feature Learning (DFL) branch to the last layers of the network to extract discriminative spatial and temporal features to help regularize the learning. We also formally advocate the use of Direction-Invariant Features (DIF) as input to the neural networks. We show that action recognition accuracy can be improved when these robust features are learned and used. We compare our results with those of ST-GCN and related methods on four datasets: NTU-RGBD60, NTU-RGBD120, SYSU 3DHOI and Skeleton-Kinetics.
