Zbigniew Kalbarczyk

Hierarchical Autoscaling for Large Language Model Serving with Chiron

Jan 14, 2025

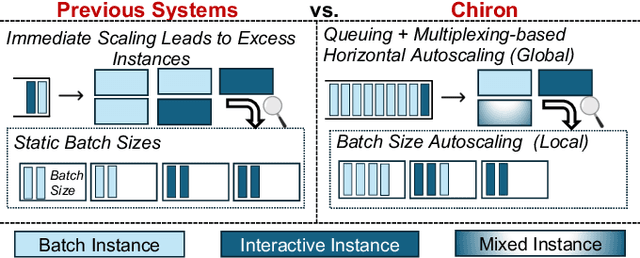

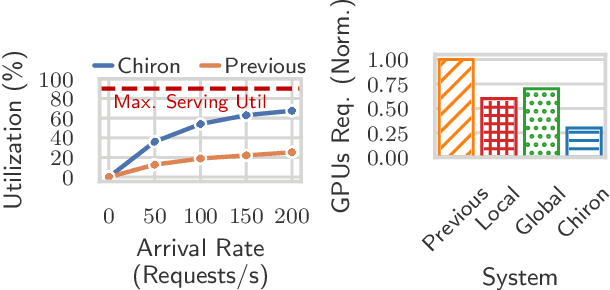

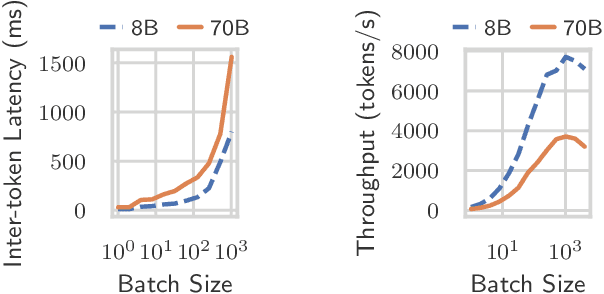

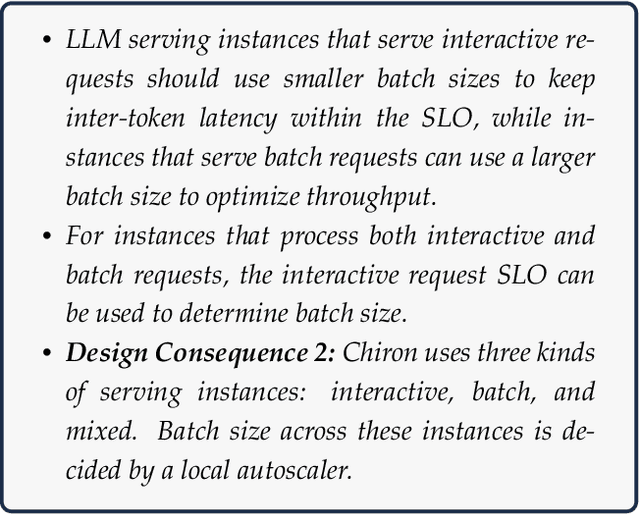

Abstract: Large language model (LLM) serving is becoming an increasingly important workload for cloud providers. Based on performance SLO requirements, LLM inference requests can be divided into (a) interactive requests that have tight SLOs on the order of seconds, and (b) batch requests that have relaxed SLOs on the order of minutes to hours. Attainment of these SLOs can degrade depending on arrival rates, multiplexing, and configuration parameters, thus necessitating resource autoscaling of serving instances and their batch sizes. However, previous autoscalers for LLM serving do not consider request SLOs, leading to unnecessary scaling and resource under-utilization. To address these limitations, we introduce Chiron, an autoscaler that uses the idea of hierarchical backpressure estimated using queue size, utilization, and SLOs. Our experiments show that Chiron achieves up to 90% higher SLO attainment and improves GPU efficiency by up to 70% compared to existing solutions.

Watch Out for the Safety-Threatening Actors: Proactively Mitigating Safety Hazards

Jun 02, 2022

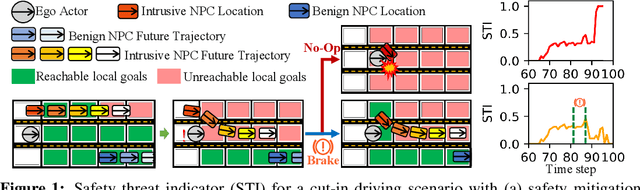

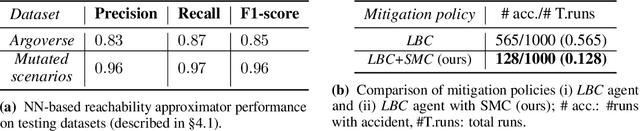

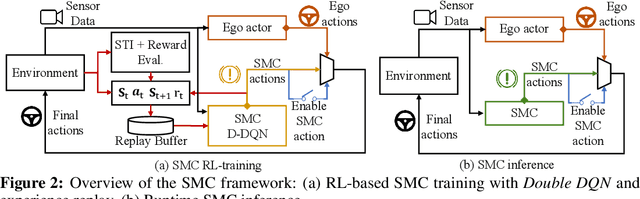

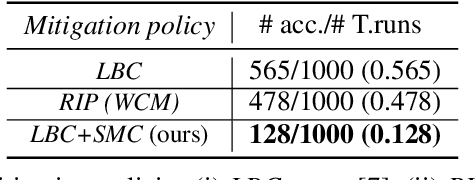

Abstract: Despite the successful demonstration of autonomous vehicles (AVs), such as self-driving cars, ensuring AV safety remains a challenging task. Although some actors influence an AV's driving decisions more than others, current approaches pay equal attention to each actor on the road. An actor's influence on the AV's decision can be characterized in terms of its ability to decrease the number of safe navigational choices for the AV. In this work, we propose a safety threat indicator (STI) using counterfactual reasoning to estimate the importance of each actor on the road with respect to its influence on the AV's safety. We use this indicator to (i) characterize the existing real-world datasets to identify rare hazardous scenarios as well as the poor performance of existing controllers in such scenarios; and (ii) design an RL-based safety mitigation controller to proactively mitigate the safety hazards those actors pose to the AV. Our approach reduces the accident rate for the state-of-the-art AV agent(s) in rare hazardous scenarios by more than 70%.

Watch out for the risky actors: Assessing risk in dynamic environments for safe driving

Oct 19, 2021

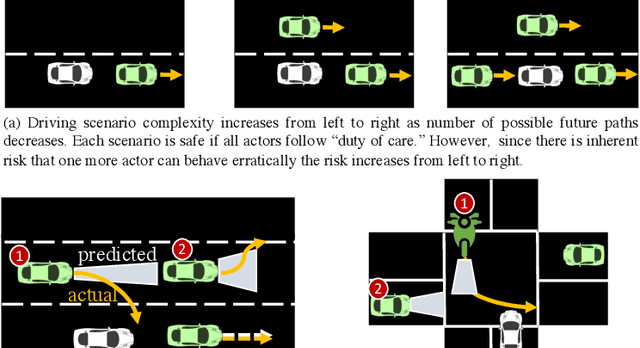

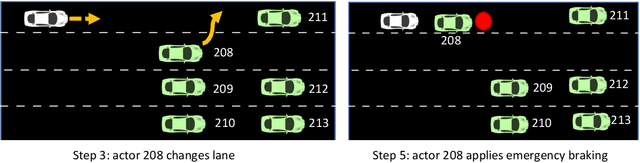

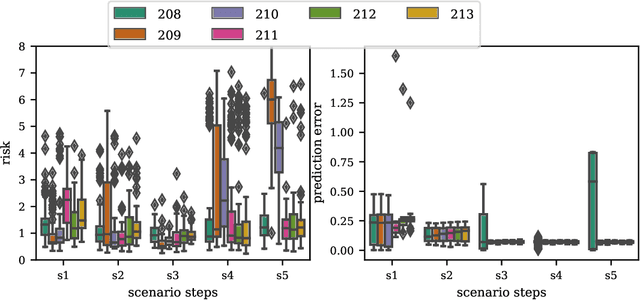

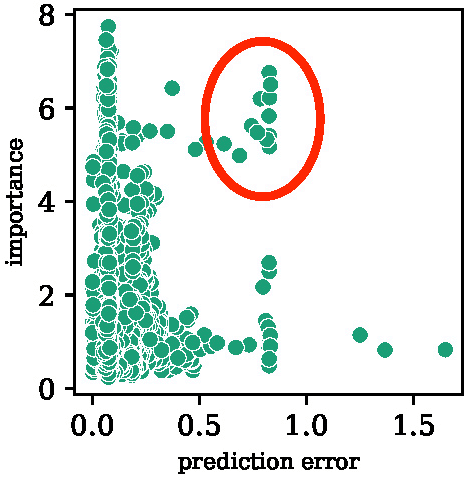

Abstract: Driving in a dynamic environment that consists of other actors is inherently a risky task, as each actor influences the driving decision and may significantly limit the number of choices in terms of navigation and safety planning. The risk encountered by the Ego actor depends on the driving scenario and the uncertainty associated with predicting the future trajectories of the other actors in the driving scenario. However, not all objects pose a similar risk. Depending on the object's type, trajectory, and position, and the uncertainty associated with these quantities, some objects pose a much higher risk than others. The higher the risk associated with an actor, the more attention must be directed towards that actor in terms of resources and safety planning. In this paper, we propose a novel risk metric to calculate the importance of each actor in the world and demonstrate its usefulness through a case study.

ML-driven Malware that Targets AV Safety

Apr 24, 2020

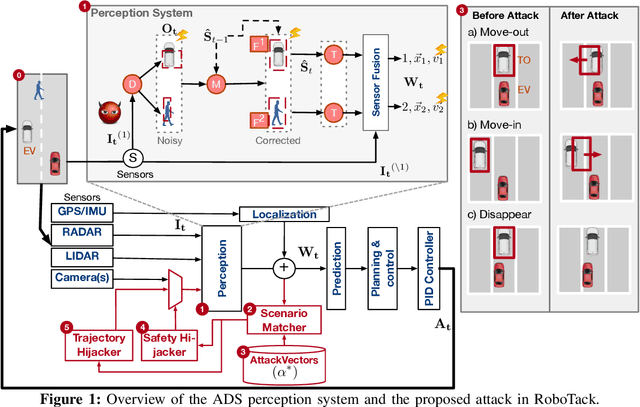

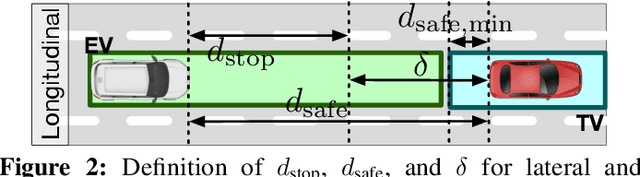

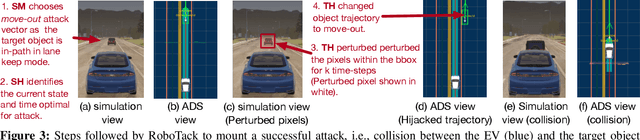

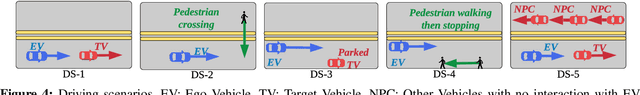

Abstract: Ensuring the safety of autonomous vehicles (AVs) is critical for their mass deployment and public adoption. However, security attacks that violate safety constraints and cause accidents are a significant deterrent to achieving public trust in AVs, and that hinders a vendor's ability to deploy AVs. Creating a security hazard that results in a severe safety compromise (for example, an accident) is compelling from an attacker's perspective. In this paper, we introduce an attack model, a method to deploy the attack in the form of smart malware, and an experimental evaluation of its impact on production-grade autonomous driving software. We find that determining the time interval during which to launch the attack is critically important for causing safety hazards (such as collisions) with a high degree of success. For example, the smart malware caused 33X more forced emergency braking than random attacks did, and accidents in 52.6% of the driving simulations.

* Accepted for DSN 2020

Adverse Events in Robotic Surgery: A Retrospective Study of 14 Years of FDA Data

Jul 21, 2015

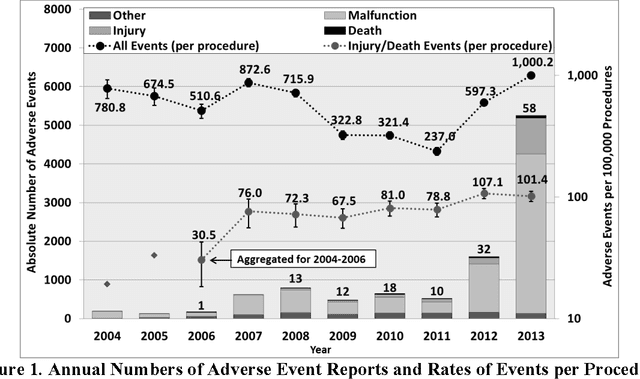

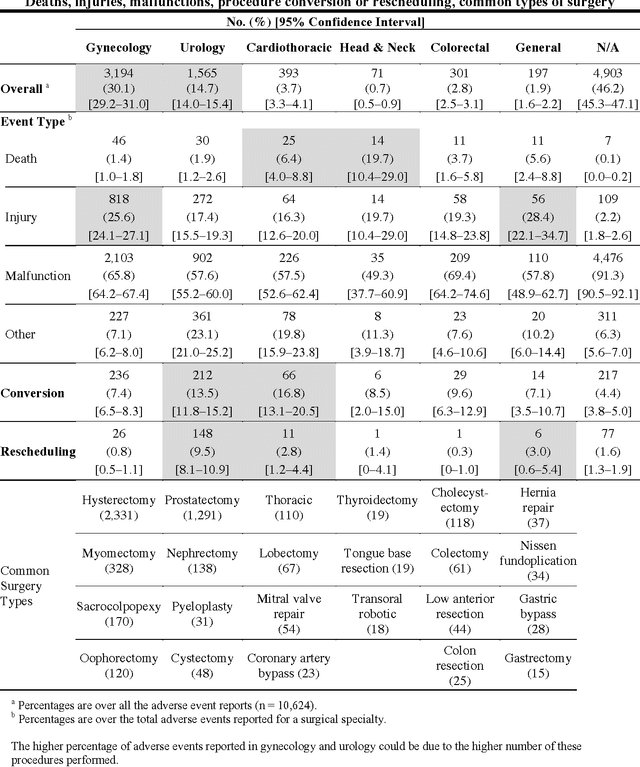

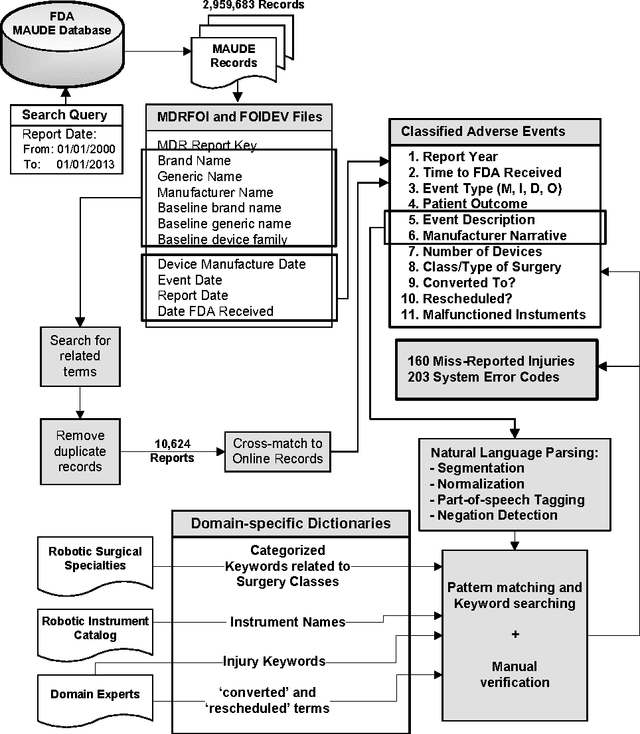

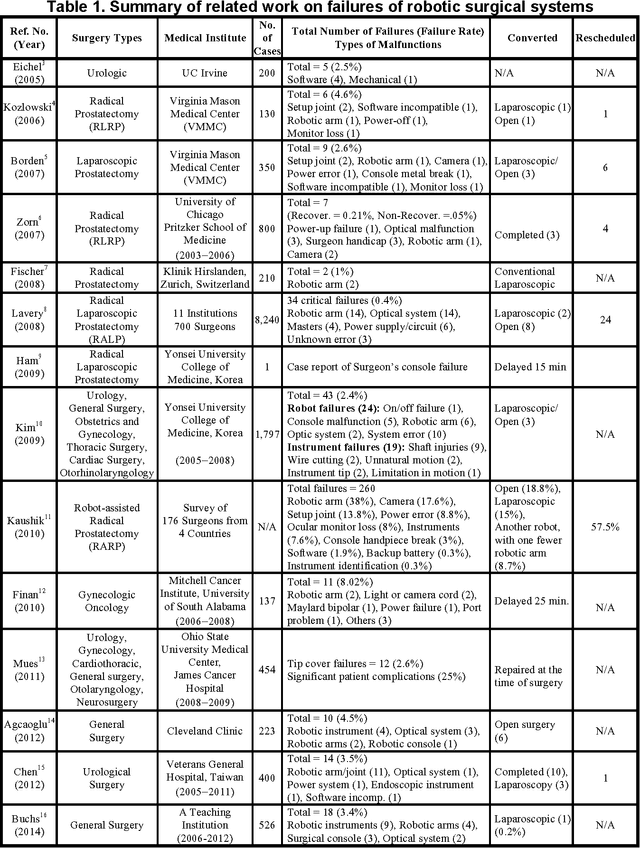

Abstract: Understanding the causes and patient impacts of surgical adverse events will help improve systems and operational practices to avoid incidents in the future. We analyzed the adverse events data related to robotic systems and instruments used in minimally invasive surgery, reported to the U.S. FDA MAUDE database from January 2000 to December 2013. We determined the number of events reported per procedure and per surgical specialty, the most common types of device malfunctions and their impact on patients, and the causes for catastrophic events such as major complications, patient injuries, and deaths. During the study period, 144 deaths (1.4% of the 10,624 reports), 1,391 patient injuries (13.1%), and 8,061 device malfunctions (75.9%) were reported. The numbers of injury and death events per procedure have stayed relatively constant since 2007 (mean = 83.4, 95% CI, 74.2-92.7). Surgical specialties for which robots are extensively used, such as gynecology and urology, had a lower number of injuries, deaths, and conversions per procedure than more complex surgeries, such as cardiothoracic and head and neck (106.3 vs. 232.9, Risk Ratio = 2.2, 95% CI, 1.9-2.6). Device and instrument malfunctions, such as falling of burnt/broken pieces of instruments into the patient (14.7%), electrical arcing of instruments (10.5%), unintended operation of instruments (8.6%), system errors (5%), and video/imaging problems (2.6%), constituted a major part of the reports. Device malfunctions impacted patients in terms of injuries or procedure interruptions. In 1,104 (10.4%) of the events, the procedure was interrupted to restart the system (3.1%), to convert the procedure to non-robotic techniques (7.3%), or to reschedule it to a later time (2.5%). Adoption of advanced techniques in the design and operation of robotic surgical systems may reduce these preventable incidents in the future.

* Presented as the J. Maxwell Chamberlain Memorial Paper for adult cardiac surgery at the 50th Annual Meeting of the Society of Thoracic Surgeons in January. See Appendix for more detailed results, discussions, and related work.

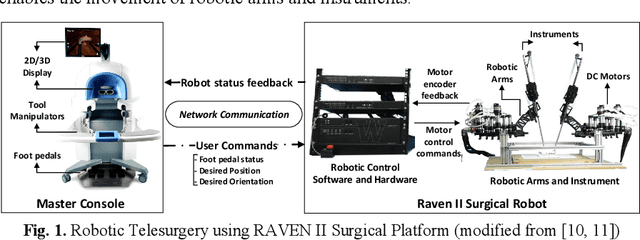

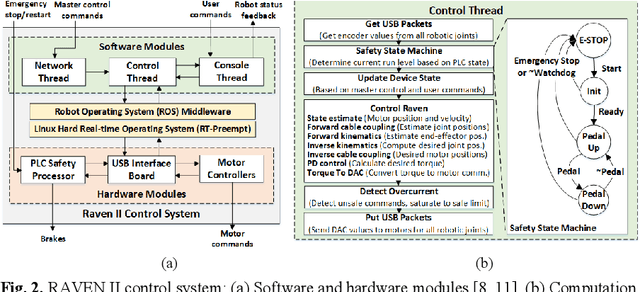

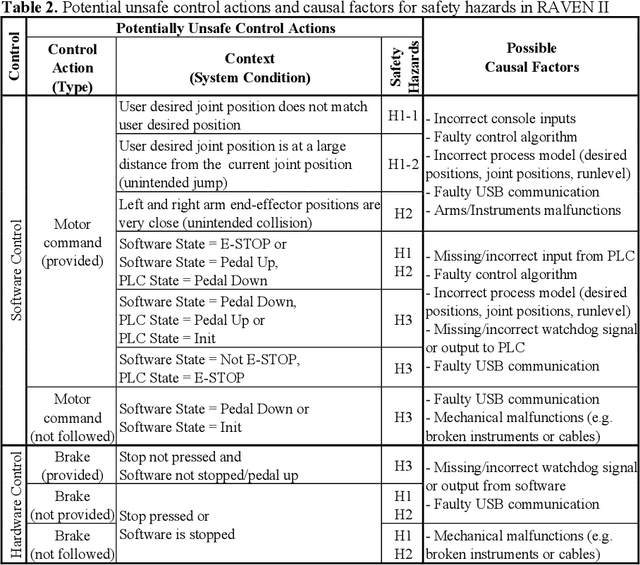

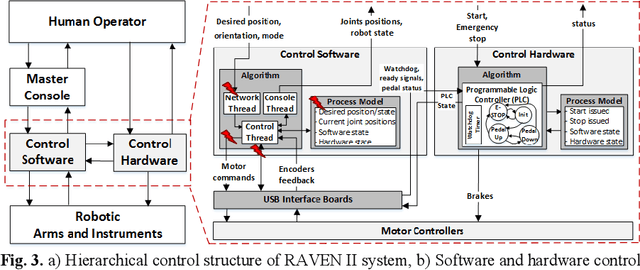

Systems-theoretic Safety Assessment of Robotic Telesurgical Systems

Jul 08, 2015

Abstract: Robotic telesurgical systems are among the most complex medical cyber-physical systems on the market, and have been used in over 1.75 million procedures during the last decade. Despite significant improvements in the design of robotic surgical systems through the years, there have been ongoing occurrences of safety incidents during procedures that negatively impact patients. This paper presents an approach for systems-theoretic safety assessment of robotic telesurgical systems using software-implemented fault-injection. We used a systems-theoretic hazard analysis technique (STPA) to identify the potential safety hazard scenarios and their contributing causes in the RAVEN II robot, an open-source robotic surgical platform. We integrated the robot control software with a software-implemented fault-injection engine which measures the resilience of the system to the identified safety hazard scenarios by automatically inserting faults into different parts of the robot control software. Representative hazard scenarios from real robotic surgery incidents reported to the U.S. Food and Drug Administration (FDA) MAUDE database were used to demonstrate the feasibility of the proposed approach for safety-based design of robotic telesurgical systems.
