Zain Asgar

KForge: Program Synthesis for Diverse AI Hardware Accelerators

Nov 17, 2025

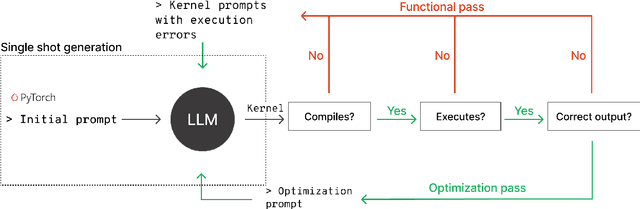

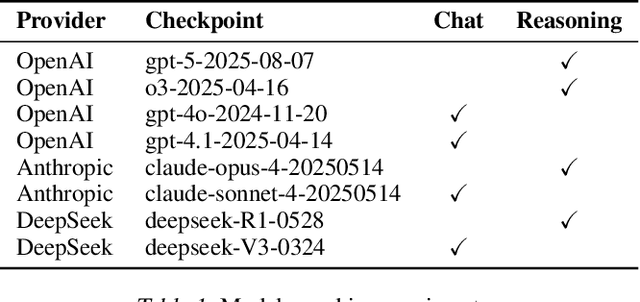

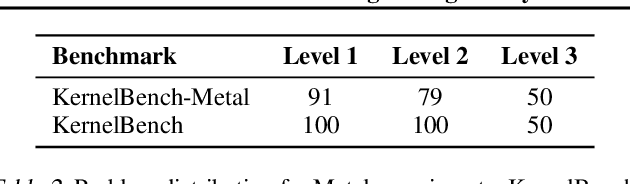

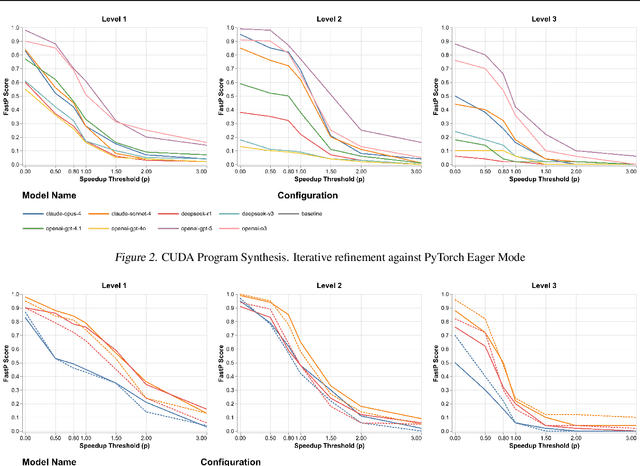

Abstract:GPU kernels are critical for ML performance but difficult to optimize across diverse accelerators. We present KForge, a platform-agnostic framework built on two collaborative LLM-based agents: a generation agent that produces and iteratively refines programs through compilation and correctness feedback, and a performance analysis agent that interprets profiling data to guide optimization. This agent-based architecture requires only a single-shot example to target new platforms. We make three key contributions: (1) introducing an iterative refinement system where the generation agent and performance analysis agent collaborate through functional and optimization passes, interpreting diverse profiling data (from programmatic APIs to GUI-based tools) to generate actionable recommendations that guide program synthesis for arbitrary accelerators; (2) demonstrating that the generation agent effectively leverages cross-platform knowledge transfer, where a reference implementation from one architecture substantially improves generation quality for different hardware targets; and (3) validating the platform-agnostic nature of our approach by demonstrating effective program synthesis across fundamentally different parallel computing platforms: NVIDIA CUDA and Apple Metal.

Machine Learning Sensors

Jun 07, 2022

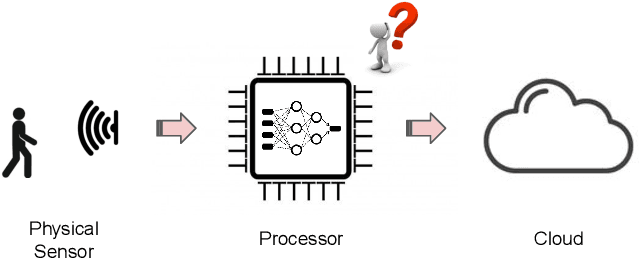

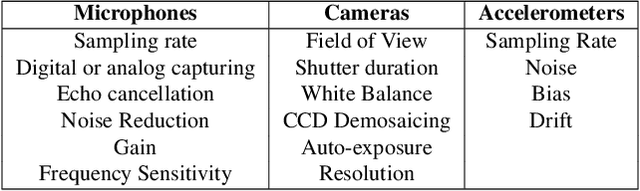

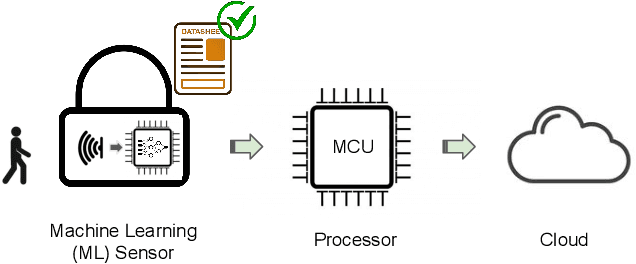

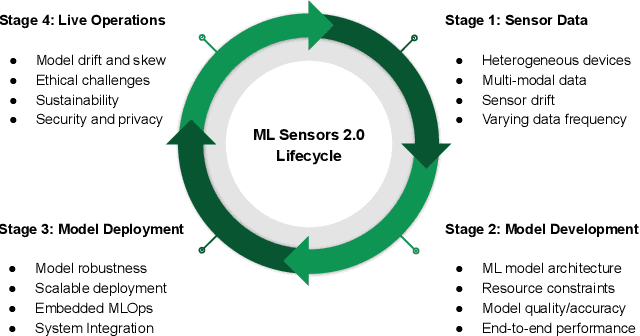

Abstract:Machine learning sensors represent a paradigm shift for the future of embedded machine learning applications. Current instantiations of embedded machine learning (ML) suffer from complex integration, lack of modularity, and privacy and security concerns from data movement. This article proposes a more data-centric paradigm for embedding sensor intelligence on edge devices to combat these challenges. Our vision for "sensor 2.0" entails segregating sensor input data and ML processing from the wider system at the hardware level and providing a thin interface that mimics traditional sensors in functionality. This separation leads to a modular and easy-to-use ML sensor device. We discuss challenges presented by the standard approach of building ML processing into the software stack of the controlling microprocessor on an embedded system and how the modularity of ML sensors alleviates these problems. ML sensors increase privacy and accuracy while making it easier for system builders to integrate ML into their products as a simple component. We provide examples of prospective ML sensors and an illustrative datasheet as a demonstration and hope that this will build a dialogue to progress us towards sensor 2.0.

ML-EXray: Visibility into ML Deployment on the Edge

Nov 08, 2021

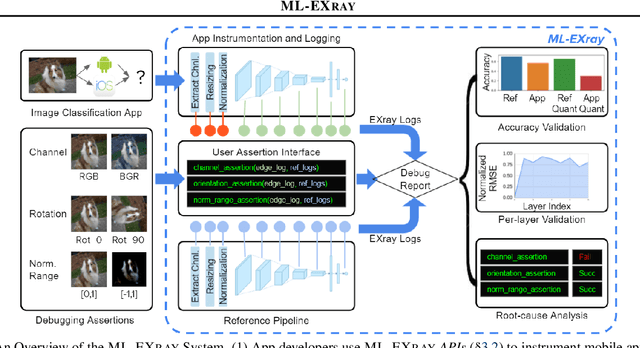

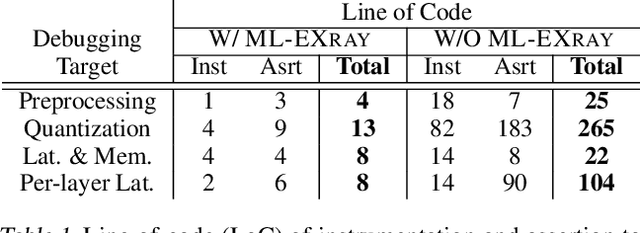

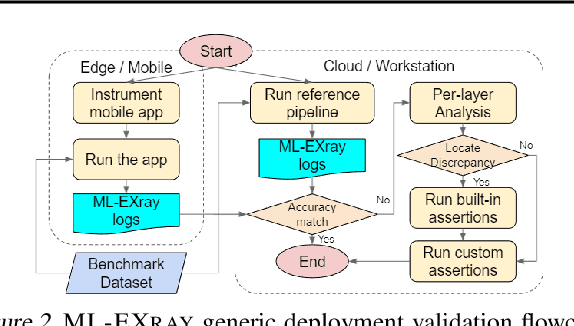

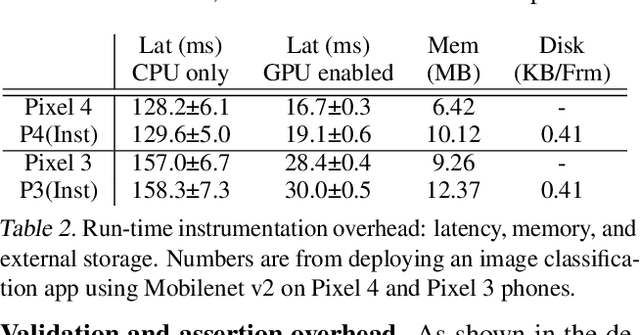

Abstract:Benefiting from expanding cloud infrastructure, deep neural networks (DNNs) today have increasingly high performance when trained in the cloud. Researchers spend months of effort competing for an extra few percentage points of model accuracy. However, when these models are actually deployed on edge devices in practice, very often, the performance can abruptly drop over 10% without obvious reasons. The key challenge is that there is not much visibility into ML inference execution on edge devices, and very little awareness of potential issues during the edge deployment process. We present ML-EXray, an end-to-end framework, which provides visibility into layer-level details of the ML execution, and helps developers analyze and debug cloud-to-edge deployment issues. More often than not, the reason for sub-optimal edge performance does not only lie in the model itself, but every operation throughout the data flow and the deployment process. Evaluations show that ML-EXray can effectively catch deployment issues, such as pre-processing bugs, quantization issues, suboptimal kernels, etc. Using ML-EXray, users need to write less than 15 lines of code to fully examine the edge deployment pipeline. Eradicating these issues, ML-EXray can correct model performance by up to 30%, pinpoint error-prone layers, and guide users to optimize kernel execution latency by two orders of magnitude. Code and APIs will be released as an open-source multi-lingual instrumentation library and a Python deployment validation library.

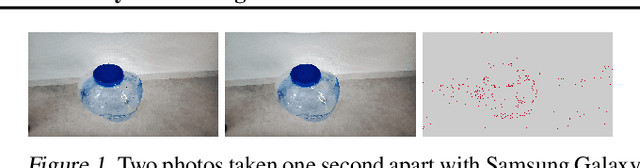

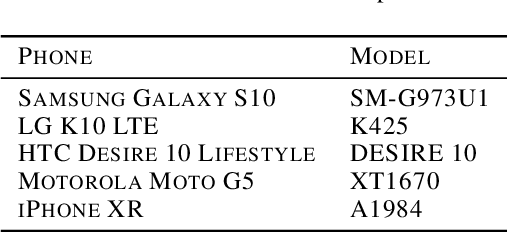

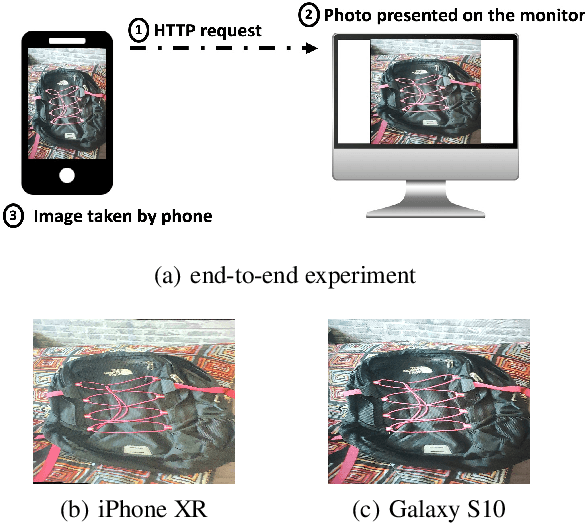

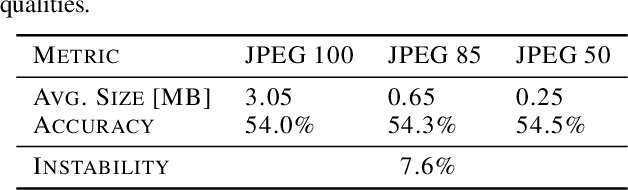

Characterizing and Taming Model Instability Across Edge Devices

Oct 18, 2020

Abstract:The same machine learning model running on different edge devices may produce highly-divergent outputs on a nearly-identical input. Possible reasons for the divergence include differences in the device sensors, the device's signal processing hardware and software, and its operating system and processors. This paper presents the first methodical characterization of the variations in model prediction across real-world mobile devices. We demonstrate that accuracy is not a useful metric to characterize prediction divergence, and introduce a new metric, instability, which captures this variation. We characterize different sources for instability, and show that differences in compression formats and image signal processing account for significant instability in object classification models. Notably, in our experiments, 14-17% of images produced divergent classifications across one or more phone models. We evaluate three different techniques for reducing instability. In particular, we adapt prior work on making models robust to noise in order to fine-tune models to be robust to variations across edge devices. We demonstrate our fine-tuning techniques reduce instability by 75%.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge