Yuntao Shou

The Paradigm Shift: A Comprehensive Survey on Large Vision Language Models for Multimodal Fake News Detection

Jan 16, 2026

Abstract:In recent years, the rapid evolution of large vision-language models (LVLMs) has driven a paradigm shift in multimodal fake news detection (MFND), transforming it from traditional feature-engineering approaches to unified, end-to-end multimodal reasoning frameworks. Early methods primarily relied on shallow fusion techniques to capture correlations between text and images, but they struggled with high-level semantic understanding and complex cross-modal interactions. The emergence of LVLMs has fundamentally changed this landscape by enabling joint modeling of vision and language with powerful representation learning, thereby enhancing the ability to detect misinformation that leverages both textual narratives and visual content. Despite these advances, the field lacks a systematic survey that traces this transition and consolidates recent developments. To address this gap, this paper provides a comprehensive review of MFND through the lens of LVLMs. We first present a historical perspective, mapping the evolution from conventional multimodal detection pipelines to foundation model-driven paradigms. Next, we establish a structured taxonomy covering model architectures, datasets, and performance benchmarks. Furthermore, we analyze the remaining technical challenges, including interpretability, temporal reasoning, and domain generalization. Finally, we outline future research directions to guide the next stage of this paradigm shift. To the best of our knowledge, this is the first comprehensive survey to systematically document and analyze the transformative role of LVLMs in combating multimodal fake news. A summary of the methods covered is available in our GitHub repository: https://github.com/Tan-YiLong/Overview-of-Fake-News-Detection.

GSDNet: Revisiting Incomplete Multimodal-Diffusion from Graph Spectrum Perspective for Conversation Emotion Recognition

Jun 14, 2025

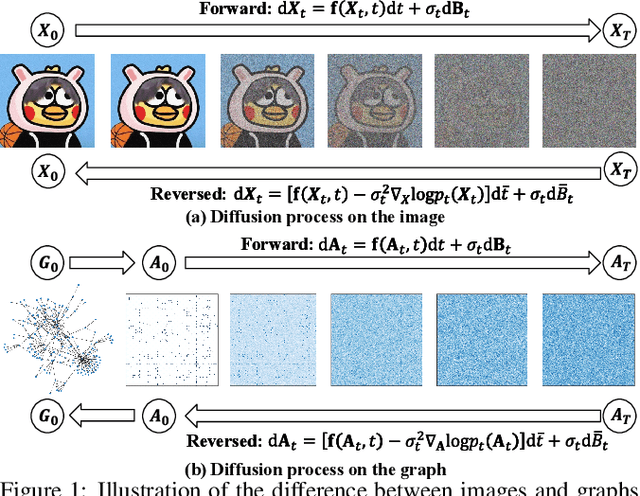

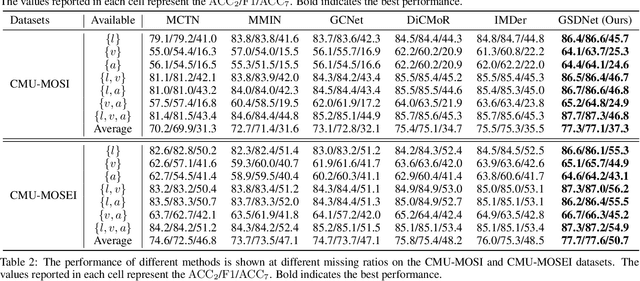

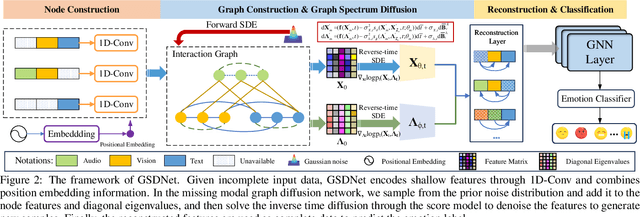

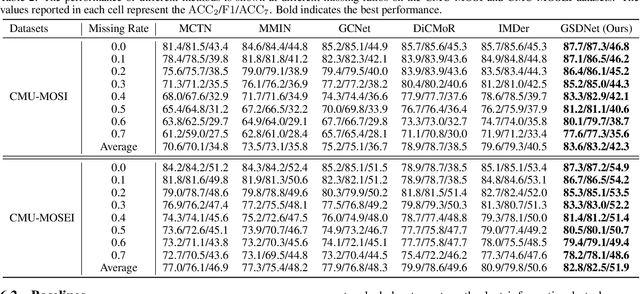

Abstract:Multimodal emotion recognition in conversations (MERC) aims to infer the speaker's emotional state by analyzing utterance information from multiple sources (i.e., video, audio, and text). Compared with unimodality, a more robust utterance representation can be obtained by fusing complementary semantic information from different modalities. However, the modality missing problem severely limits the performance of MERC in practical scenarios. Recent work has achieved impressive performance on modality completion using graph neural networks and diffusion models, respectively. This inspires us to combine these two dimensions through the graph diffusion model to obtain more powerful modal recovery capabilities. Unfortunately, existing graph diffusion models may destroy the connectivity and local structure of the graph by directly adding Gaussian noise to the adjacency matrix, resulting in the generated graph data being unable to retain the semantic and topological information of the original graph. To this end, we propose a novel Graph Spectral Diffusion Network (GSDNet), which maps Gaussian noise to the graph spectral space of missing modalities and recovers the missing data according to its original distribution. Compared with previous graph diffusion methods, GSDNet only affects the eigenvalues of the adjacency matrix instead of destroying the adjacency matrix directly, which can maintain the global topological information and important spectral features during the diffusion process. Extensive experiments have demonstrated that GSDNet achieves state-of-the-art emotion recognition performance in various modality loss scenarios.

SE-GNN: Seed Expanded-Aware Graph Neural Network with Iterative Optimization for Semi-supervised Entity Alignment

Mar 24, 2025

Abstract:Entity alignment aims to use pre-aligned seed pairs to find other equivalent entities from different knowledge graphs (KGs) and is widely used in graph fusion-related fields. However, as the scale of KGs increases, manually annotating pre-aligned seed pairs becomes difficult. Existing research utilizes entity embeddings obtained by aggregating a single type of structural information to identify potential seed pairs, thus reducing the reliance on pre-aligned seed pairs. However, due to the structural heterogeneity of KGs, the quality of potential seed pairs obtained using only a single type of structural information is not ideal. In addition, although existing research improves the quality of potential seed pairs through semi-supervised iteration, it underestimates the impact of embedding distortion produced by noisy seed pairs on the alignment effect. To solve the above problems, we propose a seed expanded-aware graph neural network with iterative optimization for semi-supervised entity alignment, named SE-GNN. First, we utilize the semantic attributes and structural features of entities, combined with a conditional filtering mechanism, to obtain high-quality initial potential seed pairs. Next, we design a local and global awareness mechanism. It introduces initial potential seed pairs and combines local and global information to obtain a more comprehensive entity embedding representation, which alleviates the impact of the structural heterogeneity of KGs and lays the foundation for the optimization of initial potential seed pairs. Then, we design a threshold nearest neighbor embedding correction strategy. It combines a similarity threshold and the bidirectional nearest neighbor method as a filtering mechanism to select iterative potential seed pairs and also uses an embedding correction strategy to eliminate the embedding distortion.
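The filtering step that combines a similarity threshold with bidirectional nearest neighbors can be illustrated with a minimal sketch. This is not the SE-GNN implementation: the use of cosine similarity, the function names `cosine` and `mutual_nn_pairs`, the toy embeddings, and the threshold value are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mutual_nn_pairs(src, tgt, threshold):
    """Keep (i, j) only if i and j are each other's nearest neighbor
    and their similarity reaches the threshold (hypothetical helper)."""
    sim = [[cosine(s, t) for t in tgt] for s in src]
    # Nearest target for every source entity, and nearest source for every target.
    best_tgt = [max(range(len(tgt)), key=lambda j: sim[i][j]) for i in range(len(src))]
    best_src = [max(range(len(src)), key=lambda i: sim[i][j]) for j in range(len(tgt))]
    return [
        (i, j)
        for i, j in enumerate(best_tgt)
        if best_src[j] == i and sim[i][j] >= threshold
    ]


# Toy embeddings for entities from two KGs (illustrative values only).
src = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
tgt = [[0.9, 0.1], [0.1, 0.9], [-1.0, 0.2]]
pairs = mutual_nn_pairs(src, tgt, threshold=0.9)
print(pairs)
```

In this toy case the third source entity is dropped because its nearest target prefers a different source, which is the kind of noisy candidate the bidirectional check is meant to filter out.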

LRA-GNN: Latent Relation-Aware Graph Neural Network with Initial and Dynamic Residual for Facial Age Estimation

Feb 08, 2025

Abstract:Face information is mainly concentrated among facial key points, and frontier research has begun to use graph neural networks to segment faces into patches as nodes to model complex face representations. However, these methods construct node-to-node relations based on similarity thresholds, so some latent relations are missed. These latent relations are crucial for deep semantic representation of face aging. In this paper, we propose a new Latent Relation-Aware Graph Neural Network with Initial and Dynamic Residual (LRA-GNN) to achieve robust and comprehensive facial representation. Specifically, we first construct an initial graph utilizing facial key points as prior knowledge, and then a random walk strategy is applied to the initial graph to obtain the global structure, both of which together guide the subsequent effective exploration and comprehensive representation. Then LRA-GNN leverages the multi-attention mechanism to capture the latent relations and generates a set of fully connected graphs containing rich facial information and complete structure based on the aforementioned guidance. To avoid over-smoothing issues for deep feature extraction on the fully connected graphs, the deep residual graph convolutional networks are carefully designed, which fuse adaptive initial residuals and dynamic developmental residuals to ensure the consistency and diversity of information. Finally, to improve the estimation accuracy and generalization ability, progressive reinforcement learning is proposed to optimize the ensemble classification regressor. Our proposed framework surpasses the state-of-the-art baselines on several age estimation benchmarks, demonstrating its strength and effectiveness.

SE-GCL: An Event-Based Simple and Effective Graph Contrastive Learning for Text Representation

Dec 16, 2024

Abstract:Text representation learning is significant as the cornerstone of natural language processing. In recent years, graph contrastive learning (GCL) has been widely used in text representation learning due to its ability to represent and capture complex text information in a self-supervised setting. However, current mainstream graph contrastive learning methods often require the incorporation of domain knowledge or cumbersome computations to guide the data augmentation process, which significantly limits the application efficiency and scope of GCL. Additionally, many methods learn text representations only by constructing word-document relationships, which overlooks the rich contextual semantic information in the text. To address these issues and exploit representative textual semantics, we present an event-based, simple, and effective graph contrastive learning (SE-GCL) for text representation. Precisely, we extract event blocks from text and construct internal relation graphs to represent inter-semantic interconnections, which can ensure that the most critical semantic information is preserved. Then, we devise a streamlined, unsupervised graph contrastive learning framework to leverage the complementary nature of the event semantic and structural information for intricate feature data capture. In particular, we introduce the concept of an event skeleton for core representation semantics and simplify the typically complex data augmentation techniques found in existing graph contrastive learning to boost algorithmic efficiency. We employ multiple loss functions to prompt diverse embeddings to converge or diverge within a confined distance in the vector space, ultimately achieving a harmonious equilibrium. We conducted experiments on the proposed SE-GCL on four standard data sets (AG News, 20NG, SougouNews, and THUCNews) to verify its effectiveness in text representation learning.

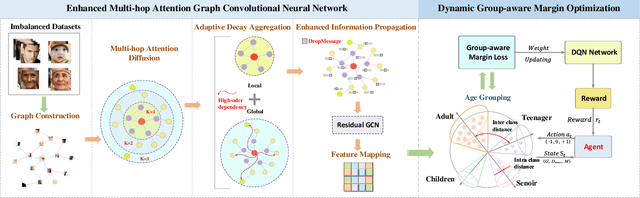

GroupFace: Imbalanced Age Estimation Based on Multi-hop Attention Graph Convolutional Network and Group-aware Margin Optimization

Dec 16, 2024

Abstract:With the recent advances in computer vision, age estimation has significantly improved in overall accuracy. However, because most common methods do not take into account the class imbalance problem in age estimation datasets, they suffer from a large bias in recognizing long-tailed groups. To achieve high-quality imbalanced learning in long-tailed groups, the dominant solution is for the feature extractor to learn the discriminative features of different groups and for the classifier to provide appropriate and unbiased margins for different groups based on those features. Therefore, in this paper, we propose an innovative collaborative learning framework (GroupFace) that integrates a multi-hop attention graph convolutional network and a dynamic group-aware margin strategy based on reinforcement learning. Specifically, to extract the discriminative features of different groups, we design an enhanced multi-hop attention graph convolutional network. This network is capable of capturing the interactions of neighboring nodes at different distances, fusing local and global information to model facial deep aging, and exploring diverse representations of different groups. In addition, to further address the class imbalance problem, we design a dynamic group-aware margin strategy based on reinforcement learning to provide appropriate and unbiased margins for different groups. The strategy divides the samples into four age groups and identifies the optimum margins for the various age groups by employing a Markov decision process. Under the guidance of the agent, the feature representation bias and the classification margin deviation between different groups can be reduced simultaneously, balancing inter-class separability and intra-class proximity. After joint optimization, our architecture achieves excellent performance on several age estimation benchmark datasets.

Dynamic Graph Neural Ordinary Differential Equation Network for Multi-modal Emotion Recognition in Conversation

Dec 04, 2024

Abstract:Multimodal emotion recognition in conversation (MERC) refers to identifying and classifying human emotional states by combining data from multiple different modalities (e.g., audio, images, text, video, etc.). Most existing multimodal emotion recognition methods use GCN to improve performance, but existing GCN methods are prone to overfitting and cannot capture the temporal dependency of the speaker's emotions. To address the above problems, we propose a Dynamic Graph Neural Ordinary Differential Equation Network (DGODE) for MERC, which combines the dynamic changes of emotions to capture the temporal dependency of speakers' emotions, and effectively alleviates the overfitting problem of GCNs. Technically, the key idea of DGODE is to utilize an adaptive mixhop mechanism to improve the generalization ability of GCNs and use the graph ODE evolution network to characterize the continuous dynamics of node representations over time and capture temporal dependencies. Extensive experiments on two publicly available multimodal emotion recognition datasets demonstrate that the proposed DGODE model has superior performance compared to various baselines. Furthermore, the proposed DGODE can also alleviate the over-smoothing problem, thereby enabling the construction of a deep GCN network.

SDR-GNN: Spectral Domain Reconstruction Graph Neural Network for Incomplete Multimodal Learning in Conversational Emotion Recognition

Nov 29, 2024

Abstract:Multimodal Emotion Recognition in Conversations (MERC) aims to classify utterance emotions using textual, auditory, and visual modal features. Most existing MERC methods assume each utterance has complete modalities, overlooking the common issue of incomplete modalities in real-world scenarios. Recently, graph neural networks (GNNs) have achieved notable results in Incomplete Multimodal Emotion Recognition in Conversations (IMERC). However, traditional GNNs focus on binary relationships between nodes, limiting their ability to capture more complex, higher-order information. Moreover, repeated message passing can cause over-smoothing, reducing their capacity to preserve essential high-frequency details. To address these issues, we propose a Spectral Domain Reconstruction Graph Neural Network (SDR-GNN) for incomplete multimodal learning in conversational emotion recognition. SDR-GNN constructs an utterance semantic interaction graph using a sliding window based on both speaker and context relationships to model emotional dependencies. To capture higher-order and high-frequency information, SDR-GNN utilizes weighted relationship aggregation, ensuring consistent semantic feature extraction across utterances. Additionally, it performs multi-frequency aggregation in the spectral domain, enabling efficient recovery of incomplete modalities by extracting both high- and low-frequency information. Finally, multi-head attention is applied to fuse and optimize features for emotion recognition. Extensive experiments on various real-world datasets demonstrate that our approach is effective in incomplete multimodal learning and outperforms current state-of-the-art methods.
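As a rough illustration of building an utterance graph with a sliding window over speaker and context relationships, the sketch below connects each utterance to its neighbors within a fixed window and labels each edge by whether the two utterances share a speaker. The function name `build_utterance_graph`, the relation labels, and the window size are assumptions for illustration and are not taken from SDR-GNN itself.

```python
def build_utterance_graph(speakers, window):
    """Return directed edges (i, j, relation) between utterances that lie
    within `window` positions of each other (hypothetical helper)."""
    edges = []
    n = len(speakers)
    for i in range(n):
        # Context relationship: only utterances inside the sliding window.
        for j in range(max(0, i - window), min(n, i + window + 1)):
            if i == j:
                continue
            # Speaker relationship: same speaker vs. a different speaker.
            relation = "same_speaker" if speakers[i] == speakers[j] else "cross_speaker"
            edges.append((i, j, relation))
    return edges


# A four-utterance dialogue alternating between two speakers.
speakers = ["A", "B", "A", "B"]
edges = build_utterance_graph(speakers, window=1)
for edge in edges:
    print(edge)
```

With a window of 1 every edge links adjacent utterances from different speakers; widening the window to 2 adds same-speaker edges such as the one between utterances 0 and 2.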

Contrastive Multi-graph Learning with Neighbor Hierarchical Sifting for Semi-supervised Text Classification

Nov 25, 2024

Abstract:Graph contrastive learning has been successfully applied in text classification due to its remarkable ability for self-supervised node representation learning. However, explicit graph augmentations may lead to a loss of semantics in the contrastive views. In addition, existing methods tend to overlook edge features and the varying significance of node features during multi-graph learning. Moreover, the contrastive loss suffers from false negatives. To address these limitations, we propose a novel method of contrastive multi-graph learning with neighbor hierarchical sifting for semi-supervised text classification, namely ConNHS. Specifically, we exploit core features to form a multi-relational text graph, enhancing semantic connections among texts. By separating text graphs, we provide diverse views for contrastive learning. Our approach ensures optimal preservation of the graph information, minimizing data loss and distortion. Then, we separately execute relation-aware propagation and cross-graph attention propagation, which effectively leverages the varying correlations between nodes and edge features while harmonising the information fusion across graphs. Subsequently, we present the neighbor hierarchical sifting loss (NHS) to refine the negative selection. For one thing, following the homophily assumption, NHS masks first-order neighbors of the anchor and positives from being negatives. For another, NHS excludes the high-order neighbors analogous to the anchor based on their similarities. Consequently, it effectively reduces the occurrence of false negatives, preventing the expansion of the distance between similar samples in the embedding space. Our experiments on the ThuCNews, SogouNews, 20 Newsgroups, and Ohsumed datasets achieved 95.86%, 97.52%, 87.43%, and 70.65%, respectively, demonstrating competitive results in semi-supervised text classification.
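The negative-selection idea behind neighbor hierarchical sifting can be sketched as a mask over candidate negatives. This is a simplified reading, not the ConNHS loss: it assumes that the anchor's first-order neighbors and its positives are masked first, and that any remaining node whose similarity to the anchor reaches a threshold is then excluded. The function name `nhs_negative_mask`, the toy graph, the similarity values, and the threshold are hypothetical.

```python
def nhs_negative_mask(anchor, adjacency, positives, sim_to_anchor, threshold):
    """Return a list of booleans: True where a node may serve as a negative
    for `anchor` (hypothetical helper, simplified from the description)."""
    n = len(adjacency)
    # Step 1: never use the anchor itself or its positives as negatives.
    excluded = {anchor} | set(positives)
    # Step 2 (homophily assumption): mask the anchor's first-order neighbors.
    excluded |= {j for j in range(n) if adjacency[anchor][j]}
    # Step 3: drop remaining (higher-order) nodes that are too similar to the anchor.
    excluded |= {
        j for j in range(n)
        if j not in excluded and sim_to_anchor[j] >= threshold
    }
    return [j not in excluded for j in range(n)]


# Toy graph with edges 0-1, 1-2, and 3-4 (symmetric adjacency matrix).
adjacency = [
    [0, 1, 0, 0, 0],
    [1, 0, 1, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 1, 0],
]
sim_to_anchor = [1.0, 0.8, 0.95, 0.3, 0.2]  # illustrative similarities to node 0
mask = nhs_negative_mask(
    anchor=0, adjacency=adjacency, positives=[3],
    sim_to_anchor=sim_to_anchor, threshold=0.9,
)
print(mask)
```

Here node 1 is masked as a direct neighbor, node 3 as a positive, and node 2 as a highly similar second-order neighbor, leaving only node 4 as a negative.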

Graph Domain Adaptation with Dual-branch Encoder and Two-level Alignment for Whole Slide Image-based Survival Prediction

Nov 21, 2024

Abstract:In recent years, histopathological whole slide image (WSI)-based survival analysis has attracted much attention in medical image analysis. In practice, WSIs usually come from different hospitals or laboratories, which can be seen as different domains, and thus may have significant differences in imaging equipment, processing procedures, and sample sources. These differences generally result in large gaps in distribution between different WSI domains, and thus the survival analysis models trained on one domain may fail to transfer to another. To address this issue, we propose a Dual-branch Encoder and Two-level Alignment (DETA) framework to explore both feature and category-level alignment between different WSI domains. Specifically, we first formulate the concerned problem as graph domain adaptation (GDA) by virtue of the graph representation of WSIs. Then we construct a dual-branch graph encoder, including the message passing branch and the shortest path branch, to explicitly and implicitly extract semantic information from the graph-represented WSIs. To realize GDA, we propose a two-level alignment approach: at the category level, we develop a coupling technique by virtue of the dual-branch structure, leading to reduced divergence between the category distributions of the two domains; at the feature level, we introduce an adversarial perturbation strategy to better augment source domain features, resulting in improved alignment in feature distribution. To the best of our knowledge, our work is the first attempt to alleviate the domain shift issue for WSI data analysis. Extensive experiments on four TCGA datasets have validated the effectiveness of our proposed DETA framework and demonstrated its superior performance in WSI-based survival analysis.
