Yinghui Gao

Lighten CARAFE: Dynamic Lightweight Upsampling with Guided Reassemble Kernels

Oct 29, 2024

Abstract: As a fundamental operation in modern machine vision models, feature upsampling has been widely used and investigated in the literature. An ideal upsampling operation should be lightweight, with low computational complexity; that is, it should improve overall performance without adding to model complexity. Content-aware Reassembly of Features (CARAFE) is a well-designed learnable operation for feature upsampling. Despite its encouraging performance, this method requires generating large-scale kernels, which introduces many redundant parameters and inherently limits scalability. To this end, we propose a lightweight upsampling operation, termed Dynamic Lightweight Upsampling (DLU). In particular, it first constructs a small-scale source kernel space and then samples the large-scale kernels from this space using learnable guidance offsets, which avoids introducing a large collection of trainable parameters in upsampling. Experiments on several mainstream vision tasks show that DLU achieves comparable or even better performance than the original CARAFE with much lower complexity: for example, in the case of 16x upsampling, DLU requires 91% fewer parameters and at least 63% fewer FLOPs (floating-point operations) than CARAFE, yet outperforms CARAFE by 0.3% mAP in object detection. Code is available at https://github.com/Fu0511/Dynamic-Lightweight-Upsampling.
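The idea of sampling several large kernels from one small source kernel space can be illustrated with a toy sketch. This is not the authors' implementation: the function names, the bilinear resampling with border clamping, the softmax normalization, and the fixed offset values are all assumptions made for illustration (in DLU the offsets are learned).

```python
import math

def bilinear_sample(grid, y, x):
    """Sample a 2D list at fractional (y, x), clamping coordinates to the border."""
    h, w = len(grid), len(grid[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = grid[y0][x0] * (1 - wx) + grid[y0][x1] * wx
    bottom = grid[y1][x0] * (1 - wx) + grid[y1][x1] * wx
    return top * (1 - wy) + bottom * wy

def softmax(values):
    """Normalize a flat list of scores so the weights sum to 1."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def sample_kernels(source, offsets):
    """Resample one square source grid into one normalized kernel per (dy, dx) offset."""
    k = len(source)
    kernels = []
    for dy, dx in offsets:
        flat = [bilinear_sample(source, i + dy, j + dx)
                for i in range(k) for j in range(k)]
        kernels.append(softmax(flat))
    return kernels

# Hypothetical 3x3 source kernel space and four guidance offsets (2x upsampling).
source = [[0.0, 1.0, 0.0],
          [1.0, 2.0, 1.0],
          [0.0, 1.0, 0.0]]
offsets = [(-0.25, -0.25), (-0.25, 0.25), (0.25, -0.25), (0.25, 0.25)]
kernels = sample_kernels(source, offsets)
```

In this sketch the source grid is shared by every output sub-position and only the offsets differ per kernel, which is the property the abstract credits for the reduced parameter count.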

SEG: Seeds-Enhanced Iterative Refinement Graph Neural Network for Entity Alignment

Oct 28, 2024

Abstract: Entity alignment is crucial for merging knowledge across knowledge graphs, as it matches entities with identical semantics. The standard method matches these entities based on their embedding similarities using semi-supervised learning. However, diverse data sources lead to non-isomorphic neighborhood structures for aligned entities, complicating alignment, especially for less common and sparsely connected entities. This paper presents a soft label propagation framework that integrates multi-source data and iterative seed enhancement, addressing scalability challenges in handling extensive datasets where scale computing excels. The framework uses seeds for anchoring and selects optimal relationship pairs to create soft labels rich in neighborhood features and semantic relationship data. A bidirectional weighted joint loss function is implemented, which reduces the distance between positive samples and differentially processes negative samples, taking into account the non-isomorphic neighborhood structures. Our method outperforms existing semi-supervised approaches, as evidenced by superior results on multiple datasets, significantly improving the quality of entity alignment.
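The baseline that the abstract calls the standard method, matching entities by embedding similarity, can be sketched as follows. This shows only that baseline, not the proposed soft label propagation framework; the entity names, the 2-dimensional embeddings, and the choice of cosine similarity with nearest-neighbor matching are assumptions for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def align(source_emb, target_emb):
    """Map each source entity to the target entity with the highest cosine similarity."""
    return {
        s: max(target_emb, key=lambda t: cosine(vec, target_emb[t]))
        for s, vec in source_emb.items()
    }

# Hypothetical embeddings for two small knowledge graphs.
kg1 = {"Paris": [0.9, 0.1], "Berlin": [0.1, 0.9]}
kg2 = {"Paris_FR": [0.8, 0.2], "Berlin_DE": [0.2, 0.8]}
alignment = align(kg1, kg2)
```

Nearest-neighbor matching of this kind relies only on the embeddings, which is why entities with sparse or structurally different neighborhoods are hard to align.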

A Learning-based Optimization Algorithm: Image Registration Optimizer Network

Nov 23, 2020

Abstract: Remote sensing image registration is valuable for image-based navigation systems despite posing many challenges. As the search space of registration is usually non-convex, the optimization algorithm, which aims to find the best transformation parameters, is a challenging step. Conventional optimization algorithms can hardly reconcile rapid convergence with global optimization. In this paper, a novel learning-based optimization algorithm named Image Registration Optimizer Network (IRON) is proposed, which can predict the global optimum after a single iteration. IRON is trained on 3D tensors (9x9x9) of similarity metric values. The elements of each 3D tensor correspond to the 9x9x9 neighbors of the initial parameters in the search space, and the tensor's label is a vector that points from the initial parameters to the globally optimal parameters. Because of this special architecture, IRON can predict the global optimum directly for any initialization. The experimental results demonstrate that the proposed algorithm performs better than other classical optimization algorithms, with higher accuracy, lower root mean square error (RMSE), and higher efficiency. The IRON code is available for further study at https://www.github.com/jaxwangkd04/IRON
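The training sample described above, a 9x9x9 tensor of metric values around the initial parameters paired with a displacement label, can be sketched as follows. The grid step, the three-parameter transformation, and the toy similarity function are assumptions for illustration; a real pipeline would evaluate an image similarity metric at each grid point.

```python
def neighborhood_tensor(similarity, init, step=1.0, size=9):
    """Evaluate `similarity` on a size^3 grid centred on the initial parameters."""
    half = size // 2
    deltas = [(i - half) * step for i in range(size)]
    return [[[similarity((init[0] + a, init[1] + b, init[2] + c))
              for c in deltas] for b in deltas] for a in deltas]

def label_vector(init, optimum):
    """Training label: displacement from the initial parameters to the global optimum."""
    return tuple(o - i for i, o in zip(init, optimum))

# Hypothetical ground-truth optimum and a toy similarity that peaks there.
optimum = (2.0, -1.0, 3.0)

def toy_similarity(params):
    return -sum((p - o) ** 2 for p, o in zip(params, optimum))

init = (0.0, 0.0, 0.0)
tensor = neighborhood_tensor(toy_similarity, init)
label = label_vector(init, optimum)
```

Here the network input would be `tensor` and the regression target would be `label`, so a single forward pass yields a parameter update toward the optimum.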

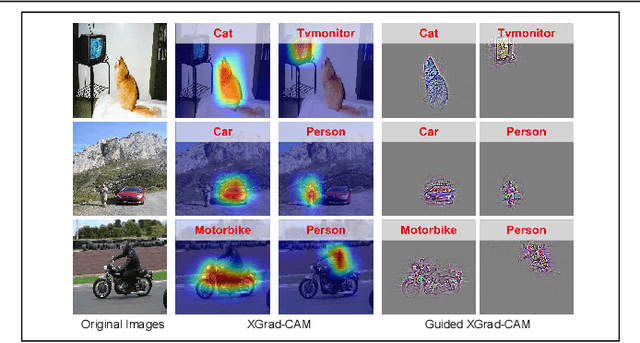

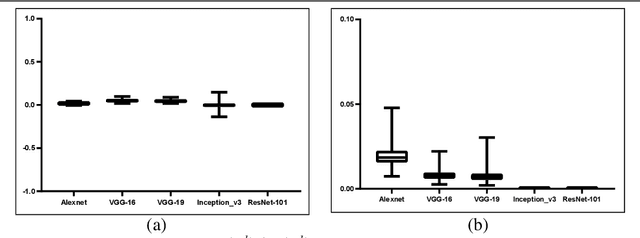

Axiom-based Grad-CAM: Towards Accurate Visualization and Explanation of CNNs

Aug 19, 2020

Abstract: To better understand and use Convolutional Neural Networks (CNNs), the visualization and interpretation of CNNs have attracted increasing attention in recent years. In particular, several Class Activation Mapping (CAM) methods have been proposed to discover the connection between a CNN's decision and image regions. Although their visualizations are reasonable, the main limitation of these methods is the lack of clear and sufficient theoretical support. In this paper, we introduce two axioms, Conservation and Sensitivity, to the visualization paradigm of the CAM methods. A dedicated Axiom-based Grad-CAM (XGrad-CAM) is then proposed to satisfy these axioms as much as possible. Experiments demonstrate that XGrad-CAM is an enhanced version of Grad-CAM in terms of conservation and sensitivity. It achieves better visualization performance than Grad-CAM while also being class-discriminative and easier to implement than Grad-CAM++ and Ablation-CAM. The code is available at https://github.com/Fu0511/XGrad-CAM.
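The difference between the two weighting schemes can be sketched on a toy example. The abstract does not state the formulas, so both are assumptions here: Grad-CAM is taken to weight each channel by the spatial mean of its gradient, and XGrad-CAM by a gradient sum in which each location is weighted by its share of the channel's total activation. The feature maps and the linear score are hypothetical.

```python
def gradcam_weights(grads):
    """Grad-CAM (assumed form): spatial mean of each channel's gradient."""
    return [sum(map(sum, g)) / (len(g) * len(g[0])) for g in grads]

def xgradcam_weights(feats, grads, eps=1e-12):
    """XGrad-CAM (assumed form): gradients weighted by normalized activations."""
    weights = []
    for f, g in zip(feats, grads):
        total = sum(map(sum, f))
        weighted = sum(fv * gv for fr, gr in zip(f, g) for fv, gv in zip(fr, gr))
        weights.append(weighted / (total + eps))
    return weights

def cam(feats, weights, relu=True):
    """Weighted sum of feature maps, optionally clipped at zero."""
    h, w = len(feats[0]), len(feats[0][0])
    out = [[sum(wk * f[y][x] for wk, f in zip(weights, feats))
            for x in range(w)] for y in range(h)]
    return [[max(v, 0.0) for v in row] for row in out] if relu else out

# Hypothetical non-negative feature maps (2 channels, 2x2) and a linear score
# S = sum(W * F), whose gradient with respect to F is simply W.
feats = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.5], [1.0, 2.0]]]
grads = [[[0.1, -0.2], [0.3, 0.4]], [[1.0, 0.0], [-1.0, 0.5]]]
score = sum(fv * gv for f, g in zip(feats, grads)
            for fr, gr in zip(f, g) for fv, gv in zip(fr, gr))

xgrad_map = cam(feats, xgradcam_weights(feats, grads), relu=False)
grad_map = cam(feats, gradcam_weights(grads), relu=False)
xgrad_total = sum(map(sum, xgrad_map))
grad_total = sum(map(sum, grad_map))
```

In this linear toy case the unclipped XGrad-CAM map sums to the score, which is one way to read the Conservation axiom, while the Grad-CAM map does not.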
