Yukang Yan

Auptimize: Optimal Placement of Spatial Audio Cues for Extended Reality

Aug 18, 2024

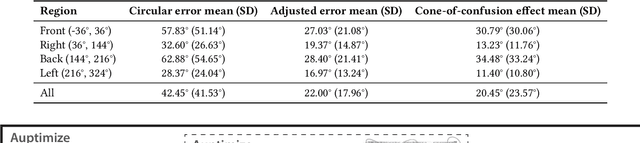

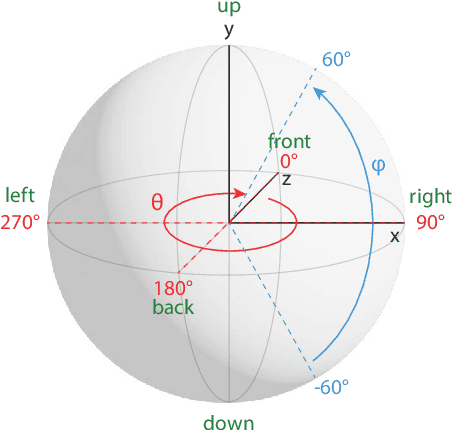

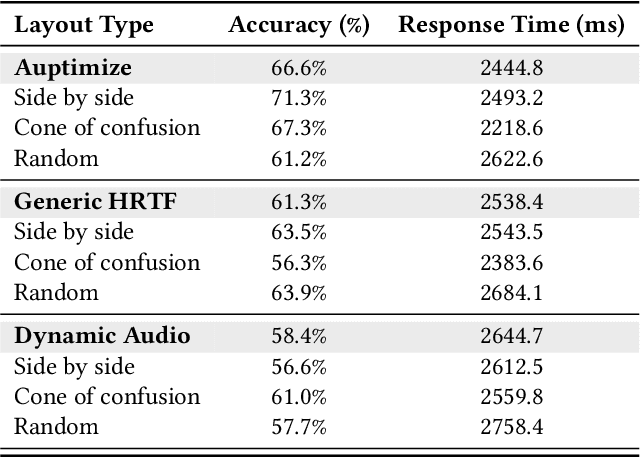

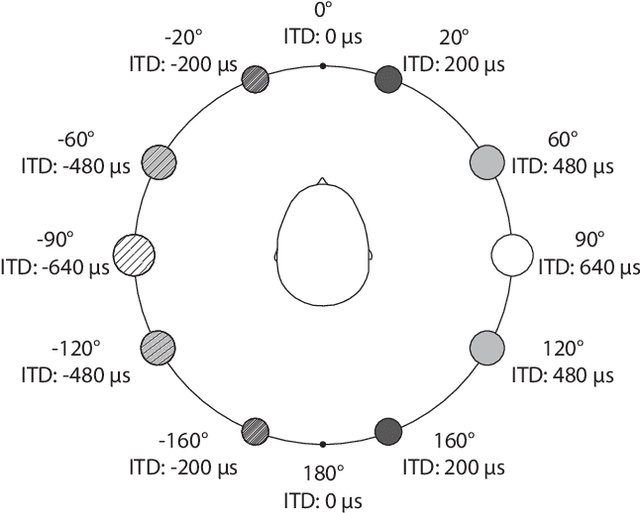

Abstract: Spatial audio in Extended Reality (XR) provides users with better awareness of where virtual elements are placed, and efficiently guides them to events such as notifications, system alerts from different windows, or approaching avatars. Humans, however, are inaccurate in localizing sound cues, especially with multiple sources, due to limitations in human auditory perception such as angular discrimination error and front-back confusion. This decreases the efficiency of XR interfaces because users misidentify from which XR element a sound is coming. To address this, we propose Auptimize, a novel computational approach for placing XR sound sources, which mitigates such localization errors by utilizing the ventriloquist effect. Auptimize disentangles the sound source locations from the visual elements and relocates the sound sources to optimal positions for unambiguous identification of sound cues, avoiding errors due to inter-source proximity and front-back confusion. Our evaluation shows that Auptimize decreases spatial audio-based source identification errors compared to playing sound cues at the paired visual-sound locations. We demonstrate the applicability of Auptimize for diverse spatial audio-based interactive XR scenarios.

Computational Trichromacy Reconstruction: Empowering the Color-Vision Deficient to Recognize Colors Using Augmented Reality

Aug 04, 2024

Abstract: We propose an assistive technology that helps individuals with Color Vision Deficiencies (CVD) recognize and name colors. A dichromat's color perception is a reduced two-dimensional (2D) subset of a normal trichromat's three-dimensional (3D) color perception, leading to confusion when visual stimuli that appear identical to the dichromat are referred to by different color names. Using our proposed system, CVD individuals can interactively induce distinct perceptual changes to originally confusing colors via a computational color space transformation. By combining their original 2D percepts for colors with the discriminative changes, a three-dimensional color space is reconstructed, where the dichromat can learn to resolve color name confusions and accurately recognize colors. Our system is implemented as an Augmented Reality (AR) interface on smartphones, where users interactively control the rotation through swipe gestures and observe the induced color shifts in the camera view or in a displayed image. Through psychophysical experiments and a longitudinal user study, we demonstrate that such rotational color shifts have discriminative power (initially confusing colors become distinct under rotation) and exhibit structured perceptual shifts that dichromats can learn with modest training. The AR app is also evaluated in two real-world scenarios (building with LEGO blocks and interpreting artistic works); all users report a positive experience in using the app to recognize object colors that they otherwise could not.

Time2Stop: Adaptive and Explainable Human-AI Loop for Smartphone Overuse Intervention

Mar 03, 2024

Abstract: Despite a rich history of investigating smartphone overuse intervention techniques, AI-based just-in-time adaptive intervention (JITAI) methods for overuse reduction are lacking. We develop Time2Stop, an intelligent, adaptive, and explainable JITAI system that leverages machine learning to identify optimal intervention timings, introduces interventions with transparent AI explanations, and collects user feedback to establish a human-AI loop and adapt the intervention model over time. We conducted an 8-week field experiment (N=71) to evaluate the effectiveness of both the adaptation and explanation aspects of Time2Stop. Our results indicate that our adaptive models significantly outperform the baseline methods on intervention accuracy (>32.8% relative improvement) and receptivity (>8.0%). In addition, incorporating explanations further enhances the effectiveness by 53.8% and 11.4% on accuracy and receptivity, respectively. Moreover, Time2Stop significantly reduces overuse, decreasing app visit frequency by 7.0% to 8.9%. Our subjective data echoed these quantitative measures: participants preferred the adaptive interventions and rated the system highly on intervention time accuracy, effectiveness, and level of trust. We envision that our work can inspire future research on JITAI systems with a human-AI loop that evolve with users.

Modeling the Trade-off of Privacy Preservation and Activity Recognition on Low-Resolution Images

Mar 18, 2023

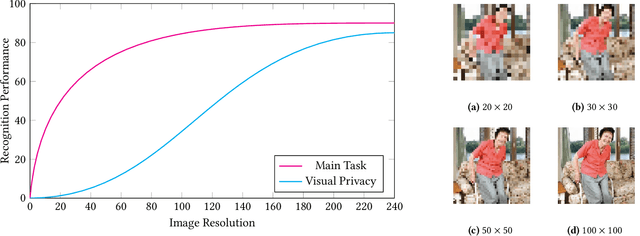

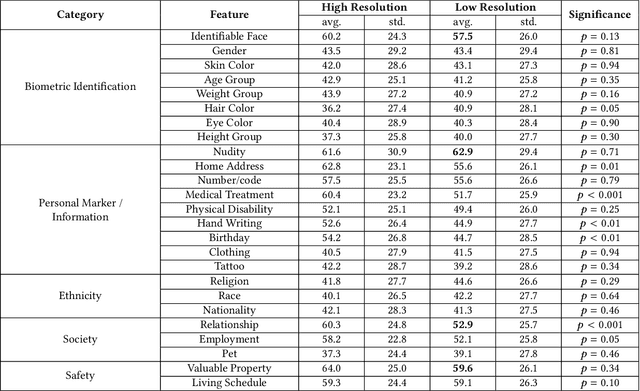

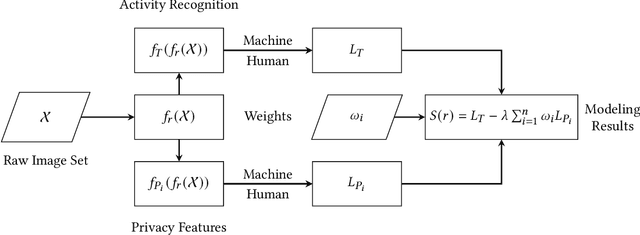

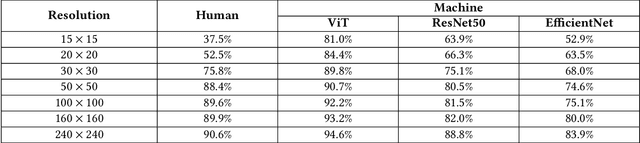

Abstract: A computer vision system using low-resolution image sensors can provide intelligent services (e.g., activity recognition) while protecting visual privacy at the hardware level, because the sensor never captures unnecessary visual detail. However, preserving visual privacy and enabling accurate machine recognition place conflicting requirements on image resolution. Modeling the trade-off between privacy preservation and machine recognition performance can guide future privacy-preserving computer vision systems that use low-resolution image sensors. In this paper, using at-home activities of daily living (ADLs) as the scenario, we first obtained the most important visual privacy features through a user survey. Then we quantified and analyzed the effects of image resolution on human and machine recognition performance in activity recognition and privacy awareness tasks. We also investigated how modern image super-resolution techniques influence these effects. Based on the results, we proposed a method for modeling the trade-off between privacy preservation and activity recognition on low-resolution images.
