Ethan Chen

Sylber: Syllabic Embedding Representation of Speech from Raw Audio

Oct 09, 2024

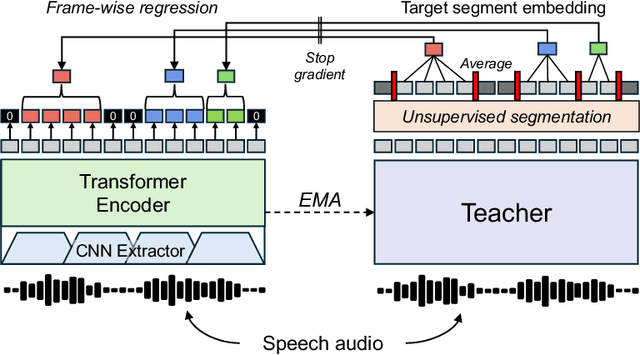

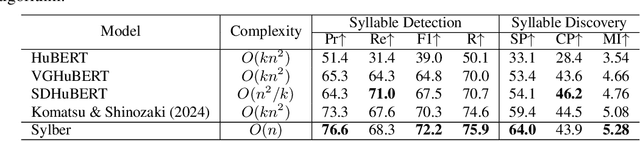

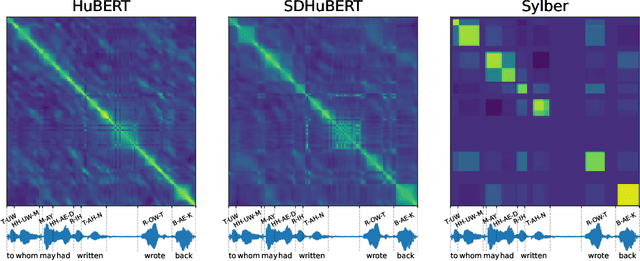

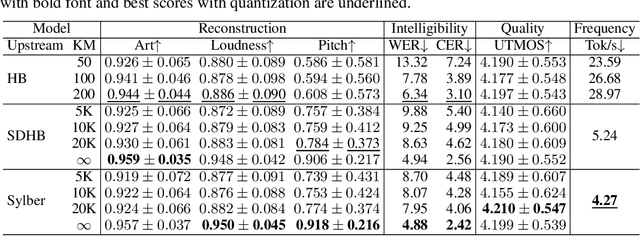

Abstract: Syllables are compositional units of spoken language that play a crucial role in human speech perception and production. However, current neural speech representations lack structure, resulting in dense token sequences that are costly to process. To bridge this gap, we propose a new model, Sylber, that produces speech representations with clean and robust syllabic structure. Specifically, we propose a self-supervised model that regresses features on syllabic segments distilled from a teacher model, which is an exponential moving average of the model in training. This results in a highly structured representation of speech features, offering three key benefits: 1) a fast, linear-time syllable segmentation algorithm, 2) efficient syllabic tokenization with an average of 4.27 tokens per second, and 3) syllabic units better suited for lexical and syntactic understanding. We also train token-to-speech generative models with our syllabic units and show that fully intelligible speech can be reconstructed from these tokens. Lastly, we observe that categorical perception, a linguistic phenomenon of speech perception, emerges naturally in our model, making the embedding space more categorical and sparse than previous self-supervised learning approaches. Together, we present a novel self-supervised approach for representing speech as syllables, with significant potential for efficient speech tokenization and spoken language modeling.
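The abstract describes the teacher as an exponential moving average (EMA) of the model in training. As a rough illustration of that general idea only, here is a minimal sketch of an EMA parameter update. The function name `ema_update`, the flat list-of-floats parameter layout, and the decay value are assumptions made for this example and are not taken from the paper.

```python
def ema_update(teacher, student, decay=0.999):
    """Blend student parameters into the teacher.

    Each teacher parameter becomes decay * teacher + (1 - decay) * student.
    The default decay is an illustrative assumption, not a value from the paper.
    """
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]


# Toy parameters (hypothetical values, for illustration only).
teacher = [0.0, 1.0]
student = [1.0, 1.0]

# One update step: the teacher moves a small fraction toward the student.
teacher = ema_update(teacher, student, decay=0.9)
print(teacher)
```

With a decay close to 1, the teacher changes slowly and smooths over the student's step-to-step fluctuations, which is the usual motivation for distilling from an EMA teacher.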

Computational Trichromacy Reconstruction: Empowering the Color-Vision Deficient to Recognize Colors Using Augmented Reality

Aug 04, 2024

Abstract: We propose an assistive technology that helps individuals with Color Vision Deficiencies (CVD) to recognize and name colors. A dichromat's color perception is a reduced two-dimensional (2D) subset of a normal trichromat's three-dimensional (3D) color perception, leading to confusion when visual stimuli that appear identical to the dichromat are referred to by different color names. Using our proposed system, CVD individuals can interactively induce distinct perceptual changes to originally confusing colors via a computational color space transformation. By combining their original 2D percepts for colors with the discriminative changes, a 3D color space is reconstructed, where the dichromat can learn to resolve color name confusions and accurately recognize colors. Our system is implemented as an Augmented Reality (AR) interface on smartphones, where users interactively control the rotation through swipe gestures and observe the induced color shifts in the camera view or in a displayed image. Through psychophysical experiments and a longitudinal user study, we demonstrate that such rotational color shifts have discriminative power (initially confusing colors become distinct under rotation) and exhibit structured perceptual shifts that dichromats can learn with modest training. The AR app is also evaluated in two real-world scenarios (building with LEGO blocks and interpreting artistic works); all users report a positive experience using the app to recognize object colors that they otherwise could not.
