Yuguang Li

BADGR: Bundle Adjustment Diffusion Conditioned by GRadients for Wide-Baseline Floor Plan Reconstruction

Mar 25, 2025

Abstract: Reconstructing precise camera poses and floor plan layouts from wide-baseline RGB panoramas is a difficult and unsolved problem. We introduce BADGR, a novel diffusion model that jointly performs reconstruction and bundle adjustment (BA) to refine poses and layouts from a coarse state, using 1D floor boundary predictions from dozens of images of varying input densities. Unlike a guided diffusion model, BADGR is conditioned on dense per-entity outputs from a single-step Levenberg-Marquardt (LM) optimizer and is trained to predict camera and wall positions while minimizing reprojection errors for view consistency. The layout-generation objective of the denoising diffusion process complements BA optimization by providing additional learned layout-structural constraints on top of the co-visible features across images. These constraints help BADGR make plausible guesses about spatial relations that help constrain the pose graph, such as wall adjacency and collinearity, and learn to mitigate errors from dense boundary observations using global context. BADGR trains exclusively on 2D floor plans, simplifying data acquisition, enabling robust augmentation, and supporting a variety of input densities. Our experiments and analysis validate our method, which significantly outperforms the state of the art in pose and floor plan layout reconstruction across different input densities.
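
To make the "single-step Levenberg-Marquardt" idea concrete, here is a minimal sketch of one damped Gauss-Newton update on a toy two-parameter problem. It is not BADGR's optimizer: the toy setup (a camera translation estimated from offsets to known wall corners), the function names, and the damping value are all assumptions chosen for illustration.

```python
def lm_step(params, residual_fn, jacobian_fn, damping=1e-3):
    """One Levenberg-Marquardt update for a two-parameter least-squares problem.

    Solves (J^T J + damping * I) * delta = -J^T r and returns params + delta.
    """
    r = residual_fn(params)
    J = jacobian_fn(params)  # one row [dr_i/dp0, dr_i/dp1] per residual

    # Entries of the damped normal matrix J^T J + damping * I.
    a = sum(row[0] * row[0] for row in J) + damping
    b = sum(row[0] * row[1] for row in J)
    d = sum(row[1] * row[1] for row in J) + damping

    # Gradient J^T r.
    g0 = sum(row[0] * ri for row, ri in zip(J, r))
    g1 = sum(row[1] * ri for row, ri in zip(J, r))

    # Closed-form solve of the 2x2 system.
    det = a * d - b * b
    delta0 = (-d * g0 + b * g1) / det
    delta1 = (b * g0 - a * g1) / det
    return [params[0] + delta0, params[1] + delta1]


# Toy problem (hypothetical): known wall corners, observed as offsets
# from a camera whose true position is (1.0, 2.0).
corners = [(4.0, 0.0), (4.0, 5.0), (0.0, 5.0)]
true_position = (1.0, 2.0)
observed = [(cx - true_position[0], cy - true_position[1]) for cx, cy in corners]


def residuals(t):
    # Predicted offset minus observed offset, x and y interleaved.
    out = []
    for (cx, cy), (ox, oy) in zip(corners, observed):
        out.append((cx - t[0]) - ox)
        out.append((cy - t[1]) - oy)
    return out


def jacobian(t):
    # The residuals are linear in t, so the Jacobian is constant.
    rows = []
    for _ in corners:
        rows.append([-1.0, 0.0])
        rows.append([0.0, -1.0])
    return rows


refined = lm_step([0.0, 0.0], residuals, jacobian)
```

Because this toy problem is linear, a single step with small damping lands close to the true position; in a nonlinear BA problem a single step only gives a local correction, which is the kind of signal the abstract describes as conditioning input.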

SALVe: Semantic Alignment Verification for Floorplan Reconstruction from Sparse Panoramas

Jun 27, 2024

Abstract: We propose a new system for automatic 2D floorplan reconstruction that is enabled by SALVe, our novel pairwise learned alignment verifier. The inputs to our system are sparsely located 360$^\circ$ panoramas, whose semantic features (windows, doors, and openings) are inferred and used to hypothesize pairwise room adjacency or overlap. SALVe initializes a pose graph, which is subsequently optimized using GTSAM. Once the room poses are computed, room layouts are inferred using HorizonNet, and the floorplan is constructed by stitching the most confident layout boundaries. We validate our system qualitatively and quantitatively as well as through ablation studies, showing that it outperforms state-of-the-art SfM systems in completeness by over 200%, without sacrificing accuracy. Our results point to the significance of our work: poses of 81% of panoramas are localized in the first 2 connected components (CCs), and 89% in the first 3 CCs. Code and models are publicly available at https://github.com/zillow/salve.
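
The "localized in the first k connected components" metric can be illustrated with a small sketch. This is not code from the SALVe repository: the function names and the toy pose graph are hypothetical, and the sketch assumes panoramas are nodes and verified pairwise alignments are undirected edges.

```python
from collections import defaultdict


def connected_components(num_nodes, edges):
    """Return the connected components of an undirected graph, largest first."""
    adjacency = defaultdict(list)
    for a, b in edges:
        adjacency[a].append(b)
        adjacency[b].append(a)

    seen = set()
    components = []
    for start in range(num_nodes):
        if start in seen:
            continue
        # Depth-first traversal from an unvisited node.
        stack = [start]
        seen.add(start)
        component = []
        while stack:
            node = stack.pop()
            component.append(node)
            for neighbor in adjacency[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        components.append(sorted(component))

    components.sort(key=len, reverse=True)
    return components


def fraction_localized(num_nodes, edges, k):
    """Fraction of nodes that fall inside the k largest components."""
    components = connected_components(num_nodes, edges)
    return sum(len(c) for c in components[:k]) / num_nodes


# Toy pose graph (hypothetical): 10 panoramas, 7 verified alignments.
toy_edges = [(0, 1), (1, 2), (2, 3), (3, 4), (5, 6), (6, 7), (8, 9)]
in_first_two = fraction_localized(10, toy_edges, 2)
in_first_three = fraction_localized(10, toy_edges, 3)
```

In this toy graph the components have sizes 5, 3, and 2, so 80% of the panoramas fall in the first two components and all of them in the first three.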

Graph-CoVis: GNN-based Multi-view Panorama Global Pose Estimation

Apr 26, 2023

Abstract: In this paper, we address the problem of wide-baseline camera pose estimation from a group of 360$^\circ$ panoramas under an upright-camera assumption. Recent work has demonstrated the merit of deep learning for end-to-end direct relative pose regression in 360$^\circ$ panorama pairs [11]. To exploit the benefits of multi-view logic in a learning-based framework, we introduce Graph-CoVis, which non-trivially extends CoVisPose [11] from relative two-view to global multi-view spherical camera pose estimation. Graph-CoVis is a novel Graph Neural Network-based architecture that jointly learns the co-visible structure and global motion in an end-to-end, fully supervised approach. Using the ZInD [4] dataset, which features real homes presenting wide baselines, occlusion, and limited visual overlap, we show that our model performs competitively with state-of-the-art approaches.

U2RLE: Uncertainty-Guided 2-Stage Room Layout Estimation

Apr 17, 2023

Abstract: While existing deep learning-based room layout estimation techniques demonstrate good overall accuracy, they are less effective for distant floor-wall boundaries. To tackle this problem, we propose a novel uncertainty-guided approach for layout boundary estimation, introducing a new two-stage CNN architecture termed U2RLE. The initial stage predicts both the floor-wall boundary and its uncertainty, and is followed by the refinement of boundaries with high positional uncertainty using a different, distance-aware loss. Finally, outputs from the two stages are merged to produce the room layout. Experiments using the ZInD and Structure3D datasets show that U2RLE improves over the current state of the art, handling both near and far walls better. In particular, U2RLE outperforms current state-of-the-art techniques for the most distant walls.
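
One way to picture the final merge of the two stages is a per-column selection driven by the predicted uncertainty. The sketch below is only an illustration: the abstract does not specify the merge rule, so the hard threshold, its value, and the function name are assumptions.

```python
def merge_boundaries(coarse, refined, uncertainty, threshold=0.5):
    """Merge two per-column boundary estimates.

    For each image column, keep the first-stage value unless its predicted
    uncertainty exceeds the threshold, in which case use the refined value.
    The hard threshold is an assumed rule, not the one from the paper.
    """
    if not (len(coarse) == len(refined) == len(uncertainty)):
        raise ValueError("all inputs must have one value per image column")
    return [
        r if u > threshold else c
        for c, r, u in zip(coarse, refined, uncertainty)
    ]


# Toy example (hypothetical values): four image columns.
stage_one = [0.30, 0.32, 0.10, 0.08]    # first-stage boundary positions
stage_two = [0.31, 0.33, 0.14, 0.12]    # second-stage refined positions
sigma = [0.10, 0.20, 0.80, 0.90]        # first-stage uncertainty per column
merged = merge_boundaries(stage_one, stage_two, sigma)
```

Here the last two columns, which carry high uncertainty, take the refined values while the first two keep the first-stage prediction.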

PSMNet: Position-aware Stereo Merging Network for Room Layout Estimation

Mar 30, 2022

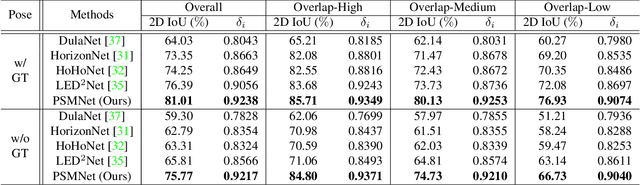

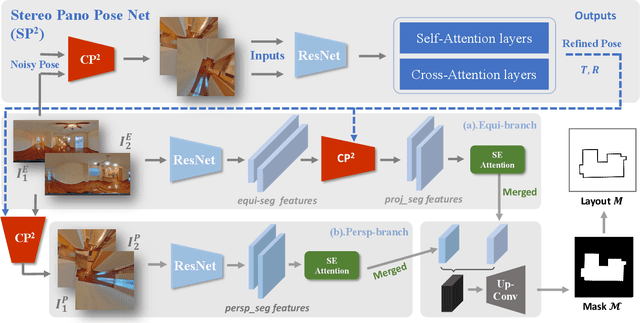

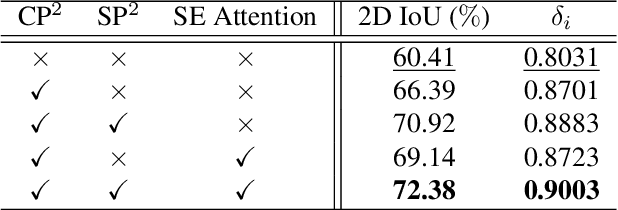

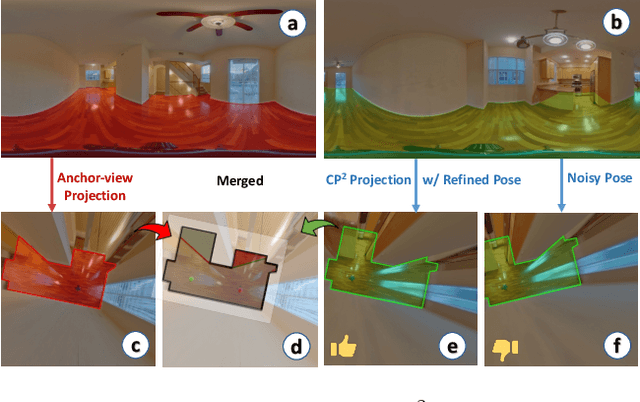

Abstract: In this paper, we propose a new deep learning-based method for estimating room layout given a pair of 360$^\circ$ panoramas. Our system, called Position-aware Stereo Merging Network or PSMNet, is an end-to-end joint layout-pose estimator. PSMNet consists of a Stereo Pano Pose (SP2) transformer and a novel Cross-Perspective Projection (CP2) layer. The stereo-view SP2 transformer is used to implicitly infer correspondences between views, and can handle noisy poses. The pose-aware CP2 layer is designed to render features from the adjacent view to the anchor (reference) view, in order to perform view fusion and estimate the visible layout. Our experiments and analysis validate our method, which significantly outperforms state-of-the-art layout estimators, especially for large and complex room spaces.
