Yash Chitalia

Towards Autonomous Instrument Tray Assembly for Sterile Processing Applications

Feb 02, 2026

Abstract: The Sterile Processing and Distribution (SPD) department is responsible for cleaning, disinfecting, inspecting, and assembling surgical instruments between surgeries. Manual inspection and preparation of instrument trays is a time-consuming, error-prone task that is often susceptible to contamination and instrument breakage. In this work, we present a fully automated robotic system that sorts and structurally packs surgical instruments into sterile trays, focusing on automation of the SPD assembly stage. A custom dataset comprising 31 surgical instruments and 6,975 annotated images was collected to train a hybrid perception pipeline using YOLO12 for detection and a cascaded ResNet-based model for fine-grained classification. The system integrates a calibrated vision module, a 6-DOF Staubli TX2-60L robotic arm with a custom dual electromagnetic gripper, and a rule-based packing algorithm that reduces instrument collisions during transport. The packing framework uses 3D-printed dividers and holders to physically isolate instruments, reducing collision and friction during transport. Experimental evaluations show high perception accuracy and a statistically significant reduction in tool-to-tool collisions compared to human-assembled trays. This work serves as a scalable first step toward automating SPD workflows, improving the safety and consistency of surgical preparation while reducing SPD processing times.

Towards a Novel Wearable Robotic Vest for Hemorrhage Suppression

Feb 01, 2026

Abstract: This paper introduces a novel robotic system designed to manage severe bleeding in emergency scenarios, including unique environments like space stations. The robot features a shape-adjustable "ring mechanism" that transitions from a circular to an elliptical configuration to adjust wound coverage across various anatomical regions. We developed several arms of varying flexibility for this ring mechanism to improve adaptability when applied to non-extremity regions of the body (abdomen, back, neck, etc.). To apply equal and constant pressure across the wound, we developed an inflatable ring and airbag balloon that are compatible with this shape-changing ring mechanism. A series of experiments evaluated various ring arm configurations to characterize their bending stiffness. Subsequent experiments measured the force exerted by the airbag balloon system using a digital scale. Despite the device's promising performance, we identify certain limitations related to coverage area. The shape-changing effect of the device is limited to scenarios involving partially inflated or deflated airbag balloons, and the device cannot fully conform to complex anatomical regions. Finally, the device was tested on casualty simulation kits, where it successfully demonstrated its ability to control simulated bleeding.

The OncoReach Stylet for Brachytherapy: Design Evaluation and Pilot Study

Jan 20, 2026

Abstract: Cervical cancer accounts for a significant portion of the global cancer burden among women. Interstitial brachytherapy (ISBT) is a standard procedure for treating cervical cancer; it involves placing a radioactive source through a straight hollow needle within or in close proximity to the tumor and surrounding tissue. However, the use of straight needles limits surgical planning to a linear needle path. We present the OncoReach stylet, a handheld, tendon-driven steerable stylet designed for compatibility with standard ISBT 15- and 13-gauge needles. Building upon our prior work, we evaluated design parameters such as needle gauge, spherical joint count, and spherical joint placement, including an asymmetric disk design, to identify a configuration that maximizes bending compliance while retaining axial stiffness. Free-space experiments quantified tip deflection across configurations, and a two-tube Cosserat rod model accurately predicted the centerline shape of the needle for most trials. The best-performing configuration was integrated into a reusable handheld prototype that enables manual actuation. A patient-derived, multi-composite phantom model of the uterus and pelvis was developed to conduct a pilot study of the OncoReach steerable stylet with one expert user. Results showed the ability to steer from less-invasive, medial entry points to reach the lateral-most targets, underscoring the significance of steerable stylets.

Helical Tendon-Driven Continuum Robot with Programmable Follow-the-Leader Operation

Jan 19, 2026

Abstract: Spinal cord stimulation (SCS) is primarily utilized for pain management and has recently demonstrated efficacy in promoting functional recovery in patients with spinal cord injury. Effective stimulation of motor neurons ideally requires the placement of SCS leads in the ventral or lateral epidural space where the corticospinal and rubrospinal motor fibers are located. This poses significant challenges with the current standard of manual steering. In this study, we present a static modeling approach for the ExoNav, a steerable robotic tool designed to facilitate precise navigation to the ventral and lateral epidural space. A Cosserat rod framework is employed to establish the relationship between tendon actuation forces and the robot's overall shape. The effects of gravity, as an example of an external load, are investigated and implemented in the model and simulation. The experimental results indicate RMSE values of 1.76 mm, 2.33 mm, 2.18 mm, and 1.33 mm across four tested prototypes. Because the ExoNav assumes a helical shape upon actuation, adding insertion and rotation DoFs to the robotic system enables follow-the-leader (FTL) motion, which is shown in simulation and experimentally. The proposed simulation can calculate optimum tendon tensions to follow the desired FTL paths while gravity-induced robot deformations are present. Three FTL experimental trials are conducted, and the end-effector position showed repeatable alignment with the desired path, with a maximum RMSE of 3.75 mm. Ultimately, a phantom model demonstration is conducted where the teleoperated robot successfully navigated to the lateral and ventral spinal cord targets. Additionally, the user was able to navigate to the dorsal root ganglia, illustrating ExoNav's potential in both motor function recovery and pain management.

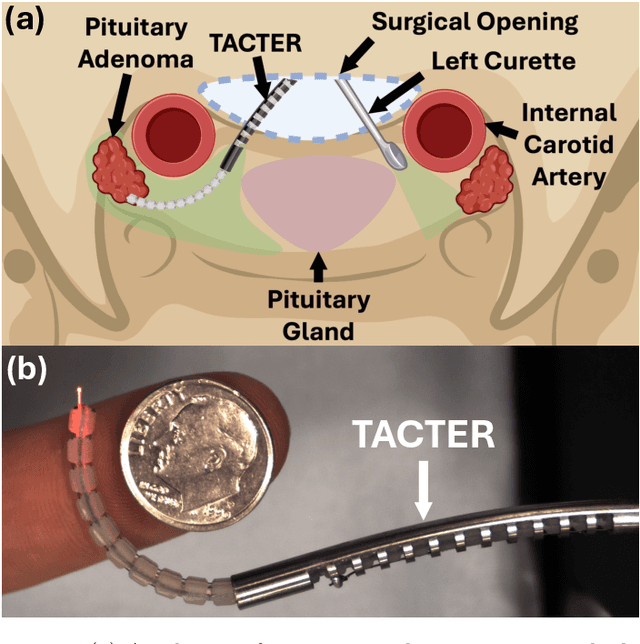

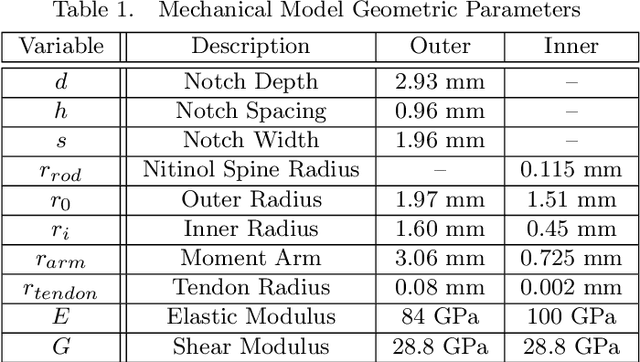

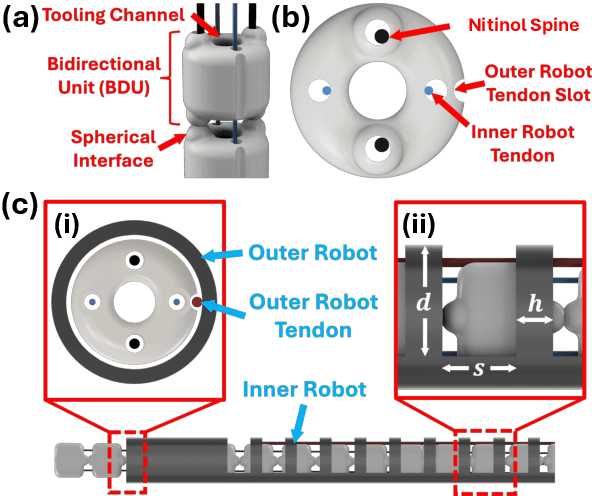

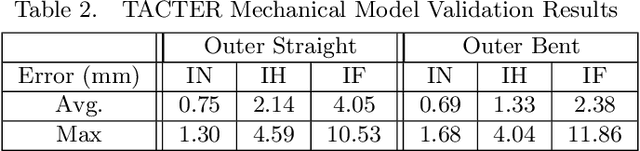

Tendon-Actuated Concentric Tube Endonasal Robot (TACTER)

Apr 28, 2025

Abstract: Endoscopic endonasal approaches (EEA) have become more prevalent for minimally invasive skull base and sinus surgeries. However, rigid scopes and tools significantly decrease the surgeon's ability to operate in tight anatomical spaces and avoid critical structures such as the internal carotid artery and cranial nerves. This paper proposes a novel tendon-actuated concentric tube endonasal robot (TACTER) design in which two tendon-actuated robots are concentric to each other, resulting in an outer and inner robot that can bend independently. The outer robot is a unidirectionally asymmetric notch (UAN) nickel-titanium robot, and the inner robot is a 3D-printed bidirectional robot with a nickel-titanium bending member. In addition, the inner robot can translate axially within the outer robot, allowing the tool to traverse through structures while bending, thereby executing follow-the-leader motion. A Cosserat-rod-based mechanical model is proposed that takes the tendon tensions of both tendon-actuated robots and the relative translation between the robots as inputs and predicts the TACTER tip position for varying input parameters. The model is validated with experiments, and a human cadaver experiment is presented to demonstrate maneuverability from the nostril to the sphenoid sinus. This work presents the first tendon-actuated concentric tube (TACT) dexterous robotic tool capable of performing follow-the-leader motion within natural nasal orifices to cover workspaces typically required for a successful EEA.

ExoNav II: Design of a Robotic Tool with Follow-the-Leader Motion Capability for Lateral and Ventral Spinal Cord Stimulation (SCS)

Mar 06, 2025

Abstract: Spinal cord stimulation (SCS) electrodes are traditionally placed in the dorsal epidural space to stimulate the dorsal column fibers for pain therapy. Recently, SCS has gained attention in restoring gait. However, the motor fibers triggering locomotion are located in the ventral and lateral spinal cord. Currently, SCS electrodes are steered manually, making it difficult to navigate them to the lateral and ventral motor fibers in the spinal cord. In this work, we propose a helically micro-machined continuum robot that can bend in a helical shape when subjected to actuation tendon forces. Using a stiff outer tube and adding translational and rotational degrees of freedom, this helical continuum robot can perform follow-the-leader (FTL) motion. We propose a kinematic model to relate tendon stroke and geometric parameters of the robot's helical shape to its acquired trajectory and end-effector position. We evaluate the proposed kinematic model and the robot's FTL motion capability experimentally. The stroke-based method, which links tendon stroke values to the robot's shape, showed inaccuracies with a 19.84 mm deviation and an RMSE of 14.42 mm for 63.6 mm of the robot's length bending. The position-based method, using kinematic equations to map joint space to task space, performed better with a 10.54 mm deviation and an RMSE of 8.04 mm. Follow-the-leader experiments showed deviations of 11.24 mm and 7.32 mm, with RMSE values of 8.67 mm and 5.18 mm for the stroke-based and position-based methods, respectively. Furthermore, end-effector trajectories in two FTL motion trials are compared to confirm the robot's repeatable behavior. Finally, we demonstrate the robot's operation on a 3D-printed spinal cord phantom model.
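The abstract reports a deviation and an RMSE for each method but does not say how they are computed. As a minimal sketch, assuming each measured point is paired with a corresponding desired point and that "deviation" means the largest point-wise distance, the two metrics could be evaluated as follows. The function name `path_errors` and the sample coordinates are hypothetical and are not taken from the paper.

```python
import numpy as np

def path_errors(measured, desired):
    """Return (max deviation, RMSE) between paired 3D points, in the input units."""
    measured = np.asarray(measured, dtype=float)
    desired = np.asarray(desired, dtype=float)
    # Euclidean distance between each measured point and its paired desired point
    dist = np.linalg.norm(measured - desired, axis=1)
    return dist.max(), np.sqrt(np.mean(dist ** 2))

# Made-up sample points in mm, for illustration only (assumption, not paper data)
desired = [[0, 0, 0], [0, 0, 10], [0, 0, 20]]
measured = [[0, 0, 0], [3, 4, 10], [0, 0, 20]]
max_dev, rmse = path_errors(measured, desired)
print(f"max deviation = {max_dev:.2f} mm, RMSE = {rmse:.2f} mm")
```

Because RMSE averages over all points while the maximum picks out the single worst one, the RMSE can never exceed the maximum deviation, which is consistent with each deviation/RMSE pair quoted above.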

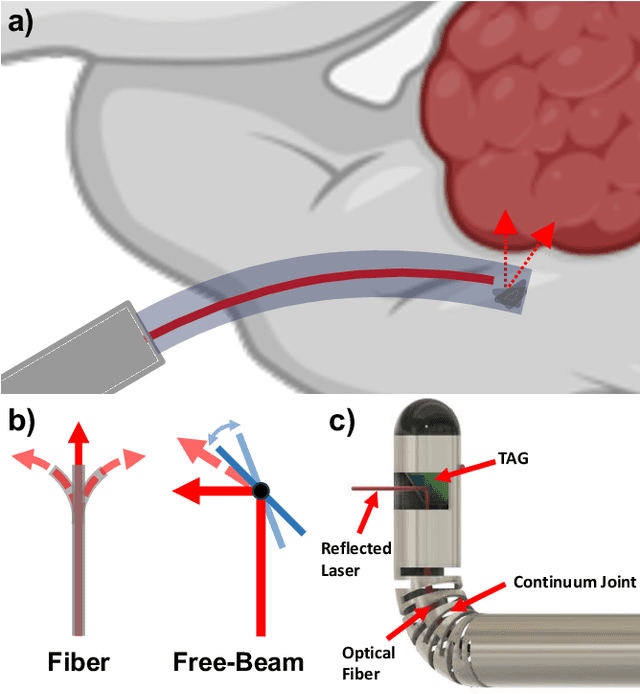

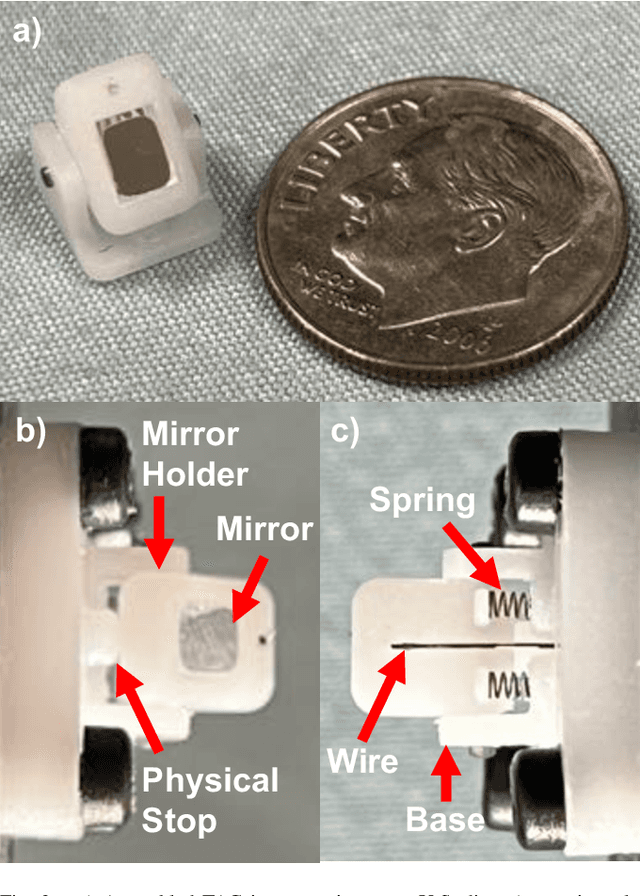

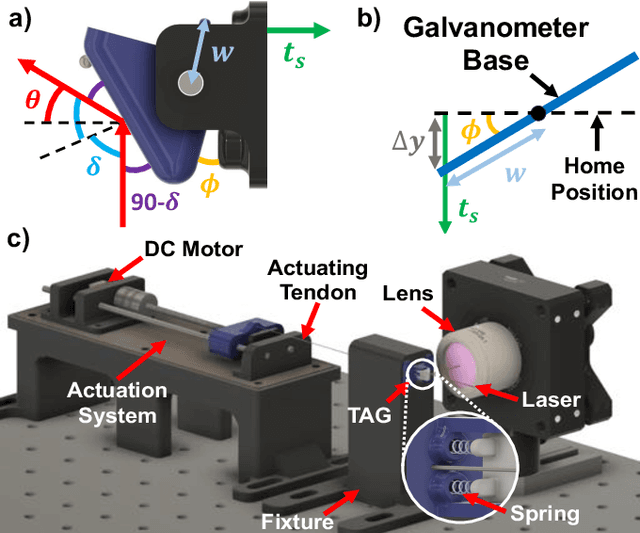

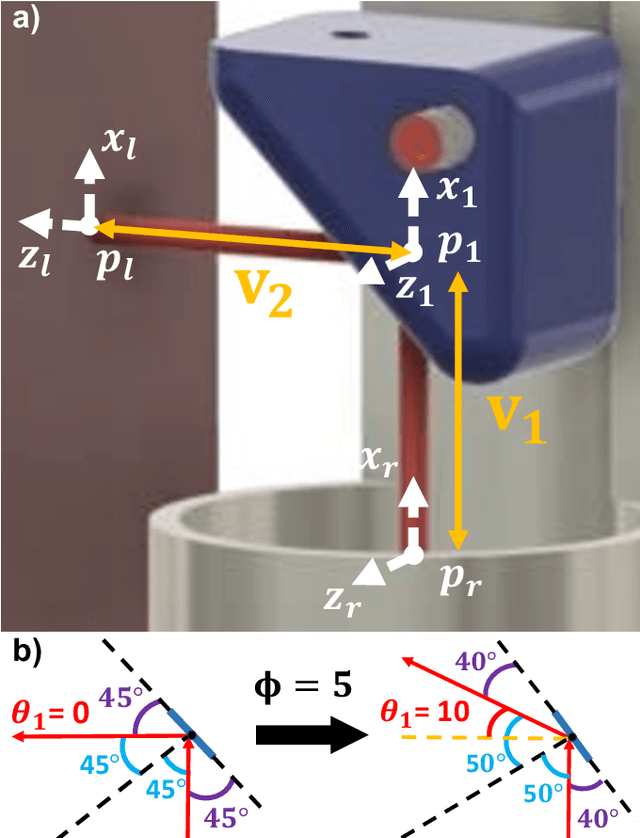

Towards the Development of a Tendon-Actuated Galvanometer for Endoscopic Surgical Laser Scanning

Jun 05, 2024

Abstract: There is a need for precision pathological sensing, imaging, and tissue manipulation in neurosurgical procedures, such as brain tumor resection. Precise tumor margin identification and resection can prevent further growth and protect critical structures. Surgical lasers with small laser diameters and steering capabilities can allow for new minimally invasive procedures by traversing through complex anatomy, then providing energy to sense, visualize, and affect tissue. In this paper, we present the design of a small-scale tendon-actuated galvanometer (TAG) that can serve as an end-effector tool for a steerable surgical laser. The galvanometer sensor design, fabrication, and kinematic modeling are presented and derived. The galvanometer can accurately rotate up to 30.14 degrees (or a laser reflection angle of 60.28 degrees). A kinematic mapping of input tendon stroke to output galvanometer angle change and a forward-kinematics model relating the end of the continuum joint to the laser end-point are derived and validated.
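The two angles quoted above differ by a factor of two, which follows from the law of reflection: rotating a flat mirror by an angle turns the reflected beam by twice that angle. The sketch below checks this numerically in 2D under the assumption of an ideal flat mirror and a fixed incident beam. The function names and the chosen geometry are illustrative and are not the paper's kinematic model.

```python
import numpy as np

def reflect(direction, normal):
    """Reflect a direction vector off a flat mirror with the given unit normal."""
    direction = np.asarray(direction, dtype=float)
    normal = np.asarray(normal, dtype=float)
    return direction - 2.0 * np.dot(direction, normal) * normal

def beam_deflection_deg(mirror_rotation_deg):
    """Angle in degrees by which the reflected beam turns when the mirror rotates."""
    theta = np.radians(mirror_rotation_deg)
    incident = np.array([1.0, 0.0])                   # fixed incoming beam along +x
    n0 = np.array([-1.0, 0.0])                        # mirror normal before rotation
    n1 = np.array([-np.cos(theta), -np.sin(theta)])   # normal after rotating by theta
    r0, r1 = reflect(incident, n0), reflect(incident, n1)
    # Angle between the reflected beams before and after the mirror rotation
    cos_angle = np.clip(np.dot(r0, r1), -1.0, 1.0)
    return np.degrees(np.arccos(cos_angle))

print(beam_deflection_deg(30.14))
```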

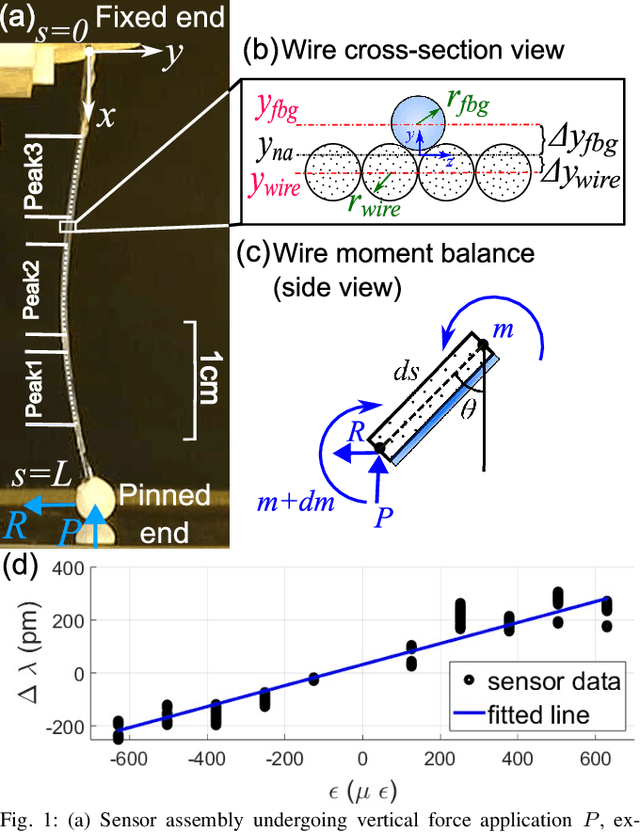

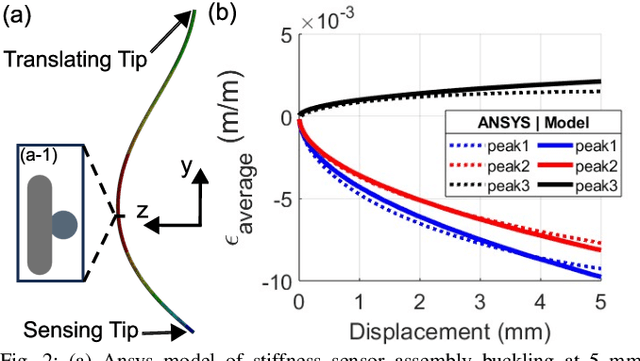

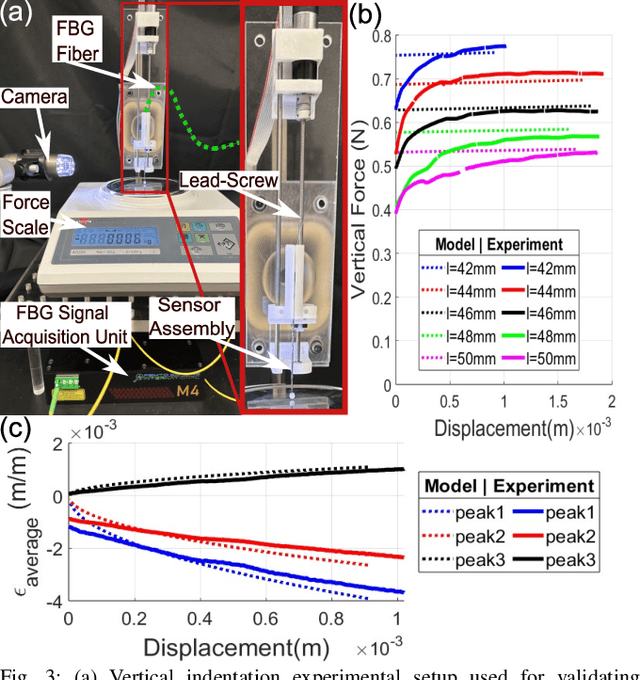

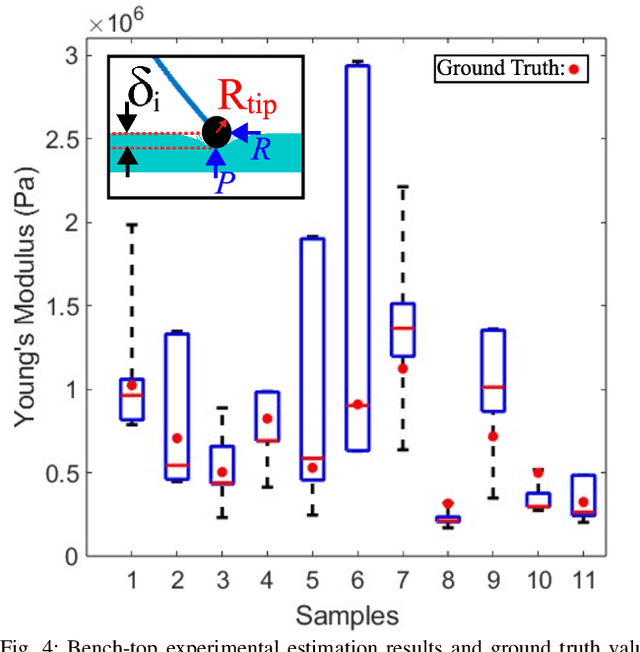

An FBG-based Stiffness Estimation Sensor for In-vivo Diagnostics

May 30, 2024

Abstract: In-vivo tissue stiffness identification can be useful in pulmonary fibrosis diagnostics and minimally invasive tumor identification, among many other applications. In this work, we propose a palpation-based method for tissue stiffness estimation that uses a sensorized beam buckled onto the surface of a tissue. Fiber Bragg Gratings (FBGs) are used in our sensor as a shape-estimation modality to get real-time beam shape, even while the device is not visually monitored. A mechanical model is developed to predict the behavior of a buckling beam and is validated using finite element analysis and bench-top testing with phantom tissue samples (made of PDMS and PA-Gel). Bench-top estimations were conducted and the results were compared with the actual stiffness values. Mean RMSE and standard deviation (from the actual stiffnesses) values of 413.86 kPa and 313.82 kPa were obtained. Estimations for softer samples were relatively closer to the actual values. Ultimately, we used the stiffness sensor within a mock concentric tube robot as a demonstration of in-vivo sensor feasibility. Bench-top trials with and without the robot demonstrate the effectiveness of this unique sensing modality in in-vivo applications.

Using Neural Networks to Model Hysteretic Kinematics in Tendon-Actuated Continuum Robots

Apr 10, 2024

Abstract: The ability to accurately model mechanical hysteretic behavior in tendon-actuated continuum robots using deep learning approaches is a growing area of interest. In this paper, we investigate the hysteretic response of two types of tendon-actuated continuum robots and, ultimately, compare three types of neural network modeling approaches with both forward and inverse kinematic mappings: feedforward neural network (FNN), FNN with a history input buffer, and long short-term memory (LSTM) network. We seek to determine which model best captures temporally dependent behavior. We find that, depending on the robot's design, choosing different kinematic inputs can alter whether hysteresis is exhibited by the system. Furthermore, we present the results of the model fittings, revealing that, in contrast to the standard FNN, both the FNN with a history input buffer and the LSTM model exhibit the capacity to model history dependence with comparable performance in capturing rate-dependent hysteresis.
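To illustrate why a history input buffer lets a feedforward network represent hysteresis, the sketch below builds buffered inputs by stacking each sample with its preceding samples. The abstract does not give the buffer length or the exact input signals, so the helper `with_history`, the buffer length of one, and the sample tendon-stroke values are all assumptions made for illustration.

```python
import numpy as np

def with_history(inputs, history_len):
    """Stack each sample with its previous `history_len` samples.

    inputs: array of shape (T,) or (T, d).
    Returns an array of shape (T - history_len, (history_len + 1) * d),
    ordered oldest to newest within each row.
    """
    inputs = np.asarray(inputs, dtype=float)
    if inputs.ndim == 1:
        inputs = inputs[:, None]
    n_samples = inputs.shape[0]
    rows = [inputs[t - history_len : t + 1].ravel()
            for t in range(history_len, n_samples)]
    return np.stack(rows)

# Made-up loading/unloading tendon stroke sequence (assumption, not paper data)
stroke = np.array([0.0, 1.0, 2.0, 1.0, 0.0])
X = with_history(stroke, history_len=1)
print(X)
```

In this toy sequence the stroke value 1.0 occurs once during loading and once during unloading. A plain FNN sees the same input both times, whereas the buffered rows `[0, 1]` and `[2, 1]` differ, so a network fed these rows can output different shapes for the two branches of the hysteresis loop.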

Hybrid Tendon and Ball Chain Continuum Robots for Enhanced Dexterity in Medical Interventions

Jan 30, 2024

Abstract: A hybrid continuum robot design is introduced that combines a proximal tendon-actuated section with a distal telescoping section comprised of permanent-magnet spheres actuated using an external magnet. While, individually, each section can approach a point in its workspace from one or at most several orientations, the two-section combination possesses a dexterous workspace. The paper describes kinematic modeling of the hybrid design and provides a description of the dexterous workspace. We present experimental validation which shows that a simplified kinematic model produces tip position mean and maximum errors of 3% and 7% of total robot length, respectively.
