Patrick J. Codd

Department of Neurosurgery, Duke University School of Medicine, Durham, NC, USA; Department of Mechanical Engineering and Materials Science, Duke University, Durham, NC, USA

PalpAid: Multimodal Pneumatic Tactile Sensor for Tissue Palpation

Dec 22, 2025

Abstract:The tactile properties of tissue, such as elasticity and stiffness, often play an important role in surgical oncology when identifying tumors and pathological tissue boundaries. Though extremely valuable, robot-assisted surgery comes at the cost of reduced sensory information to the surgeon; typically, only vision is available. Sensors proposed to overcome this sensory desert are often bulky, complex, and incompatible with the surgical workflow. We present PalpAid, a multimodal pneumatic tactile sensor equipped with a microphone and pressure sensor, converting contact force into an internal pressure differential. The pressure sensor acts as an event detector, while the auditory signature captured by the microphone assists in tissue delineation. We show the design, fabrication, and assembly of sensory units with characterization tests to show robustness to use, inflation-deflation cycles, and integration with a robotic system. Finally, we show the sensor's ability to classify 3D-printed hard objects with varying infills and soft ex vivo tissues. Overall, PalpAid aims to fill the sensory gap intelligently and allow improved clinical decision-making.

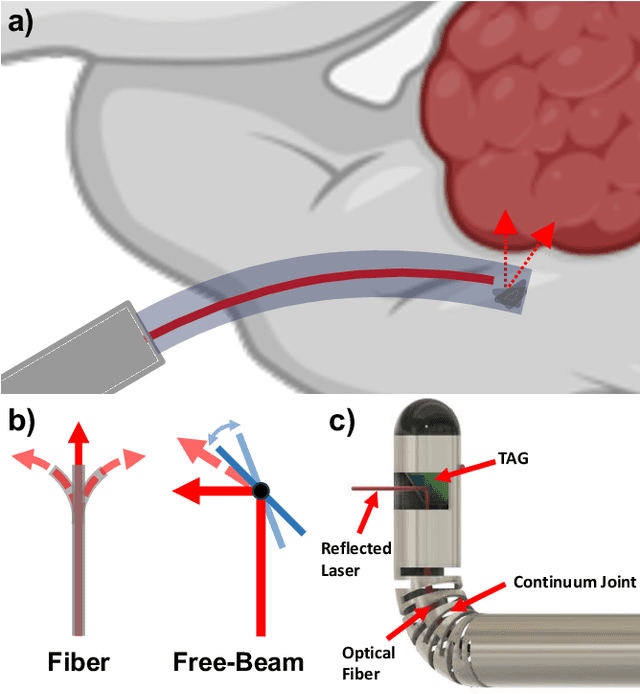

TumorMap: A Laser-based Surgical Platform for 3D Tumor Mapping and Fully-Automated Tumor Resection

Nov 07, 2025

Abstract:Surgical resection of malignant solid tumors is critically dependent on the surgeon's ability to accurately identify pathological tissue and remove the tumor while preserving surrounding healthy structures. However, building an intraoperative 3D tumor model for subsequent removal faces major challenges due to the lack of high-fidelity tumor reconstruction, difficulties in developing generalized tissue models to handle the inherent complexities of tumor diagnosis, and the natural physical limitations of bimanual operation, physiologic tremor, and fatigue creep during surgery. To overcome these challenges, we introduce "TumorMap", a surgical robotic platform to formulate intraoperative 3D tumor boundaries and achieve autonomous tissue resection using a set of multifunctional lasers. TumorMap integrates a three-laser mechanism (optical coherence tomography, laser-induced endogenous fluorescence, and cutting laser scalpel) combined with deep learning models to achieve fully-automated and noncontact tumor resection. We validated TumorMap in murine osteosarcoma and soft-tissue sarcoma tumor models, and established a novel histopathological workflow to estimate sensor performance. With submillimeter laser resection accuracy, we demonstrated multimodal sensor-guided autonomous tumor surgery without any human intervention.

Tendon-Actuated Concentric Tube Endonasal Robot (TACTER)

Apr 28, 2025

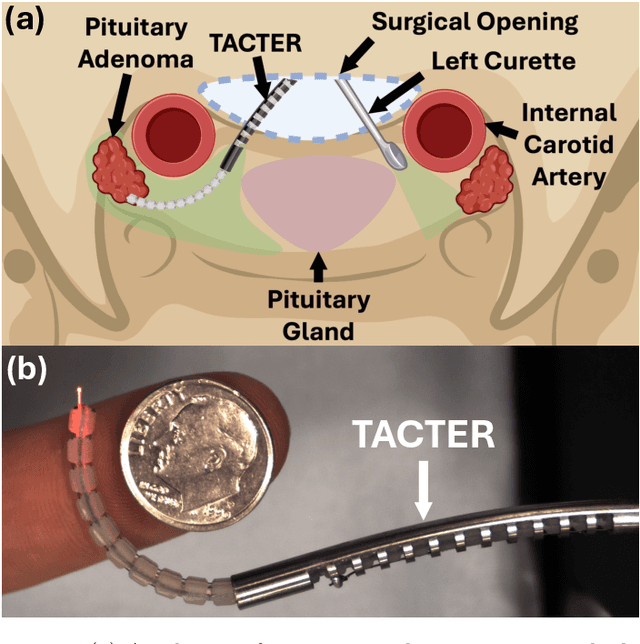

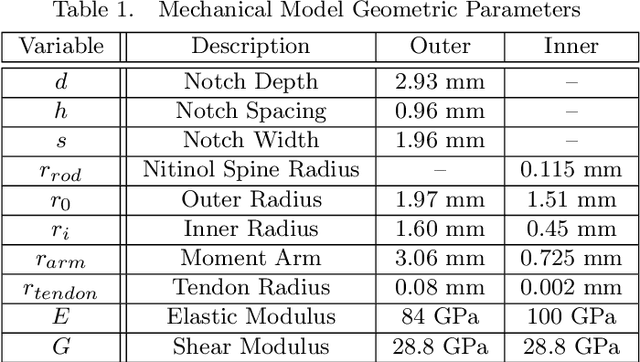

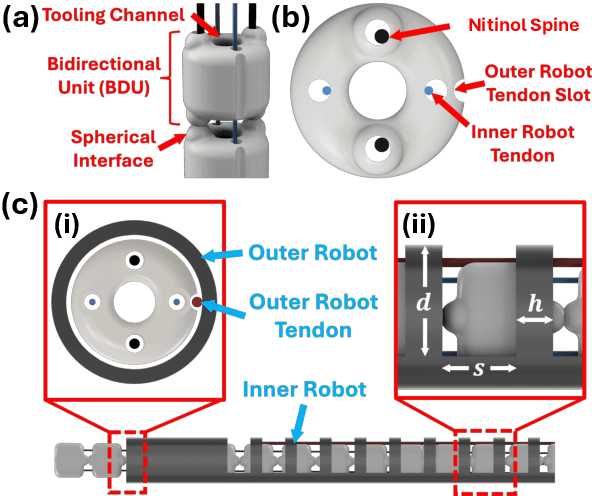

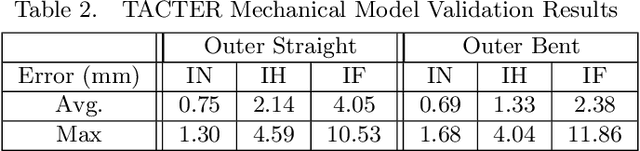

Abstract:Endoscopic endonasal approaches (EEA) have become more prevalent for minimally invasive skull base and sinus surgeries. However, rigid scopes and tools significantly decrease the surgeon's ability to operate in tight anatomical spaces and avoid critical structures such as the internal carotid artery and cranial nerves. This paper proposes a novel tendon-actuated concentric tube endonasal robot (TACTER) design in which two tendon-actuated robots are concentric to each other, resulting in an outer and inner robot that can bend independently. The outer robot is a unidirectionally asymmetric notch (UAN) nickel-titanium robot, and the inner robot is a 3D-printed bidirectional robot, with a nickel-titanium bending member. In addition, the inner robot can translate axially within the outer robot, allowing the tool to traverse through structures while bending, thereby executing follow-the-leader motion. A Cosserat-rod based mechanical model is proposed that uses tendon tension of both tendon-actuated robots and the relative translation between the robots as inputs and predicts the TACTER tip position for varying input parameters. The model is validated with experiments, and a human cadaver experiment is presented to demonstrate maneuverability from the nostril to the sphenoid sinus. This work presents the first tendon-actuated concentric tube (TACT) dexterous robotic tool capable of performing follow-the-leader motion within natural nasal orifices to cover workspaces typically required for a successful EEA.

Sampling-Based Model Predictive Control for Volumetric Ablation in Robotic Laser Surgery

Oct 04, 2024

Abstract:Laser-based surgical ablation relies heavily on surgeon involvement, restricting precision to the limits of human error. The interaction between laser and tissue is governed by various laser parameters that control the laser irradiance on the tissue, including the laser power, distance, spot size, orientation, and exposure time. This complex interaction lends itself to robotic automation, allowing the surgeon to focus on high-level tasks, such as choosing the region and method of ablation, while the lower-level ablation plan can be handled autonomously. This paper describes a sampling-based model predictive control (MPC) scheme to plan ablation sequences for arbitrary tissue volumes. Using a steady-state point ablation model to simulate a single laser-tissue interaction, a random search technique explores the reachable state space while preserving sensitive tissue regions. The sampled MPC strategy provides an ablation sequence that accounts for parameter uncertainty without violating constraints, such as avoiding critical nerve bundles or blood vessels.
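The receding-horizon idea in this abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not the paper's model: tissue is a 1D row of cells, each laser pulse removes a fixed depth `removal` at one cell, protected cells are excluded from the action set, and over-ablation beyond the target depth is treated as a hard constraint. The names `rollout`, `cost`, and `sampled_mpc` are hypothetical, and parameter uncertainty is not modeled.

```python
import random

def rollout(depths, seq, target, removal=1.0):
    """Simulate a pulse sequence; return None if any cell is over-ablated."""
    out = list(depths)
    for cell in seq:
        out[cell] += removal
        if out[cell] > target[cell]:
            return None  # hard constraint violated
    return out

def cost(depths, target):
    """Squared error between the current and the desired ablation depths."""
    return sum((d - t) ** 2 for d, t in zip(depths, target))

def sampled_mpc(target, protected, horizon=3, samples=50, max_steps=100, seed=0):
    rng = random.Random(seed)
    # Protected cells (e.g. nerves, vessels) are never candidate actions.
    allowed = [i for i in range(len(target)) if i not in protected]
    depths = [0.0] * len(target)
    plan = []
    for _ in range(max_steps):
        best_seq, best_cost = None, cost(depths, target)
        for _ in range(samples):
            # Random search: sample a short candidate pulse sequence.
            length = rng.randint(1, horizon)
            seq = [rng.choice(allowed) for _ in range(length)]
            result = rollout(depths, seq, target)
            if result is not None and cost(result, target) < best_cost:
                best_seq, best_cost = seq, cost(result, target)
        if best_seq is None:
            break  # no sampled sequence improves the plan
        # Receding horizon: apply only the first pulse, then re-plan.
        depths = rollout(depths, best_seq[:1], target)
        plan.append(best_seq[0])
    return plan, depths

target = [2.0, 0.0, 1.0, 3.0]  # hypothetical desired depth per cell
plan, depths = sampled_mpc(target, protected={1})
print("pulse order:", plan)
print("final depths:", depths)
```

Because infeasible candidates are rejected during the rollout, every applied pulse lands on a cell that is still below its target depth, so the constraint holds at every step even if the random search stops early.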

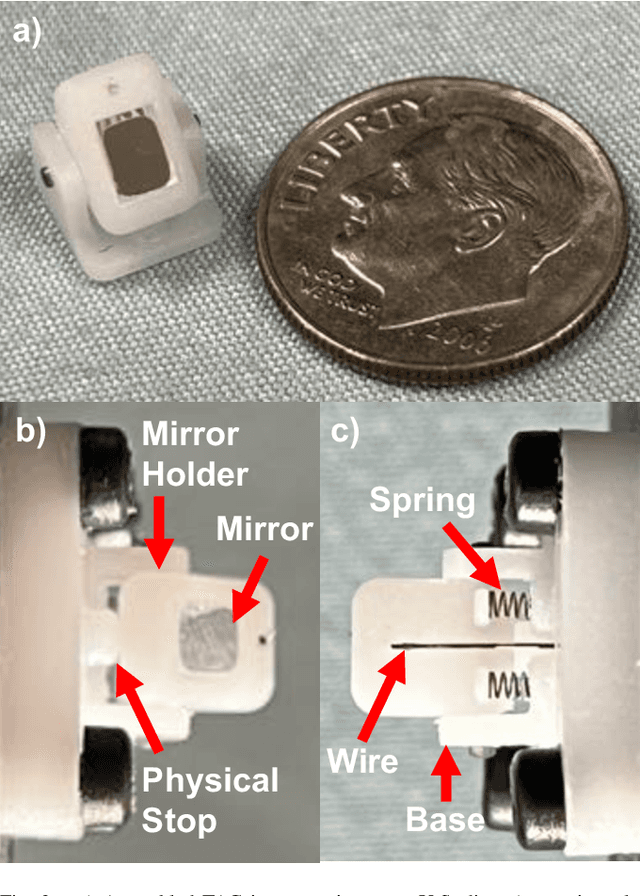

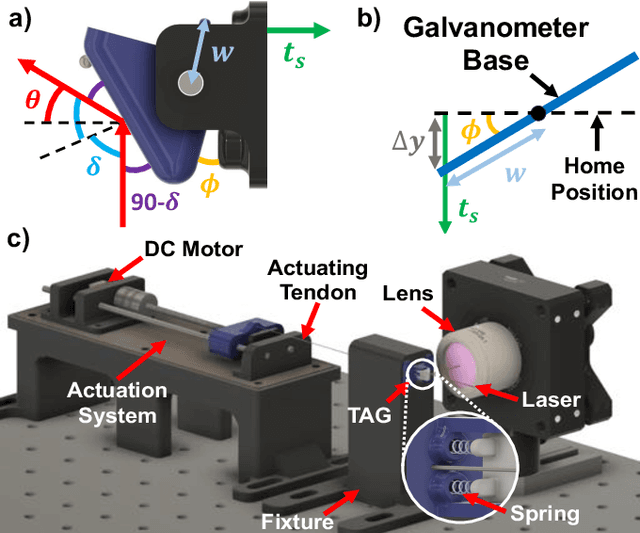

Towards the Development of a Tendon-Actuated Galvanometer for Endoscopic Surgical Laser Scanning

Jun 05, 2024

Abstract:There is a need for precision pathological sensing, imaging, and tissue manipulation in neurosurgical procedures, such as brain tumor resection. Precise tumor margin identification and resection can prevent further growth and protect critical structures. Surgical lasers with small laser diameters and steering capabilities can allow for new minimally invasive procedures by traversing through complex anatomy, then providing energy to sense, visualize, and affect tissue. In this paper, we present the design of a small-scale tendon-actuated galvanometer (TAG) that can serve as an end-effector tool for a steerable surgical laser. The galvanometer sensor design, fabrication, and kinematic modeling are presented and derived. It can accurately rotate up to 30.14 degrees (or a laser reflection angle of 60.28 degrees). A kinematic mapping of input tendon stroke to output galvanometer angle change and a forward-kinematics model relating the end of the continuum joint to the laser end-point are derived and validated.
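The two angles quoted in the abstract are related by the law of reflection: rotating a flat mirror by an angle turns the reflected beam by twice that angle. A minimal check, with a hypothetical function name chosen only for this illustration:

```python
def reflected_beam_deflection_deg(mirror_rotation_deg: float) -> float:
    """Rotating a flat mirror by theta deflects the reflected beam by 2 * theta."""
    return 2.0 * mirror_rotation_deg

print(reflected_beam_deflection_deg(30.14))  # 60.28
```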

An FBG-based Stiffness Estimation Sensor for In-vivo Diagnostics

May 30, 2024

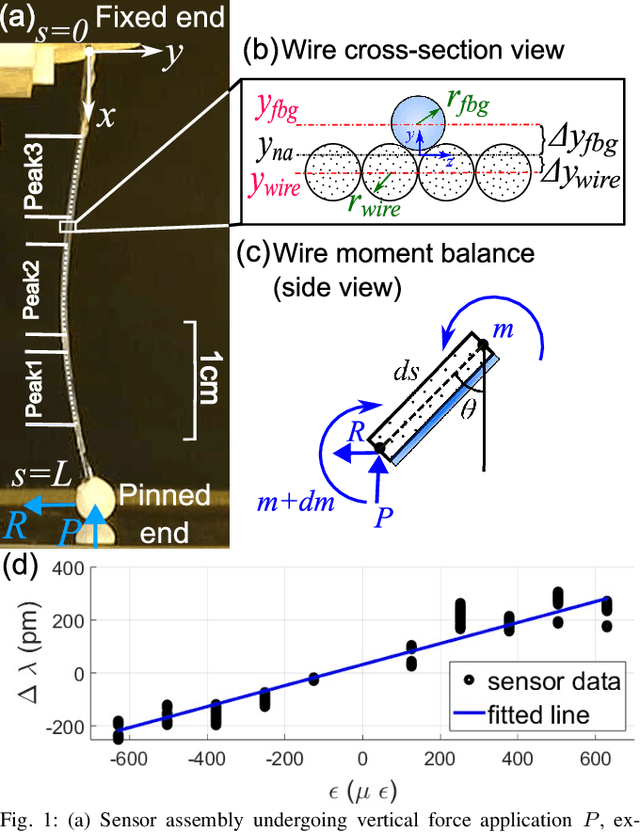

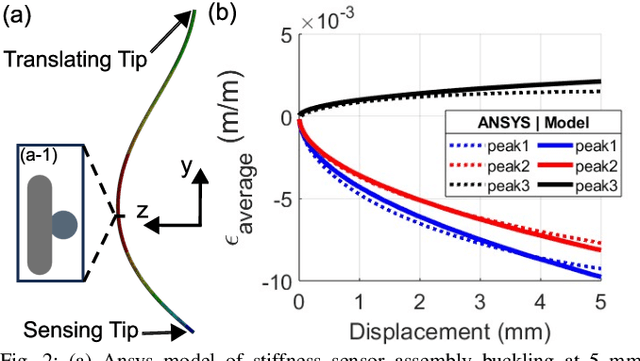

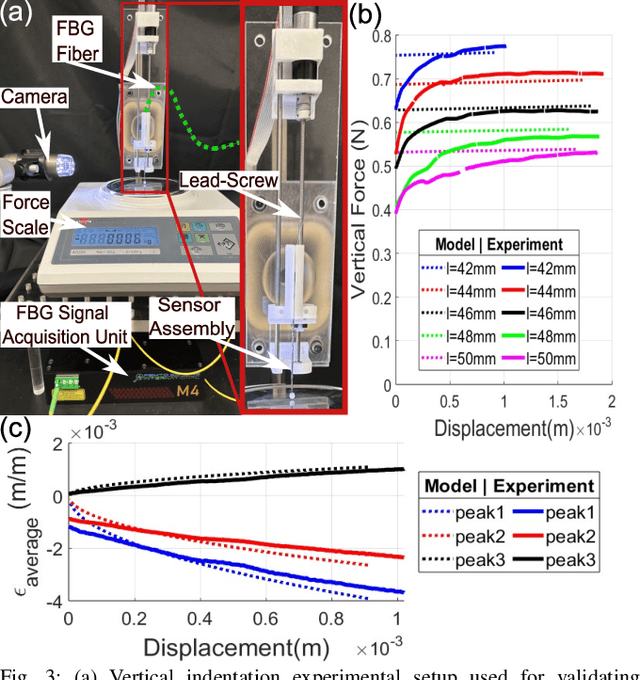

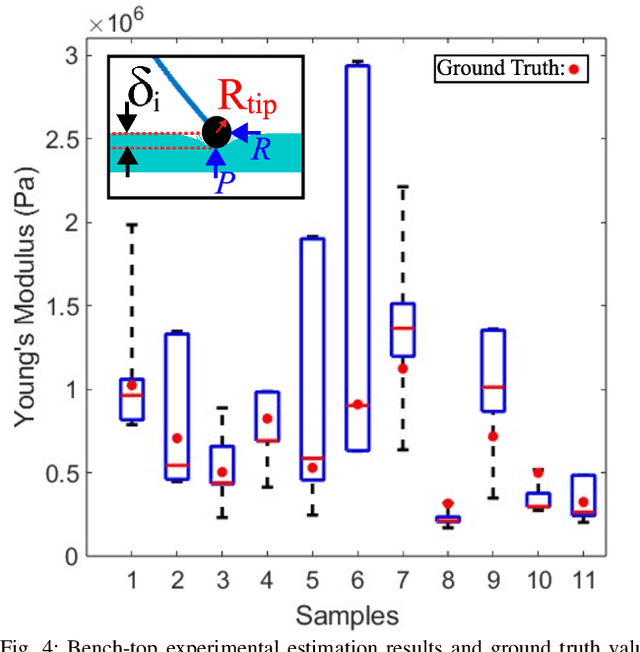

Abstract:In-vivo tissue stiffness identification can be useful in pulmonary fibrosis diagnostics and minimally invasive tumor identification, among many other applications. In this work, we propose a palpation-based method for tissue stiffness estimation that uses a sensorized beam buckled onto the surface of a tissue. Fiber Bragg Gratings (FBGs) are used in our sensor as a shape-estimation modality to get real-time beam shape, even while the device is not visually monitored. A mechanical model is developed to predict the behavior of a buckling beam and is validated using finite element analysis and bench-top testing with phantom tissue samples (made of PDMS and PA-Gel). Bench-top estimations were conducted and the results were compared with the actual stiffness values. The mean RMSE and standard deviation relative to the actual stiffnesses were 413.86 kPa and 313.82 kPa, respectively. Estimations for softer samples were relatively closer to the actual values. Ultimately, we used the stiffness sensor within a mock concentric tube robot as a demonstration of in-vivo sensor feasibility. Bench-top trials with and without the robot demonstrate the effectiveness of this unique sensing modality in in-vivo applications.
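One plausible reading of the reported error metrics is a per-sample RMSE across repeated trials, followed by the mean and standard deviation of those RMSE values across samples. The sketch below follows that reading. The stiffness values are invented for illustration, the function name `rmse` is hypothetical, and the choice of the sample standard deviation is an assumption; none of this is taken from the paper.

```python
import math
import statistics

def rmse(estimates, actual):
    """Root-mean-square error of repeated estimates against one known value."""
    if not estimates:
        raise ValueError("need at least one estimate")
    return math.sqrt(sum((e - actual) ** 2 for e in estimates) / len(estimates))

# Hypothetical data (kPa): actual stiffness -> repeated bench-top estimates.
trials = {
    100.0: [110.0, 95.0, 105.0],
    500.0: [650.0, 400.0, 560.0],
}

per_sample = [rmse(est, actual) for actual, est in trials.items()]
mean_rmse = statistics.mean(per_sample)
spread = statistics.stdev(per_sample)  # sample standard deviation (assumption)
print(f"mean RMSE = {mean_rmse:.2f} kPa, std = {spread:.2f} kPa")
```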

Surface-Enhanced Raman Spectroscopy and Transfer Learning Toward Accurate Reconstruction of the Surgical Zone

Jan 16, 2024

Abstract:Raman spectroscopy, a photonic modality based on the inelastic backscattering of coherent light, is a valuable asset to the intraoperative sensing space, offering non-ionizing potential and highly-specific molecular fingerprint-like spectroscopic signatures that can be used for diagnosis of pathological tissue in the dynamic surgical field. Though Raman suffers from weakness in intensity, Surface-Enhanced Raman Spectroscopy (SERS), which uses metal nanostructures to amplify Raman signals, can achieve detection sensitivities that rival traditional photonic modalities. In this study, we outline a robotic Raman system that can reliably pinpoint the location and boundaries of a tumor embedded in healthy tissue, modeled here as a tissue-mimicking phantom with selectively infused Gold Nanostar regions. Further, due to the relative dearth of collected biological SERS or Raman data, we implement transfer learning to achieve 100% validation classification accuracy for Gold Nanostars compared to Control Agarose, thus providing a proof-of-concept for Raman-based deep learning training pipelines. We reconstruct a surgical field of 30x60mm in 10.2 minutes, and achieve 98.2% accuracy, preserving relative measurements between features in the phantom. We also achieve an 84.3% Intersection-over-Union score, which is the extent of overlap between the ground truth and predicted reconstructions. Lastly, we also demonstrate that the Raman system and classification algorithm do not discern based on sample color, but instead on presence of SERS agents. This study provides a crucial step in the translation of intelligent Raman systems in intraoperative oncological spaces.
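The Intersection-over-Union score mentioned in this abstract is the number of cells labeled positive in both maps divided by the number labeled positive in either. A minimal sketch on binary masks follows. The tiny masks and the function name are illustrative assumptions, not the study's data or code.

```python
def intersection_over_union(truth, pred):
    """IoU of two equally sized binary masks given as nested lists of 0/1."""
    inter = union = 0
    for row_t, row_p in zip(truth, pred):
        for t, p in zip(row_t, row_p):
            inter += 1 if (t and p) else 0
            union += 1 if (t or p) else 0
    # Two empty masks overlap perfectly by convention.
    return inter / union if union else 1.0

# Hypothetical 2x3 reconstruction: 1 marks a cell classified as SERS-positive.
truth = [[1, 1, 0],
         [0, 1, 0]]
pred = [[1, 0, 0],
        [0, 1, 1]]
print(intersection_over_union(truth, pred))  # 0.5
```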

Computer Vision for Increased Operative Efficiency via Identification of Instruments in the Neurosurgical Operating Room: A Proof-of-Concept Study

Dec 03, 2023

Abstract:Objectives: Computer vision (CV) is a field of artificial intelligence that enables machines to interpret and understand images and videos. CV has the potential to be of assistance in the operating room (OR) to track surgical instruments. We built a CV algorithm for identifying surgical instruments in the neurosurgical operating room as a potential solution for surgical instrument tracking and management to decrease surgical waste and opening of unnecessary tools. Methods: We collected 1660 images of 27 commonly used neurosurgical instruments. Images were labeled using the VGG Image Annotator and split into 80% training and 20% testing sets in order to train a U-Net Convolutional Neural Network using 5-fold cross validation. Results: Our U-Net achieved a tool identification accuracy of 80-100% when distinguishing 25 classes of instruments, with 19/25 classes having accuracy over 90%. The model performance was not adequate for subclassifying Adson, Gerald, and DeBakey forceps, which had accuracies of 60-80%. Conclusions: We demonstrated the viability of using machine learning to accurately identify surgical instruments. Instrument identification could help optimize surgical tray packing, decrease tool usage and waste, decrease incidence of instrument misplacement events, and assist in timing of routine instrument maintenance. More training data will be needed to increase accuracy across all surgical instruments that would appear in a neurosurgical operating room. Such technology could be used to establish which tools are truly needed in each type of operation, allowing surgeons across the world to do more with less.

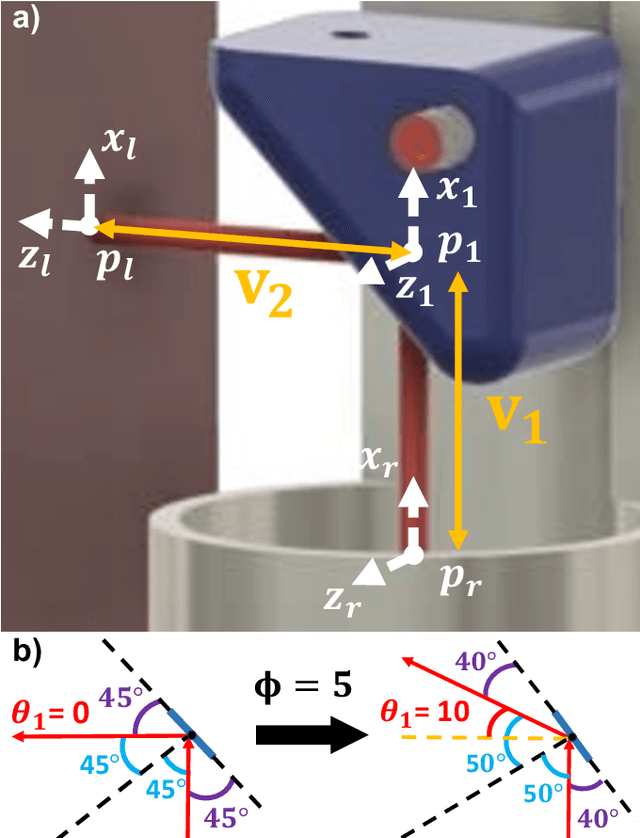

Incident Angle Study for Designing an Endoscopic Tool for Intraoperative Brain Tumor Detection

Nov 07, 2023

Abstract:In neurosurgical procedures, maximizing the resection of tumor tissue while avoiding healthy tissue is of paramount importance and is difficult due to many factors, such as surrounding eloquent brain. Swiftly identifying tumor tissue for removal could improve surgical outcomes. The TumorID is a laser-induced fluorescence spectroscopy device that utilizes endogenous fluorophores such as NADH and FAD to detect tumor regions. With the goal of creating an endoscopic tool for intraoperative tumor detection in mind, a study of the TumorID was conducted to assess how the angle of incidence (AoI) affects the collected spectral response of the scanned tumor. For this study, flat and convex NADH/FAD gellan gum phantoms were scanned at various AoI (a range of 36 degrees) to observe the spectral behavior. Results showed that the spectral signature did not change significantly across flat and convex phantoms, and the area under the curve (AUC) values calculated for each spectrum had a standard deviation of 0.02 and 0.01 for flat and convex phantoms, respectively. Therefore, the study showed that AoI will affect the intensity of the spectral response, but the peaks representative of the endogenous fluorophores are still observable and similar. Future work includes conducting an AoI study with a longer working-distance lens, then incorporating said lens to design an endoscopic, intraoperative tumor detection device for minimally invasive surgery, with first applications in endonasal endoscopic approaches for pituitary tumors.

Robotic Laser Orientation Planning with a 3D Data-driven Method

Jan 05, 2022

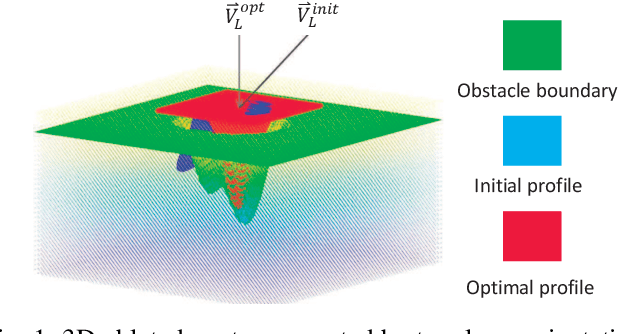

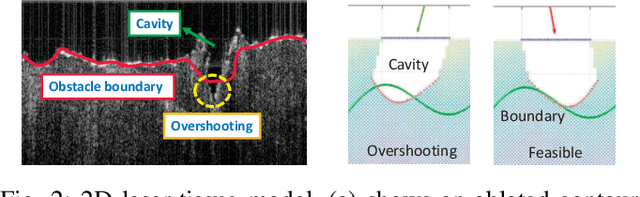

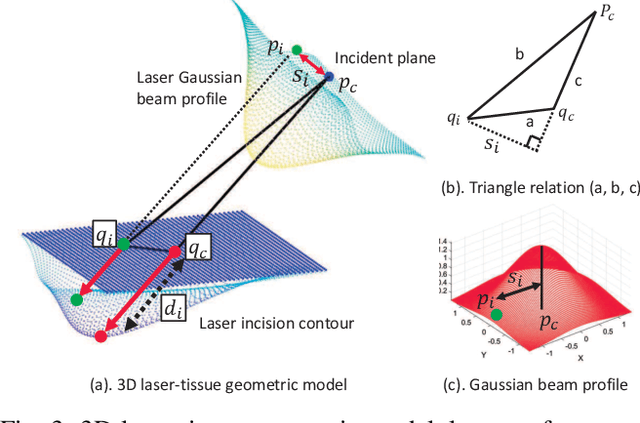

Abstract:This paper addresses the problem of robot-controlled laser orientation to minimize errant overcutting of healthy tissue during pathological tissue resection. Laser scalpels have been widely used in surgery to remove pathological tissue targets such as tumors or other lesions. However, different laser orientations can create various tissue ablation cavities, and incorrect incident angles can cause over-irradiation of healthy tissue that should not be ablated. This work aims to formulate an optimization problem to find the optimal laser orientation in order to minimize the possibility of excessive laser-induced tissue ablation. We first develop a 3D data-driven geometric model to predict the shape of the tissue cavity after a single laser ablation. By modelling the boundary between the target and non-target tissue regions as an obstacle boundary, the determination of an optimal orientation is converted to a collision-minimization problem. The goal of this optimization formulation is to maintain the distance between the ablated contour and the obstacle boundary, and the problem is solved by projected gradient descent. Simulation experiments were conducted, and the results validated the proposed method under various obstacle shapes and different initial incident angles.
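Projected gradient descent, the solver named in this abstract, alternates a gradient step with a projection back onto the feasible set. The sketch below shows the generic pattern only. The quadratic cost, the box bounds standing in for admissible orientation angles, and the function names are all assumptions for illustration and do not reproduce the paper's cavity model or obstacle constraint.

```python
def projected_gradient_descent(grad, project, x0, step=0.1, iters=200):
    """Repeat: take a gradient step, then project back onto the feasible set."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = project([xi - step * gi for xi, gi in zip(x, g)])
    return x

def clip_to_box(lo, hi):
    """Projection onto the box [lo, hi]^n, e.g. bounded orientation angles."""
    return lambda x: [min(max(xi, lo), hi) for xi in x]

# Hypothetical cost f(x) = (x0 - 2)^2 + (x1 + 0.5)^2 with its gradient.
def grad_f(x):
    return [2.0 * (x[0] - 2.0), 2.0 * (x[1] + 0.5)]

solution = projected_gradient_descent(grad_f, clip_to_box(-1.0, 1.0), [0.0, 0.0])
print(solution)
```

In this toy problem the unconstrained minimizer (2, -0.5) lies outside the box in its first coordinate, so the projection holds that coordinate at the bound 1 while the second coordinate converges freely to -0.5.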
