Weston Ross

Department of Neurosurgery, Duke University School of Medicine, Durham, NC, USA

Surface-Enhanced Raman Spectroscopy and Transfer Learning Toward Accurate Reconstruction of the Surgical Zone

Jan 16, 2024

Abstract: Raman spectroscopy, a photonic modality based on the inelastic backscattering of coherent light, is a valuable asset to the intraoperative sensing space, offering non-ionizing potential and highly specific molecular fingerprint-like spectroscopic signatures that can be used for diagnosis of pathological tissue in the dynamic surgical field. Though Raman suffers from weakness in intensity, Surface-Enhanced Raman Spectroscopy (SERS), which uses metal nanostructures to amplify Raman signals, can achieve detection sensitivities that rival traditional photonic modalities. In this study, we outline a robotic Raman system that can reliably pinpoint the location and boundaries of a tumor embedded in healthy tissue, modeled here as a tissue-mimicking phantom with selectively infused Gold Nanostar regions. Further, due to the relative dearth of collected biological SERS or Raman data, we implement transfer learning to achieve 100% validation classification accuracy for Gold Nanostars compared to Control Agarose, thus providing a proof-of-concept for Raman-based deep learning training pipelines. We reconstruct a surgical field of 30 × 60 mm in 10.2 minutes and achieve 98.2% accuracy, preserving relative measurements between features in the phantom. We also achieve an 84.3% Intersection-over-Union score, which measures the extent of overlap between the ground truth and predicted reconstructions. Lastly, we demonstrate that the Raman system and classification algorithm do not discern based on sample color, but instead on the presence of SERS agents. This study provides a crucial step in the translation of intelligent Raman systems in intraoperative oncological spaces.
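The Intersection-over-Union score mentioned in this abstract can be illustrated with a minimal sketch. The function name `intersection_over_union` and the two toy grids are assumptions made for illustration; they are not the phantom data or the code used in the study.

```python
def intersection_over_union(truth, pred):
    """IoU of two equally sized binary grids (lists of lists of 0/1)."""
    same_rows = len(truth) == len(pred)
    if not same_rows or any(len(a) != len(b) for a, b in zip(truth, pred)):
        raise ValueError("masks must have the same shape")
    inter = 0  # cells marked positive in both grids
    union = 0  # cells marked positive in at least one grid
    for row_t, row_p in zip(truth, pred):
        for t, p in zip(row_t, row_p):
            inter += 1 if (t and p) else 0
            union += 1 if (t or p) else 0
    # Two empty masks overlap perfectly by convention.
    return inter / union if union else 1.0


# Hypothetical 3 x 4 ground-truth and predicted reconstructions.
truth = [[0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
pred = [[0, 1, 1, 1],
        [0, 0, 1, 0],
        [0, 0, 0, 0]]

iou = intersection_over_union(truth, pred)
print(f"IoU = {iou:.2f}")  # 3 shared cells / 5 cells in the union
```

Because IoU ignores cells that are negative in both grids, it is usually lower than a cell-wise accuracy computed on the same pair of masks.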

Computer Vision for Increased Operative Efficiency via Identification of Instruments in the Neurosurgical Operating Room: A Proof-of-Concept Study

Dec 03, 2023

Abstract: Objectives: Computer vision (CV) is a field of artificial intelligence that enables machines to interpret and understand images and videos. CV has the potential to assist in the operating room (OR) by tracking surgical instruments. We built a CV algorithm for identifying surgical instruments in the neurosurgical operating room as a potential solution for surgical instrument tracking and management to decrease surgical waste and the opening of unnecessary tools. Methods: We collected 1660 images of 27 commonly used neurosurgical instruments. Images were labeled using the VGG Image Annotator and split into 80% training and 20% testing sets in order to train a U-Net Convolutional Neural Network using 5-fold cross-validation. Results: Our U-Net achieved a tool identification accuracy of 80-100% when distinguishing 25 classes of instruments, with 19/25 classes having accuracy over 90%. The model performance was not adequate for subclassifying Adson, Gerald, and DeBakey forceps, which had accuracies of 60-80%. Conclusions: We demonstrated the viability of using machine learning to accurately identify surgical instruments. Instrument identification could help optimize surgical tray packing, decrease tool usage and waste, decrease the incidence of instrument misplacement events, and assist in timing routine instrument maintenance. More training data will be needed to increase accuracy across all surgical instruments that would appear in a neurosurgical operating room. Such technology could be used to establish which tools are truly needed in each type of operation, allowing surgeons across the world to do more with less.
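One possible reading of the data split described in the Methods is a held-out 20% test set, with 5-fold cross-validation run on the remaining 80%. The sketch below only partitions indices under that assumption; the abstract does not spell out the exact protocol, and `split_and_fold` is a hypothetical helper, not the authors' code.

```python
import random


def split_and_fold(n_items, test_fraction=0.2, n_folds=5, seed=0):
    """Return (test_indices, [(fit_indices, val_indices), ...])."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # seeded, so the split is repeatable

    n_test = round(n_items * test_fraction)
    test, train = indices[:n_test], indices[n_test:]

    # Deal the training indices into n_folds nearly equal folds.
    folds = [train[i::n_folds] for i in range(n_folds)]

    splits = []
    for k in range(n_folds):
        val = folds[k]
        fit = [i for j, fold in enumerate(folds) if j != k for i in fold]
        splits.append((fit, val))
    return test, splits


# 1660 is the image count reported in the abstract.
test_idx, cv_splits = split_and_fold(1660)
print(len(test_idx), [len(val) for _, val in cv_splits])
```

Each image index lands in exactly one of the test set or the five validation folds, so no test image is seen during cross-validation.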

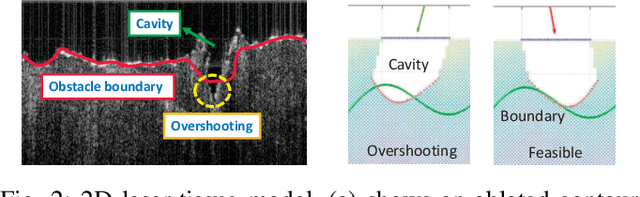

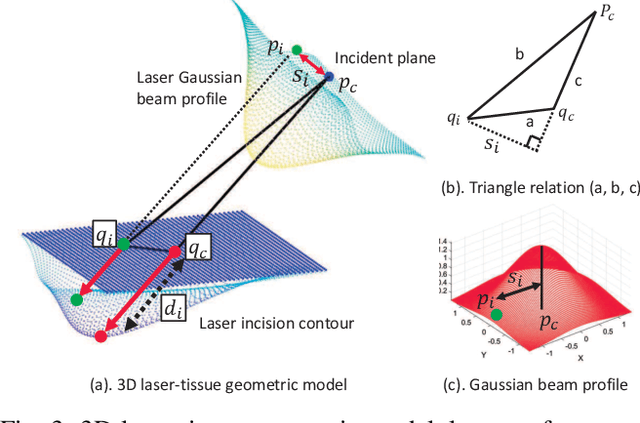

3D Laser-and-tissue Agnostic Data-driven Method for Robotic Laser Surgical Planning

May 02, 2023

Abstract: In robotic laser surgery, shape prediction of a one-shot ablation cavity is an important problem for minimizing errant overcutting of healthy tissue during the course of pathological tissue resection and precise tumor removal. Since it is difficult to physically model the laser-tissue interaction due to the variety of optical tissue properties, the complicated process of heat transfer, and uncertainty about the chemical reaction, we propose a 3D cavity prediction model based on an entirely data-driven method without any assumptions about laser settings and tissue properties. Based on the cavity prediction model, we formulate a novel robotic laser planning problem to determine the optimal laser incident configuration, which aims to create a cavity that aligns with the surface target (e.g., tumor, pathological tissue). To solve the one-shot ablation cavity prediction problem, we model the 3D geometric relation between the tissue surface and the laser energy profile as a non-linear regression problem that can be represented by a single-layer perceptron (SLP) network. The SLP network is encoded in a novel kinematic model to predict the shape of the post-ablation cavity with an arbitrary laser input. To estimate the SLP network parameters, we formulate a dataset of one-shot laser-phantom cavities reconstructed from optical coherence tomography (OCT) B-scan images for the data-driven modelling. To verify the method, the learned cavity prediction model is applied to solve a simplified robotic laser planning problem modelled as a surface alignment error minimization problem. The initial results report (91.1 ± 3.0)% 3D-cavity-Intersection-over-Union (3D-cavity-IoU) for the 3D cavity prediction and an average of 97.9% success rate for the simulated surface alignment experiments.
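To make the idea of non-linear regression with a single-layer perceptron concrete, here is a generic sketch of one tanh unit fitted by stochastic gradient descent. It is not the kinematic model from the paper: the two input features, the tanh activation, the synthetic data, and the names `predict`, `train_slp`, and `mean_squared_error` are all assumptions chosen for illustration.

```python
import math
import random


def predict(w, b, x):
    """Single unit: tanh of a weighted sum of the inputs plus a bias."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_slp(samples, lr=0.1, epochs=200, seed=0):
    """Fit y ~ tanh(w . x + b) by SGD on the squared error."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(len(samples[0][0]))]
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            p = predict(w, b, x)
            # Gradient of 0.5 * (p - y)^2 w.r.t. the pre-activation,
            # using tanh'(z) = 1 - tanh(z)^2.
            g = (p - y) * (1.0 - p * p)
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


def mean_squared_error(samples, w, b):
    return sum((predict(w, b, x) - y) ** 2 for x, y in samples) / len(samples)


# Synthetic targets generated from known (hypothetical) parameters.
true_w, true_b = [0.8, -0.5], 0.2
grid = [i / 2.0 - 1.0 for i in range(5)]  # -1.0, -0.5, 0.0, 0.5, 1.0
samples = [((u, v), predict(true_w, true_b, (u, v))) for u in grid for v in grid]

baseline = mean_squared_error(samples, [0.0, 0.0], 0.0)  # untrained unit
w, b = train_slp(samples)
fitted = mean_squared_error(samples, w, b)
print(f"baseline MSE {baseline:.4f}, fitted MSE {fitted:.6f}")
```

In a data-driven setting such as the one described, the synthetic samples would be replaced by measured input-output pairs, here presumably derived from the OCT-reconstructed cavities.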

Robotic Laser Orientation Planning with a 3D Data-driven Method

Jan 05, 2022

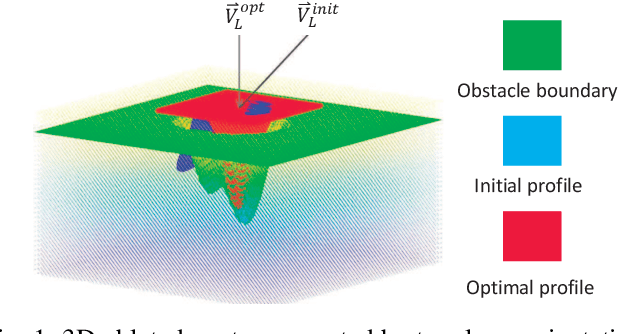

Abstract: This paper addresses the problem of robot-controlled laser orientation to minimize errant overcutting of healthy tissue during the course of pathological tissue resection. Laser scalpels have been widely used in surgery to remove pathological tissue targets such as tumors or other lesions. However, different laser orientations can create various tissue ablation cavities, and incorrect incident angles can cause over-irradiation of healthy tissue that should not be ablated. This work aims to formulate an optimization problem to find the optimal laser orientation in order to minimize the possibility of excessive laser-induced tissue ablation. We first develop a 3D data-driven geometric model to predict the shape of the tissue cavity after a single laser ablation. By modelling the target and non-target tissue regions with an obstacle boundary, the determination of an optimal orientation is converted to a collision-minimization problem. The goal of this optimization formulation is to maintain the distance between the ablated contour and the obstacle boundary, and the problem is solved by projected gradient descent. Simulation experiments were conducted, and the results validated the proposed method under various obstacle shapes and different initial incident angles.
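Projected gradient descent, named in this abstract as the solver, alternates a gradient step with a projection back onto the feasible set. The sketch below shows the generic scheme on a toy quadratic cost with box constraints; the cost, the bounds, and the helper names `projected_gradient_descent` and `clip_to_box` are assumptions and do not reproduce the paper's collision-minimization objective.

```python
def projected_gradient_descent(grad, project, x0, step=0.1, iters=200):
    """Repeat: take a gradient step, then project onto the feasible set."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = project([xi - step * gi for xi, gi in zip(x, g)])
    return x


def clip_to_box(lower, upper):
    """Projection onto an axis-aligned box is element-wise clipping."""
    def project(x):
        return [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lower, upper)]
    return project


# Toy cost f(x) = (x0 - 2)^2 + (x1 + 1)^2, whose unconstrained minimum
# (2, -1) lies outside the hypothetical feasible box below.
def grad(x):
    return [2.0 * (x[0] - 2.0), 2.0 * (x[1] + 1.0)]


project = clip_to_box([-1.0, -0.5], [1.0, 0.5])
x_opt = projected_gradient_descent(grad, project, [0.0, 0.0])
print(x_opt)  # the iterate settles on the box corner nearest (2, -1)
```

For an orientation problem, the box could stand in for admissible incident-angle ranges, with the projection keeping every iterate physically feasible.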
