Patrick Codd

3D Laser-and-tissue Agnostic Data-driven Method for Robotic Laser Surgical Planning

May 02, 2023

Abstract: In robotic laser surgery, predicting the shape of a one-shot ablation cavity is important for minimizing errant overcutting of healthy tissue during pathological tissue resection and precise tumor removal. The laser-tissue interaction is difficult to model physically because of the variety of optical tissue properties, the complicated process of heat transfer, and uncertainty about the chemical reactions involved. We therefore propose a 3D cavity prediction model based on an entirely data-driven method that makes no assumptions about laser settings or tissue properties. Based on the cavity prediction model, we formulate a novel robotic laser planning problem that determines the optimal laser incident configuration, with the aim of creating a cavity that aligns with the surface target (e.g., a tumor or pathological tissue). To solve the one-shot ablation cavity prediction problem, we model the 3D geometric relation between the tissue surface and the laser energy profile as a non-linear regression problem that can be represented by a single-layer perceptron (SLP) network. The SLP network is encoded in a novel kinematic model to predict the shape of the post-ablation cavity for an arbitrary laser input. To estimate the SLP network parameters, we build a dataset of one-shot laser-phantom cavities reconstructed from optical coherence tomography (OCT) B-scan images for the data-driven modelling. To verify the method, the learned cavity prediction model is applied to a simplified robotic laser planning problem, modelled as a surface alignment error minimization problem. The initial results report a 3D-cavity-Intersection-over-Union (3D-cavity-IoU) of (91.1 ± 3.0)% for the 3D cavity prediction and an average success rate of 97.9% for the simulated surface alignment experiments.
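The 3D-cavity-IoU reported above is an intersection-over-union computed over volumes. As a minimal sketch, assuming that both the predicted and the OCT-reconstructed cavities have been voxelized onto a common grid and stored as sets of occupied voxel indices (a representation assumed here; the abstract does not specify one), the metric reduces to a ratio of set sizes. The helper `box` is hypothetical and exists only to build toy cavities.

```python
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]


def cavity_iou(predicted: Iterable[Voxel], measured: Iterable[Voxel]) -> float:
    """3D intersection-over-union of two voxelized cavities.

    Each cavity is the set of (i, j, k) indices it occupies. Assumes both
    cavities were voxelized on the same grid (hypothetical setup).
    """
    pred: Set[Voxel] = set(predicted)
    meas: Set[Voxel] = set(measured)
    union = pred | meas
    if not union:
        # Two empty cavities agree trivially; define IoU as 1.0.
        return 1.0
    return len(pred & meas) / len(union)


def box(x0: int, x1: int, y0: int, y1: int, z0: int, z1: int) -> Set[Voxel]:
    """Hypothetical helper: all voxels in the half-open box [x0,x1)x[y0,y1)x[z0,z1)."""
    return {(i, j, k) for i in range(x0, x1) for j in range(y0, y1) for k in range(z0, z1)}


if __name__ == "__main__":
    # Two 4x4x2 blocks offset by one voxel along x: 24 shared voxels out of 40 total.
    a = box(0, 4, 0, 4, 0, 2)
    b = box(1, 5, 0, 4, 0, 2)
    print(f"{cavity_iou(a, b):.3f}")  # prints 0.600
```

Voxel sets keep the sketch dependency-free; with large grids, boolean occupancy arrays would be the more efficient choice.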

Do Public Datasets Assure Unbiased Comparisons for Registration Evaluation?

Mar 20, 2020

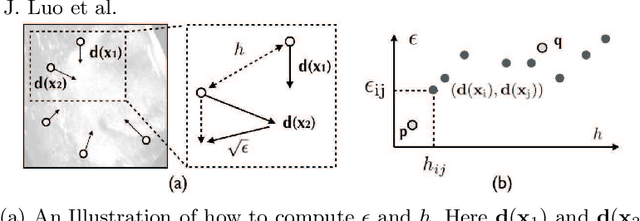

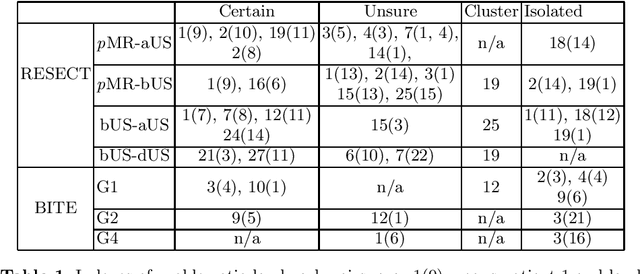

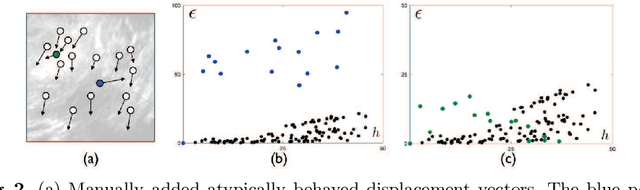

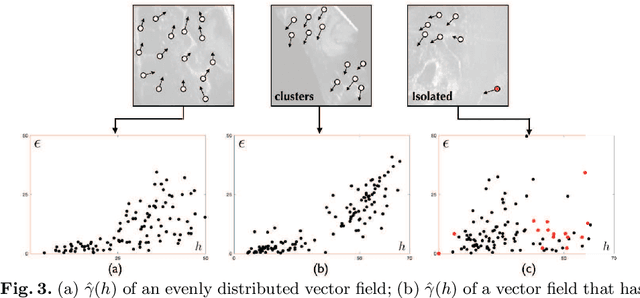

Abstract: With the increasing availability of new image registration approaches, unbiased evaluation is increasingly needed so that clinicians can choose the approaches best suited to their applications. Current evaluations typically rely on landmarks in manually annotated datasets, so the quality of the annotations is crucial for unbiased comparisons. Even though most data providers claim to apply quality control to their datasets, an objective third-party screening can reassure intended users. In this study, we use the variogram to screen the manually annotated landmarks in two datasets used to benchmark registration in image-guided neurosurgery. The variogram provides an intuitive 2D representation of the spatial characteristics of annotated landmarks. Using variograms, we identified potentially problematic cases and had them examined by experienced radiologists. We found that (1) a small number of annotations may have fiducial localization errors, and (2) the landmark distribution in some cases is not ideal for fair comparisons. If unresolved, both findings could introduce bias into registration evaluation.
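For readers unfamiliar with the variogram, here is a minimal sketch of the standard empirical semivariogram applied to landmarks. It assumes, for illustration only, that each landmark carries a displacement vector (e.g., its position in one image minus its matched position in the other); the exact quantity and binning used in the study may differ. Every pair of landmarks contributes one point to the "variogram cloud": their separation distance (lag) against half the squared difference of their displacements. Averaging the cloud within lag bins gives the empirical variogram. The function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Vec = Sequence[float]


def variogram_cloud(positions: Sequence[Vec], displacements: Sequence[Vec]) -> List[Tuple[float, float]]:
    """Return (lag, semivariance) for every pair of landmarks.

    lag = Euclidean distance between the two landmark positions;
    semivariance = 0.5 * squared norm of the difference of their displacements.
    """
    if len(positions) != len(displacements):
        raise ValueError("need one displacement per landmark")
    cloud = []
    for i, j in combinations(range(len(positions)), 2):
        lag = math.dist(positions[i], positions[j])
        diff_sq = sum((a - b) ** 2 for a, b in zip(displacements[i], displacements[j]))
        cloud.append((lag, 0.5 * diff_sq))
    return cloud


def empirical_variogram(cloud: List[Tuple[float, float]], bin_width: float) -> List[Tuple[float, float, int]]:
    """Average the cloud in lag bins; returns (bin_center, mean_gamma, n_pairs)."""
    if bin_width <= 0:
        raise ValueError("bin_width must be positive")
    bins: Dict[int, List[float]] = {}
    for lag, gamma in cloud:
        bins.setdefault(int(lag // bin_width), []).append(gamma)
    return [((k + 0.5) * bin_width, sum(g) / len(g), len(g)) for k, g in sorted(bins.items())]


if __name__ == "__main__":
    # Toy data: the middle landmark moves differently from its near neighbour.
    pos = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
    disp = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
    cloud = variogram_cloud(pos, disp)
    print(empirical_variogram(cloud, 2.0))  # prints [(1.0, 0.5, 1), (5.0, 0.25, 2)]
```

Because neighbouring landmarks in a smoothly deforming tissue would usually be expected to move similarly, a pair with a short lag but a large semivariance stands out in the cloud. This is one plausible way such a plot can point to a landmark worth re-checking.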
