Yaqiong Li

DeMod: A Holistic Tool with Explainable Detection and Personalized Modification for Toxicity Censorship

Nov 04, 2024

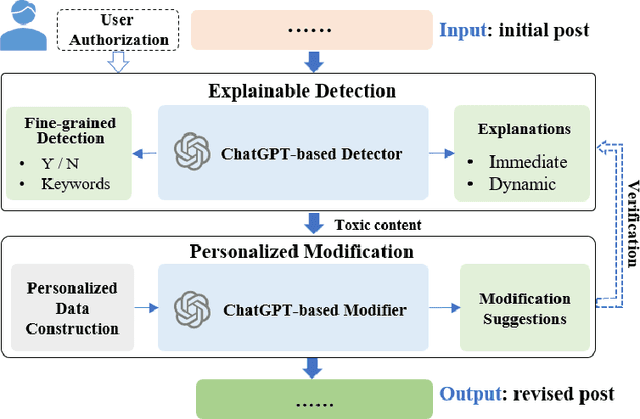

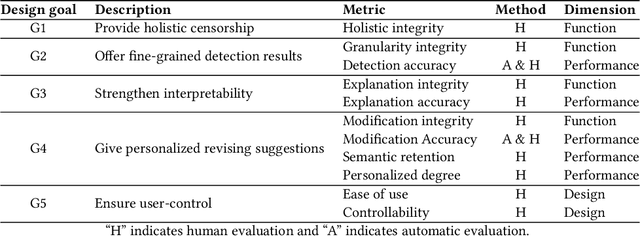

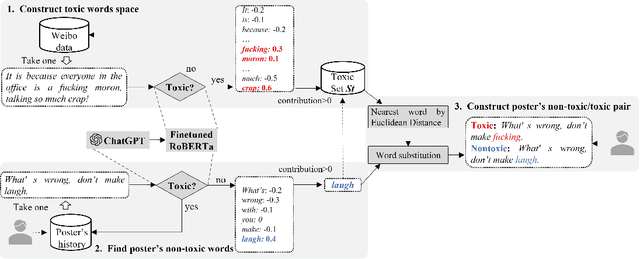

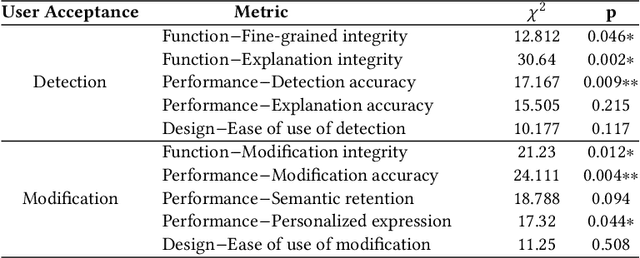

Abstract: Although there are automated approaches and tools that support toxicity censorship for social posts, most of them focus on detection. Toxicity censorship is a complex process in which detection is only the first task, and a user can have further needs such as understanding the rationale and modifying the content. To address this, we conduct a needfinding study to investigate people's diverse needs in toxicity censorship and then build a ChatGPT-based censorship tool named DeMod accordingly. DeMod is equipped with explainable Detection and personalized Modification features, providing fine-grained detection results, detailed explanations, and personalized modification suggestions. We also implemented the tool and recruited 35 Weibo users for evaluation. The results indicate several strengths of DeMod, including rich functionality, accurate censorship, and ease of use. Based on the findings, we further propose several insights into the design of content censorship systems.

Federated Neural Nonparametric Point Processes

Oct 08, 2024

Abstract: Temporal point processes (TPPs) are effective for modeling event occurrences over time, but they struggle with sparse and uncertain events in federated systems, where privacy is a major concern. To address this, we propose *FedPP*, a Federated neural nonparametric Point Process model. On the client side, FedPP integrates neural embeddings into Sigmoidal Gaussian Cox Processes (SGCPs), a flexible and expressive class of TPPs, allowing it to generate highly flexible intensity functions that capture client-specific event dynamics and uncertainties while efficiently summarizing historical records. For global aggregation, FedPP introduces a divergence-based mechanism that communicates the distributions of SGCPs' kernel hyperparameters between the server and clients, while keeping client-specific parameters local to ensure privacy and personalization. FedPP effectively captures event uncertainty and sparsity, and extensive experiments demonstrate its superior performance in federated settings, particularly with KL divergence and Wasserstein distance-based global aggregation.
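To make the SGCP intensity mentioned in this abstract concrete, here is a minimal sketch of the usual sigmoidal form, in which the intensity is an upper bound multiplied by a sigmoid of a latent function, together with a thinning sampler. It is not FedPP itself: the latent function `g_example` is a fixed, hypothetical stand-in for a Gaussian process draw, and the names `sample_sgcp_events`, `lam_max`, and `horizon` are assumptions made for illustration.

```python
import math
import random


def sigmoid(x):
    """Logistic function mapping a real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sample_sgcp_events(g, lam_max, horizon, rng):
    """Sample event times on [0, horizon] by thinning.

    The target intensity is lam_max * sigmoid(g(t)), which never exceeds
    lam_max, so candidates from a homogeneous Poisson process with rate
    lam_max can be accepted with probability sigmoid(g(t)).
    """
    events = []
    t = 0.0
    while True:
        t += rng.expovariate(lam_max)  # next candidate arrival time
        if t > horizon:
            break
        if rng.random() < sigmoid(g(t)):  # keep with probability sigmoid(g(t))
            events.append(t)
    return events


def g_example(t):
    """Hypothetical latent function; a real SGCP would draw this from a GP."""
    return 2.0 * math.sin(t)


rng = random.Random(0)
events = sample_sgcp_events(g_example, lam_max=5.0, horizon=10.0, rng=rng)
print(len(events), "events sampled")
```

Because the sigmoid bounds the intensity by `lam_max`, the thinning step needs no numerical search for an upper bound, which is one reason this parameterisation is convenient.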

Smoothing Graphons for Modelling Exchangeable Relational Data

Feb 25, 2020

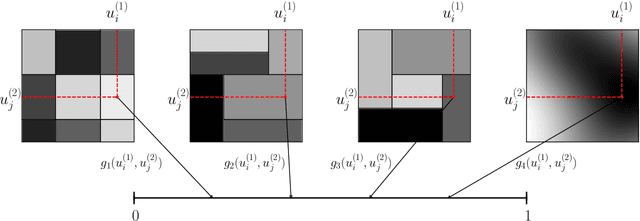

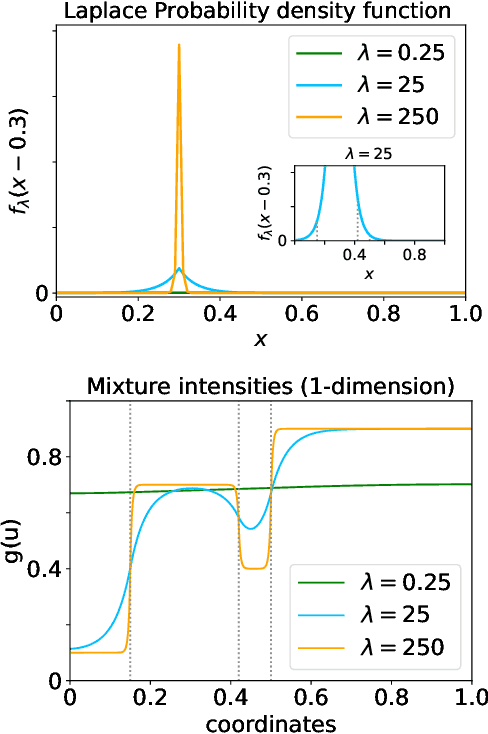

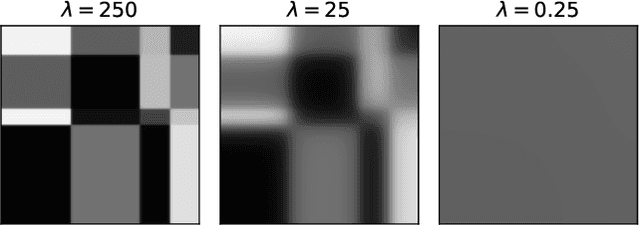

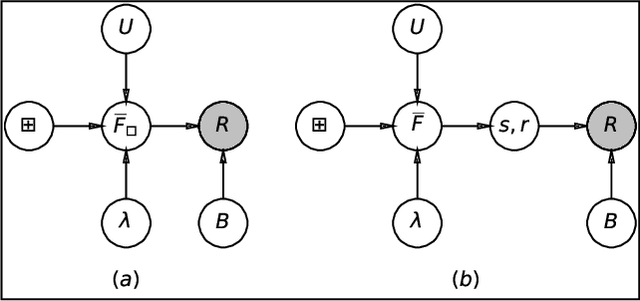

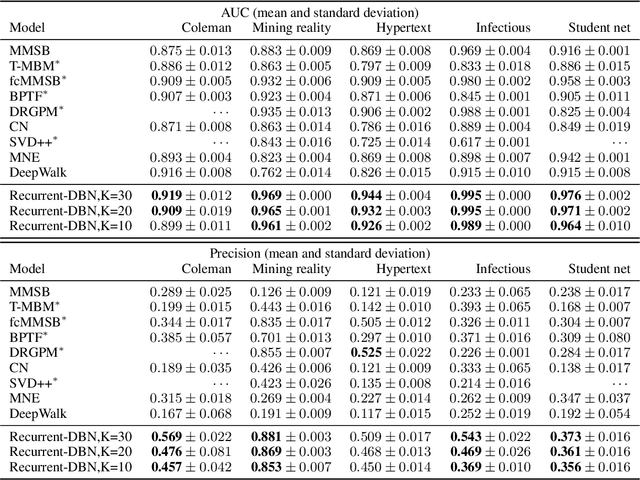

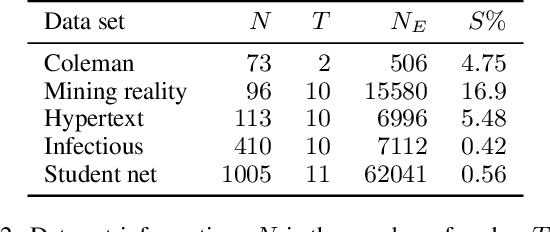

Abstract: Exchangeable relational data can be modelled within the framework of *graphon theory*. Most Bayesian methods for modelling exchangeable relational data can be attributed to this framework by exploiting different forms of graphons. However, the graphons adopted by existing Bayesian methods are either piecewise-constant functions, which are insufficiently flexible for accurate modelling of the relational data, or complicated continuous functions, which incur heavy computational costs for inference. In this work, we introduce a smoothing procedure to piecewise-constant graphons to form *smoothing graphons*, which permit continuous intensity values for describing relations without impractically increasing computational costs. In particular, we focus on the Bayesian Stochastic Block Model (SBM) and demonstrate how to adapt the piecewise-constant SBM graphon to the smoothed version. We first propose the Integrated Smoothing Graphon (ISG), which introduces one smoothing parameter to the SBM graphon to generate continuous relational intensity values. We then develop the Latent Feature Smoothing Graphon (LFSG), which improves on the ISG by introducing auxiliary hidden labels to decompose the calculation of the ISG intensity and enable efficient inference. Experimental results on real-world data sets validate the advantages of applying smoothing strategies to the Stochastic Block Model, demonstrating that smoothing graphons can greatly improve AUC and precision for link prediction without increasing computational complexity.
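The contrast between a piecewise-constant SBM graphon and a continuous one can be illustrated with a small sketch. The hard version below follows the standard SBM graphon definition. The smoothed version is only a generic soft-membership mixture chosen for illustration; it is not the ISG or LFSG construction from the paper, and `soft_membership`, `temperature`, and the example values are all assumptions.

```python
import math


def block_index(u, boundaries):
    """Return the index of the interval of [0, 1] that contains u."""
    for k in range(len(boundaries) - 1):
        if u < boundaries[k + 1]:
            return k
    return len(boundaries) - 2  # u == 1.0 falls in the last block


def sbm_graphon(u, v, boundaries, B):
    """Piecewise-constant SBM graphon: intensity depends only on the blocks."""
    return B[block_index(u, boundaries)][block_index(v, boundaries)]


def soft_membership(u, boundaries, temperature):
    """Hypothetical soft block weights: softmax over distance to block centres."""
    centres = [(boundaries[k] + boundaries[k + 1]) / 2
               for k in range(len(boundaries) - 1)]
    scores = [-abs(u - c) / temperature for c in centres]
    top = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def smoothed_graphon(u, v, boundaries, B, temperature):
    """Illustrative smoothing: mix block intensities by soft memberships."""
    pu = soft_membership(u, boundaries, temperature)
    pv = soft_membership(v, boundaries, temperature)
    return sum(pu[a] * B[a][b] * pv[b]
               for a in range(len(pu)) for b in range(len(pv)))


boundaries = [0.0, 0.5, 1.0]          # two equal-sized blocks
B = [[0.9, 0.1], [0.1, 0.8]]          # block-to-block intensities
hard_gap = abs(sbm_graphon(0.49, 0.49, boundaries, B)
               - sbm_graphon(0.51, 0.51, boundaries, B))
soft_gap = abs(smoothed_graphon(0.49, 0.49, boundaries, B, 0.1)
               - smoothed_graphon(0.51, 0.51, boundaries, B, 0.1))
print("jump across the block boundary:", hard_gap, "vs smoothed:", soft_gap)
```

The hard graphon jumps at the block boundary, while the soft mixture changes gradually; the cost of evaluating it stays proportional to the number of block pairs.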

Recurrent Dirichlet Belief Networks for Interpretable Dynamic Relational Data Modelling

Feb 24, 2020

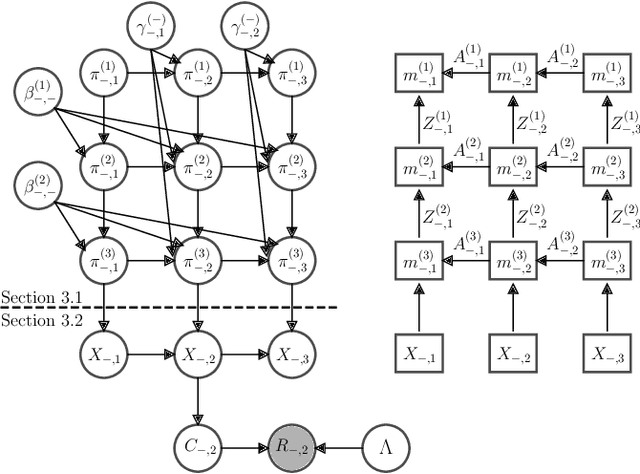

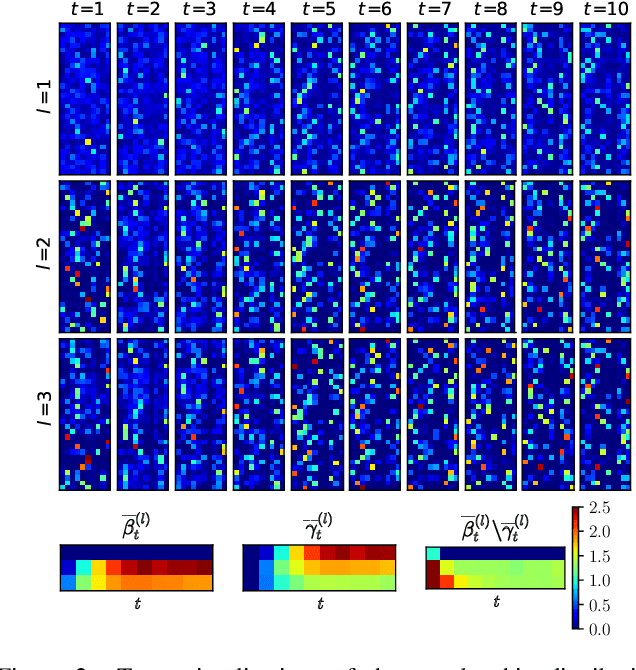

Abstract: The Dirichlet Belief Network (DirBN) has been recently proposed as a promising approach for learning interpretable deep latent representations for objects. In this work, we leverage its interpretable modelling architecture and propose a deep dynamic probabilistic framework, the Recurrent Dirichlet Belief Network (Recurrent-DBN), to study interpretable hidden structures from dynamic relational data. The proposed Recurrent-DBN has the following merits: (1) it infers interpretable and organised hierarchical latent structures for objects within and across time steps; (2) it enables recurrent long-term temporal dependence modelling, which outperforms the first-order Markov descriptions used in most dynamic probabilistic frameworks. In addition, we develop a new inference strategy, which first propagates latent counts upward and backward and then samples variables downward and forward, to enable efficient Gibbs sampling for the Recurrent-DBN. We apply the Recurrent-DBN to dynamic relational data problems. Extensive experimental results on real-world data validate the advantages of the Recurrent-DBN over state-of-the-art models in interpretable latent structure discovery and improved link prediction performance.
