Yangbo He

Robust Bayesian Dynamic Programming for On-policy Risk-sensitive Reinforcement Learning

Dec 31, 2025

Abstract:We propose a novel framework for risk-sensitive reinforcement learning (RSRL) that incorporates robustness against transition uncertainty. We define two distinct yet coupled risk measures: an inner risk measure addressing state and cost randomness and an outer risk measure capturing transition dynamics uncertainty. Our framework unifies and generalizes most existing RL frameworks by permitting general coherent risk measures for both inner and outer risk measures. Within this framework, we construct a risk-sensitive robust Markov decision process (RSRMDP), derive its Bellman equation, and provide error analysis under a given posterior distribution. We further develop a Bayesian Dynamic Programming (Bayesian DP) algorithm that alternates between posterior updates and value iteration. The approach employs an estimator for the risk-based Bellman operator that combines Monte Carlo sampling with convex optimization, for which we prove strong consistency guarantees. Furthermore, we demonstrate that the algorithm converges to a near-optimal policy in the training environment and analyze both the sample complexity and the computational complexity under the Dirichlet posterior and CVaR. Finally, we validate our approach through two numerical experiments. The results exhibit excellent convergence properties while providing intuitive demonstrations of its advantages in both risk-sensitivity and robustness. Empirically, we further demonstrate the advantages of the proposed algorithm through an application on option hedging.
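As a rough illustration of how Monte Carlo sampling and convex optimization can combine to estimate a coherent risk measure such as CVaR, the sketch below uses the standard Rockafellar-Uryasev representation, CVaR_alpha(C) = min over t of t + E[(C - t)^+] / (1 - alpha), applied to a finite sample of costs. This is not the paper's estimator for the Bellman operator; the function name `cvar_estimate` and the treatment of `costs` as plain sampled numbers are assumptions made for this example.

```python
def cvar_estimate(costs, alpha):
    """Sample CVaR of a cost at level alpha (hypothetical helper).

    Minimizes t + mean((c - t)^+) / (1 - alpha) over t. The objective is
    convex and piecewise linear with breakpoints at the sample points, so
    a minimizer is always attained at one of the samples.
    """
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must lie in [0, 1)")
    costs = [float(c) for c in costs]
    if not costs:
        raise ValueError("need at least one sample")
    n = len(costs)

    def objective(t):
        # Average excess of the sampled costs over the threshold t.
        excess = sum(max(c - t, 0.0) for c in costs) / n
        return t + excess / (1.0 - alpha)

    # Brute-force search over breakpoints: O(n^2), fine for small samples.
    return min(objective(t) for t in costs)


if __name__ == "__main__":
    import random

    random.seed(0)
    # Monte Carlo samples of a hypothetical random cost.
    samples = [random.gauss(0.0, 1.0) for _ in range(2000)]
    print(cvar_estimate(samples, alpha=0.95))
```

With `alpha = 0` the estimate reduces to the sample mean, and as `alpha` approaches 1 it approaches the largest sampled cost, which is the sense in which the level controls risk sensitivity.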

VACT: A Video Automatic Causal Testing System and a Benchmark

Mar 08, 2025

Abstract:With the rapid advancement of text-conditioned Video Generation Models (VGMs), the quality of generated videos has significantly improved, bringing these models closer to functioning as "*world simulators*" and making real-world-level video generation more accessible and cost-effective. However, the generated videos often contain factual inaccuracies and lack understanding of fundamental physical laws. While some previous studies have highlighted this issue in limited domains through manual analysis, a comprehensive solution has not yet been established, primarily due to the absence of a generalized, automated approach for modeling and assessing the causal reasoning of these models across diverse scenarios. To address this gap, we propose VACT: an **automated** framework for modeling, evaluating, and measuring the causal understanding of VGMs in real-world scenarios. By combining causal analysis techniques with a carefully designed large language model assistant, our system can assess the causal behavior of models in various contexts without human annotation, which offers strong generalization and scalability. Additionally, we introduce multi-level causal evaluation metrics to provide a detailed analysis of the causal performance of VGMs. As a demonstration, we use our framework to benchmark several prevailing VGMs, offering insight into their causal reasoning capabilities. Our work lays the foundation for systematically addressing the causal understanding deficiencies in VGMs and contributes to advancing their reliability and real-world applicability.

Local Causal Discovery with Background Knowledge

Aug 15, 2024

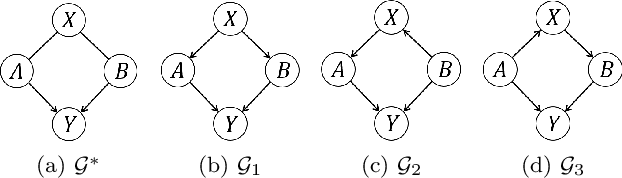

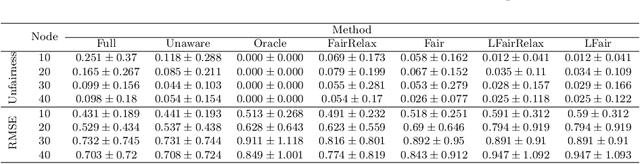

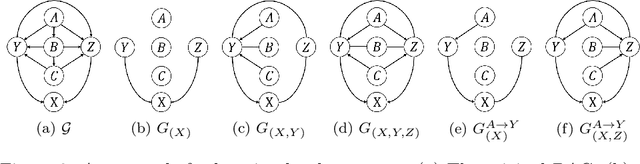

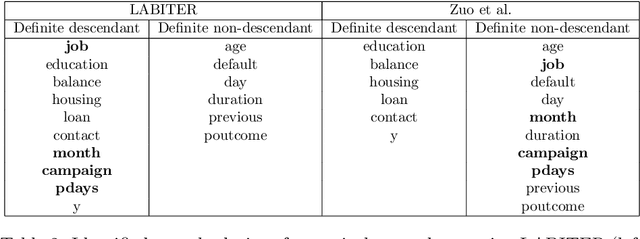

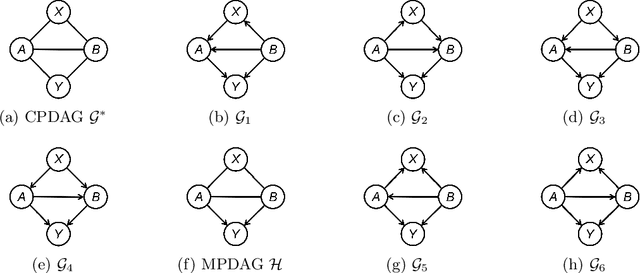

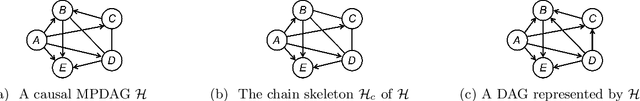

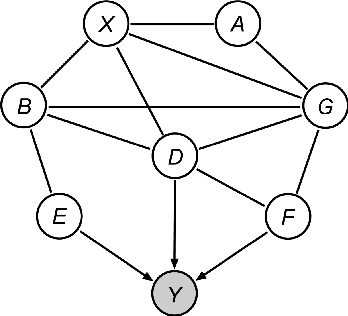

Abstract:Causality plays a pivotal role in various fields of study. Based on the framework of causal graphical models, previous works have proposed identifying whether a variable is a cause or non-cause of a target in every Markov equivalent graph solely by learning a local structure. However, the presence of prior knowledge, often represented as a partially known causal graph, is common in many causal modeling applications. Leveraging this prior knowledge allows for the further identification of causal relationships. In this paper, we first propose a method for learning the local structure using all types of causal background knowledge, including direct causal information, non-ancestral information and ancestral information. Then we introduce criteria for identifying causal relationships based solely on the local structure in the presence of prior knowledge. We also apply our method to fair machine learning, and experiments involving local structure learning, causal relationship identification, and fair machine learning demonstrate that our method is both effective and efficient.

On the Representation of Causal Background Knowledge and its Applications in Causal Inference

Jul 10, 2022

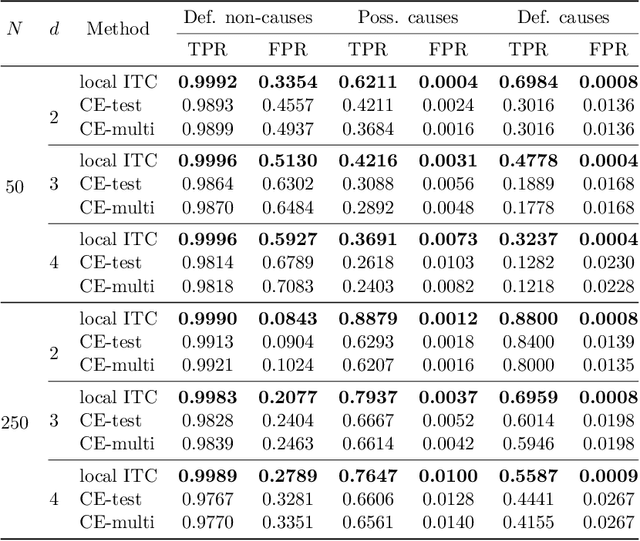

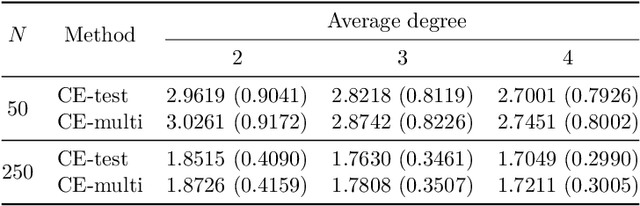

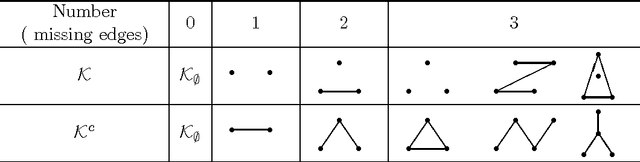

Abstract:Causal background knowledge about the existence or the absence of causal edges and paths is frequently encountered in observational studies. The shared directed edges and links of a subclass of Markov equivalent DAGs refined due to background knowledge can be represented by a causal maximally partially directed acyclic graph (MPDAG). In this paper, we first provide a sound and complete graphical characterization of causal MPDAGs and give a minimal representation of a causal MPDAG. Then, we introduce a novel representation called direct causal clause (DCC) to represent all types of causal background knowledge in a unified form. Using DCCs, we study the consistency and equivalency of causal background knowledge and show that any causal background knowledge set can be equivalently decomposed into a causal MPDAG plus a minimal residual set of DCCs. Polynomial-time algorithms are also provided for checking the consistency, equivalency, and finding the decomposed MPDAG and residual DCCs. Finally, with causal background knowledge, we prove a sufficient and necessary condition to identify causal effects and surprisingly find that the identifiability of causal effects only depends on the decomposed MPDAG. We also develop a local IDA-type algorithm to estimate the possible values of an unidentifiable effect. Simulations suggest that causal background knowledge can significantly improve the identifiability of causal effects.

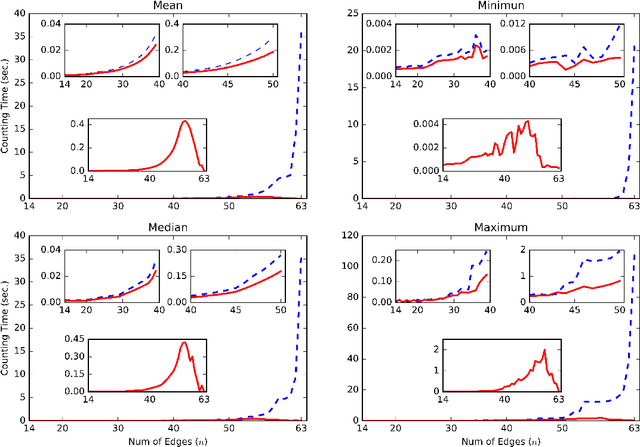

A Local Method for Identifying Causal Relations under Markov Equivalence

Feb 25, 2021

Abstract:Causality is important for designing interpretable and robust methods in artificial intelligence research. We propose a local approach to identify whether a variable is a cause of a given target based on causal graphical models of directed acyclic graphs (DAGs). In general, the causal relation between two variables may not be identifiable from observational data as many causal DAGs encoding different causal relations are Markov equivalent. In this paper, we first introduce a sufficient and necessary graphical condition to check the existence of a causal path from a variable to a target in every Markov equivalent DAG. Next, we provide local criteria for identifying whether the variable is a cause/non-cause of the target. Finally, we propose a local learning algorithm for this causal query by learning the local structure of the variable and performing some additional statistical independence tests related to the target. Simulation studies show that our local algorithm is efficient and effective compared with other state-of-the-art methods.
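To make the notion of Markov equivalence concrete, the following minimal sketch checks the classical graphical criterion (two DAGs are Markov equivalent exactly when they share the same skeleton and the same v-structures). It is not the local algorithm proposed in the paper; the edge-list encoding and the function names are assumptions made for this example, and both graphs are assumed to be DAGs on the same vertex set with no isolated vertices.

```python
from itertools import combinations


def skeleton(edges):
    """Undirected version of a DAG given as (parent, child) pairs."""
    return {frozenset(edge) for edge in edges}


def v_structures(edges):
    """All colliders a -> c <- b whose endpoints a and b are non-adjacent."""
    skel = skeleton(edges)
    parents = {}
    for parent, child in edges:
        parents.setdefault(child, set()).add(parent)
    found = set()
    for child, pars in parents.items():
        for a, b in combinations(sorted(pars), 2):
            if frozenset((a, b)) not in skel:
                found.add((a, child, b))
    return found


def markov_equivalent(edges1, edges2):
    """Same skeleton and same v-structures (assumes both inputs are DAGs)."""
    return (skeleton(edges1) == skeleton(edges2)
            and v_structures(edges1) == v_structures(edges2))


if __name__ == "__main__":
    chain = [("X", "Y"), ("Y", "Z")]      # X -> Y -> Z: X is a cause of Z
    reversed_chain = [("Z", "Y"), ("Y", "X")]  # Z -> Y -> X: X is not a cause of Z
    collider = [("X", "Y"), ("Z", "Y")]   # X -> Y <- Z
    print(markov_equivalent(chain, reversed_chain))
    print(markov_equivalent(chain, collider))
```

The two chains are Markov equivalent yet disagree on whether `X` causes `Z`, which is the kind of non-identifiability from observational data that the abstract refers to.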

Low Rank Directed Acyclic Graphs and Causal Structure Learning

Jun 10, 2020

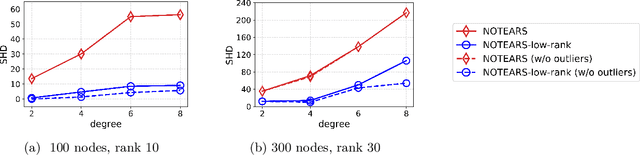

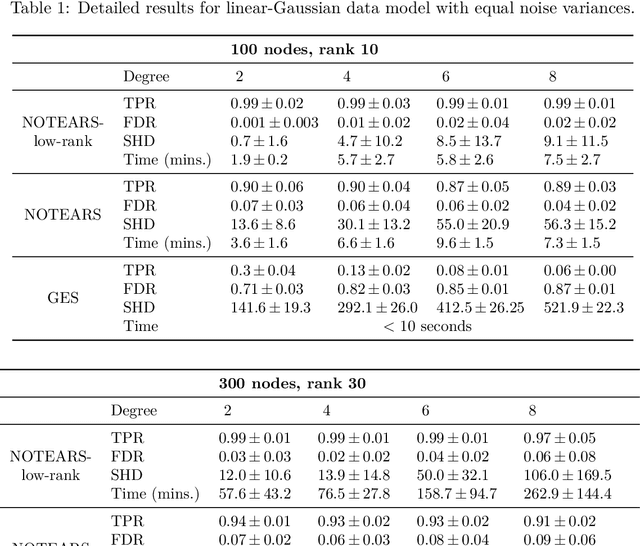

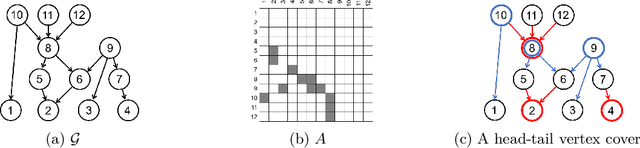

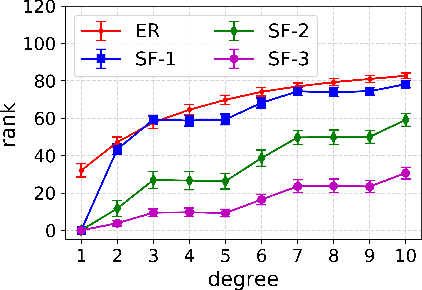

Abstract:Despite several important advances in recent years, learning causal structures represented by directed acyclic graphs (DAGs) remains a challenging task in high dimensional settings when the graphs to be learned are not sparse. In particular, the recent formulation of structure learning as a continuous optimization problem proved to have considerable advantages over the traditional combinatorial formulation, but the performance of the resulting algorithms is still wanting when the target graph is relatively large and dense. In this paper we propose a novel approach to mitigate this problem, by exploiting a low rank assumption regarding the (weighted) adjacency matrix of a DAG causal model. We establish several useful results relating interpretable graphical conditions to the low rank assumption, and show how to adapt existing methods for causal structure learning to take advantage of this assumption. We also provide empirical evidence for the utility of our low rank algorithms, especially on graphs that are not sparse. Not only do they outperform state-of-the-art algorithms when the low rank condition is satisfied, the performance on randomly generated scale-free graphs is also very competitive even though the true ranks may not be as low as is assumed.

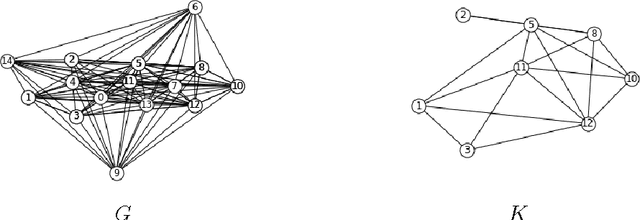

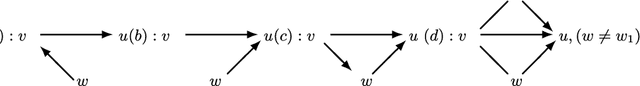

Formulas for Counting the Sizes of Markov Equivalence Classes of Directed Acyclic Graphs

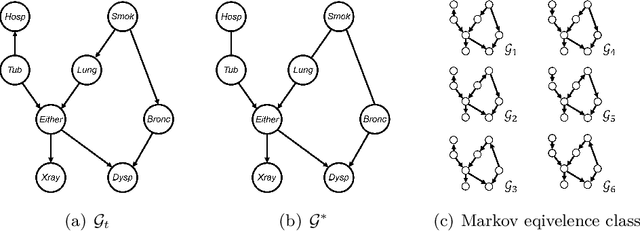

Oct 23, 2016

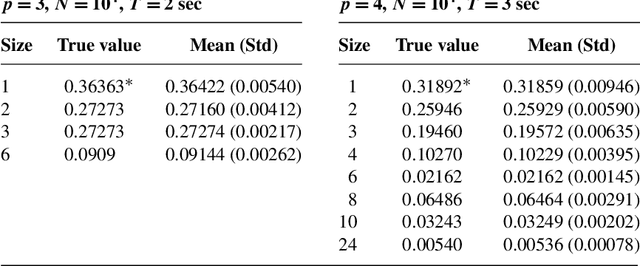

Abstract:The sizes of Markov equivalence classes of directed acyclic graphs play important roles in measuring the uncertainty and complexity in causal learning. A Markov equivalence class can be represented by an essential graph and its undirected subgraphs determine the size of the class. In this paper, we develop a method to derive the formulas for counting the sizes of Markov equivalence classes. We first introduce a new concept of core graph. The size of a Markov equivalence class of interest is a polynomial of the number of vertices given its core graph. Then, we discuss the recursive and explicit formula of the polynomial, and provide an algorithm to derive the size formula via symbolic computation for any given core graph. The proposed size formula derivation sheds light on the relationships between the size of a Markov equivalence class and its representation graph, and makes size counting efficient, even when the essential graphs contain non-sparse undirected subgraphs.

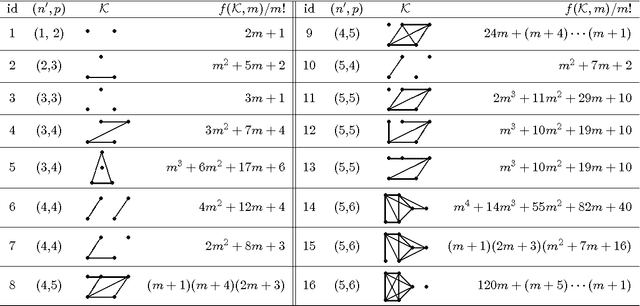

Reversible MCMC on Markov equivalence classes of sparse directed acyclic graphs

Jan 27, 2014

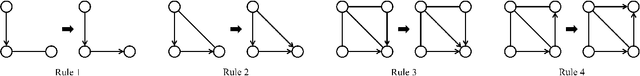

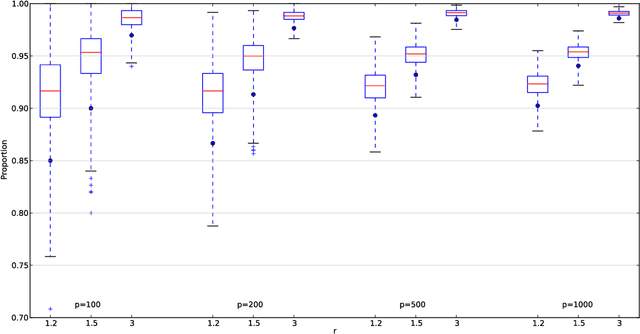

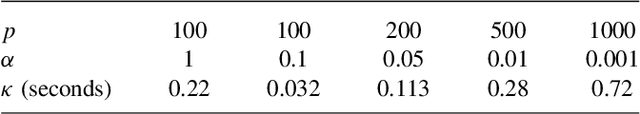

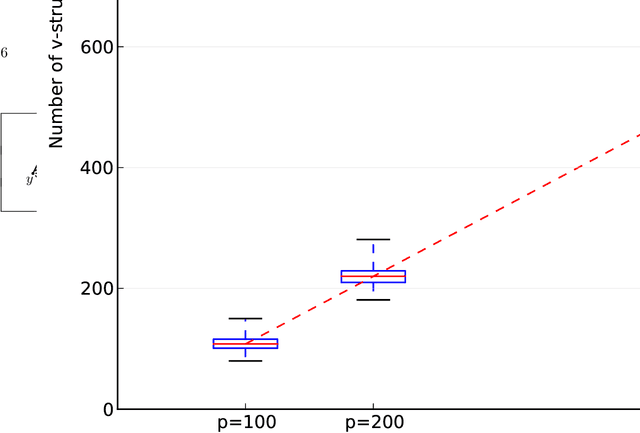

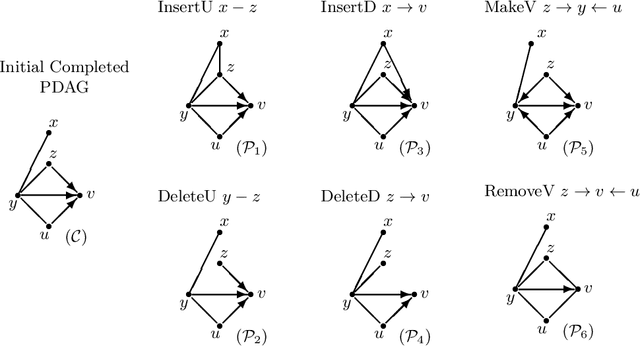

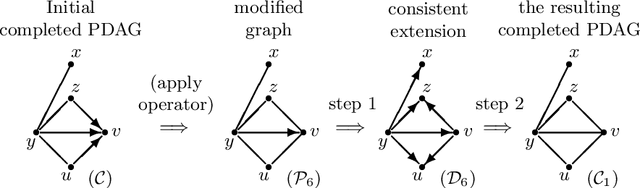

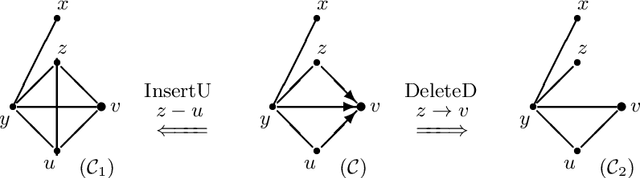

Abstract:Graphical models are popular statistical tools which are used to represent dependent or causal complex systems. Statistically equivalent causal or directed graphical models are said to belong to a Markov equivalent class. It is of great interest to describe and understand the space of such classes. However, with currently known algorithms, sampling over such classes is only feasible for graphs with fewer than approximately 20 vertices. In this paper, we design reversible irreducible Markov chains on the space of Markov equivalent classes by proposing a perfect set of operators that determine the transitions of the Markov chain. The stationary distribution of a proposed Markov chain has a closed form and can be computed easily. Specifically, we construct a concrete perfect set of operators on sparse Markov equivalence classes by introducing appropriate conditions on each possible operator. Algorithms and their accelerated versions are provided to efficiently generate Markov chains and to explore properties of Markov equivalence classes of sparse directed acyclic graphs (DAGs) with thousands of vertices. We find experimentally that in most Markov equivalence classes of sparse DAGs, (1) most edges are directed, (2) most undirected subgraphs are small and (3) the number of these undirected subgraphs grows approximately linearly with the number of vertices. The article contains supplement arXiv:1303.0632, http://dx.doi.org/10.1214/13-AOS1125SUPP

* Published in the Annals of Statistics (http://www.imstat.org/aos/) by the Institute of Mathematical Statistics (http://www.imstat.org) at http://dx.doi.org/10.1214/13-AOS1125

Supplement to "Reversible MCMC on Markov equivalence classes of sparse directed acyclic graphs"

Aug 10, 2013

Abstract:This supplementary material includes three parts: some preliminary results, four examples, an experiment, three new algorithms, and all proofs of the results in the paper "Reversible MCMC on Markov equivalence classes of sparse directed acyclic graphs".
