Xue-Feng Zhu

Evidential Detection and Tracking Collaboration: New Problem, Benchmark and Algorithm for Robust Anti-UAV System

Jul 04, 2023

Abstract: Unmanned Aerial Vehicles (UAVs) have been widely used in many areas, including transportation, surveillance, and military applications. However, their potential for safety and privacy violations is a growing concern that severely limits their broader application, underscoring the critical importance of UAV perception and defense (anti-UAV). Still, previous works have simplified the anti-UAV task to a tracking problem in which prior information about the UAVs is always provided; such a scheme fails in real-world anti-UAV tasks (i.e., complex scenes, UAVs that appear and reappear unpredictably, and real-time UAV surveillance). In this paper, we first formulate a new and practical anti-UAV problem featuring UAV perception in complex scenes without prior UAV information. To benchmark such a challenging task, we propose the largest UAV dataset, dubbed AntiUAV600, and a new evaluation metric. AntiUAV600 comprises 600 video sequences of challenging scenes with random, fast, and small-scale UAVs, with over 723K thermal infrared frames densely annotated with bounding boxes. Finally, we develop a novel anti-UAV approach via an evidential collaboration of global UAV detection and local UAV tracking, which effectively tackles the proposed problem and can serve as a strong baseline for future research. Extensive experiments show that our method outperforms SOTA approaches and validate the ability of AntiUAV600 to enhance UAV perception performance due to its large scale and complexity. Our dataset, pretrained models, and source code will be released publicly.

RGBD1K: A Large-scale Dataset and Benchmark for RGB-D Object Tracking

Aug 21, 2022

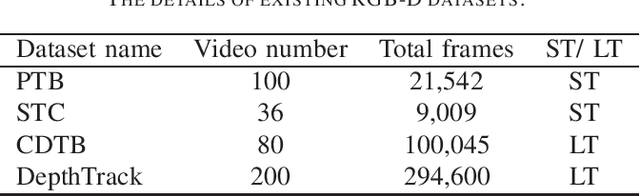

Abstract: RGB-D object tracking has attracted considerable attention recently, achieving promising performance thanks to the symbiosis between visual and depth channels. However, given the limited amount of annotated RGB-D tracking data, most state-of-the-art RGB-D trackers are simple extensions of high-performance RGB-only trackers that do not fully exploit the potential of the depth channel in the offline training stage. To address this dataset deficiency, a new RGB-D dataset named RGBD1K is released in this paper. RGBD1K contains 1,050 sequences with about 2.5M frames in total. To demonstrate the benefits of training on a larger RGB-D dataset in general, and RGBD1K in particular, we develop a transformer-based RGB-D tracker, named SPT, as a baseline for future visual object tracking studies using the new dataset. The results of extensive experiments using the SPT tracker demonstrate the potential of the RGBD1K dataset to improve the performance of RGB-D tracking, inspiring future developments of effective tracker designs. The dataset and code will be available on the project homepage: https://will.be.available.at.this.website.

Visual Object Tracking on Multi-modal RGB-D Videos: A Review

Jan 29, 2022

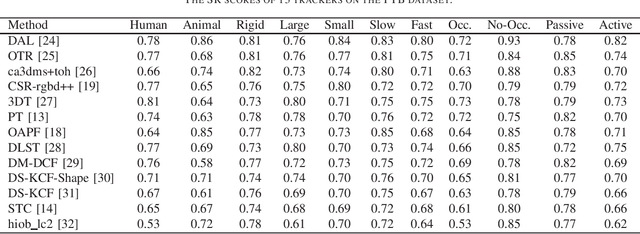

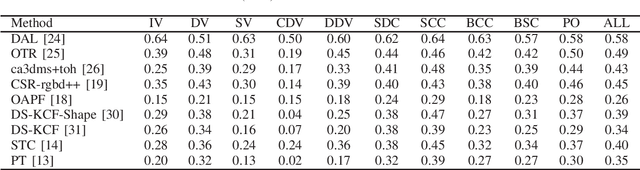

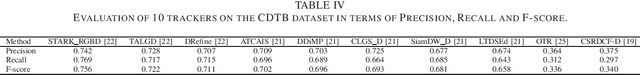

Abstract: The development of visual object tracking has continued for decades. In recent years, with the wide availability of low-cost RGB-D sensors, the task of visual object tracking on RGB-D videos has drawn much attention. Compared to conventional RGB-only tracking, RGB-D videos provide additional information that facilitates object tracking in some complicated scenarios. The goal of this review is to summarize the relevant knowledge in the research field of RGB-D tracking. Specifically, we survey the related RGB-D tracking benchmark datasets as well as the corresponding performance measures. In addition, the existing RGB-D tracking methods are summarized. Finally, we discuss possible future directions in the field of RGB-D tracking.

Probability-Density-Based Deep Learning Paradigm for the Fuzzy Design of Functional Metastructures

Nov 11, 2020

Abstract: In quantum mechanics, the squared norm of a wave function can be interpreted as the probability density that describes the likelihood of a particle being measured at a given position or momentum. This statistical property is at the core of the fuzzy structure of the microcosmos. Recently, hybrid neural structures have attracted intense attention, resulting in various intelligent systems with far-reaching influence. Here, we propose a probability-density-based deep learning paradigm for the fuzzy design of functional meta-structures. In contrast to other inverse design methods, our probability-density-based neural network can efficiently evaluate and accurately capture all plausible meta-structures in a high-dimensional parameter space. Local maxima in the probability density distribution correspond to the most likely candidates to meet the desired performance. We verify this universally adaptive approach in, but not limited to, acoustics by designing multiple meta-structures for each targeted transmission spectrum, with experiments unequivocally demonstrating the effectiveness and generalization of the inverse design.

* Published in Research, an AAAS Science Partner Journal

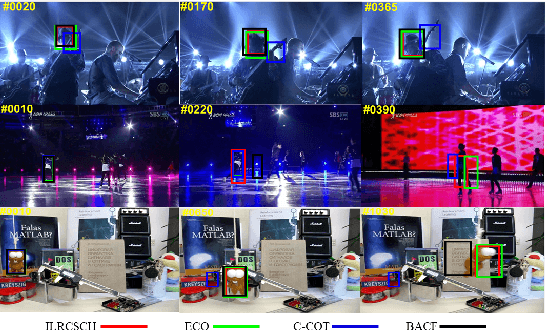

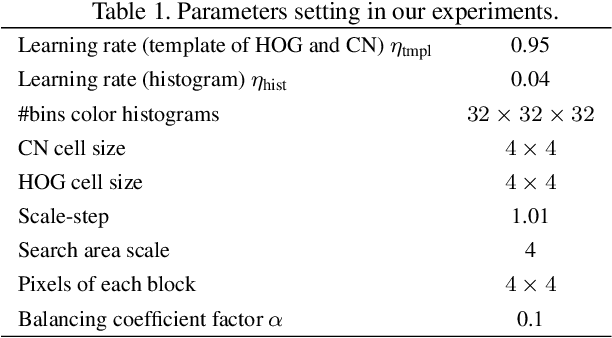

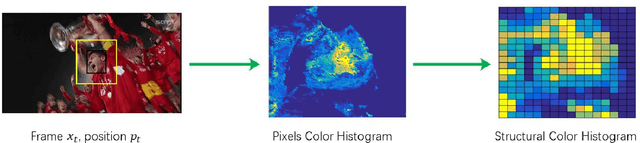

Robust Visual Tracking via Implicit Low-Rank Constraints and Structural Color Histograms

Dec 24, 2019

Abstract: With the guaranteed discrimination and efficiency of the spatial appearance model, Discriminative Correlation Filter (DCF) based tracking methods have achieved outstanding performance recently. However, constructing an effective temporal appearance model remains challenging, because filter degeneration is a significant cause of tracking failures in the DCF framework. To encourage temporal continuity and to explore the smooth variation of target appearance, we propose to enhance the low-rank structure of the learned filters, which can be realized by constraining the successive filters within an $\ell_2$-norm ball. Moreover, we design a global descriptor, structural color histograms, to provide complementary support to the final response map, improving the stability and robustness of the DCF framework. The experimental results on standard benchmarks demonstrate that our Implicit Low-Rank Constraints and Structural Color Histograms (ILRCSCH) tracker outperforms state-of-the-art methods.
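As a rough illustration of what "constraining the successive filters within an $\ell_2$-norm ball" can mean, the sketch below projects a newly learned filter onto a ball centred at the previous filter. This is only one simple way to enforce such a constraint and is not taken from the paper; the function name `project_to_l2_ball`, the flat-list filter representation, and the `radius` parameter are all assumptions made for illustration.

```python
import math


def project_to_l2_ball(current, previous, radius):
    """Project `current` onto the l2 ball of `radius` centred at `previous`.

    Hypothetical helper: filters are flat lists of floats, and `radius`
    bounds how far a filter may drift between consecutive frames.
    """
    diff = [c - p for c, p in zip(current, previous)]
    norm = math.sqrt(sum(d * d for d in diff))
    if norm <= radius:
        # Already inside the ball: keep the new filter unchanged.
        return list(current)
    # Outside the ball: shrink the update so its length equals `radius`.
    scale = radius / norm
    return [p + scale * d for p, d in zip(previous, diff)]


previous_filter = [0.0, 0.0]
new_filter = [3.0, 4.0]          # update has l2 norm 5
constrained = project_to_l2_ball(new_filter, previous_filter, radius=1.0)
```

Here the constrained filter keeps the direction of the update but limits its magnitude, which is the intuition behind encouraging temporal continuity between successive filters.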

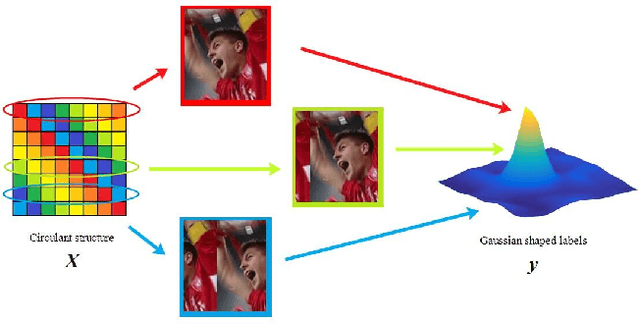

Adaptive Distraction Context Aware Tracking Based on Correlation Filter

Dec 24, 2019

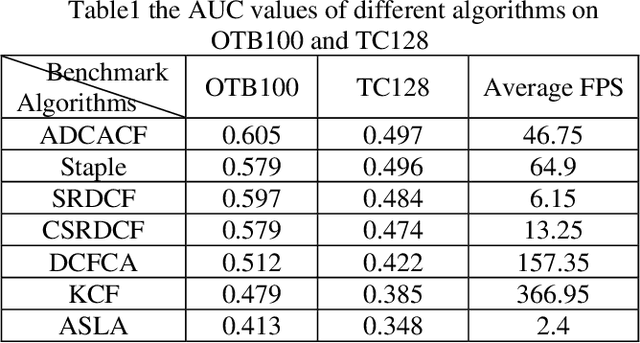

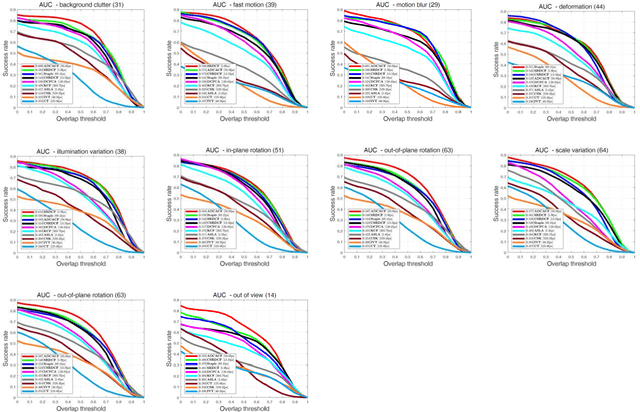

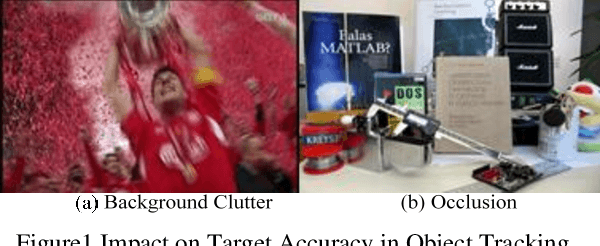

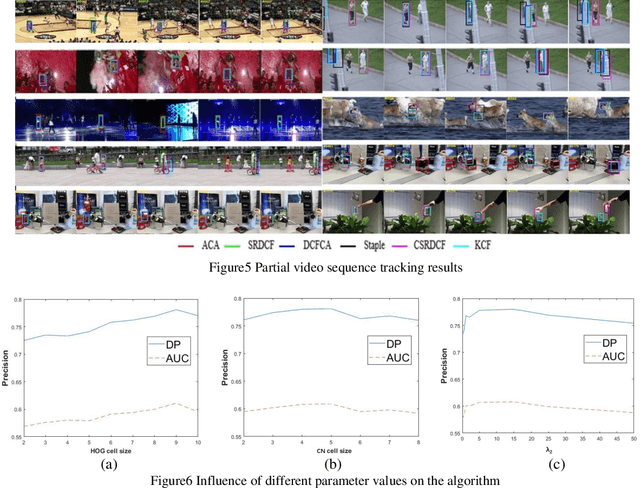

Abstract: The Discriminative Correlation Filter (CF) uses a circulant convolution operation to generate training samples for the design of a classifier that can distinguish the target from the background. The filter design may be disturbed by objects close to the target during tracking, resulting in tracking failure. This paper proposes an adaptive distraction context aware tracking algorithm to solve this problem. In the response map obtained for the previous frame by the CF algorithm, we adaptively find the image blocks that are similar to the target and use them as negative samples. This diminishes the influence of similar image blocks on the classifier during tracking and improves its accuracy. The tracking results on video sequences show that the algorithm can cope with rapid changes such as occlusion and rotation, and can adaptively use the distracting objects around the target as negative samples to improve the accuracy of target tracking.
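The circulant idea behind correlation filters can be sketched in one dimension: training on all circular shifts of a sample reduces to an element-wise ridge regression in the Fourier domain. The code below is a generic MOSSE-style sketch, not the adaptive distraction-aware method of the paper (it uses no negative samples); the function names, the regularizer `lam`, and the toy signal are assumptions for illustration.

```python
import cmath


def dft(x, inverse=False):
    """Naive discrete Fourier transform (O(n^2)), enough for a toy example."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                for t in range(n))
        out.append(s / n if inverse else s)
    return out


def train_filter(signal, target, lam=1e-3):
    """Closed-form ridge regression over all circular shifts of `signal`.

    Returns the conjugate filter in the Fourier domain:
    H* = G . conj(F) / (F . conj(F) + lam).
    """
    F, G = dft(signal), dft(target)
    return [g * f.conjugate() / (f * f.conjugate() + lam)
            for f, g in zip(F, G)]


def respond(filter_conj, signal):
    """Response map of a new signal; its peak estimates the target shift."""
    Z = dft(signal)
    resp = dft([z * h for z, h in zip(Z, filter_conj)], inverse=True)
    return [r.real for r in resp]


template = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0, 0.0]
desired = [1.0] + [0.0] * 7                  # peak at shift 0
h = train_filter(template, desired)

shift = 2
moved = [template[(t - shift) % 8] for t in range(8)]
response = respond(h, moved)
estimated_shift = response.index(max(response))
```

In this toy setting the peak of the response map follows the circular shift of the input. A distractor that resembles the target would produce a second strong peak, which is the situation the abstract addresses by treating such regions as negative samples.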
