Wentai Zhang

ChatKBQA: A Generate-then-Retrieve Framework for Knowledge Base Question Answering with Fine-tuned Large Language Models

Oct 13, 2023

Abstract: Knowledge Base Question Answering (KBQA) aims to derive answers to natural language questions over large-scale knowledge bases (KBs). Research on KBQA is generally divided into two components: knowledge retrieval and semantic parsing. However, three core challenges remain: inefficient knowledge retrieval, retrieval errors that adversely affect semantic parsing, and the complexity of previous KBQA methods. In the era of large language models (LLMs), we introduce ChatKBQA, a novel generate-then-retrieve KBQA framework built on fine-tuning open-source LLMs such as Llama-2, ChatGLM2 and Baichuan2. ChatKBQA first generates the logical form with fine-tuned LLMs, then retrieves and replaces entities and relations through an unsupervised retrieval method, which improves both generation and retrieval more straightforwardly. Experimental results reveal that ChatKBQA achieves new state-of-the-art performance on the standard KBQA datasets WebQSP and ComplexWebQuestions (CWQ). This work also provides a new paradigm for combining LLMs with knowledge graphs (KGs) for interpretable and knowledge-required question answering. Our code is publicly available.
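The generate-then-retrieve idea in the abstract above can be illustrated with a minimal sketch. It assumes the LLM has already produced a draft logical form, and it stands in for the unsupervised retrieval step with plain string similarity from Python's `difflib`; the abstract does not specify the retrieval method. The toy vocabulary, the bracketed entity format, and the helper names `ground` and `retrieve_and_replace` are all hypothetical.

```python
import difflib
import re

# Hypothetical toy KB vocabulary (assumption, not taken from the paper).
KB_ENTITIES = ["Barack Obama", "Michelle Obama", "Honolulu"]
KB_RELATIONS = ["people.person.place_of_birth", "film.film.directed_by"]


def ground(name, vocabulary):
    """Return the vocabulary item most similar to name, or name itself if none is close."""
    matches = difflib.get_close_matches(name, vocabulary, n=1, cutoff=0.5)
    return matches[0] if matches else name


def retrieve_and_replace(draft):
    """Swap each entity and relation in a draft logical form for its closest KB item."""
    # Entities are written as "[ surface name ]" in this toy format.
    draft = re.sub(
        r"\[ (.+?) \]",
        lambda m: "[ " + ground(m.group(1), KB_ENTITIES) + " ]",
        draft,
    )
    # Relations are dotted identifiers such as "a.b.c".
    return re.sub(
        r"\b\w+(?:\.\w+)+\b",
        lambda m: ground(m.group(0), KB_RELATIONS),
        draft,
    )


# A draft with a misspelled entity and a relation name that is not in the KB.
draft = "(JOIN people.person.birth_place [ Barak Obama ])"
grounded = retrieve_and_replace(draft)
print(grounded)
```

Because the draft is generated before any retrieval happens, retrieval only has to repair names in an otherwise complete logical form, which is the ordering the abstract argues for.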

Text2NKG: Fine-Grained N-ary Relation Extraction for N-ary relational Knowledge Graph Construction

Oct 12, 2023

Abstract: Beyond traditional binary relational facts, n-ary relational knowledge graphs (NKGs) are composed of n-ary relational facts containing more than two entities, which are closer to real-world facts and have broader applications. However, the construction of NKGs still relies significantly on manual labor, and n-ary relation extraction remains at a coarse-grained level, always restricted to a single schema and a fixed arity of entities. To address these restrictions, we propose Text2NKG, a novel fine-grained n-ary relation extraction framework for n-ary relational knowledge graph construction. We introduce a span-tuple classification approach with hetero-ordered merging to accomplish fine-grained n-ary relation extraction across different arities. Furthermore, Text2NKG supports four typical NKG schemas: hyper-relational schema, event-based schema, role-based schema, and hypergraph-based schema, with high flexibility and practicality. Experimental results demonstrate that Text2NKG outperforms the previous state-of-the-art model by nearly 20 percentage points in $F_1$ score on the fine-grained n-ary relation extraction benchmark in the hyper-relational schema. Our code and datasets are publicly available.

Component Segmentation of Engineering Drawings Using Graph Convolutional Networks

Dec 01, 2022

Abstract: We present a data-driven framework to automate the vectorization and machine interpretation of 2D engineering part drawings. In industrial settings, most manufacturing engineers still rely on manual reading to identify the topological and manufacturing requirements from drawings submitted by designers. The interpretation process is laborious and time-consuming, which severely inhibits the efficiency of part quotation and manufacturing tasks. While recent advances in image-based computer vision methods have demonstrated great potential in interpreting natural images through semantic segmentation approaches, the application of such methods in parsing engineering technical drawings into semantically accurate components remains a significant challenge. The severe pixel sparsity in engineering drawings also restricts the effective featurization of image-based data-driven methods. To overcome these challenges, we propose a deep-learning-based framework that predicts the semantic type of each vectorized component. Taking a raster image as input, we vectorize all components through thinning, stroke tracing, and cubic Bézier fitting. Then a graph of such components is generated based on the connectivity between the components. Finally, a graph convolutional neural network is trained on this graph data to identify the semantic type of each component. We test our framework in the context of semantic segmentation of text, dimension, and contour components in engineering drawings. Results show that our method yields the best performance compared to recent image-based and graph-based segmentation methods.

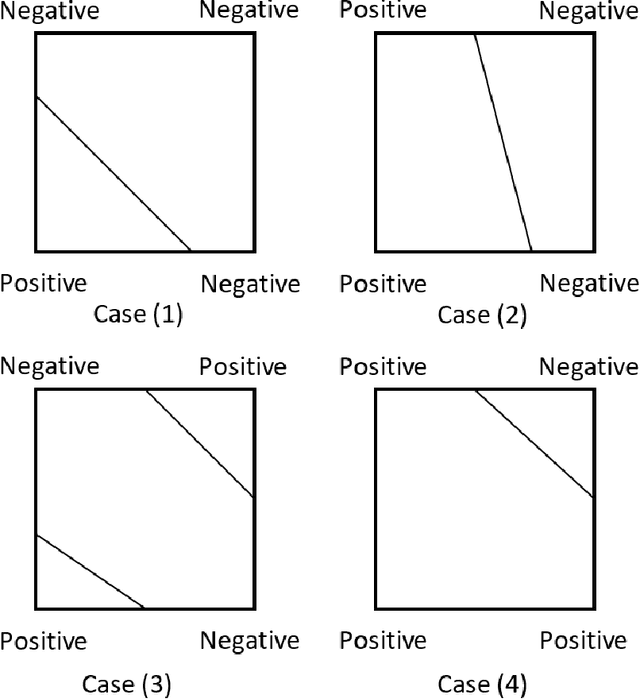

Attention Routing: track-assignment detailed routing using attention-based reinforcement learning

Apr 20, 2020

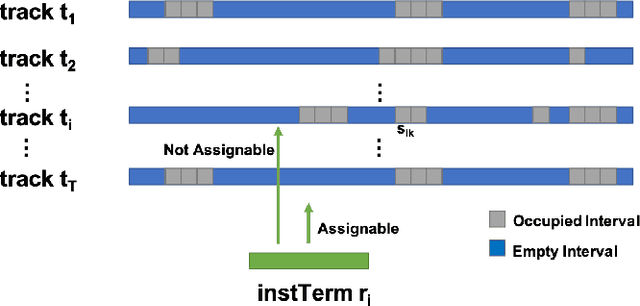

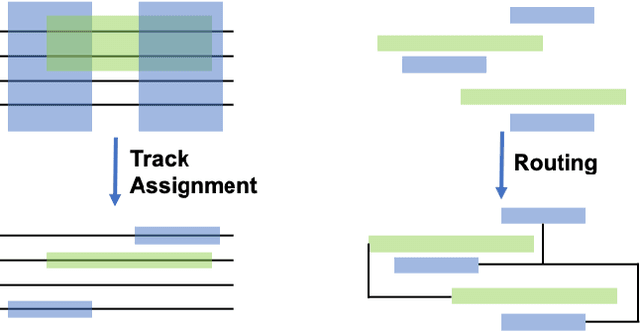

Abstract: In the physical design of integrated circuits, global and detailed routing are critical stages involving the determination of the interconnected paths of each net on a circuit while satisfying the design constraints. Existing routers as well as routability predictors either have to resort to expensive approaches that lead to high computational times, or use heuristics that do not generalize well. Even though new, learning-based routing methods have been proposed to address this need, requirements on labelled data and difficulties in addressing complex design rule constraints have limited their adoption in advanced technology node physical design problems. In this work, we propose a new router, the attention router, which is the first attempt to solve the track-assignment detailed routing problem using reinforcement learning. Complex design rule constraints are encoded into the routing algorithm, and an attention-model-based REINFORCE algorithm is applied to solve the most critical step in routing: sequencing device pairs to be routed. The attention router and its baseline genetic router are applied to solve problem sets of analog circuits in different commercial advanced technologies. The attention router demonstrates generalization ability to unseen problems and is also able to achieve more than 100 times acceleration over the genetic router without significantly compromising the routing solution quality. We also discover a similarity between the attention router and the baseline genetic router in terms of positive correlations in cost and routing patterns, which demonstrates the attention router's ability to be utilized not only as a detailed router but also as a predictor for routability and congestion.

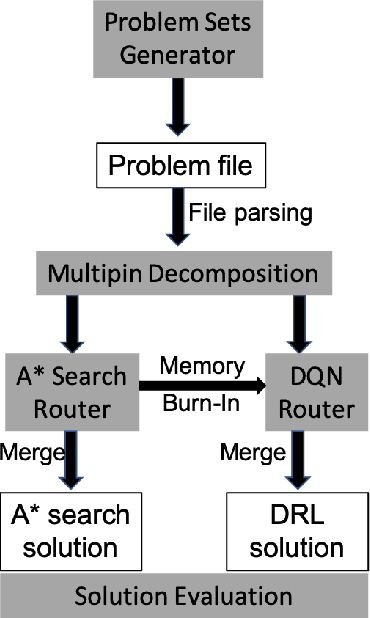

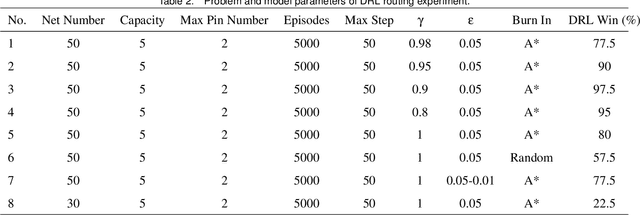

A Deep Reinforcement Learning Approach for Global Routing

Jun 20, 2019

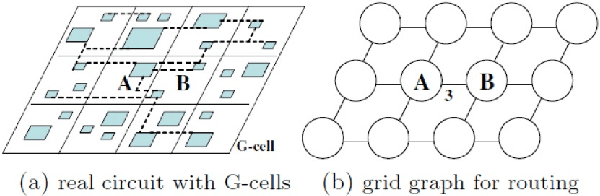

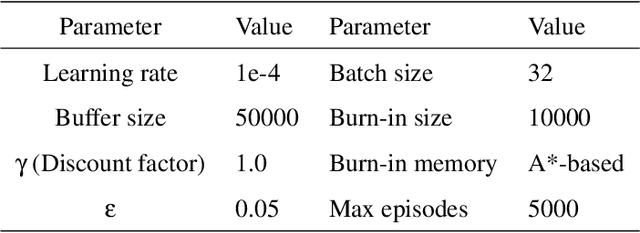

Abstract: Global routing has been a historically challenging problem in electronic circuit design, where the challenge is to connect a large and arbitrary number of circuit components with wires without violating the design rules for the printed circuit boards or integrated circuits. Similar routing problems also exist in the design of complex hydraulic systems, pipe systems and logistic networks. Existing solutions typically consist of greedy algorithms and hard-coded heuristics. As such, existing approaches suffer from a lack of model flexibility and produce non-optimal solutions. As an alternative approach, this work presents a deep reinforcement learning method for solving the global routing problem in a simulated environment. At the heart of the proposed method is deep reinforcement learning that enables an agent to produce an optimal policy for routing based on the variety of problems it is presented with, leveraging the conjoint optimization mechanism of deep reinforcement learning. The conjoint optimization mechanism is explained and demonstrated in detail; the best network structure and the parameters of the learned model are explored. Based on the fine-tuned model, routing solutions and rewards are presented and analyzed. The results indicate that the approach can outperform the benchmark method of a sequential A* method, suggesting a promising potential for deep reinforcement learning for global routing and other routing or path planning problems in general. Another major contribution of this work is the development of a global routing problem sets generator with the ability to generate parameterized global routing problem sets with different sizes and constraints, enabling evaluation of different routing algorithms and the generation of training datasets for future data-driven routing approaches.

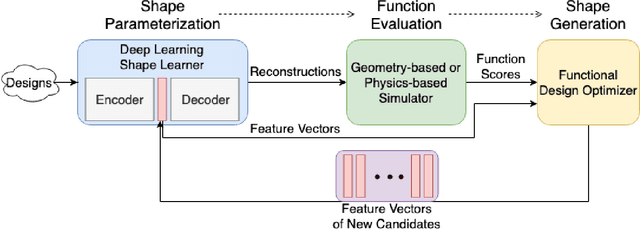

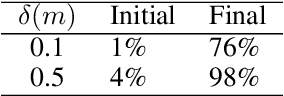

3D Shape Synthesis for Conceptual Design and Optimization Using Variational Autoencoders

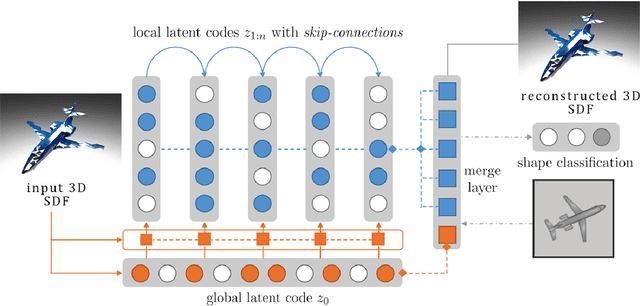

Apr 16, 2019

Abstract: We propose a data-driven 3D shape design method that can learn a generative model from a corpus of existing designs, and use this model to produce a wide range of new designs. The approach learns an encoding of the samples in the training corpus using an unsupervised variational autoencoder-decoder architecture, without the need for an explicit parametric representation of the original designs. To facilitate the generation of smooth final surfaces, we develop a 3D shape representation based on a distance transformation of the original 3D data, rather than using the commonly utilized binary voxel representation. Once established, the generator maps the latent space representations to the high-dimensional distance transformation fields, which are then automatically surfaced to produce 3D representations amenable to physics simulations or other objective function evaluation modules. We demonstrate our approach for the computational design of gliders that are optimized to attain prescribed performance scores. Our results show that when combined with genetic optimization, the proposed approach can generate a rich set of candidate concept designs that achieve prescribed functional goals, even when the original dataset has only a few or no solutions that achieve these goals.
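To make the contrast between a binary voxel grid and a distance-transformation field concrete, here is a minimal brute-force sketch. It assumes an unsigned Euclidean distance to the nearest occupied voxel; the abstract does not say whether the field is signed, truncated, or normalized, and the function name `distance_field` is hypothetical.

```python
import itertools
import math


def distance_field(occupied, shape):
    """Map every grid cell to its Euclidean distance from the nearest occupied cell."""
    cells = itertools.product(*(range(n) for n in shape))
    return {c: min(math.dist(c, o) for o in occupied) for c in cells}


# Toy 3x3x1 grid with a single occupied voxel at the centre.
field = distance_field(occupied=[(1, 1, 0)], shape=(3, 3, 1))
print(field[(1, 1, 0)], field[(0, 1, 0)], field[(0, 0, 0)])
```

Unlike a binary occupancy value, the field varies continuously with distance from the shape, which is the property the abstract relies on for smooth surfacing. The brute-force search is only practical for tiny grids.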

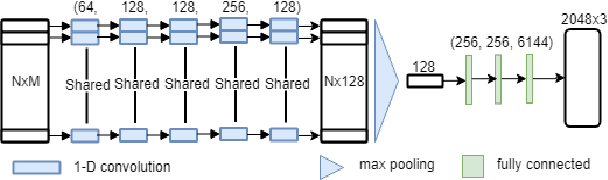

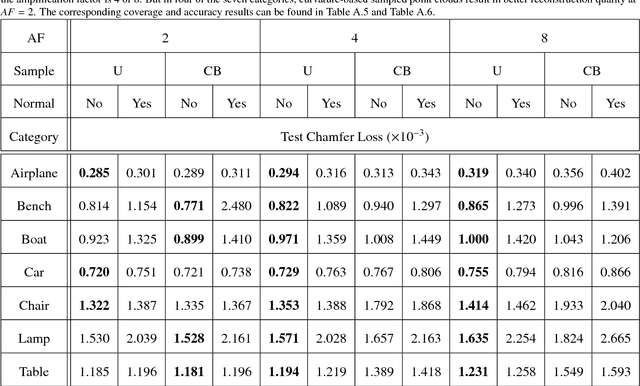

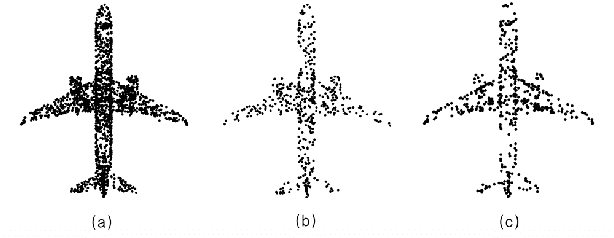

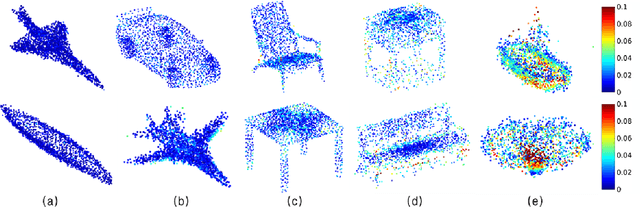

Data-driven Upsampling of Point Clouds

Jul 08, 2018

Abstract: High-quality upsampling of sparse 3D point clouds is critically useful for a wide range of geometric operations such as reconstruction, rendering, meshing, and analysis. In this paper, we propose a data-driven algorithm that enables an upsampling of 3D point clouds without the need for hard-coded rules. Our approach uses a deep network with Chamfer distance as the loss function, capable of learning the latent features in point clouds belonging to different object categories. We evaluate our algorithm across different amplification factors, with upsampling learned and performed on objects belonging to the same category as well as different categories. We also explore the desirable characteristics of input point clouds as a function of the distribution of the point samples. Finally, we demonstrate the performance of our algorithm in single-category training versus multi-category training scenarios. The final proposed model is compared against a baseline, optimization-based upsampling method. Results indicate that our algorithm is capable of generating more uniform and accurate upsamplings.
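The Chamfer distance named in the abstract above can be sketched in a few lines. This version uses one common definition: the mean squared nearest-neighbour distance, summed over both directions. The abstract does not state which variant the paper uses (squared or unsquared, mean or sum), so treat that choice and the function name `chamfer_distance` as assumptions.

```python
import math


def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two non-empty point sets (lists of coordinate tuples)."""

    def one_way(src, dst):
        # Mean squared distance from each source point to its nearest neighbour in dst.
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)


sparse = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
dense = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
print(chamfer_distance(sparse, dense))
```

The two point sets may have different sizes, which is what makes this kind of loss suitable for comparing an upsampled cloud against a denser reference. A training setup would use a differentiable, vectorized version rather than this quadratic-time loop.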
