Wenjie Zheng

Training-Free Multi-View Extension of IC-Light for Textual Position-Aware Scene Relighting

Nov 17, 2025

Abstract:We introduce GS-Light, an efficient, textual position-aware pipeline for text-guided relighting of 3D scenes represented via Gaussian Splatting (3DGS). GS-Light implements a training-free extension of a single-input diffusion model to handle multi-view inputs. Given a user prompt that may specify lighting direction, color, intensity, or reference objects, we employ a large vision-language model (LVLM) to parse the prompt into lighting priors. Using off-the-shelf estimators for geometry and semantics (depth, surface normals, and semantic segmentation), we fuse these lighting priors with view-geometry constraints to compute illumination maps and generate initial latent codes for each view. These carefully derived init latents guide the diffusion model to generate relighting outputs that more accurately reflect user expectations, especially in terms of lighting direction. By feeding multi-view rendered images, along with the init latents, into our multi-view relighting model, we produce high-fidelity, artistically relit images. Finally, we fine-tune the 3DGS scene with the relit appearance to obtain a fully relit 3D scene. We evaluate GS-Light on both indoor and outdoor scenes, comparing it to state-of-the-art baselines including per-view relighting, video relighting, and scene editing methods. Using quantitative metrics (multi-view consistency, imaging quality, aesthetic score, semantic similarity, etc.) and qualitative assessment (user studies), GS-Light demonstrates consistent improvements over baselines. Code and assets will be made available upon publication.
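
The abstract does not give the formula that turns parsed lighting priors and estimated surface normals into illumination maps. As a minimal sketch, assuming a simple Lambertian shading term, a per-pixel illumination map can be computed as intensity × max(0, n · l) from a normal map and a light direction. The `DIRECTION_PRIORS` table (mapping a parsed phrase such as "left" to a camera-space vector) and all function names are hypothetical illustrations, not part of GS-Light, and the step that converts such a map into init latents is not shown.

```python
import math

Vec3 = tuple[float, float, float]

# Hypothetical mapping from a parsed lighting phrase to a light direction in
# camera space (x right, y up, z toward the viewer). Not taken from GS-Light.
DIRECTION_PRIORS: dict[str, Vec3] = {
    "left": (-1.0, 0.0, 0.0),
    "right": (1.0, 0.0, 0.0),
    "top": (0.0, 1.0, 0.0),
    "front": (0.0, 0.0, 1.0),
}


def normalize(v: Vec3) -> Vec3:
    """Scale v to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if norm == 0.0:
        return v
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def illumination_map(normals: list[list[Vec3]], light_dir: Vec3,
                     intensity: float = 1.0) -> list[list[float]]:
    """Per-pixel Lambertian term intensity * max(0, n . l) for a normal map."""
    l = normalize(light_dir)
    result = []
    for row in normals:
        out_row = []
        for n in row:
            n = normalize(n)
            cos_theta = n[0] * l[0] + n[1] * l[1] + n[2] * l[2]
            # Surfaces facing away from the light receive no direct illumination.
            out_row.append(intensity * max(0.0, cos_theta))
        result.append(out_row)
    return result
```

For example, with light from the left, a left-facing normal receives 1.0 while right-facing and camera-facing normals receive 0.0.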

No Pixel Left Behind: A Detail-Preserving Architecture for Robust High-Resolution AI-Generated Image Detection

Aug 24, 2025

Abstract:The rapid growth of high-resolution, meticulously crafted AI-generated images poses a significant challenge to existing detection methods, which are often trained and evaluated on low-resolution, automatically generated datasets that do not reflect the complexities of high-resolution scenarios. A common practice is to resize or center-crop high-resolution images to fit standard network inputs. However, because such strategies do not cover every pixel, they risk either obscuring subtle, high-frequency artifacts (resizing) or discarding information from uncovered regions (cropping), leading to input information loss. In this paper, we introduce the High-Resolution Detail-Aggregation Network (HiDA-Net), a novel framework that ensures no pixel is left behind. Its Feature Aggregation Module (FAM) fuses features from multiple full-resolution local tiles with a down-sampled global view of the image: the local features are aggregated and fused with global representations for the final prediction, so that native-resolution details are preserved and used for detection. To enhance robustness against challenges such as localized AI manipulations and compression, we introduce a Token-wise Forgery Localization (TFL) module for fine-grained spatial sensitivity and a JPEG Quality Factor Estimation (QFE) module that explicitly disentangles generative artifacts from compression noise. Furthermore, to facilitate future research, we introduce HiRes-50K, a new challenging benchmark consisting of 50,568 images with up to 64 megapixels. Extensive experiments show that HiDA-Net achieves state-of-the-art performance, increasing accuracy by over 13% on the challenging Chameleon dataset and by 10% on our HiRes-50K.
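
The abstract says FAM draws on multiple full-resolution local tiles but does not describe how the tiles are laid out. As a minimal sketch, assuming a regular grid of square tiles whose last row and column are shifted inward (overlapping their neighbours) rather than padded, the helper below produces tile boxes that together cover every pixel. The function names and the tile size are hypothetical, and the down-sampled global view and the feature fusion are not shown.

```python
def tile_starts(size: int, tile: int) -> list[int]:
    """Offsets along one axis so that windows of length `tile` cover every index in [0, size)."""
    if size <= tile:
        return [0]
    starts = list(range(0, size - tile + 1, tile))
    if starts[-1] + tile < size:
        # Shift the last window inward instead of padding; it overlaps its neighbour.
        starts.append(size - tile)
    return starts


def tile_boxes(height: int, width: int, tile: int) -> list[tuple[int, int, int, int]]:
    """(top, left, bottom, right) boxes of full-resolution tiles; bottom/right are exclusive."""
    return [(top, left, min(top + tile, height), min(left + tile, width))
            for top in tile_starts(height, tile)
            for left in tile_starts(width, tile)]
```

For instance, a 4000 × 6000 image with 512-pixel tiles yields 8 × 12 = 96 tiles under this layout; overlap is preferred here over padding so that no tile contains synthetic pixels.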

Towards Explainable Multimodal Depression Recognition for Clinical Interviews

Jan 27, 2025

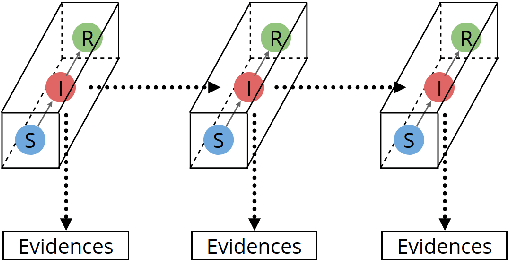

Abstract:Multimodal depression recognition for clinical interviews (MDRC) has recently attracted considerable attention. Existing MDRC studies mainly focus on improving task performance and have made significant progress. However, model transparency is critical for clinical applications, and previous work has ignored the interpretability of the decision-making process. To address this issue, we propose an Explainable Multimodal Depression Recognition for Clinical Interviews (EMDRC) task, which aims to provide evidence for depression recognition by summarizing symptoms and uncovering their underlying causes. Given an interviewer-participant interaction, the goal of EMDRC is to produce a structured summary of the participant's symptoms based on the eight-item Patient Health Questionnaire depression scale (PHQ-8) and to predict their depression severity. To tackle the EMDRC task, we construct a new dataset based on an existing MDRC dataset. Moreover, we use the PHQ-8 to design a PHQ-aware multimodal multi-task learning framework, which captures utterance-level, symptom-related semantic information to help generate a dialogue-level summary. Experimental results on our annotated dataset demonstrate the superiority of our proposed methods over baseline systems on the EMDRC task.
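
The PHQ-8 scoring rule behind a severity label is standard: each of the eight items is rated 0 to 3 by symptom frequency over the past two weeks, the total ranges from 0 to 24, and a total of 10 or more is a common cutoff for current depression. The sketch below applies that rule to a structured symptom summary. The short item keys are hypothetical labels, and the abstract does not say whether the EMDRC framework derives severity this way or with a learned prediction head.

```python
PHQ8_ITEMS = (
    "interest",       # little interest or pleasure in doing things
    "mood",           # feeling down, depressed, or hopeless
    "sleep",          # trouble falling or staying asleep, or sleeping too much
    "energy",         # feeling tired or having little energy
    "appetite",       # poor appetite or overeating
    "self_worth",     # feeling bad about yourself, or that you are a failure
    "concentration",  # trouble concentrating on things
    "psychomotor",    # moving or speaking slowly, or being fidgety or restless
)

# (inclusive upper bound, label) for the standard PHQ-8 severity bands.
SEVERITY_BANDS = ((4, "none/minimal"), (9, "mild"), (14, "moderate"),
                  (19, "moderately severe"), (24, "severe"))


def phq8_total(summary: dict[str, int]) -> int:
    """Sum the eight item scores; each must be an integer in 0..3."""
    total = 0
    for item in PHQ8_ITEMS:
        score = summary[item]
        if score not in (0, 1, 2, 3):
            raise ValueError(f"{item}: score {score} outside 0..3")
        total += score
    return total


def severity(total: int) -> str:
    """Map a PHQ-8 total to its severity band."""
    for upper, label in SEVERITY_BANDS:
        if 0 <= total <= upper:
            return label
    raise ValueError(f"total {total} outside 0..24")


def screens_positive(total: int, cutoff: int = 10) -> bool:
    """Binary depression label using the common cutoff of 10."""
    return total >= cutoff
```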

Enhancing Facial Consistency in Conditional Video Generation via Facial Landmark Transformation

Dec 12, 2024

Abstract:Landmark-guided character animation generation is an important field. Generating character animations whose facial features are consistent with a reference image remains a significant challenge in conditional video generation, especially for complex motions such as dancing. Existing methods often fail to maintain facial feature consistency because of mismatches between the facial landmarks extracted from source videos and the target facial features in the reference image. To address this problem, we propose a facial landmark transformation method based on the 3D Morphable Model (3DMM). We obtain transformed landmarks that align with the target facial features by reconstructing 3D faces from the source landmarks and adjusting the 3DMM parameters to match the reference image. Our method improves the facial consistency between the generated videos and the reference images, effectively mitigating the facial feature mismatch problem.
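
A linear 3DMM writes a face shape as a mean shape plus identity and expression displacements, S = S̄ + Σ αₖ idₖ + Σ βₖ expₖ. Under that model, one reading of "adjusting the 3DMM parameters to match the reference image" is to keep the source frame's expression coefficients β while substituting the reference face's identity coefficients α. The toy sketch below illustrates only that recombination; the fitting of α and β to landmarks, head pose, and perspective projection are omitted, and `LinearFaceModel`, `transform_landmarks`, and the orthographic projection are hypothetical simplifications rather than the paper's method.

```python
from dataclasses import dataclass

Point3 = tuple[float, float, float]


@dataclass
class LinearFaceModel:
    """Toy linear 3DMM restricted to landmark vertices (hypothetical, for illustration)."""
    mean: list[Point3]
    id_basis: list[list[Point3]]   # one displacement field per identity component
    exp_basis: list[list[Point3]]  # one displacement field per expression component

    def landmarks(self, alpha: list[float], beta: list[float]) -> list[Point3]:
        """S = mean + sum_k alpha_k * id_k + sum_k beta_k * exp_k, per landmark."""
        points = [list(p) for p in self.mean]
        for coeffs, basis in ((alpha, self.id_basis), (beta, self.exp_basis)):
            for c, field in zip(coeffs, basis):
                for p, d in zip(points, field):
                    for axis in range(3):
                        p[axis] += c * d[axis]
        return [(p[0], p[1], p[2]) for p in points]


def project_orthographic(points: list[Point3], scale: float = 1.0,
                         tx: float = 0.0, ty: float = 0.0) -> list[tuple[float, float]]:
    """Drop depth and apply a 2D similarity; a stand-in for the real camera model."""
    return [(scale * x + tx, scale * y + ty) for x, y, _ in points]


def transform_landmarks(model: LinearFaceModel, source_beta: list[float],
                        reference_alpha: list[float], scale: float = 1.0,
                        tx: float = 0.0, ty: float = 0.0) -> list[tuple[float, float]]:
    """Keep the source expression and placement, swap in the reference identity."""
    return project_orthographic(model.landmarks(reference_alpha, source_beta), scale, tx, ty)
```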

Hyperbolic Hierarchical Knowledge Graph Embeddings for Link Prediction in Low Dimensions

Apr 28, 2022

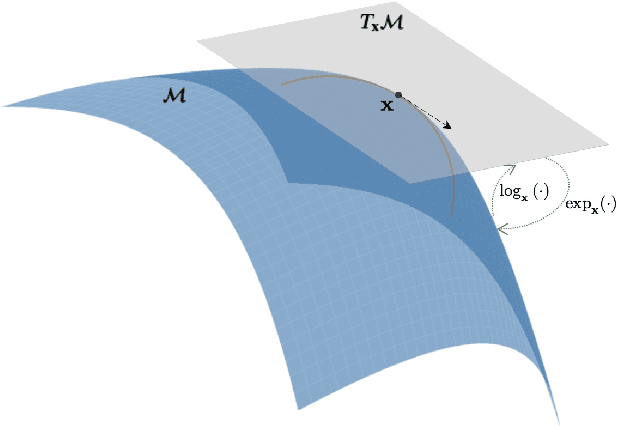

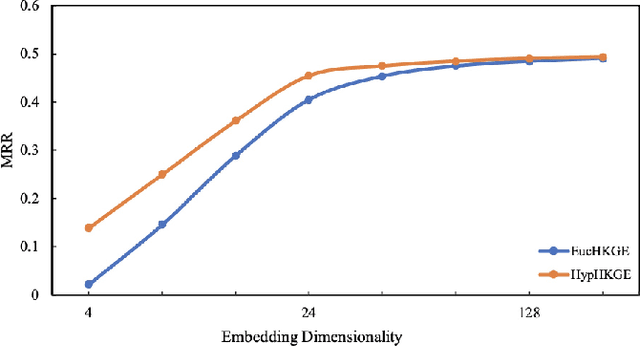

Abstract:Knowledge graph embeddings (KGE) have been validated as powerful methods for inferring missing links in knowledge graphs (KGs): they map entities into Euclidean space and treat relations as transformations of entities. Some Euclidean KGE methods currently model the semantic hierarchies prevalent in KGs and thereby improve link prediction performance. For hierarchical data, hyperbolic space has shown promise as an embedding space, offering high fidelity and low memory consumption compared with traditional Euclidean space; however, existing hyperbolic KGE methods neglect to model these semantic hierarchies. To address this issue, we propose a novel KGE model, hyperbolic hierarchical KGE (HypHKGE). Specifically, we first design attention-based learnable curvatures for hyperbolic space to preserve rich semantic hierarchies. Moreover, we define hyperbolic hierarchical transformations based on the theory of hyperbolic geometry, which exploit the preserved hierarchies to infer links. Experiments show that HypHKGE can effectively model semantic hierarchies in hyperbolic space and outperforms state-of-the-art hyperbolic methods, especially in low dimensions.
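
Hyperbolic KGE models are commonly built on the Poincaré ball with curvature −c, where distances are computed with Möbius addition. The sketch below implements the standard formulas d_c(x, y) = (2/√c) artanh(√c ‖(−x) ⊕_c y‖) and the exponential map at the origin. It assumes HypHKGE works in this model of hyperbolic space, which the abstract does not state, and it takes c as a fixed input where HypHKGE learns curvatures with attention; the hierarchical transformations themselves are not shown.

```python
import math


def _dot(x: list[float], y: list[float]) -> float:
    return sum(a * b for a, b in zip(x, y))


def mobius_add(x: list[float], y: list[float], c: float) -> list[float]:
    """Mobius addition x (+)_c y on the Poincare ball of curvature -c."""
    xy, x2, y2 = _dot(x, y), _dot(x, x), _dot(y, y)
    coef_x = 1.0 + 2.0 * c * xy + c * y2
    coef_y = 1.0 - c * x2
    denom = 1.0 + 2.0 * c * xy + c * c * x2 * y2
    return [(coef_x * a + coef_y * b) / denom for a, b in zip(x, y)]


def poincare_distance(x: list[float], y: list[float], c: float) -> float:
    """d_c(x, y) = (2 / sqrt(c)) * artanh(sqrt(c) * ||(-x) (+)_c y||)."""
    for p in (x, y):
        if math.sqrt(c) * math.sqrt(_dot(p, p)) >= 1.0:
            raise ValueError("point lies outside the Poincare ball")
    diff = mobius_add([-a for a in x], y, c)
    return 2.0 / math.sqrt(c) * math.atanh(math.sqrt(c) * math.sqrt(_dot(diff, diff)))


def expmap0(v: list[float], c: float) -> list[float]:
    """Map a Euclidean (tangent) vector at the origin onto the ball."""
    norm = math.sqrt(_dot(v, v))
    if norm == 0.0:
        return [0.0 for _ in v]
    factor = math.tanh(math.sqrt(c) * norm) / (math.sqrt(c) * norm)
    return [factor * a for a in v]
```

Because the ball's volume grows exponentially toward its boundary, a tree-like hierarchy can be embedded with little distortion in few dimensions, which is the motivation the abstract gives for working in hyperbolic space.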

Total Variation Regularization for Compartmental Epidemic Models with Time-varying Dynamics

Apr 01, 2020

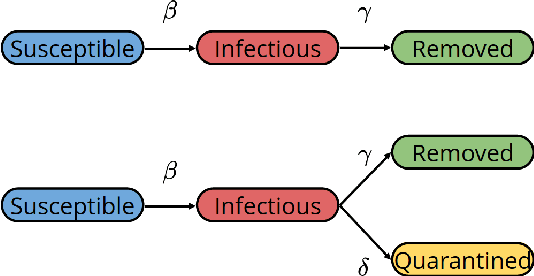

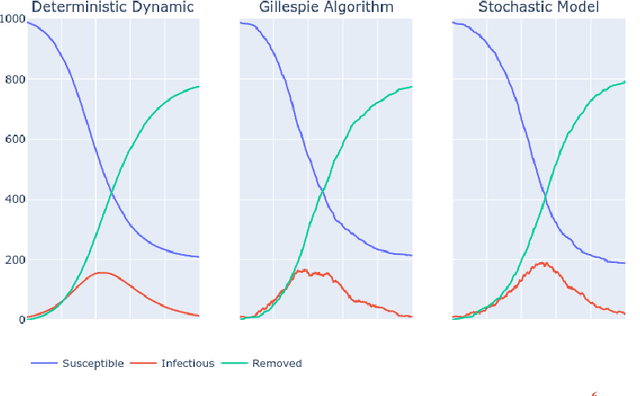

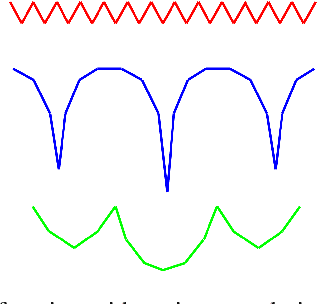

Abstract:Traditional methods for inferring compartmental epidemic models with time-varying dynamics can only capture continuous changes in the dynamics. However, many changes are discontinuous because of sudden interventions, such as city lockdowns and the opening of field hospitals. To model these discontinuities, this study introduces total variation regularization, which penalizes temporal changes in the dynamic parameters, such as the transmission rate. To recover the ground-truth dynamics, this study designs a novel yet straightforward optimization algorithm, dubbed iterative Nelder-Mead, which repeatedly applies the Nelder-Mead algorithm. Experiments on simulated data show that the proposed approach can qualitatively reproduce the discontinuities of the underlying dynamics. To extend this research to real data and to help researchers worldwide fight COVID-19, the author releases his research platform as an open-source package.
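
The regularized objective can be written as a data-fit term plus λ times the total variation of the transmission-rate sequence, Σₜ |βₜ₊₁ − βₜ|, which favours piecewise-constant β and therefore permits sudden jumps. A minimal sketch, assuming a discrete-time SIR model with a fixed recovery rate γ and a squared-error fit to observed infected fractions; these modelling choices and all names are hypothetical and do not reflect the released package's API.

```python
def simulate_sir(betas: list[float], gamma: float, s0: float, i0: float) -> list[float]:
    """Discrete-time SIR (population fractions) with a per-step transmission rate."""
    s, i = s0, i0
    infected = [i]
    for beta in betas:
        new_infections = beta * s * i
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        infected.append(i)
    return infected


def total_variation(values: list[float]) -> float:
    """Sum of absolute differences between consecutive values."""
    return sum(abs(b - a) for a, b in zip(values, values[1:]))


def tv_objective(betas: list[float], observed: list[float], gamma: float,
                 s0: float, i0: float, lam: float) -> float:
    """Squared-error fit to observed infected fractions plus lam * TV(betas)."""
    predicted = simulate_sir(betas, gamma, s0, i0)
    misfit = sum((p - o) ** 2 for p, o in zip(predicted, observed))
    return misfit + lam * total_variation(betas)
```

Such an objective could be passed to a derivative-free optimizer, for example `scipy.optimize.minimize(..., method="Nelder-Mead")`; the paper's iterative Nelder-Mead scheme itself is not reproduced here.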

$hv$-Block Cross Validation is not a BIBD: a Note on the Paper by Jeff Racine

Oct 20, 2019

Abstract:This note corrects a mistake in the paper "Consistent cross-validatory model-selection for dependent data: $hv$-block cross-validation" by Racine (2000). In that paper, Racine implied that the proposed $hv$-block cross-validation is consistent in the sense of Shao (1993). This intuition relied on the conjecture that $hv$-block cross-validation is a balanced incomplete block design (BIBD). This note demonstrates that this is not the case, and thus the theoretical consistency of $hv$-block cross-validation remains an open question. In addition, I provide a Python program that counts the number of occurrences of each sample and each pair of samples.
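
The note's own Python program is not reproduced here. As an independent sketch, assuming Racine's layout (for each centre i, a validation block of the 2v+1 observations from i−v to i+v, with a further h observations removed on each side before training) and using only centres whose validation block fits inside the sample, the code below counts how often each observation and each pair of observations appear across a collection of blocks. A BIBD requires both counts to be constant; the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def hv_block_sets(n: int, h: int, v: int) -> list[tuple[set[int], set[int]]]:
    """(validation, training) index sets for hv-block CV on observations 0..n-1."""
    sets = []
    for i in range(v, n - v):
        validation = set(range(i - v, i + v + 1))
        # Remove the validation block plus h observations on either side of it.
        removed = set(range(max(0, i - v - h), min(n, i + v + h + 1)))
        sets.append((validation, set(range(n)) - removed))
    return sets


def occurrence_counts(blocks: list[set[int]], n: int) -> tuple[list[int], dict[tuple[int, int], int]]:
    """How often each sample and each unordered pair of samples appears in the blocks."""
    singles: Counter = Counter()
    pairs: Counter = Counter()
    for block in blocks:
        singles.update(block)
        pairs.update(combinations(sorted(block), 2))
    single_counts = [singles[k] for k in range(n)]
    pair_counts = {p: pairs[p] for p in combinations(range(n), 2)}
    return single_counts, pair_counts


def is_balanced(single_counts: list[int], pair_counts: dict[tuple[int, int], int]) -> bool:
    """BIBD-style balance: every sample and every pair occur equally often."""
    return len(set(single_counts)) == 1 and len(set(pair_counts.values())) == 1
```

With n = 10 and h = v = 1, the first observation appears in one validation block while a middle observation appears in three, so neither the validation blocks nor the training sets are balanced; by contrast, the three 2-subsets of {0, 1, 2} do form a balanced design.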

A Distributed Frank-Wolfe Framework for Learning Low-Rank Matrices with the Trace Norm

May 11, 2018

Abstract:We consider the problem of learning a high-dimensional but low-rank matrix from a large-scale dataset distributed over several machines, where low-rankness is enforced by a convex trace norm constraint. We propose DFW-Trace, a distributed Frank-Wolfe algorithm which leverages the low-rank structure of its updates to achieve efficiency in time, memory and communication usage. The step at the heart of DFW-Trace is solved approximately using a distributed version of the power method. We provide a theoretical analysis of the convergence of DFW-Trace, showing that we can ensure sublinear convergence in expectation to an optimal solution with few power iterations per epoch. We implement DFW-Trace in the Apache Spark distributed programming framework and validate the usefulness of our approach on synthetic and real data, including the ImageNet dataset with high-dimensional features extracted from a deep neural network.
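
The structural fact DFW-Trace exploits is that the Frank-Wolfe linear subproblem over a trace-norm ball, min over ‖S‖_* ≤ δ of ⟨∇f(W), S⟩, is solved by the rank-one matrix S = −δ u₁v₁ᵀ, where (u₁, v₁) is the top singular pair of the gradient, which a power method can approximate. The single-machine sketch below shows that step on a hypothetical least-squares objective f(W) = ½‖W − Y‖²_F, with the classic step size 2/(t + 2) and a duality-gap stopping test. The distributed power method, the Spark implementation, and the losses used in the paper are not shown.

```python
import math
import random

Matrix = list[list[float]]


def matvec(A: Matrix, x: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A: Matrix, y: list[float]) -> list[float]:
    """Compute A^T y."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def normalize(x: list[float]) -> tuple[list[float], float]:
    norm = math.sqrt(sum(c * c for c in x))
    if norm == 0.0:
        return x, 0.0
    return [c / norm for c in x], norm


def top_singular_pair(G: Matrix, n_iter: int = 50, seed: int = 0):
    """Power method on G^T G; returns (u, sigma, v) with G v ~= sigma * u."""
    rng = random.Random(seed)
    v, _ = normalize([rng.gauss(0.0, 1.0) for _ in range(len(G[0]))])
    for _ in range(n_iter):
        u, _ = normalize(matvec(G, v))
        v, _ = normalize(rmatvec(G, u))
    u, sigma = normalize(matvec(G, v))
    return u, sigma, v


def frank_wolfe_trace(Y: Matrix, delta: float, n_steps: int = 100, tol: float = 1e-10) -> Matrix:
    """Minimize 0.5 * ||W - Y||_F^2 subject to ||W||_* <= delta."""
    m, n = len(Y), len(Y[0])
    W = [[0.0] * n for _ in range(m)]
    for t in range(n_steps):
        G = [[W[i][j] - Y[i][j] for j in range(n)] for i in range(m)]  # gradient
        u, sigma, v = top_singular_pair(G)
        # Duality gap <G, W - S> with S = -delta * u v^T equals <G, W> + delta * sigma.
        gap = sum(G[i][j] * W[i][j] for i in range(m) for j in range(n)) + delta * sigma
        if gap <= tol:
            break
        gamma = 2.0 / (t + 2.0)
        # Convex combination with the rank-one LMO solution S = -delta * u v^T.
        W = [[(1.0 - gamma) * W[i][j] - gamma * delta * u[i] * v[j]
              for j in range(n)] for i in range(m)]
    return W
```

Each iteration adds at most one rank-one term, so after t steps the iterate has rank at most t; this is what makes time, memory, and communication cheap when the updates are kept in factored form.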

Toward a Better Understanding of Leaderboard

Jun 07, 2017

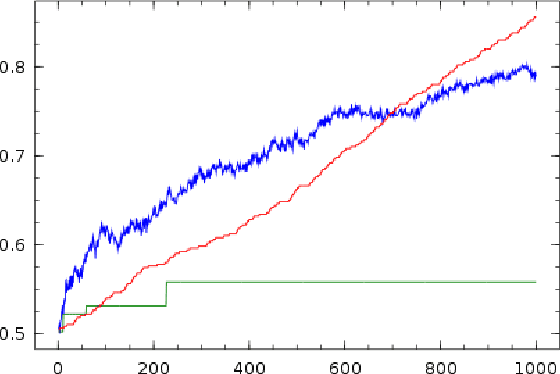

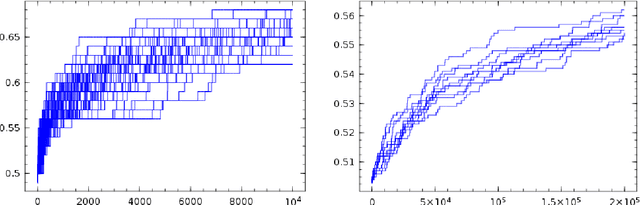

Abstract:The leaderboard in machine learning competitions is a tool to show the performance of participants and to compare them. However, the leaderboard can quickly become inaccurate because of hacking or overfitting. This article gives two pieces of advice to prevent easy hacking and overfitting. Following this advice, we reach the conclusion that something like the Ladder leaderboard introduced in [blum2015ladder] is inevitable. With this understanding, we naturally simplify Ladder by eliminating its redundant computation and explain how to choose its parameter and how to interpret it. We also prove that the sample complexity is cubic in the desired precision of the leaderboard.
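
For reference, the Ladder mechanism of Blum and Hardt releases a new score only when a submission beats the currently released score by more than a threshold η, and it rounds released scores to a multiple of η. The sketch below follows that original description, with losses where lower is better; it is not the simplified version proposed in this article, whose details the abstract does not give, and the function name is hypothetical.

```python
import math


def ladder(losses: list[float], eta: float) -> list[float]:
    """Leaderboard scores released by the Ladder mechanism (lower loss is better)."""
    released = math.inf
    board = []
    for loss in losses:
        # Only an improvement of more than eta over the released score is published.
        if loss < released - eta:
            released = round(loss / eta) * eta  # round to the nearest multiple of eta
        board.append(released)
    return board
```

Small fluctuations, such as those produced by probing the holdout set with slightly perturbed submissions, leave the released score unchanged, which is what limits how much information the leaderboard leaks.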

Two Differentially Private Rating Collection Mechanisms for Recommender Systems

Apr 28, 2016

Abstract:We design two mechanisms for recommender systems to collect user ratings: a modified Laplace mechanism and a randomized response mechanism. We prove that both are differentially private and preserve data utility.
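
As background for the two mechanisms, the sketch below shows their textbook forms for a rating on a discrete scale: k-ary randomized response, which reports the true rating with probability e^ε / (e^ε + k − 1) and each other rating with probability 1 / (e^ε + k − 1), and the plain Laplace mechanism with sensitivity equal to the rating range, followed by clipping (post-processing, so privacy is preserved). The paper's modification of the Laplace mechanism is not given in the abstract and is not reproduced; the rating scale and function names are hypothetical.

```python
import math
import random


def rr_distribution(true_rating: int, ratings: list[int], epsilon: float) -> dict[int, float]:
    """Output distribution of k-ary randomized response for one true rating."""
    denom = math.exp(epsilon) + len(ratings) - 1
    return {r: (math.exp(epsilon) if r == true_rating else 1.0) / denom for r in ratings}


def randomized_response(true_rating: int, ratings: list[int], epsilon: float, rng=random) -> int:
    """Sample a reported rating from the randomized-response distribution."""
    dist = rr_distribution(true_rating, ratings, epsilon)
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]


def laplace_rating(true_rating: float, epsilon: float, low: float = 1.0,
                   high: float = 5.0, rng=random) -> float:
    """Textbook Laplace mechanism with sensitivity (high - low), then clipping."""
    scale = (high - low) / epsilon
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return min(high, max(low, true_rating + noise))
```

For randomized response, the ratio of the probabilities of any reported rating under two different true ratings is at most e^ε, which is exactly the ε-differential-privacy condition.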