Vijay Saraswat

Xerox PARC

Handling tree-structured text: parsing directory pages

Nov 24, 2021

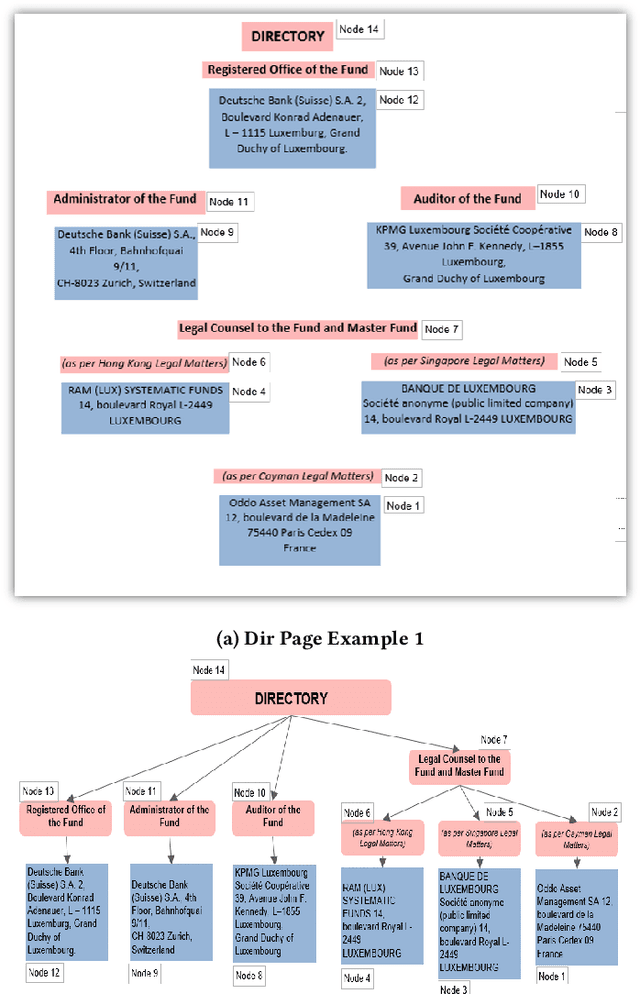

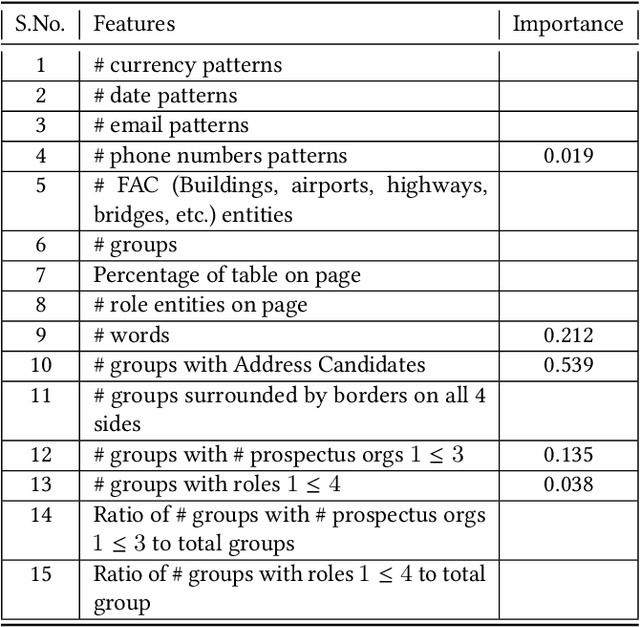

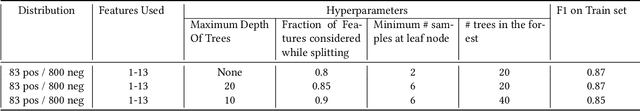

Abstract:The determination of the reading sequence of text is fundamental to document understanding. This problem is easily solved in pages where the text is organized into a sequence of lines and vertical alignment runs the height of the page (producing multiple columns which can be read from left to right). We present a situation -- the directory page parsing problem -- where information is presented on the page in an irregular, visually-organized, two-dimensional format. Directory pages are fairly common in financial prospectuses and carry information about organizations, their addresses and relationships that is key to business tasks in client onboarding. Interestingly, directory pages sometimes have hierarchical structure, motivating the need to generalize the reading sequence to a reading tree. We present solutions to the problem of identifying directory pages and constructing the reading tree, using (learnt) classifiers for text segments and a bottom-up (right to left, bottom-to-top) traversal of segments. The solution is a key part of a production service supporting automatic extraction of organization, address and relationship information from client onboarding documents.

KGPool: Dynamic Knowledge Graph Context Selection for Relation Extraction

Jun 06, 2021

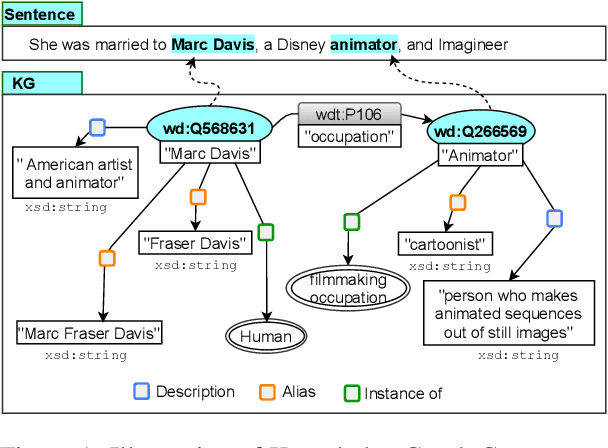

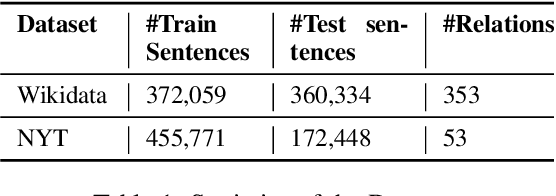

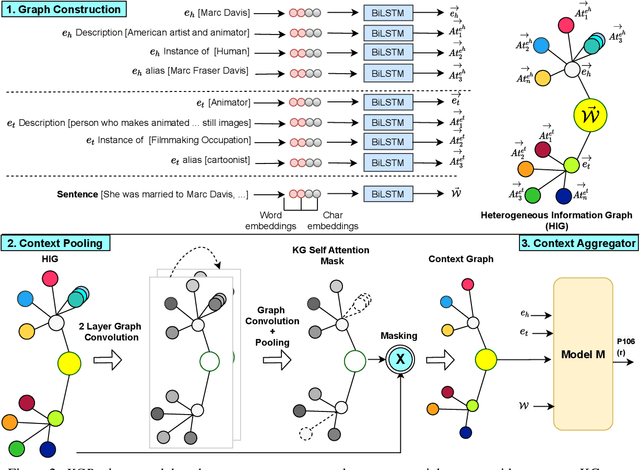

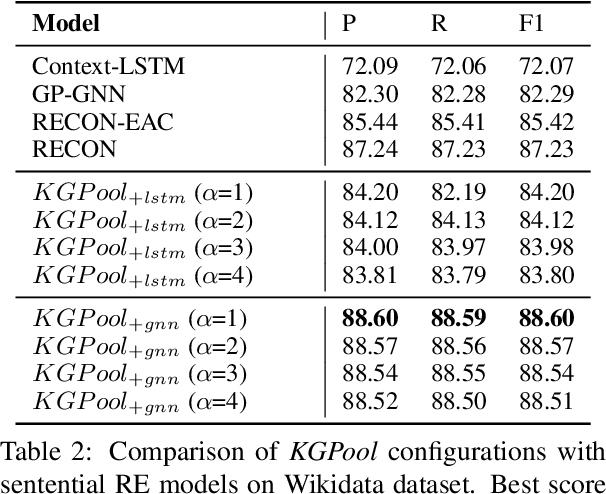

Abstract:We present a novel method for relation extraction (RE) from a single sentence, mapping the sentence and two given entities to a canonical fact in a knowledge graph (KG). Especially in this presumed sentential RE setting, the context of a single sentence is often sparse. This paper introduces the KGPool method to address this sparsity, dynamically expanding the context with additional facts from the KG. It learns the representation of these facts (entity alias, entity descriptions, etc.) using neural methods, supplementing the sentential context. Unlike existing methods that statically use all expanded facts, KGPool conditions this expansion on the sentence. We study the efficacy of KGPool by evaluating it with different neural models and KGs (Wikidata and NYT Freebase). Our experimental evaluation on standard datasets shows that by feeding the KGPool representation into a Graph Neural Network, the overall method is significantly more accurate than state-of-the-art methods.

Learning an Executable Neural Semantic Parser

Aug 12, 2018Abstract:This paper describes a neural semantic parser that maps natural language utterances onto logical forms which can be executed against a task-specific environment, such as a knowledge base or a database, to produce a response. The parser generates tree-structured logical forms with a transition-based approach which combines a generic tree-generation algorithm with domain-general operations defined by the logical language. The generation process is modeled by structured recurrent neural networks, which provide a rich encoding of the sentential context and generation history for making predictions. To tackle mismatches between natural language and logical form tokens, various attention mechanisms are explored. Finally, we consider different training settings for the neural semantic parser, including a fully supervised training where annotated logical forms are given, weakly-supervised training where denotations are provided, and distant supervision where only unlabeled sentences and a knowledge base are available. Experiments across a wide range of datasets demonstrate the effectiveness of our parser.

Learning Structured Natural Language Representations for Semantic Parsing

Jun 14, 2017

Abstract:We introduce a neural semantic parser that converts natural language utterances to intermediate representations in the form of predicate-argument structures, which are induced with a transition system and subsequently mapped to target domains. The semantic parser is trained end-to-end using annotated logical forms or their denotations. We obtain competitive results on various datasets. The induced predicate-argument structures shed light on the types of representations useful for semantic parsing and how these are different from linguistically motivated ones.

Logical Conditional Preference Theories

Apr 24, 2015

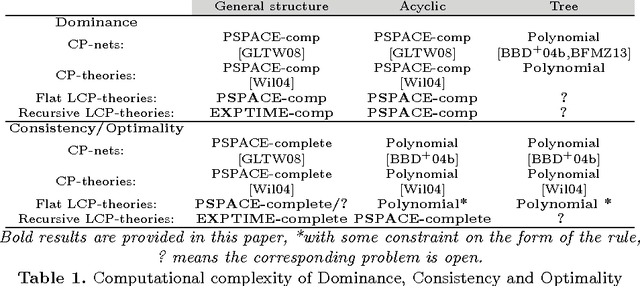

Abstract:CP-nets represent the dominant existing framework for expressing qualitative conditional preferences between alternatives, and are used in a variety of areas including constraint solving. Over the last fifteen years, a significant literature has developed exploring semantics, algorithms, implementation and use of CP-nets. This paper introduces a comprehensive new framework for conditional preferences: logical conditional preference theories (LCP theories). To express preferences, the user specifies arbitrary (constraint) Datalog programs over a binary ordering relation on outcomes. We show how LCP theories unify and generalize existing conditional preference proposals, and leverage the rich semantic, algorithmic and implementation frameworks of Datalog.

Quantifiers, Anaphora, and Intensionality

Apr 29, 1995

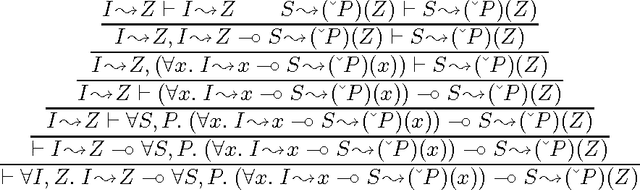

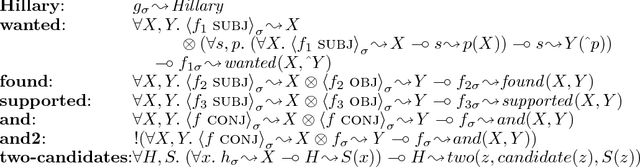

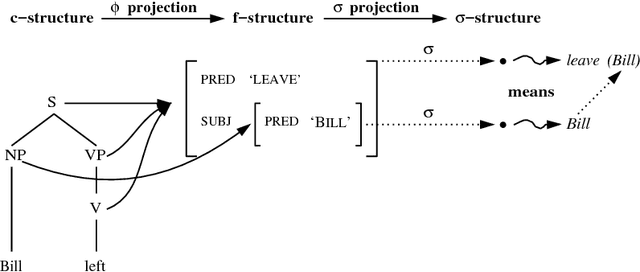

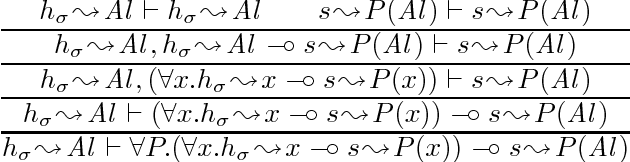

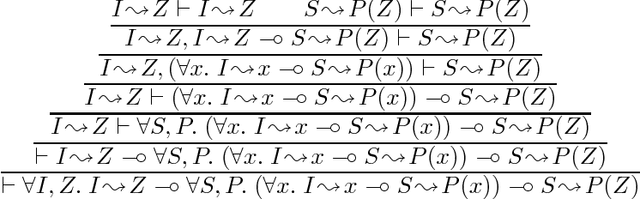

Abstract:The relationship between Lexical-Functional Grammar (LFG) {\em functional structures} (f-structures) for sentences and their semantic interpretations can be expressed directly in a fragment of linear logic in a way that correctly explains the constrained interactions between quantifier scope ambiguity, bound anaphora and intensionality. This deductive approach to semantic interpretaion obviates the need for additional mechanisms, such as Cooper storage, to represent the possible scopes of a quantified NP, and explains the interactions between quantified NPs, anaphora and intensional verbs such as `seek'. A single specification in linear logic of the argument requirements of intensional verbs is sufficient to derive the correct reading predictions for intensional-verb clauses both with nonquantified and with quantified direct objects. In particular, both de dicto and de re readings are derived for quantified objects. The effects of type-raising or quantifying-in rules in other frameworks here just follow as linear-logic theorems. While our approach resembles current categorial approaches in important ways, it differs from them in allowing the greater type flexibility of categorial semantics while maintaining a precise connection to syntax. As a result, we are able to provide derivations for certain readings of sentences with intensional verbs and complex direct objects that are not derivable in current purely categorial accounts of the syntax-semantics interface.

Linear Logic for Meaning Assembly

Apr 18, 1995Abstract:Semantic theories of natural language associate meanings with utterances by providing meanings for lexical items and rules for determining the meaning of larger units given the meanings of their parts. Meanings are often assumed to combine via function application, which works well when constituent structure trees are used to guide semantic composition. However, we believe that the functional structure of Lexical-Functional Grammar is best used to provide the syntactic information necessary for constraining derivations of meaning in a cross-linguistically uniform format. It has been difficult, however, to reconcile this approach with the combination of meanings by function application. In contrast to compositional approaches, we present a deductive approach to assembling meanings, based on reasoning with constraints, which meshes well with the unordered nature of information in the functional structure. Our use of linear logic as a `glue' for assembling meanings allows for a coherent treatment of the LFG requirements of completeness and coherence as well as of modification and quantification.

* 19 pages, uses lingmacros.sty, fullname.sty, tree-dvips.sty, latexsym.sty, requires the new version of Latex

The Semantics of Resource Sharing in Lexical-Functional Grammar

Feb 13, 1995

Abstract:We argue that the resource sharing that is commonly manifest in semantic accounts of coordination is instead appropriately handled in terms of structure-sharing in LFG f-structures. We provide an extension to the previous account of LFG semantics (Dalrymple et al., 1993b) according to which dependencies between f-structures are viewed as resources; as a result a one-to-one correspondence between uses of f-structures and meanings is maintained. The resulting system is sufficiently restricted in cases where other approaches overgenerate; the very property of resource-sensitivity for which resource sharing appears to be problematic actually provides explanatory advantages over systems that more freely replicate resources during derivation.

* 8 pages, to appear in EACL-95. Requires eaclap.sty, tree-dvips.sty, tree-dvips.pro, lingmacros.sty, dgmacros.tex, lfgmacros.tex. Comments welcome.

Intensional Verbs Without Type-Raising or Lexical Ambiguity

Aug 22, 1994

Abstract:We present an analysis of the semantic interpretation of intensional verbs such as seek that allows them to take direct objects of either individual or quantifier type, producing both de dicto and de re readings in the quantifier case, all without needing to stipulate type-raising or quantifying-in rules. This simple account follows directly from our use of logical deduction in linear logic to express the relationship between syntactic structures and meanings. While our analysis resembles current categorial approaches in important ways, it differs from them in allowing the greater type flexibility of categorial semantics while maintaining a precise connection to syntax. As a result, we are able to provide derivations for certain readings of sentences with intensional verbs and complex direct objects that are not derivable in current purely categorial accounts of the syntax-semantics interface. The analysis forms a part of our ongoing work on semantic interpretation within the framework of Lexical-Functional Grammar.

A Deductive Account of Quantification in LFG

May 27, 1994Abstract:The relationship between Lexical-Functional Grammar (LFG) functional structures (f-structures) for sentences and their semantic interpretations can be expressed directly in a fragment of linear logic in a way that explains correctly the constrained interactions between quantifier scope ambiguity and bound anaphora. The use of a deductive framework to account for the compositional properties of quantifying expressions in natural language obviates the need for additional mechanisms, such as Cooper storage, to represent the different scopes that a quantifier might take. Instead, the semantic contribution of a quantifier is recorded as an ordinary logical formula, one whose use in a proof will establish the scope of the quantifier. The properties of linear logic ensure that each quantifier is scoped exactly once. Our analysis of quantifier scope can be seen as a recasting of Pereira's analysis (Pereira, 1991), which was expressed in higher-order intuitionistic logic. But our use of LFG and linear logic provides a much more direct and computationally more flexible interpretation mechanism for at least the same range of phenomena. We have developed a preliminary Prolog implementation of the linear deductions described in this work.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge