Tran Cao Son

New Mexico State University

xDNN(ASP): Explanation Generation System for Deep Neural Networks powered by Answer Set Programming

Jan 07, 2026

Abstract:Explainable artificial intelligence (xAI) has gained significant attention in recent years. Among other things, explainability for deep neural networks has been a topic of intensive research due to the meteoric rise in prominence of deep neural networks and their "black-box" nature. xAI approaches can be characterized along different dimensions such as their scope (global versus local explanations) or underlying methodologies (statistic-based versus rule-based strategies). Methods generating global explanations aim to provide a reasoning process applicable to all possible output classes, while local explanation methods focus only on a single, specific class. SHAP (SHapley Additive exPlanations), a well-known statistical technique, identifies important features of a network. Deep neural network rule extraction methods construct IF-THEN rules that link input conditions to a class. Another approach focuses on generating counterfactuals, which help explain how small changes to an input can affect the model's predictions. However, these techniques primarily focus on the input-output relationship and thus neglect the structure of the network in explanation generation. In this work, we propose xDNN(ASP), an explanation generation system for deep neural networks that provides global explanations. Given a neural network model and its training data, xDNN(ASP) extracts a logic program under answer set semantics that, in the ideal case, represents the trained model, i.e., answer sets of the extracted program correspond one-to-one to input-output pairs of the network. We demonstrate experimentally, using two synthetic datasets, that the extracted logic program not only maintains a high level of accuracy in the prediction task but also provides valuable information for understanding the model, such as the importance of features and the impact of hidden nodes on the prediction. The latter can be used as a guide for reducing the number of nodes used in hidden layers, i.e., providing a means for optimizing the network.

* In Proceedings ICLP 2025, arXiv:2601.00047

An LLM + ASP Workflow for Joint Entity-Relation Extraction

Aug 18, 2025

Abstract:Joint entity-relation extraction (JERE) identifies both entities and their relationships simultaneously. Traditional machine-learning-based approaches to this task require a large corpus of annotated data and cannot easily incorporate domain-specific information in the construction of the model. Therefore, creating a model for JERE is often labor intensive, time consuming, and elaboration intolerant. In this paper, we propose harnessing the capabilities of generative pretrained large language models (LLMs) and the knowledge representation and reasoning capabilities of Answer Set Programming (ASP) to perform JERE. We present a generic workflow for JERE using LLMs and ASP. The workflow is generic in the sense that it can be applied to JERE in any domain. It takes advantage of LLMs' capability in natural language understanding in that it works directly with unannotated text. It exploits the elaboration-tolerant feature of ASP in that no modification of its core program is required when additional domain-specific knowledge, in the form of type specifications, is found and needs to be used. We demonstrate the usefulness of the proposed workflow through experiments with limited training data on three well-known benchmarks for JERE. The results of our experiments show that the LLM + ASP workflow is better than state-of-the-art JERE systems in several categories with only 10% of training data. It is able to achieve a 2.5 times (35% over 15%) improvement in the Relation Extraction task for the SciERC corpus, one of the most difficult benchmarks.

A Methodology for Gradual Semantics for Structured Argumentation under Incomplete Information

Oct 29, 2024

Abstract:Gradual semantics have demonstrated great potential in argumentation, in particular for deploying quantitative bipolar argumentation frameworks (QBAFs) in a number of real-world settings, from judgmental forecasting to explainable AI. In this paper, we provide a novel methodology for obtaining gradual semantics for structured argumentation frameworks, where the building blocks of arguments and relations between them are known, unlike in QBAFs, where arguments are abstract entities. Unlike existing approaches, our methodology accommodates incomplete information about arguments' premises. We demonstrate the potential of our approach by introducing two different instantiations of the methodology, leveraging existing gradual semantics for QBAFs in these more complex frameworks. We also define a set of novel properties for gradual semantics in structured argumentation and discuss their suitability over a set of existing properties. Finally, we provide a comprehensive theoretical analysis assessing the instantiations, demonstrating their advantages over existing gradual semantics for QBAFs and structured argumentation.

UnSeenTimeQA: Time-Sensitive Question-Answering Beyond LLMs' Memorization

Jul 03, 2024

Abstract:This paper introduces UnSeenTimeQA, a novel time-sensitive question-answering (TSQA) benchmark that diverges from traditional TSQA benchmarks by avoiding factual and web-searchable queries. We present a series of time-sensitive event scenarios decoupled from real-world factual information. The benchmark requires large language models (LLMs) to engage in genuine temporal reasoning, independent of the knowledge acquired during the pre-training phase. Our evaluation of six open-source LLMs (ranging from 2B to 70B in size) and three closed-source LLMs reveals that the questions from UnSeenTimeQA present substantial challenges. This indicates the models' difficulties in handling complex temporal reasoning scenarios. Additionally, we present several analyses shedding light on the models' performance in answering time-sensitive questions.

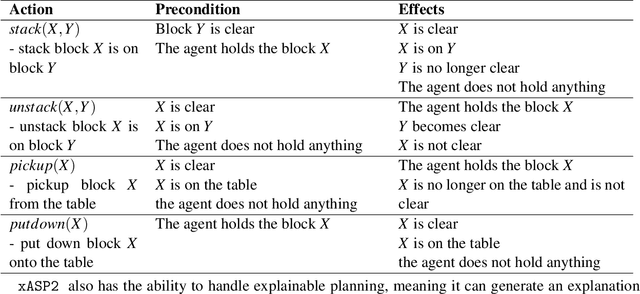

ActionReasoningBench: Reasoning about Actions with and without Ramification Constraints

Jun 06, 2024

Abstract:Reasoning about actions and change (RAC) has historically driven the development of many early AI challenges, such as the frame problem, and many AI disciplines, including non-monotonic and commonsense reasoning. The role of RAC remains important even now, particularly for tasks involving dynamic environments, interactive scenarios, and commonsense reasoning. Despite the progress of Large Language Models (LLMs) in various AI domains, their performance on RAC is underexplored. To address this gap, we introduce a new benchmark, ActionReasoningBench, encompassing 13 domains and rigorously evaluating LLMs across eight different areas of RAC: Object Tracking, Fluent Tracking, State Tracking, Action Executability, Effects of Actions, Numerical RAC, Hallucination Detection, and Composite Questions. Furthermore, we also investigate the indirect effect of actions due to ramification constraints for every domain. Finally, we evaluate our benchmark using open-source and commercial state-of-the-art LLMs, including GPT-4o, Gemini-1.0-Pro, Llama2-7b-chat, Llama2-13b-chat, Llama3-8b-instruct, Gemma-2b-instruct, and Gemma-7b-instruct. Our findings indicate that these models face significant challenges across all categories included in our benchmark.

On Generating Monolithic and Model Reconciling Explanations in Probabilistic Scenarios

May 29, 2024

Abstract:Explanation generation frameworks aim to make AI systems' decisions transparent and understandable to human users. However, generating explanations in uncertain environments characterized by incomplete information and probabilistic models remains a significant challenge. In this paper, we propose a novel framework for generating probabilistic monolithic explanations and model reconciling explanations. Monolithic explanations provide self-contained reasons for an explanandum without considering the agent receiving the explanation, while model reconciling explanations account for the knowledge of the agent receiving the explanation. For monolithic explanations, our approach integrates uncertainty by utilizing probabilistic logic to increase the probability of the explanandum. For model reconciling explanations, we propose a framework that extends the logic-based variant of the model reconciliation problem to account for probabilistic human models, where the goal is to find explanations that increase the probability of the explanandum while minimizing conflicts between the explanation and the probabilistic human model. We introduce explanatory gain and explanatory power as quantitative metrics to assess the quality of these explanations. Further, we present algorithms that exploit the duality between minimal correction sets and minimal unsatisfiable sets to efficiently compute both types of explanations in probabilistic contexts. Extensive experimental evaluations on various benchmarks demonstrate the effectiveness and scalability of our approach in generating explanations under uncertainty.

Routing and Scheduling in Answer Set Programming applied to Multi-Agent Path Finding: Preliminary Report

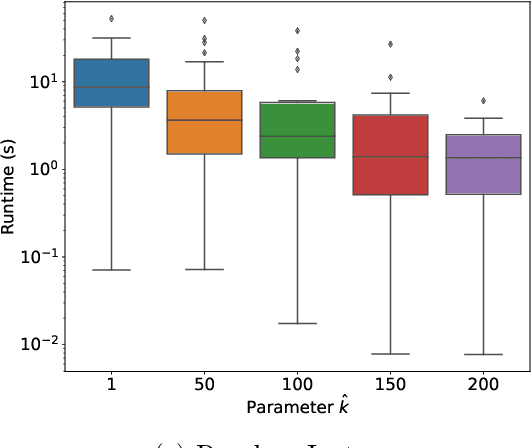

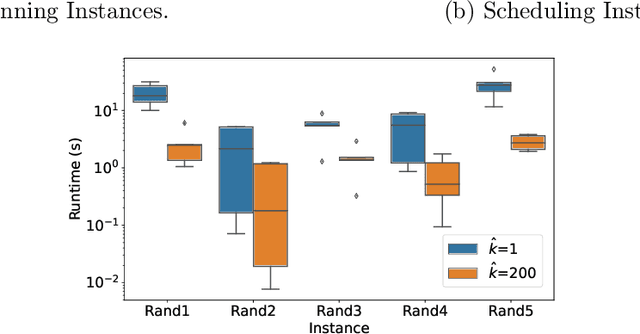

Mar 18, 2024

Abstract:We present alternative approaches to routing and scheduling in Answer Set Programming (ASP), and explore them in the context of Multi-agent Path Finding. The idea is to capture the flow of time in terms of partial orders rather than time steps attached to actions and fluents. This also abolishes the need for fixed upper bounds on the length of plans. The trade-off is that (parts of) temporal trajectories must be acyclic, since multiple occurrences of the same action or fluent can no longer be distinguished. While this approach provides an interesting alternative for modeling routing, it is the only viable option for scheduling, since fine-grained timings cannot be represented in ASP in a feasible way. This is different for partial orders, which can be efficiently handled by external means such as acyclicity and difference constraints. We formally elaborate upon this idea and present several resulting ASP encodings. Finally, we demonstrate their effectiveness via an empirical analysis.

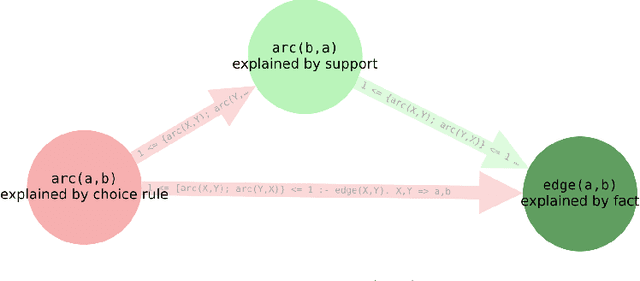

Explanations for Answer Set Programming

Aug 30, 2023

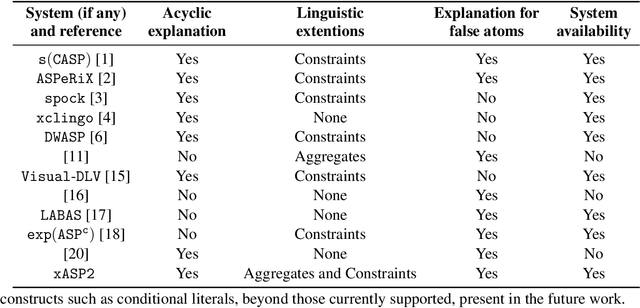

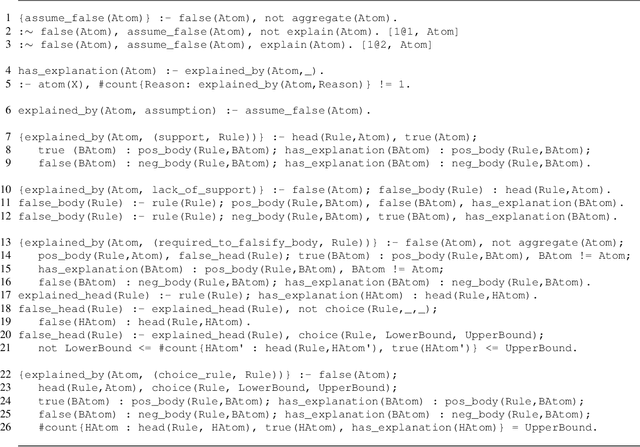

Abstract:The paper presents an enhancement of xASP, a system that generates explanation graphs for Answer Set Programming (ASP). Unlike xASP, the new system, xASP2, supports different clingo constructs such as choice rules, constraints, and aggregates such as #sum and #min. This work formalizes and presents an explainable artificial intelligence system for a broad fragment of ASP, capable of shrinking the set of assumptions as much as possible and presenting explanations in terms of directed acyclic graphs.

* In Proceedings ICLP 2023, arXiv:2308.14898

DR-HAI: Argumentation-based Dialectical Reconciliation in Human-AI Interactions

Jun 26, 2023

Abstract:We introduce DR-HAI -- a novel argumentation-based framework designed to extend model reconciliation approaches, commonly used in explainable AI planning, for enhanced human-AI interaction. By adopting a multi-shot reconciliation paradigm and not assuming a-priori knowledge of the human user's model, DR-HAI enables interactive reconciliation to address knowledge discrepancies between an explainer and an explainee. We formally describe the operational semantics of DR-HAI, provide theoretical guarantees related to termination and success, and empirically evaluate its efficacy. Our findings suggest that DR-HAI offers a promising direction for fostering effective human-AI interactions.

ASPER: Answer Set Programming Enhanced Neural Network Models for Joint Entity-Relation Extraction

May 24, 2023

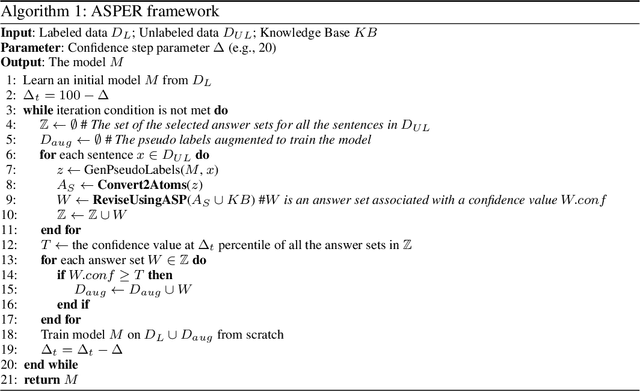

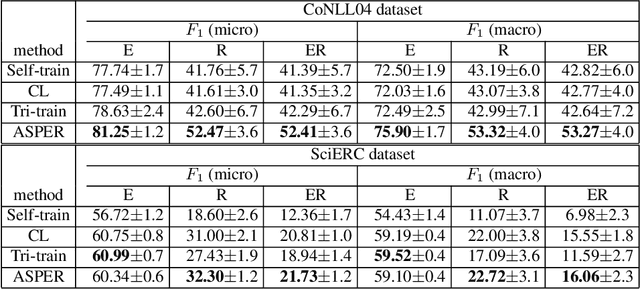

Abstract:A plethora of approaches have been proposed for joint entity-relation (ER) extraction. Most of these methods depend on a large amount of manually annotated training data. However, manual data annotation is time consuming, labor intensive, and error prone. Human beings learn using both data (through induction) and knowledge (through deduction). Answer Set Programming (ASP) has been a widely utilized approach for knowledge representation and reasoning that is elaboration tolerant and adept at reasoning with incomplete information. This paper proposes a new approach, ASP-enhanced Entity-Relation extraction (ASPER), to jointly recognize entities and relations by learning from both data and domain knowledge. In particular, ASPER takes advantage of the factual knowledge (represented as facts in ASP) and derived knowledge (represented as rules in ASP) in the learning process of neural network models. We have conducted experiments on two real datasets and compared our method with three baselines. The results show that our ASPER model consistently outperforms the baselines.
