Huiping Cao

An LLM + ASP Workflow for Joint Entity-Relation Extraction

Aug 18, 2025

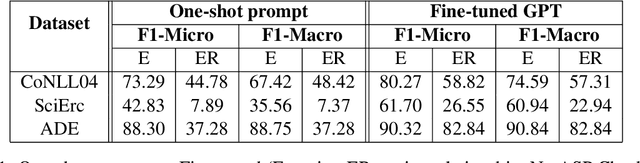

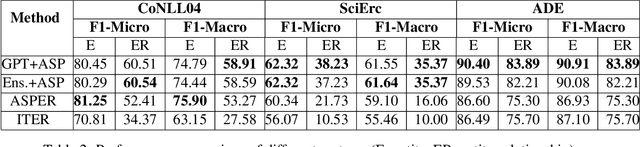

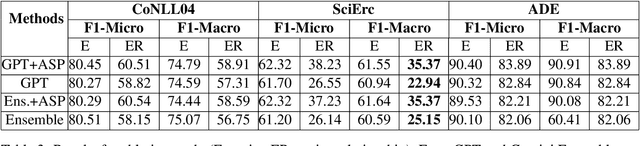

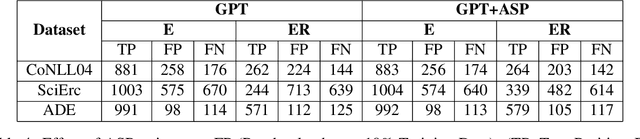

Abstract: Joint entity-relation extraction (JERE) identifies both entities and their relationships simultaneously. Traditional machine-learning-based approaches to this task require a large corpus of annotated data and cannot easily incorporate domain-specific information into the model. Creating a model for JERE is therefore often labor intensive, time consuming, and elaboration intolerant. In this paper, we propose harnessing the capabilities of generative pretrained large language models (LLMs) and the knowledge representation and reasoning capabilities of Answer Set Programming (ASP) to perform JERE. We present a generic workflow for JERE using LLMs and ASP. The workflow is generic in the sense that it can be applied to JERE in any domain. It takes advantage of LLMs' natural language understanding in that it works directly with unannotated text. It exploits the elaboration tolerance of ASP in that its core program requires no modification when additional domain-specific knowledge, in the form of type specifications, is found and needs to be used. We demonstrate the usefulness of the proposed workflow through experiments with limited training data on three well-known benchmarks for JERE. The results of our experiments show that the LLM + ASP workflow outperforms state-of-the-art JERE systems in several categories with only 10% of the training data. It achieves a 2.5 times (35% over 15%) improvement in the Relation Extraction task for the SciERC corpus, one of the most difficult benchmarks.
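The elaboration-tolerance idea in this abstract can be illustrated with a small Python sketch. It is not the authors' ASP program: the names `Entity`, `Relation`, `filter_relations`, and `type_specs`, as well as the entity types and relation labels, are hypothetical and chosen only for illustration. The sketch assumes an LLM has already proposed candidate entities and relations, and that domain knowledge arrives as type specifications stating which (head type, tail type) pairs each relation label allows.

```python
from typing import NamedTuple


class Entity(NamedTuple):
    text: str
    etype: str


class Relation(NamedTuple):
    head: str
    label: str
    tail: str


def filter_relations(entities, relations, type_specs):
    """Keep only relations whose (head type, tail type) pair is allowed for their label.

    This function plays the role of the fixed "core program": it never changes
    when new type specifications are added to `type_specs`.
    """
    etype = {e.text: e.etype for e in entities}
    kept = []
    for r in relations:
        allowed = type_specs.get(r.label)
        if allowed is None:
            continue  # assumption: labels without a specification are rejected
        if (etype.get(r.head), etype.get(r.tail)) in allowed:
            kept.append(r)
    return kept


# Hypothetical candidates, standing in for LLM output on unannotated text.
entities = [Entity("BERT", "Method"), Entity("NER", "Task"), Entity("F1", "Metric")]
relations = [
    Relation("BERT", "Used-for", "NER"),
    Relation("F1", "Used-for", "BERT"),      # violates the type specification
    Relation("F1", "Evaluate-for", "NER"),
]

# Domain knowledge is plain data, kept separate from the core logic.
type_specs = {"Used-for": {("Method", "Task")}}
first = filter_relations(entities, relations, type_specs)

# New domain knowledge is added as data; filter_relations is untouched.
type_specs["Evaluate-for"] = {("Metric", "Task")}
second = filter_relations(entities, relations, type_specs)

print(first)
print(second)
```

Keeping the type specifications as data mirrors the separation the abstract describes, where additional domain knowledge extends what the system accepts without edits to the core program.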

ASPER: Answer Set Programming Enhanced Neural Network Models for Joint Entity-Relation Extraction

May 24, 2023

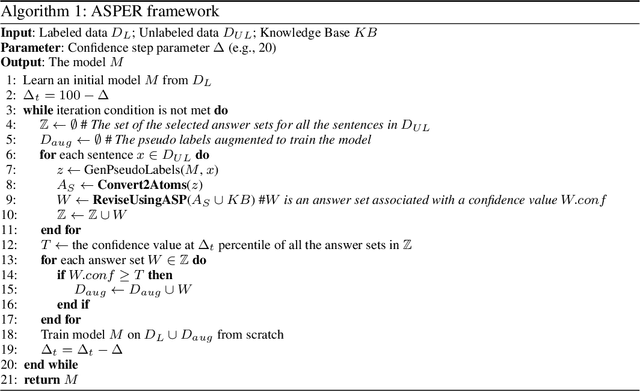

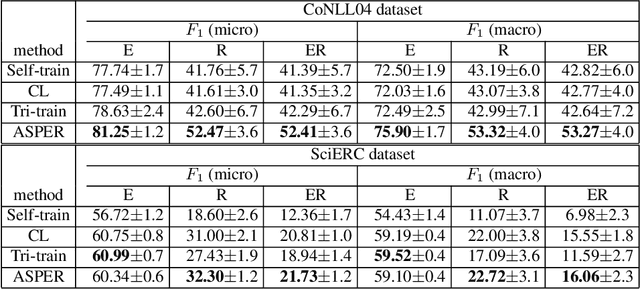

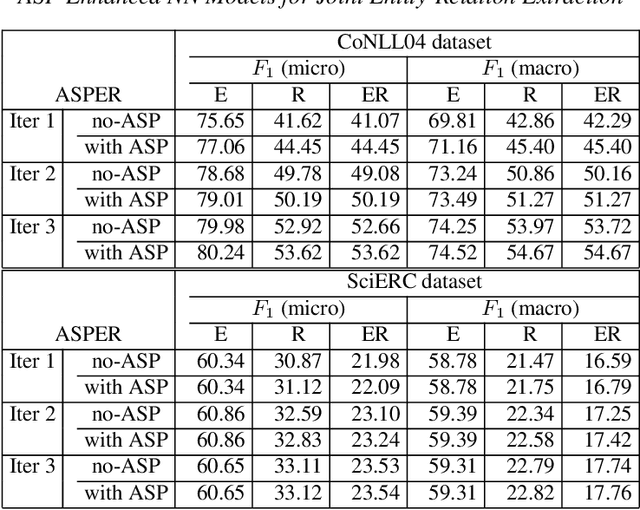

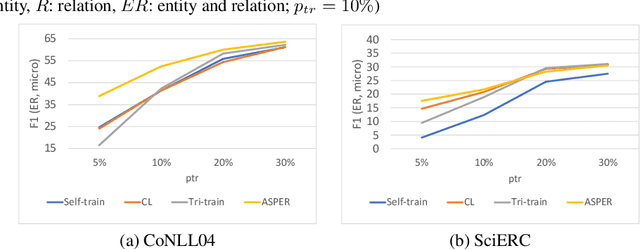

Abstract: A plethora of approaches have been proposed for joint entity-relation (ER) extraction. Most of these methods depend on a large amount of manually annotated training data. However, manual data annotation is time consuming, labor intensive, and error prone. Human beings learn using both data (through induction) and knowledge (through deduction). Answer Set Programming (ASP) is a widely used approach to knowledge representation and reasoning that is elaboration tolerant and adept at reasoning with incomplete information. This paper proposes a new approach, ASP-enhanced Entity-Relation extraction (ASPER), to jointly recognize entities and relations by learning from both data and domain knowledge. In particular, ASPER takes advantage of factual knowledge (represented as facts in ASP) and derived knowledge (represented as rules in ASP) in the learning process of neural network models. We have conducted experiments on two real datasets and compared our method with three baselines. The results show that our ASPER model consistently outperforms the baselines.

Class-Specific Attention (CSA) for Time-Series Classification

Nov 19, 2022

Abstract: Most neural network-based classifiers extract features using several hidden layers and make predictions at the output layer by utilizing these extracted features. We observe that not all features are equally pronounced in all classes; we call such features class-specific features. Existing models do not fully utilize the class-specific differences in features, as they feed all extracted features from the hidden layers equally to the output layers. Recent attention mechanisms allow giving different emphasis (or attention) to different features, but these attention models are themselves class-agnostic. In this paper, we propose a novel class-specific attention (CSA) module to capture significant class-specific features and improve the overall classification performance of time series. The CSA module is designed so that it can be adopted in existing neural network (NN) based models to conduct time series classification. In the experiments, this module is plugged into five state-of-the-art neural network models for time series classification to test its effectiveness on 40 different real datasets. Extensive experiments show that an NN model embedded with the CSA module can improve the base model in most cases, and the accuracy improvement can be up to 42%. Our statistical analysis shows that the performance of an NN model embedding the CSA module is better than the base NN model on 67% of multivariate time series (MTS) and 80% of univariate time series (UTS) test cases, and is significantly better on 11% of MTS and 13% of UTS test cases.
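The contrast between class-agnostic and class-specific attention can be made concrete with a toy Python sketch. The abstract does not specify the CSA module's design, so this is not the paper's architecture: it assumes one simple, hypothetical formulation in which each class has its own attention distribution over the shared features. The names `softmax`, `class_specific_scores`, `attention_logits`, and `class_weights`, and all numeric values, are illustrative only.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def class_specific_scores(features, attention_logits, class_weights):
    """Score each class using its own attention distribution over the features.

    A class-agnostic model would apply a single attention vector to every
    class; here each class c has its own row attention_logits[c].
    """
    scores = []
    for logits, weights in zip(attention_logits, class_weights):
        attn = softmax(logits)  # per-class attention over features
        scores.append(sum(a * w * f for a, w, f in zip(attn, weights, features)))
    return scores


# Hypothetical extracted features from the hidden layers of a base model.
features = [2.0, 0.5, 1.0]

# Class 0 attends mostly to feature 0; class 1 attends mostly to feature 2.
attention_logits = [[3.0, 0.0, 0.0], [0.0, 0.0, 3.0]]
class_weights = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

scores = class_specific_scores(features, attention_logits, class_weights)
probs = softmax(scores)
print(scores, probs)
```

With identical output weights for both classes, the scores differ only because each class emphasizes a different feature, which is the effect a class-agnostic attention vector cannot produce.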
