Tixiao Shan

SayCoNav: Utilizing Large Language Models for Adaptive Collaboration in Decentralized Multi-Robot Navigation

May 19, 2025

Abstract:Adaptive collaboration is critical for a team of autonomous robots performing complicated navigation tasks in large-scale unknown environments. An effective collaboration strategy should be determined and adapted according to each robot's skills and current status to successfully achieve the shared goal. We present SayCoNav, a new approach that leverages large language models (LLMs) to automatically generate this collaboration strategy among a team of robots. Building on the collaboration strategy, each robot uses the LLM to generate its plans and actions in a decentralized way. By sharing information with each other during navigation, the robots also continuously update their step-by-step plans. We evaluate SayCoNav on Multi-Object Navigation (MultiON) tasks, which require the team of robots to use their complementary strengths to efficiently search for multiple different objects in unknown environments. By validating SayCoNav with varied team compositions and conditions against baseline methods, our experimental results show that SayCoNav can improve search efficiency by up to 44.28% through effective collaboration among heterogeneous robots. It can also dynamically adapt to changing conditions during task execution.

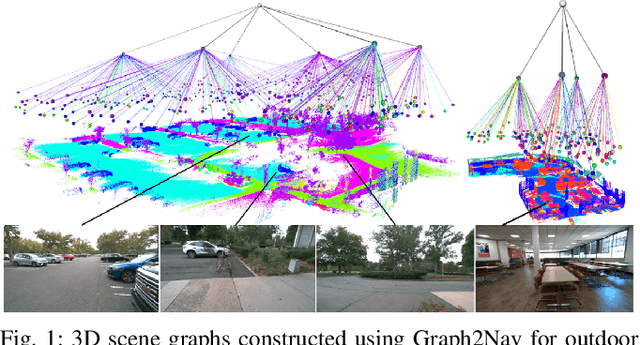

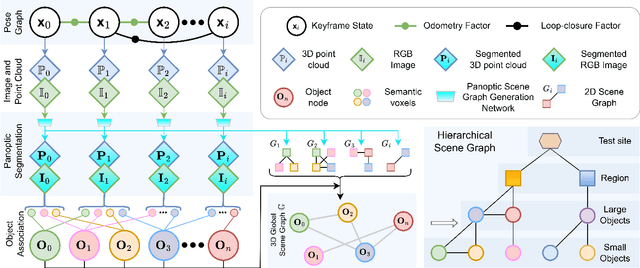

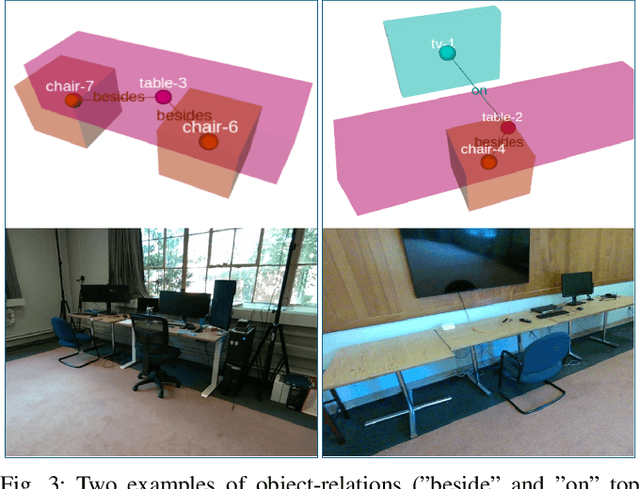

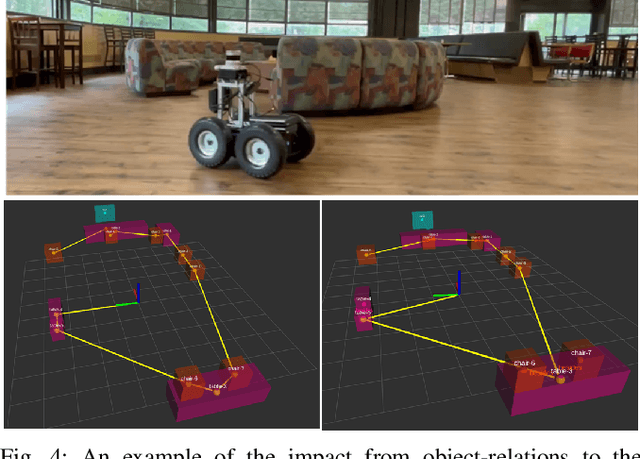

Graph2Nav: 3D Object-Relation Graph Generation to Robot Navigation

Apr 23, 2025

Abstract:We propose Graph2Nav, a real-time 3D object-relation graph generation framework for autonomous navigation in the real world. Our framework generates and exploits both 3D objects and a rich set of semantic relationships among objects in a 3D layered scene graph, which is applicable to both indoor and outdoor scenes. It learns to generate 3D semantic relations among objects by leveraging state-of-the-art 2D panoptic scene graph works and extending them into the 3D world via 3D semantic mapping techniques. This approach avoids the training data constraints that previously arose when learning 3D scene graphs directly from 3D data. We conduct experiments to validate the accuracy of locating 3D objects and labeling object relations in our 3D scene graphs. We also evaluate the impact of Graph2Nav by integrating it with SayNav, a state-of-the-art planner based on large language models, on an unmanned ground robot performing object search tasks in real environments. Our results demonstrate that modeling object relations in our scene graphs improves search efficiency in these navigation tasks.

Virtual Maps for Autonomous Exploration of Cluttered Underwater Environments

Feb 16, 2022

Abstract:We consider the problem of autonomous mobile robot exploration in an unknown environment, taking into account a robot's coverage rate, map uncertainty, and state estimation uncertainty. This paper presents a novel exploration framework for underwater robots operating in cluttered environments, built upon simultaneous localization and mapping (SLAM) with imaging sonar. The proposed system comprises path generation, place recognition forecasting, belief propagation and utility evaluation using a virtual map, which estimates the uncertainty associated with map cells throughout a robot's workspace. We evaluate the performance of this framework in simulated experiments, showing that our algorithm maintains a high coverage rate during exploration while also maintaining low mapping and localization error. The real-world applicability of our framework is also demonstrated on an underwater remotely operated vehicle (ROV) exploring a harbor environment.

Zero-Shot Reinforcement Learning on Graphs for Autonomous Exploration Under Uncertainty

May 11, 2021

Abstract:This paper studies the problem of autonomous exploration under localization uncertainty for a mobile robot with 3D range sensing. We present a framework for self-learning a high-performance exploration policy in a single simulation environment, and transferring it to other environments, which may be physical or virtual. Recent work in transfer learning achieves encouraging performance by domain adaptation and domain randomization to expose an agent to scenarios that fill the inherent gaps in sim2sim and sim2real approaches. However, it is inefficient to train an agent in environments with randomized conditions to learn the important features of its current state. An agent can use domain knowledge provided by human experts to learn efficiently. We propose a novel approach that uses graph neural networks in conjunction with deep reinforcement learning, enabling decision-making over graphs containing relevant exploration information provided by human experts to predict a robot's optimal sensing action in belief space. The policy, which is trained only in a single simulation environment, offers a real-time, scalable, and transferable decision-making strategy, resulting in zero-shot transfer to other simulation environments and even real-world environments.

LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping

Apr 22, 2021

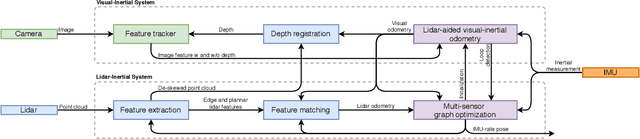

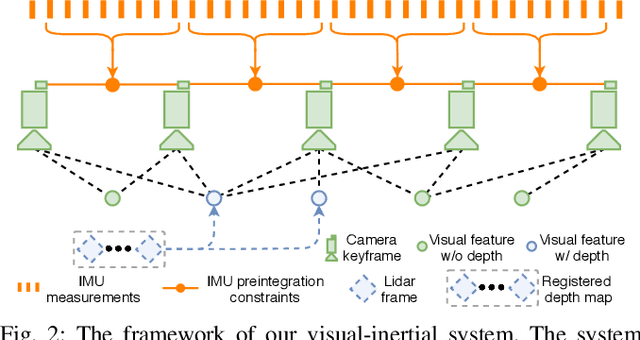

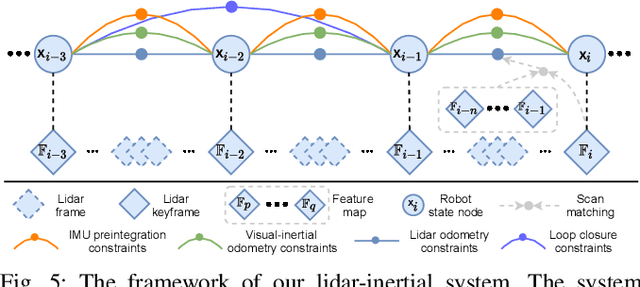

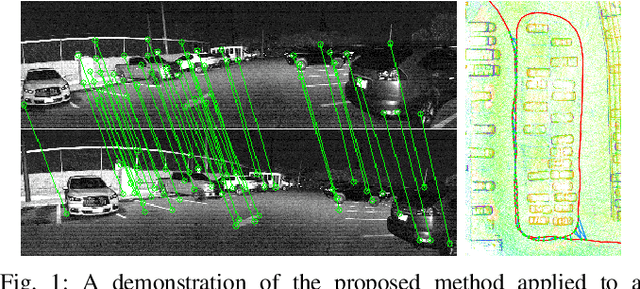

Abstract:We propose a framework for tightly-coupled lidar-visual-inertial odometry via smoothing and mapping, LVI-SAM, that achieves real-time state estimation and map-building with high accuracy and robustness. LVI-SAM is built atop a factor graph and is composed of two sub-systems: a visual-inertial system (VIS) and a lidar-inertial system (LIS). The two sub-systems are designed in a tightly-coupled manner, in which the VIS leverages LIS estimation to facilitate initialization. The accuracy of the VIS is improved by extracting depth information for visual features using lidar measurements. In turn, the LIS utilizes VIS estimation for initial guesses to support scan-matching. Loop closures are first identified by the VIS and further refined by the LIS. LVI-SAM can also function when one of the two sub-systems fails, which increases its robustness in both texture-less and feature-less environments. LVI-SAM is extensively evaluated on datasets gathered from several platforms over a variety of scales and environments. Our implementation is available at https://git.io/lvi-sam

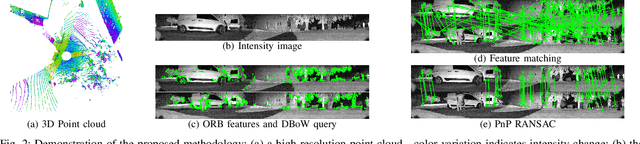

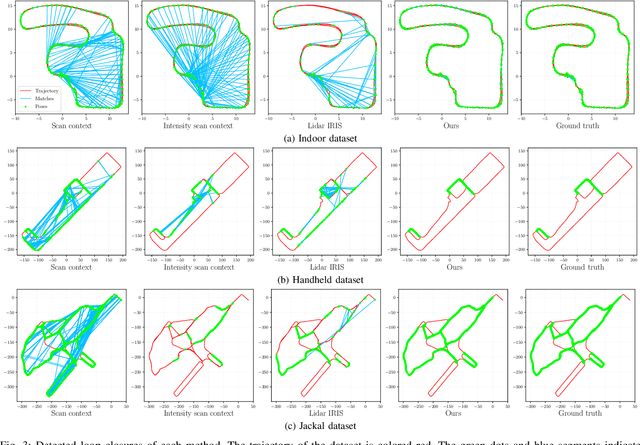

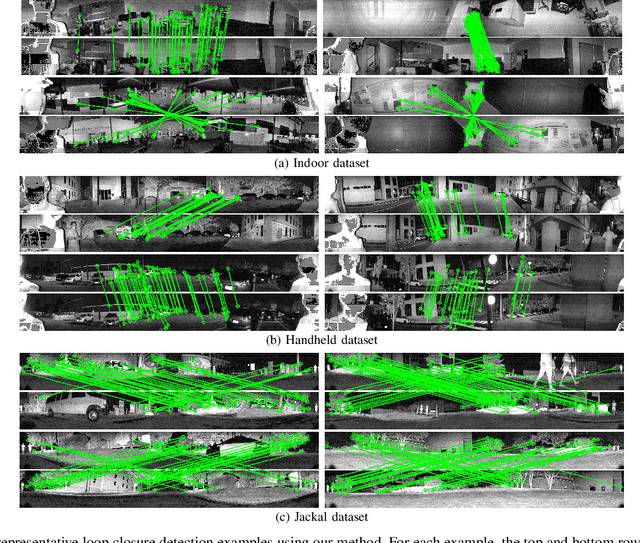

Robust Place Recognition using an Imaging Lidar

Mar 03, 2021

Abstract:We propose a methodology for robust, real-time place recognition using an imaging lidar, which yields image-quality high-resolution 3D point clouds. Utilizing the intensity readings of an imaging lidar, we project the point cloud and obtain an intensity image. ORB feature descriptors are extracted from the image and encoded into a bag-of-words vector. The vector, used to identify the point cloud, is inserted into a database that is maintained by DBoW for fast place recognition queries. The returned candidate is further validated by matching visual feature descriptors. To reject matching outliers, we apply PnP, which minimizes the reprojection error of visual features' positions in Euclidean space with their correspondences in 2D image space, using RANSAC. Combining the advantages from both camera and lidar-based place recognition approaches, our method is truly rotation-invariant and can tackle reverse revisiting and upside-down revisiting. The proposed method is evaluated on datasets gathered from a variety of platforms over different scales and environments. Our implementation is available at https://git.io/imaging-lidar-place-recognition
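The first step described above, projecting a point cloud into an intensity image, can be illustrated with a minimal sketch. This is not the authors' implementation: the spherical projection model, the image size, the vertical field of view, and the function name `project_to_intensity_image` are all assumptions chosen for illustration. The later stages (ORB extraction, bag-of-words lookup, PnP with RANSAC) are not shown.

```python
import math

def project_to_intensity_image(points, width=1024, height=128,
                               fov_up_deg=45.0, fov_down_deg=-45.0):
    """Project (x, y, z, intensity) points onto a 2D intensity image.

    Assumed model: a spherical projection where columns index azimuth
    and rows index elevation. All defaults are illustrative values.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    image = [[0.0] * width for _ in range(height)]

    for x, y, z, intensity in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue  # a point at the sensor origin has no direction
        azimuth = math.atan2(y, x)        # in [-pi, pi]
        elevation = math.asin(z / rng)    # in [-pi/2, pi/2]
        if not (fov_down <= elevation <= fov_up):
            continue  # outside the assumed vertical field of view

        # Map azimuth to a column and elevation to a row (top row = fov_up).
        col = int(0.5 * (1.0 - azimuth / math.pi) * width) % width
        row = min(height - 1,
                  int((fov_up - elevation) / (fov_up - fov_down) * height))
        image[row][col] = intensity  # last point written to a pixel wins
    return image
```

In this sketch a point straight ahead of the sensor lands in the middle of the image, and a yaw rotation of the sensor shifts the image horizontally with wrap-around.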

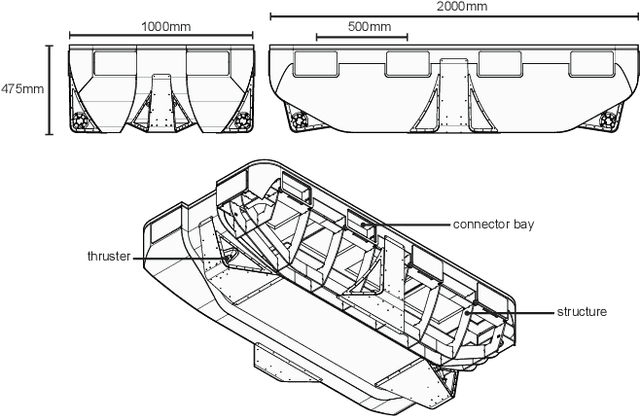

Roboat II: A Novel Autonomous Surface Vessel for Urban Environments

Aug 24, 2020

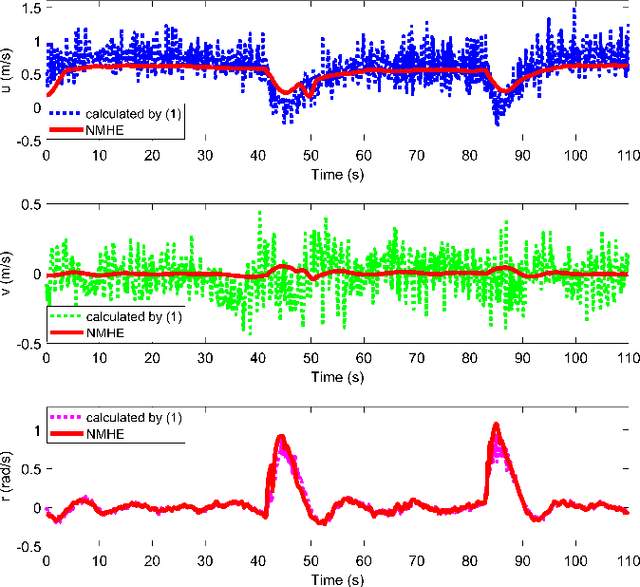

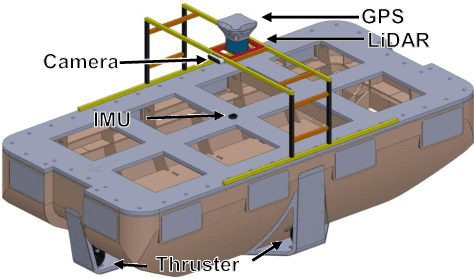

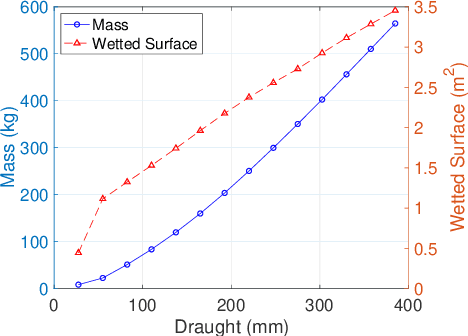

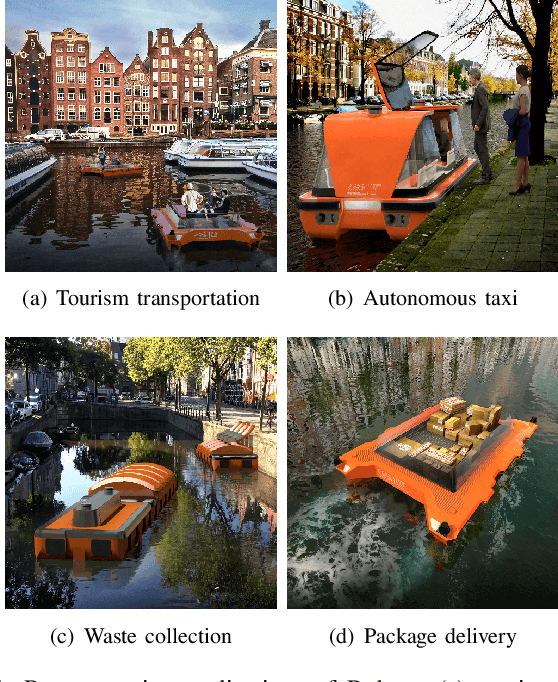

Abstract:This paper presents a novel autonomous surface vessel (ASV), called Roboat II, for urban transportation. Roboat II is capable of accurate simultaneous localization and mapping (SLAM), receding horizon tracking control and estimation, and path planning. Roboat II is designed to maximize the internal space for transport and can carry payloads several times its own weight. Moreover, it is capable of holonomic motions to facilitate transporting, docking, and inter-connectivity between boats. The proposed SLAM system receives sensor data from a 3D LiDAR, an IMU, and a GPS, and utilizes a factor graph to tackle the multi-sensor fusion problem. To cope with the complex dynamics in the water, Roboat II employs an online nonlinear model predictive controller (NMPC), for which we experimentally estimated the dynamical model of the vessel in order to achieve superior tracking control performance. The states of Roboat II are simultaneously estimated using a nonlinear moving horizon estimation (NMHE) algorithm. Experiments demonstrate that Roboat II is able to successfully perform online mapping and localization, plan its path, and robustly track the planned trajectory in a confined river, suggesting that this autonomous vessel holds promise for transporting people and goods on many of today's waterways.

A Receding Horizon Multi-Objective Planner for Autonomous Surface Vehicles in Urban Waterways

Jul 16, 2020

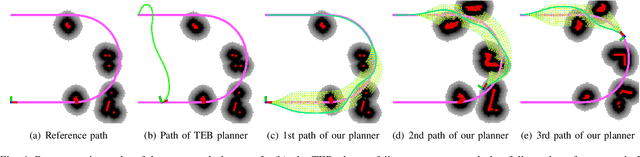

Abstract:We propose a novel receding horizon planner for autonomous surface vehicle (ASV) path planning in urban waterways. The proposed planner is lightweight, as it requires no prior map and is suitable for deployment on platforms with limited computational resources. To find a feasible path in the presence of obstacles, the planner repeatedly generates a graph, which takes the dynamic constraints of the robot into account, using a global reference path. We also propose a novel method for multi-objective motion planning over the graph by leveraging the paradigm of lexicographic optimization and applying it for the first time to graph search within our receding horizon planner. The competing resources of interest are penalized hierarchically during the search. Higher-ranked resources cause a robot to incur non-negative costs over the paths traveled, which are occasionally zero-valued. This is intended to capture problems in which a robot must manage resources such as risk of collision. These zero-valued costs leave freedom for tie-breaking with respect to lower-priority resources; at the bottom of the hierarchy is a strictly positive quantity consumed by the robot, such as distance traveled, energy expended, or time elapsed. We conduct experiments in both simulated and real-world environments to validate the proposed planner and demonstrate its capability for enabling ASV navigation in complex environments.
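The idea of hierarchical penalties can be illustrated with a small graph search in which each edge carries a tuple of costs compared lexicographically. The sketch below is a generic Dijkstra-style search, not the planner from the paper: the two-level hierarchy (risk first, then distance), the graph encoding, and the function name `lexicographic_shortest_path` are assumptions made for illustration.

```python
import heapq

def lexicographic_shortest_path(graph, start, goal):
    """Dijkstra-style search with lexicographically ordered tuple costs.

    graph maps node -> list of (neighbor, (risk, distance)). Risk is
    non-negative and may be zero; distance is strictly positive.
    Python compares tuples element by element, so lower risk always
    wins and distance only breaks ties.
    """
    zero = (0.0, 0.0)
    best = {start: zero}
    parent = {start: None}
    frontier = [(zero, start)]

    while frontier:
        cost, node = heapq.heappop(frontier)
        if cost > best[node]:
            continue  # stale queue entry
        if node == goal:
            break
        for neighbor, (risk, dist) in graph.get(node, []):
            new_cost = (cost[0] + risk, cost[1] + dist)
            if neighbor not in best or new_cost < best[neighbor]:
                best[neighbor] = new_cost
                parent[neighbor] = node
                heapq.heappush(frontier, (new_cost, neighbor))

    if goal not in best:
        return None, None
    path, node = [], goal
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1], best[goal]

# Hypothetical example: the route through C is shortest but risky,
# so the search prefers the shorter of the two zero-risk routes.
example_graph = {
    "A": [("B", (0, 5)), ("C", (1, 1)), ("E", (0, 3))],
    "B": [("D", (0, 5))],
    "C": [("D", (0, 1))],
    "E": [("D", (0, 4))],
}
example_path, example_cost = lexicographic_shortest_path(example_graph, "A", "D")
```

Because the lowest-priority cost is strictly positive, every edge strictly increases the tuple cost, which is what lets the usual Dijkstra argument carry over to the lexicographic ordering.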

LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping

Jul 14, 2020

Abstract:We propose a framework for tightly-coupled lidar inertial odometry via smoothing and mapping, LIO-SAM, that achieves highly accurate, real-time mobile robot trajectory estimation and map-building. LIO-SAM formulates lidar-inertial odometry atop a factor graph, allowing a multitude of relative and absolute measurements, including loop closures, to be incorporated from different sources as factors into the system. The estimated motion from inertial measurement unit (IMU) pre-integration de-skews point clouds and produces an initial guess for lidar odometry optimization. The obtained lidar odometry solution is used to estimate the bias of the IMU. To ensure high performance in real-time, we marginalize old lidar scans for pose optimization, rather than matching lidar scans to a global map. Scan-matching at a local scale instead of a global scale significantly improves the real-time performance of the system, as does the selective introduction of keyframes, and an efficient sliding window approach that registers a new keyframe to a fixed-size set of prior "sub-keyframes." The proposed method is extensively evaluated on datasets gathered from three platforms over various scales and environments.

Simulation-based Lidar Super-resolution for Ground Vehicles

Apr 10, 2020

Abstract:We propose a methodology for lidar super-resolution with ground vehicles driving on roadways, which relies completely on a driving simulator to enhance, via deep learning, the apparent resolution of a physical lidar. To increase the resolution of the point cloud captured by a sparse 3D lidar, we convert this problem from 3D Euclidean space into an image super-resolution problem in 2D image space, which is solved using a deep convolutional neural network. By projecting a point cloud onto a range image, we are able to efficiently enhance the resolution of such an image using a deep neural network. Typically, the training of a deep neural network requires vast real-world data. Our approach does not require any real-world data, as we train the network purely using computer-generated data. Thus, our method is in theory applicable to enhancing any type of 3D lidar. In a novel use of Monte-Carlo dropout, we remove the network's predictions with high uncertainty, so that our method produces high-accuracy point clouds comparable with the observations of a real high-resolution lidar. We present experimental results applying our method to several simulated and real-world datasets. We argue for the method's potential benefits in real-world robotics applications such as occupancy mapping and terrain modeling.
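The uncertainty-based filtering step can be sketched as follows, assuming the network has already been run several times with dropout active and each pass returned a predicted range image. This is only an illustration: the abstract does not specify the uncertainty measure, so the per-pixel standard deviation, the threshold `max_std`, and the function name `filter_uncertain_pixels` are assumptions.

```python
from statistics import mean, pstdev

def filter_uncertain_pixels(stochastic_predictions, max_std):
    """Fuse T stochastic range-image predictions and drop noisy pixels.

    stochastic_predictions: list of T images (each a list of rows of
    range values) from T forward passes with dropout kept active.
    A pixel keeps the mean of its T samples if their standard deviation
    is at most max_std; otherwise it is set to None (removed).
    """
    height = len(stochastic_predictions[0])
    width = len(stochastic_predictions[0][0])
    fused = []
    for r in range(height):
        row = []
        for c in range(width):
            samples = [pred[r][c] for pred in stochastic_predictions]
            if pstdev(samples) <= max_std:
                row.append(mean(samples))
            else:
                row.append(None)  # prediction judged too uncertain
        fused.append(row)
    return fused
```

Pixels left as `None` would simply produce no 3D point when the fused range image is converted back to a point cloud.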
