John McConnell

DRACo-SLAM2: Distributed Robust Acoustic Communication-efficient SLAM for Imaging Sonar Equipped Underwater Robot Teams with Object Graph Matching

Jul 31, 2025

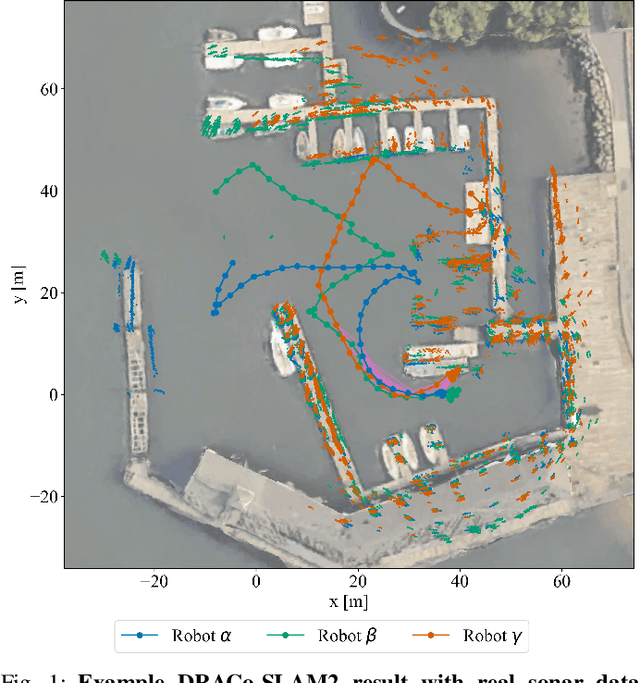

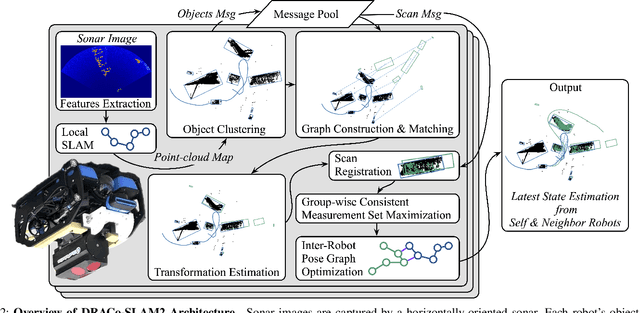

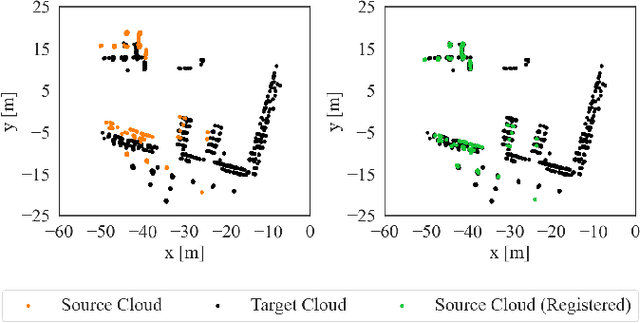

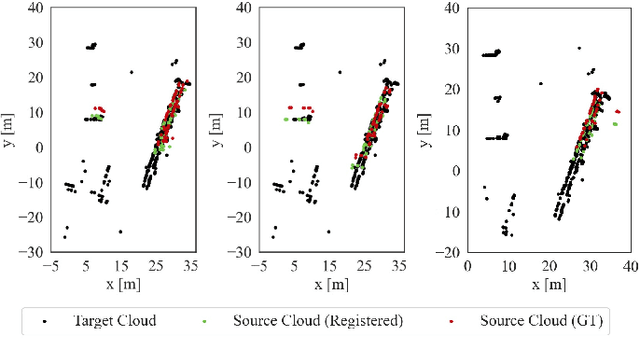

Abstract: We present DRACo-SLAM2, a distributed SLAM framework for underwater robot teams equipped with multibeam imaging sonar. This framework improves upon the original DRACo-SLAM by introducing a novel representation of sonar maps as object graphs and utilizing object graph matching to achieve time-efficient inter-robot loop closure detection without relying on prior geometric information. To better accommodate the needs and characteristics of underwater scan matching, we propose incremental Group-wise Consistent Measurement Set Maximization (GCM), a modification of Pairwise Consistent Measurement Set Maximization (PCM), which effectively handles scenarios where nearby inter-robot loop closures share similar registration errors. The proposed approach is validated through extensive comparative analyses on simulated and real-world datasets.

Large-Scale Dense 3D Mapping Using Submaps Derived From Orthogonal Imaging Sonars

Dec 04, 2024

Abstract: 3D situational awareness is critical for any autonomous system. However, when operating underwater, environmental conditions often dictate the use of acoustic sensors. These acoustic sensors are plagued by high noise and a lack of 3D information in sonar imagery, motivating the use of an orthogonal pair of imaging sonars to recover 3D perceptual data. Thus far, mapping systems in this area only use a subset of the available data at discrete timesteps and rely on object-level prior information in the environment to develop high-coverage 3D maps. Moreover, simple repeating objects must be present to build high-coverage maps. In this work, we propose a submap-based mapping system integrated with a simultaneous localization and mapping (SLAM) system to produce dense, 3D maps of complex unknown environments with varying densities of simple repeating objects. We compare this submapping approach to our previous works in this area, analyzing simple and highly complex environments, such as submerged aircraft. We analyze the tradeoffs between a submapping-based approach and our previous work leveraging simple repeating objects. We show where each method is well-motivated and where they fall short. Importantly, our proposed use of submapping achieves an advance in underwater situational awareness with wide aperture multi-beam imaging sonar, moving toward generalized large-scale dense 3D mapping capability for fully unknown complex environments.

Unlocking the Archives: Using Large Language Models to Transcribe Handwritten Historical Documents

Nov 02, 2024

Abstract: This study demonstrates that Large Language Models (LLMs) can transcribe historical handwritten documents with significantly higher accuracy than specialized Handwritten Text Recognition (HTR) software, while being faster and more cost-effective. We introduce an open-source software tool called Transcription Pearl that leverages these capabilities to automatically transcribe and correct batches of handwritten documents using commercially available multimodal LLMs from OpenAI, Anthropic, and Google. In tests on a diverse corpus of 18th- and 19th-century English-language handwritten documents, LLMs achieved Character Error Rates (CER) of 5.7 to 7% and Word Error Rates (WER) of 8.9 to 15.9%, improvements of 14% and 32% respectively over specialized state-of-the-art HTR software like Transkribus. Most significantly, when LLMs were then used to correct those transcriptions as well as texts generated by conventional HTR software, they achieved near-human levels of accuracy, that is, CERs as low as 1.8% and WERs of 3.5%. The LLMs also completed these tasks 50 times faster and at approximately 1/50th the cost of proprietary HTR programs. These results demonstrate that when LLMs are incorporated into software tools like Transcription Pearl, they provide an accessible, fast, and highly accurate method for mass transcription of historical handwritten documents, significantly streamlining the digitization process.
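For readers unfamiliar with the two metrics quoted above, the following is a minimal sketch of how CER and WER are commonly computed: the Levenshtein edit distance between a reference transcription and a hypothesis, divided by the reference length in characters or words. This is the standard textbook definition, not code from Transcription Pearl; the function names `edit_distance`, `cer`, and `wer` are illustrative, and the paper may apply text normalization that is not shown here.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or word lists)."""
    prev = list(range(len(hyp) + 1))  # distance from empty ref prefix
    for i, r in enumerate(ref, 1):
        curr = [i]  # deleting i reference items to reach an empty hypothesis
        for j, h in enumerate(hyp, 1):
            curr.append(min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (free if equal)
            ))
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character Error Rate: character edits per reference character."""
    return edit_distance(reference, hypothesis) / len(reference)


def wer(reference, hypothesis):
    """Word Error Rate: word edits per reference word (whitespace split)."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


if __name__ == "__main__":
    print(cer("kitten", "sitting"))
    print(wer("the quick brown fox", "the quick brown box"))
```

Because a single wrong character makes the whole word count as an error, WER is usually higher than CER on the same text, which matches the ranges reported in the abstract.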

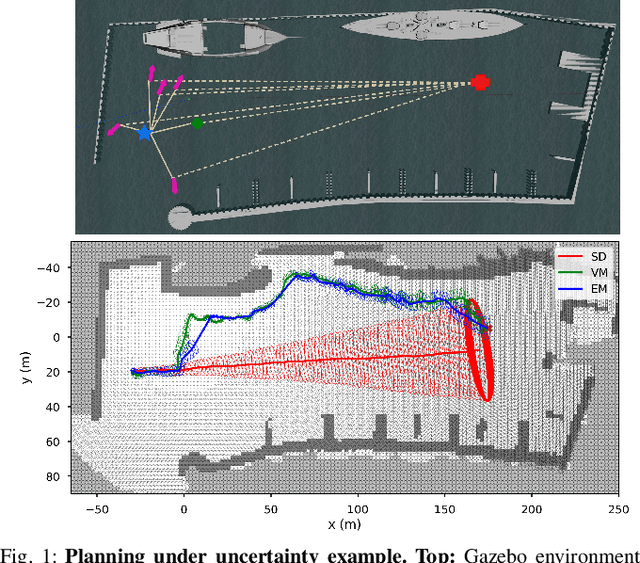

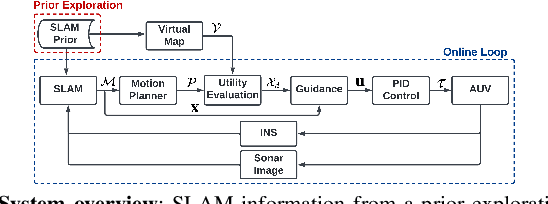

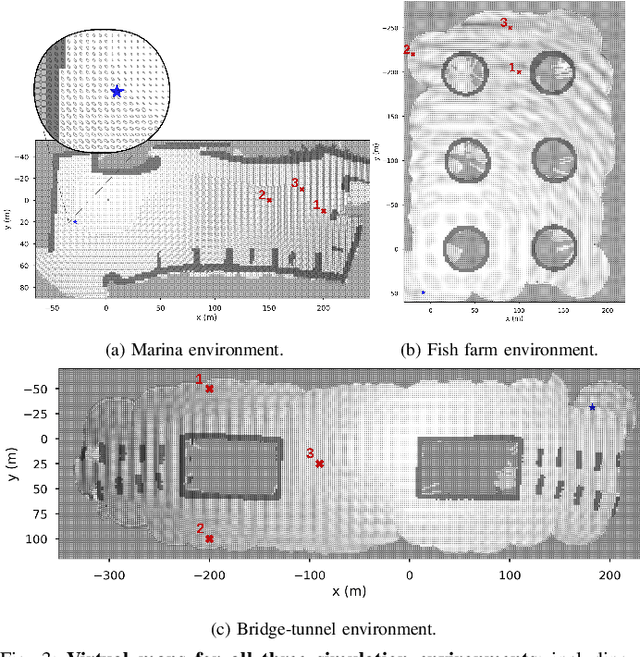

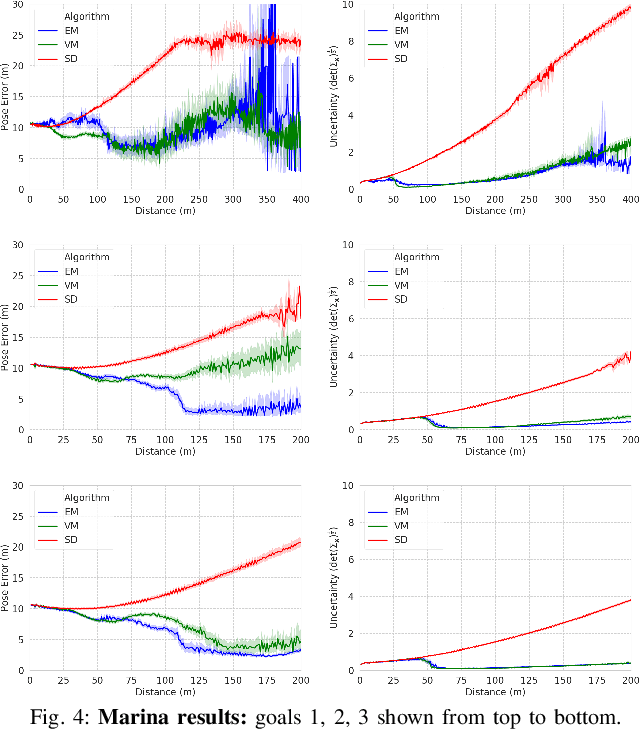

Real-Time Planning Under Uncertainty for AUVs Using Virtual Maps

Mar 07, 2024

Abstract: Reliable localization is an essential capability for marine robots navigating in GPS-denied environments. SLAM, commonly used to mitigate dead reckoning errors, still fails in feature-sparse environments or with limited-range sensors. Pose estimation can be improved by incorporating the uncertainty prediction of future poses into the planning process and choosing actions that reduce uncertainty. However, performing belief propagation is computationally costly, especially when operating in large-scale environments. This work proposes a computationally efficient planning-under-uncertainty framework suitable for large-scale, feature-sparse environments. Our strategy leverages SLAM graph and occupancy map data obtained from a prior exploration phase to create a virtual map, describing the uncertainty of each map cell using a multivariate Gaussian. The virtual map is then used as a cost map in the planning phase, and performing belief propagation at each step is avoided. A receding horizon planning strategy is implemented, managing a goal-reaching and uncertainty-reduction tradeoff. Simulation experiments in a realistic underwater environment validate this approach. Experimental comparisons against a full belief propagation approach and a standard shortest-distance approach are conducted.

Robust Unmanned Surface Vehicle Navigation with Distributional Reinforcement Learning

Jul 30, 2023

Abstract: Autonomous navigation of Unmanned Surface Vehicles (USV) in marine environments with current flows is challenging, and few prior works have addressed the sensor-based navigation problem in such environments under no prior knowledge of the current flow and obstacles. We propose a Distributional Reinforcement Learning (RL) based local path planner that learns return distributions which capture the uncertainty of action outcomes, and an adaptive algorithm that automatically tunes the level of sensitivity to the risk in the environment. The proposed planner achieves a more stable learning performance and converges to safer policies than a traditional RL-based planner. Computational experiments demonstrate that, compared to a traditional RL-based planner and classical local planning methods such as Artificial Potential Fields and the Bug Algorithm, the proposed planner is robust against environmental flows and is able to plan trajectories that are superior in safety, time, and energy consumption.

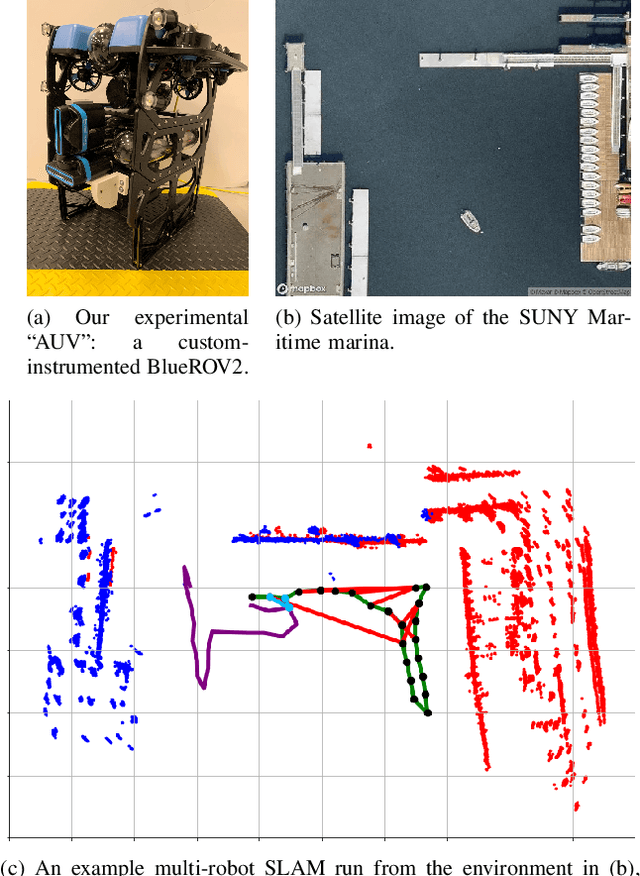

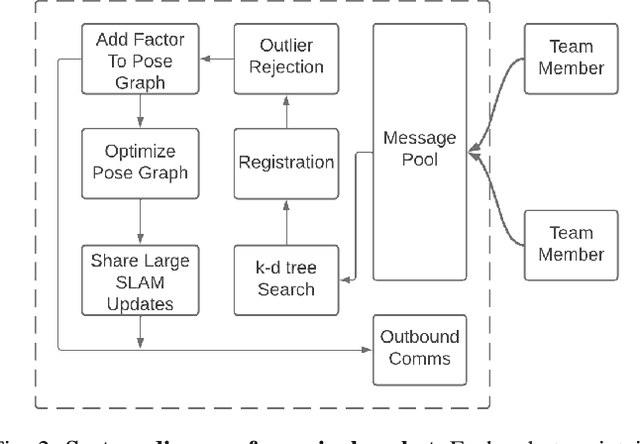

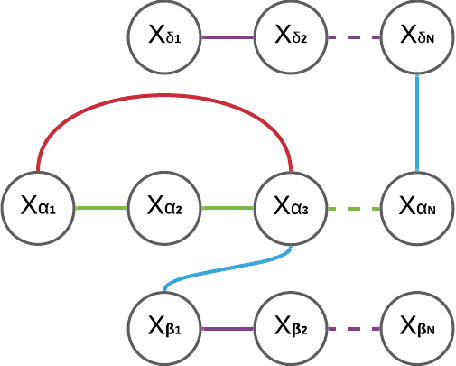

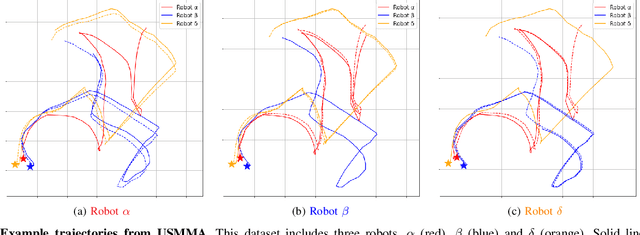

DRACo-SLAM: Distributed Robust Acoustic Communication-efficient SLAM for Imaging Sonar Equipped Underwater Robot Teams

Oct 03, 2022

Abstract: An essential task for a multi-robot system is generating a common understanding of the environment and relative poses between robots. Cooperative tasks can be executed only when a vehicle has knowledge of its own state and the states of the team members. However, this has primarily been achieved with direct rendezvous between underwater robots, via inter-robot ranging. We propose a novel distributed multi-robot simultaneous localization and mapping (SLAM) framework for underwater robots using imaging sonar-based perception. By passing only scene descriptors between robots, we do not need to pass raw sensor data unless there is a likelihood of inter-robot loop closure. We utilize pairwise consistent measurement set maximization (PCM), making our system robust to erroneous loop closures. The functionality of our system is demonstrated using two real-world datasets, one with three robots and another with two robots. We show that our system effectively estimates the trajectories of the multi-robot system and keeps the bandwidth requirements of inter-robot communication low. To our knowledge, this paper describes the first instance of multi-robot SLAM using real imaging sonar data (which we implement offline, using simulated communication). Code link: https://github.com/jake3991/DRACo-SLAM.
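To illustrate the idea behind PCM mentioned above, here is a simplified sketch in 2D, not the DRACo-SLAM implementation. Two inter-robot loop closures are treated as pairwise consistent when the loop they form with each robot's own odometry closes to near identity, and the largest mutually consistent subset is kept. Several simplifications are assumptions of this sketch: poses are `(x, y, theta)` tuples, the consistency test uses a plain norm with a hypothetical tolerance `tol` rather than a covariance-weighted distance, and the subset search is brute force rather than a maximum-clique solver. All function names are illustrative.

```python
import itertools
import math


def compose(a, b):
    """SE(2) composition a * b for poses given as (x, y, theta)."""
    c, s = math.cos(a[2]), math.sin(a[2])
    th = a[2] + b[2]
    return (a[0] + c * b[0] - s * b[1],
            a[1] + s * b[0] + c * b[1],
            math.atan2(math.sin(th), math.cos(th)))  # wrap angle


def inverse(a):
    """SE(2) inverse of a pose."""
    c, s = math.cos(a[2]), math.sin(a[2])
    return (-(c * a[0] + s * a[1]), s * a[0] - c * a[1], -a[2])


def between(a, b):
    """Relative pose taking a to b."""
    return compose(inverse(a), b)


def pairwise_consistent(m1, m2, traj_a, traj_b, tol):
    """Check that closures m1=(i, k, z_ik) and m2=(j, l, z_jl) agree."""
    i, k, z_ik = m1
    j, l, z_jl = m2
    a_ij = between(traj_a[i], traj_a[j])  # robot A odometry, i to j
    b_lk = between(traj_b[l], traj_b[k])  # robot B odometry, l to k
    loop = compose(compose(compose(inverse(z_ik), a_ij), z_jl), b_lk)
    return math.hypot(loop[0], loop[1]) + abs(loop[2]) < tol


def largest_consistent_set(measurements, traj_a, traj_b, tol=0.1):
    """Brute-force search for the largest mutually consistent subset."""
    n = len(measurements)
    for size in range(n, 0, -1):
        for subset in itertools.combinations(range(n), size):
            if all(pairwise_consistent(measurements[p], measurements[q],
                                       traj_a, traj_b, tol)
                   for p, q in itertools.combinations(subset, 2)):
                return list(subset)
    return []


# Synthetic example: each trajectory is expressed in its own robot's frame.
traj_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.1), (2.0, 0.5, 0.2)]
traj_b = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.3), (1.0, 2.0, 0.5)]
true_offset = (3.0, -1.0, 0.4)  # frame of robot B seen from frame of robot A
bad_offset = (8.0, -1.0, 0.4)   # wrong registration, shifted by 5 m


def make_closure(i, k, offset):
    """Closure from pose i of robot A to pose k of robot B."""
    return (i, k, compose(compose(inverse(traj_a[i]), offset), traj_b[k]))


measurements = [make_closure(0, 0, true_offset),
                make_closure(1, 1, true_offset),
                make_closure(2, 2, true_offset),
                make_closure(1, 2, bad_offset)]

if __name__ == "__main__":
    print(largest_consistent_set(measurements, traj_a, traj_b))
```

In this synthetic case the first three closures agree with one another and the fourth, built from a shifted offset, is rejected. The brute-force search is exponential in the number of closures, so it is only suitable for small illustrations like this one.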

Virtual Maps for Autonomous Exploration of Cluttered Underwater Environments

Feb 16, 2022

Abstract: We consider the problem of autonomous mobile robot exploration in an unknown environment, taking into account a robot's coverage rate, map uncertainty, and state estimation uncertainty. This paper presents a novel exploration framework for underwater robots operating in cluttered environments, built upon simultaneous localization and mapping (SLAM) with imaging sonar. The proposed system comprises path generation, place recognition forecasting, belief propagation and utility evaluation using a virtual map, which estimates the uncertainty associated with map cells throughout a robot's workspace. We evaluate the performance of this framework in simulated experiments, showing that our algorithm maintains a high coverage rate during exploration while also maintaining low mapping and localization error. The real-world applicability of our framework is also demonstrated on an underwater remotely operated vehicle (ROV) exploring a harbor environment.

Overhead Image Factors for Underwater Sonar-based SLAM

Feb 11, 2022

Abstract: Simultaneous localization and mapping (SLAM) is a critical capability for any autonomous underwater vehicle (AUV). However, robust, accurate state estimation is still a work in progress when using low-cost sensors. We propose enhancing a typical low-cost sensor package using widely available and often free prior information: overhead imagery. Given an AUV's sonar image and a partially overlapping, globally-referenced overhead image, we propose using a convolutional neural network (CNN) to generate a synthetic overhead image predicting the above-surface appearance of the sonar image contents. We then use this synthetic overhead image to register our observations to the provided global overhead image. Once registered, the transformation is introduced as a factor into a pose SLAM factor graph. We use a state-of-the-art simulation environment to perform validation over a series of benchmark trajectories and quantitatively show the improved accuracy of robot state estimation using the proposed approach. We also show qualitative outcomes from a real AUV field deployment. Video attachment: https://youtu.be/_uWljtp58ks

Predictive 3D Sonar Mapping of Underwater Environments via Object-specific Bayesian Inference

Apr 07, 2021

Abstract: Recent work has achieved dense 3D reconstruction with wide-aperture imaging sonar using a stereo pair of orthogonally oriented sonars. This allows each sonar to observe a spatial dimension that the other is missing, without requiring any prior assumptions about scene geometry. However, this is achieved only in a small region with overlapping fields-of-view, leaving large regions of sonar image observations with an unknown elevation angle. Our work aims to achieve large-scale 3D reconstruction more efficiently using this sensor arrangement. We propose dividing the world into semantic classes to exploit the presence of repeating structures in the subsea environment. We use a Bayesian inference framework to build an understanding of each object class's geometry when 3D information is available from the orthogonal sonar fusion system, and when the elevation angle of our returns is unknown, our framework is used to infer unknown 3D structure. We quantitatively validate our method in a simulation and use data collected from a real outdoor littoral environment to demonstrate the efficacy of our framework in the field. Video attachment: https://www.youtube.com/watch?v=WouCrY9eK4o&t=75s

Fusing Concurrent Orthogonal Wide-aperture Sonar Images for Dense Underwater 3D Reconstruction

Jul 20, 2020

Abstract: We propose a novel approach to handling the ambiguity in elevation angle associated with the observations of a forward-looking multi-beam imaging sonar, and the challenges it poses for performing an accurate 3D reconstruction. We utilize a pair of sonars with orthogonal axes of uncertainty to independently observe the same points in the environment from two different perspectives, and associate these observations. Using these concurrent observations, we can create a dense, fully defined point cloud at every time-step to aid in reconstructing the 3D geometry of underwater scenes. We will evaluate our method in the context of the current state of the art, for which strong assumptions on object geometry limit applicability to generalized 3D scenes. We will discuss results from laboratory tests that quantitatively benchmark our algorithm's reconstruction capabilities, and results from a real-world, tidal river basin which qualitatively demonstrate our ability to reconstruct a cluttered field of underwater objects.
