Paul Szenher

Large-Scale Dense 3D Mapping Using Submaps Derived From Orthogonal Imaging Sonars

Dec 04, 2024

Abstract:3D situational awareness is critical for any autonomous system. However, when operating underwater, environmental conditions often dictate the use of acoustic sensors. These acoustic sensors are plagued by high noise and a lack of 3D information in sonar imagery, motivating the use of an orthogonal pair of imaging sonars to recover 3D perceptual data. Thus far, mapping systems in this area only use a subset of the available data at discrete timesteps and rely on object-level prior information in the environment to develop high-coverage 3D maps. Moreover, simple repeating objects must be present to build high-coverage maps. In this work, we propose a submap-based mapping system integrated with a simultaneous localization and mapping (SLAM) system to produce dense, 3D maps of complex unknown environments with varying densities of simple repeating objects. We compare this submapping approach to our previous works in this area, analyzing simple and highly complex environments, such as submerged aircraft. We analyze the tradeoffs between a submapping-based approach and our previous work leveraging simple repeating objects. We show where each method is well-motivated and where they fall short. Importantly, our proposed use of submapping achieves an advance in underwater situational awareness with wide aperture multi-beam imaging sonar, moving toward generalized large-scale dense 3D mapping capability for fully unknown complex environments.

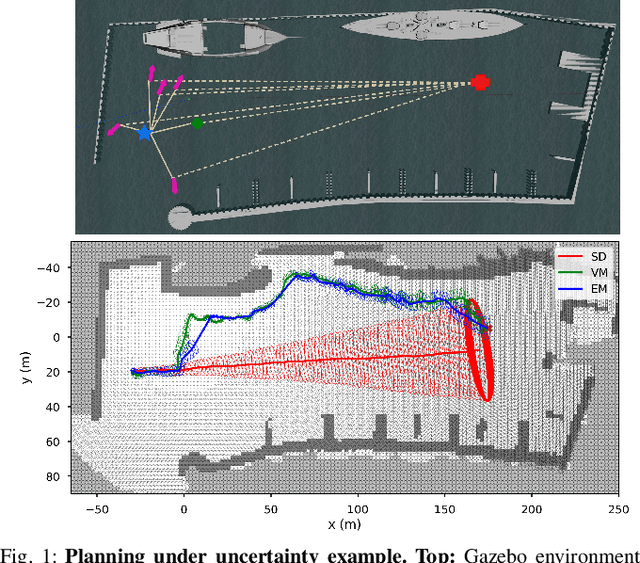

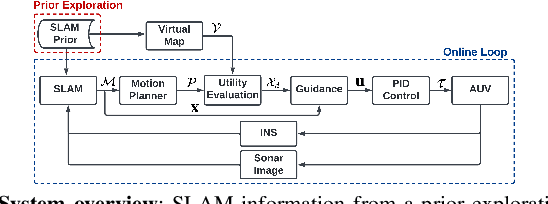

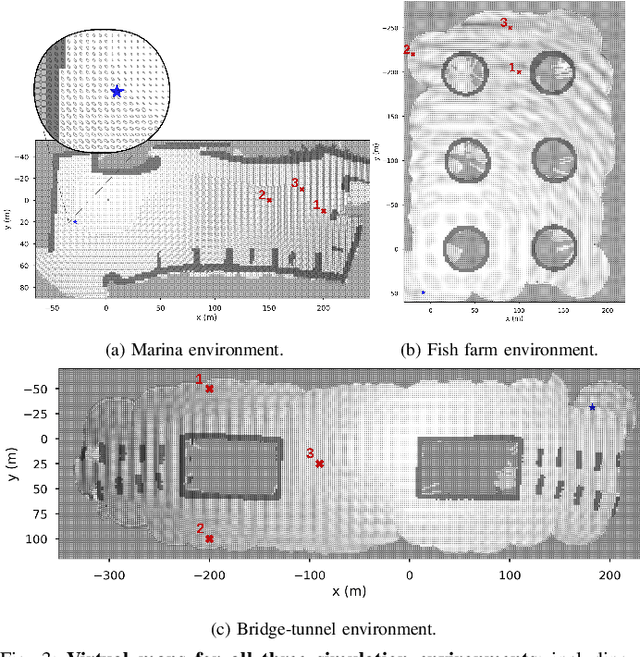

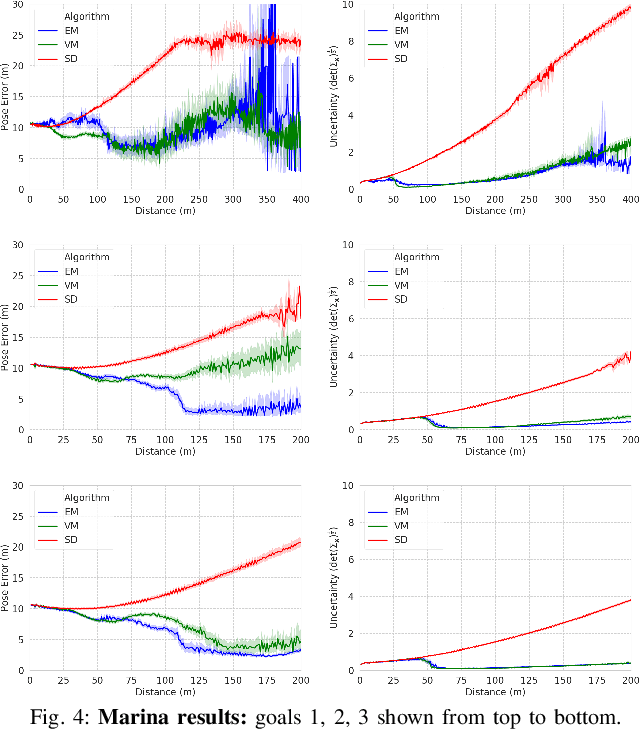

Real-Time Planning Under Uncertainty for AUVs Using Virtual Maps

Mar 07, 2024

Abstract:Reliable localization is an essential capability for marine robots navigating in GPS-denied environments. SLAM, commonly used to mitigate dead reckoning errors, still fails in feature-sparse environments or with limited-range sensors. Pose estimation can be improved by incorporating the uncertainty prediction of future poses into the planning process and choosing actions that reduce uncertainty. However, performing belief propagation is computationally costly, especially when operating in large-scale environments. This work proposes a computationally efficient planning under uncertainty framework suitable for large-scale, feature-sparse environments. Our strategy leverages SLAM graph and occupancy map data obtained from a prior exploration phase to create a virtual map, describing the uncertainty of each map cell using a multivariate Gaussian. The virtual map is then used as a cost map in the planning phase, and performing belief propagation at each step is avoided. A receding horizon planning strategy is implemented, managing a goal-reaching and uncertainty-reduction tradeoff. Simulation experiments in a realistic underwater environment validate this approach. Experimental comparisons against a full belief propagation approach and a standard shortest-distance approach are conducted.
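
To make the cost-map idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes each virtual-map cell stores a 2x2 covariance, that the trace of that covariance serves as the scalar uncertainty cost, and that a planner scores a handful of candidate paths by a weighted sum of remaining distance to the goal and mean uncertainty along the path. The names `cell_uncertainty`, `path_score`, `best_path`, and the `weight` parameter are all hypothetical.

```python
import numpy as np

def cell_uncertainty(covariances):
    """Reduce (H, W, 2, 2) per-cell covariances to an (H, W) scalar cost map.

    Assumption: the trace is used as the scalar summary; the abstract does
    not say which summary the authors use.
    """
    covariances = np.asarray(covariances, dtype=float)
    return np.trace(covariances, axis1=-2, axis2=-1)

def path_score(path, goal, cost_map, weight):
    """Remaining distance to the goal plus weighted mean uncertainty on the path."""
    path = np.asarray(path)
    distance_to_goal = np.linalg.norm(path[-1] - np.asarray(goal))
    mean_uncertainty = cost_map[path[:, 0], path[:, 1]].mean()
    return distance_to_goal + weight * mean_uncertainty

def best_path(candidates, goal, cost_map, weight):
    """Index of the lowest-scoring candidate path (one planning step)."""
    scores = [path_score(p, goal, cost_map, weight) for p in candidates]
    return int(np.argmin(scores))

# Toy 3x3 virtual map: low uncertainty everywhere except the centre cell.
covariances = np.tile(0.1 * np.eye(2), (3, 3, 1, 1))
covariances[1, 1] = 5.0 * np.eye(2)
cost_map = cell_uncertainty(covariances)

goal = (2, 2)
candidates = [
    [(0, 0), (0, 1), (0, 2)],  # stays in well-localized cells, stops short of the goal
    [(0, 0), (1, 1), (2, 2)],  # reaches the goal through the uncertain centre cell
]
print(best_path(candidates, goal, cost_map, weight=0.0))
print(best_path(candidates, goal, cost_map, weight=1.0))
```

Because the cost map is looked up rather than recomputed, no belief propagation happens inside the scoring loop. Raising `weight` shifts the choice from the goal-reaching path toward the low-uncertainty one.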

Mobile Manipulation Platform for Autonomous Indoor Inspections in Low-Clearance Areas

Sep 19, 2023

Abstract:Mobile manipulators have been used for inspection, maintenance and repair tasks over the years, but there are some key limitations. Stability concerns typically require mobile platforms to be large in order to handle far-reaching manipulators, or for the manipulators to have drastically reduced workspaces to fit onto smaller mobile platforms. Therefore, we propose a combination of two widely-used robots, the Clearpath Jackal unmanned ground vehicle and the Kinova Gen3 six degree-of-freedom manipulator. The Jackal has a small footprint and works well in low-clearance indoor environments. Extensive testing of localization, navigation and mapping using LiDAR sensors makes the Jackal a well-developed mobile platform suitable for mobile manipulation. The Gen3 has a long reach with reasonable power consumption for manipulation tasks. A wrist camera for RGB-D sensing and a customizable end effector interface make the Gen3 suitable for a myriad of manipulation tasks. Typically, these features would result in an unstable platform; however, with a few minor hardware and software modifications, we have produced a stable, high-performance mobile manipulation platform with significant mobility, reach, sensing, and maneuverability for indoor inspection tasks, without degradation of the component robots' individual capabilities. These assertions were investigated with hardware via semi-autonomous navigation to waypoints in a busy indoor environment, and high-precision self-alignment alongside planar structures for intervention tasks.

Robust Route Planning with Distributional Reinforcement Learning in a Stochastic Road Network Environment

Apr 19, 2023

Abstract:Route planning is essential to mobile robot navigation problems. In recent years, deep reinforcement learning (DRL) has been applied to learning optimal planning policies in stochastic environments without prior knowledge. However, existing works focus on learning policies that maximize the expected return, the performance of which can vary greatly when the level of stochasticity in the environment is high. In this work, we propose a distributional reinforcement learning based framework that learns return distributions which explicitly reflect environmental stochasticity. Policies based on the second-order stochastic dominance (SSD) relation can be used to make adjustable route decisions according to user preference on performance robustness. Our proposed method is evaluated in a simulated road network environment, and experimental results show that our method is able to plan the shortest routes that minimize stochasticity in travel time when robustness is preferred, while other state-of-the-art DRL methods are agnostic to environmental stochasticity.
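
As an illustration of the second-order stochastic dominance (SSD) relation mentioned above, here is a minimal sketch that tests SSD between two sets of sampled returns, where higher return is better. It is not the authors' code. It uses the standard characterization that A dominates B when E[(t - A)+] <= E[(t - B)+] for every threshold t, checked at the pooled sample values, which is sufficient for empirical distributions. The function names and the toy travel-time data are assumptions.

```python
import numpy as np

def expected_shortfall_below(samples, thresholds):
    """E[(t - X)+] for each threshold t, estimated from samples of X."""
    samples = np.asarray(samples, dtype=float)
    thresholds = np.asarray(thresholds, dtype=float)
    gaps = np.maximum(thresholds[:, None] - samples[None, :], 0.0)
    return gaps.mean(axis=1)

def ssd_dominates(returns_a, returns_b, tol=1e-12):
    """True if the empirical distribution of A second-order dominates B.

    Both shortfall curves are piecewise linear with kinks only at sample
    values, so comparing them on the pooled samples covers every threshold.
    """
    grid = np.union1d(returns_a, returns_b)
    shortfall_a = expected_shortfall_below(returns_a, grid)
    shortfall_b = expected_shortfall_below(returns_b, grid)
    return bool(np.all(shortfall_a <= shortfall_b + tol))

# Returns are negative travel times. Both routes average -10, but the
# second route is a mean-preserving spread of the first.
steady_route = [-10.0, -10.0, -10.0, -10.0]
variable_route = [-5.0, -15.0, -5.0, -15.0]

print(ssd_dominates(steady_route, variable_route))  # True
print(ssd_dominates(variable_route, steady_route))  # False
```

A policy that maximizes only the expected return cannot separate these two routes, since their means are equal. The SSD check prefers the steady route, which is the kind of robustness preference the abstract describes.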

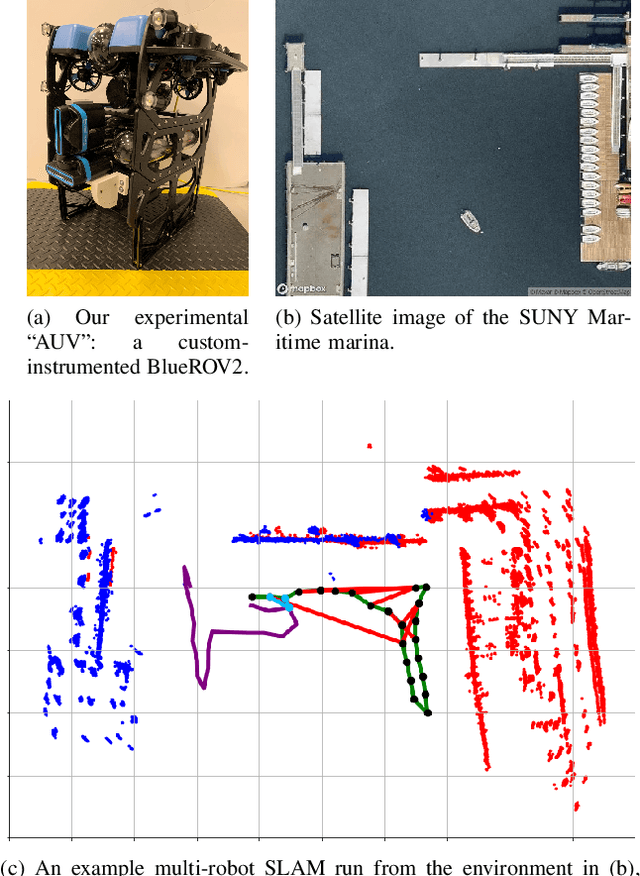

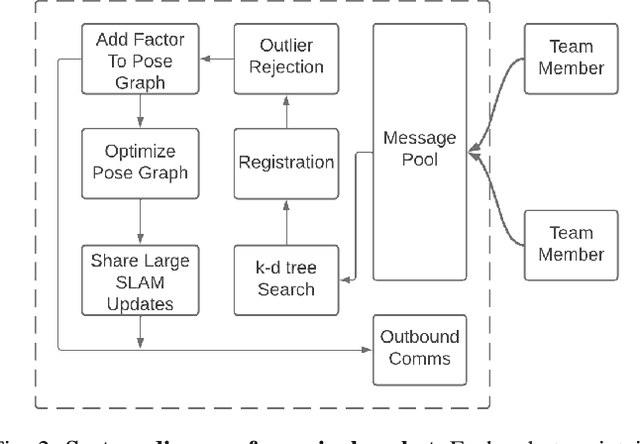

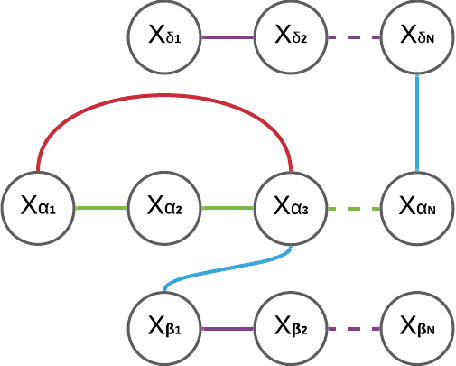

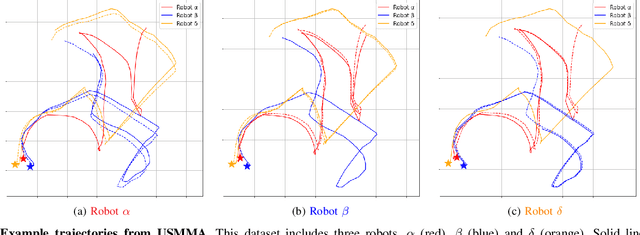

DRACo-SLAM: Distributed Robust Acoustic Communication-efficient SLAM for Imaging Sonar Equipped Underwater Robot Teams

Oct 03, 2022

Abstract:An essential task for a multi-robot system is generating a common understanding of the environment and relative poses between robots. Cooperative tasks can be executed only when a vehicle has knowledge of its own state and the states of the team members. However, this has primarily been achieved with direct rendezvous between underwater robots, via inter-robot ranging. We propose a novel distributed multi-robot simultaneous localization and mapping (SLAM) framework for underwater robots using imaging sonar-based perception. By passing only scene descriptors between robots, we do not need to pass raw sensor data unless there is a likelihood of inter-robot loop closure. We utilize pairwise consistent measurement set maximization (PCM), making our system robust to erroneous loop closures. The functionality of our system is demonstrated using two real-world datasets, one with three robots and another with two robots. We show that our system effectively estimates the trajectories of the multi-robot system and keeps the bandwidth requirements of inter-robot communication low. To our knowledge, this paper describes the first instance of multi-robot SLAM using real imaging sonar data (which we implement offline, using simulated communication). Code link: https://github.com/jake3991/DRACo-SLAM.

Zero-Shot Reinforcement Learning on Graphs for Autonomous Exploration Under Uncertainty

May 11, 2021

Abstract:This paper studies the problem of autonomous exploration under localization uncertainty for a mobile robot with 3D range sensing. We present a framework for self-learning a high-performance exploration policy in a single simulation environment, and transferring it to other environments, which may be physical or virtual. Recent work in transfer learning achieves encouraging performance by domain adaptation and domain randomization to expose an agent to scenarios that fill the inherent gaps in sim2sim and sim2real approaches. However, it is inefficient to train an agent in environments with randomized conditions to learn the important features of its current state. An agent can use domain knowledge provided by human experts to learn efficiently. We propose a novel approach that uses graph neural networks in conjunction with deep reinforcement learning, enabling decision-making over graphs containing relevant exploration information provided by human experts to predict a robot's optimal sensing action in belief space. The policy, which is trained only in a single simulation environment, offers a real-time, scalable, and transferable decision-making strategy, resulting in zero-shot transfer to other simulation environments and even real-world environments.

Simulation-based Lidar Super-resolution for Ground Vehicles

Apr 10, 2020

Abstract:We propose a methodology for lidar super-resolution with ground vehicles driving on roadways, which relies completely on a driving simulator to enhance, via deep learning, the apparent resolution of a physical lidar. To increase the resolution of the point cloud captured by a sparse 3D lidar, we convert this problem from 3D Euclidean space into an image super-resolution problem in 2D image space, which is solved using a deep convolutional neural network. By projecting a point cloud onto a range image, we are able to efficiently enhance the resolution of such an image using a deep neural network. Typically, the training of a deep neural network requires vast real-world data. Our approach does not require any real-world data, as we train the network purely using computer-generated data. Thus, our method is, in principle, applicable to the enhancement of any type of 3D lidar. Through a novel application of Monte-Carlo dropout in the network, we remove the predictions with high uncertainty, so that our method produces high-accuracy point clouds comparable with the observations of a real high-resolution lidar. We present experimental results applying our method to several simulated and real-world datasets. We argue for the method's potential benefits in real-world robotics applications such as occupancy mapping and terrain modeling.
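
Two steps in this abstract lend themselves to a short sketch: projecting a point cloud onto a range image, and discarding high-uncertainty predictions from repeated stochastic forward passes. The code below is a minimal NumPy illustration under stated assumptions, not the authors' pipeline. The network itself is omitted, and a stack of arrays stands in for its Monte-Carlo dropout outputs. The function names, the field-of-view parameters, the use of 0 to mark empty pixels, and the standard-deviation threshold are all hypothetical.

```python
import numpy as np

def point_cloud_to_range_image(points, n_rows, n_cols, elev_min, elev_max):
    """Project an (N, 3) point cloud onto an (n_rows, n_cols) range image.

    Rows index elevation and columns index azimuth. Empty pixels stay 0.
    If several points fall in one pixel, only one of them is kept.
    """
    points = np.asarray(points, dtype=float)
    ranges = np.linalg.norm(points, axis=1)
    valid = ranges > 0
    points, ranges = points[valid], ranges[valid]

    azimuth = np.arctan2(points[:, 1], points[:, 0])   # in [-pi, pi]
    elevation = np.arcsin(points[:, 2] / ranges)       # in [-pi/2, pi/2]

    cols = np.floor((azimuth + np.pi) / (2 * np.pi) * n_cols).astype(int) % n_cols
    rows = np.floor((elevation - elev_min) / (elev_max - elev_min) * n_rows).astype(int)

    in_fov = (rows >= 0) & (rows < n_rows)  # drop points outside the vertical FOV
    image = np.zeros((n_rows, n_cols))
    image[rows[in_fov], cols[in_fov]] = ranges[in_fov]
    return image

def filter_uncertain_pixels(mc_predictions, max_std):
    """Average T stochastic predictions and zero out pixels whose std is too high.

    mc_predictions has shape (T, H, W); each slice stands in for one forward
    pass of a network with dropout left active at inference time.
    """
    mc_predictions = np.asarray(mc_predictions, dtype=float)
    mean = mc_predictions.mean(axis=0)
    std = mc_predictions.std(axis=0)
    return np.where(std <= max_std, mean, 0.0)

# One point inside the vertical field of view, one straight overhead (outside it).
cloud = np.array([[1.0, 0.1, 0.1], [0.0, 0.0, 1.0]])
image = point_cloud_to_range_image(cloud, n_rows=4, n_cols=8,
                                   elev_min=-np.pi / 4, elev_max=np.pi / 4)
print(np.count_nonzero(image))

# Three stand-in "dropout passes": the first pixel is stable, the second is not.
passes = np.array([[[1.0, 0.0]], [[1.0, 5.0]], [[1.0, 10.0]]])
print(filter_uncertain_pixels(passes, max_std=0.5))
```

Working in the 2D range image is what lets standard image super-resolution networks be reused. The uncertainty filter trades point density for accuracy, since removed pixels simply produce no 3D point.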
