Thomas A. Berrueta

Maximum Diffusion Reinforcement Learning

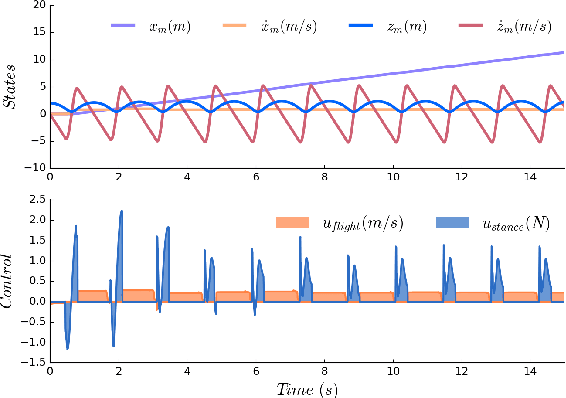

Sep 28, 2023

Abstract: The assumption that data are independent and identically distributed underpins all machine learning. When data are collected sequentially from agent experiences this assumption does not generally hold, as in reinforcement learning. Here, we derive a method that overcomes these limitations by exploiting the statistical mechanics of ergodic processes, which we term maximum diffusion reinforcement learning. By decorrelating agent experiences, our approach provably enables agents to learn continually in single-shot deployments regardless of how they are initialized. Moreover, we prove our approach generalizes well-known maximum entropy techniques, and show that it robustly exceeds state-of-the-art performance across popular benchmarks. Our results at the nexus of physics, learning, and control pave the way towards more transparent and reliable decision-making in reinforcement learning agents, such as locomoting robots and self-driving cars.

Active Learning in Robotics: A Review of Control Principles

Jun 25, 2021

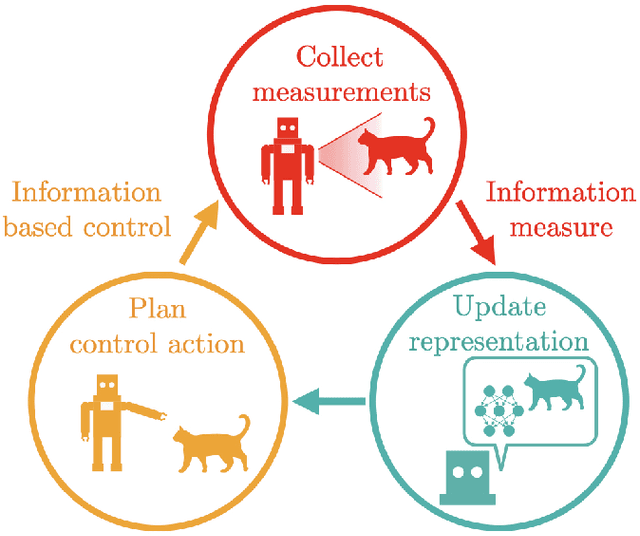

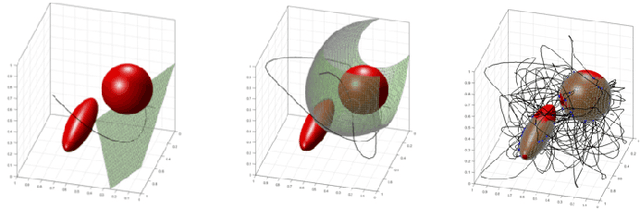

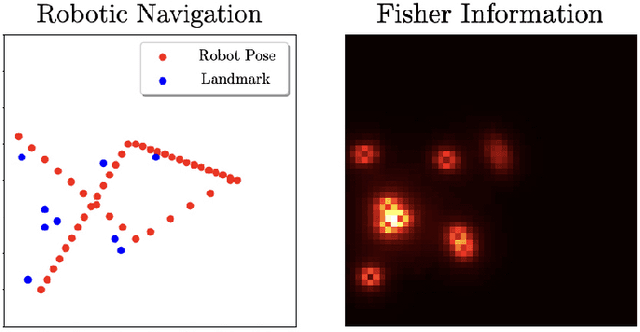

Abstract: Active learning is a decision-making process. In both abstract and physical settings, active learning demands both analysis and action. This is a review of active learning in robotics, focusing on methods amenable to the demands of embodied learning systems. Robots must be able to learn efficiently and flexibly through continuous online deployment. This poses a distinct set of control-oriented challenges: one must choose suitable measures as objectives, synthesize real-time control, and produce analyses that guarantee performance and safety with limited knowledge of the environment or robot itself. In this work, we survey the fundamental components of robotic active learning systems. We discuss classes of learning tasks that robots typically encounter, measures with which they gauge the information content of observations, and algorithms for generating action plans. Moreover, we provide a variety of examples, from environmental mapping to nonparametric shape estimation, that highlight the qualitative differences between learning tasks, information measures, and control techniques. We conclude with a discussion of control-oriented open challenges, including safety-constrained learning and distributed learning.

* 25 pages

Low rattling: A predictive principle for self-organization in active collectives

Jan 03, 2021

Abstract: Self-organization is frequently observed in active collectives, from ant rafts to molecular motor assemblies. General principles describing self-organization away from equilibrium have been challenging to identify. We offer a unifying framework that models the behavior of complex systems as largely random, while capturing their configuration-dependent response to external forcing. This allows derivation of a Boltzmann-like principle for understanding and manipulating driven self-organization. We validate our predictions experimentally in shape-changing robotic active matter, and outline a methodology for controlling collective behavior. Our findings highlight how emergent order depends sensitively on the matching between external patterns of forcing and internal dynamical response properties, pointing towards future approaches for design and control of active particle mixtures and metamaterials.

Dynamical System Segmentation for Information Measures in Motion

Dec 09, 2020

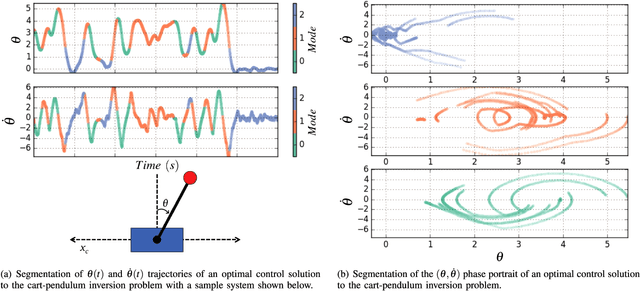

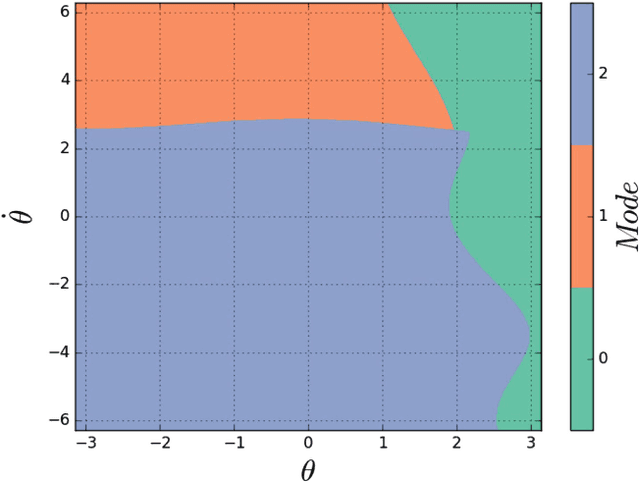

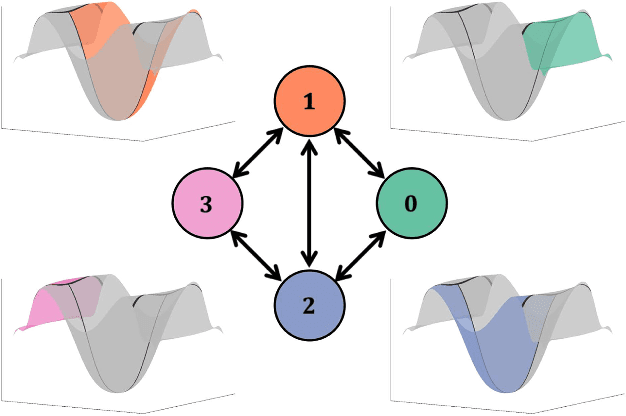

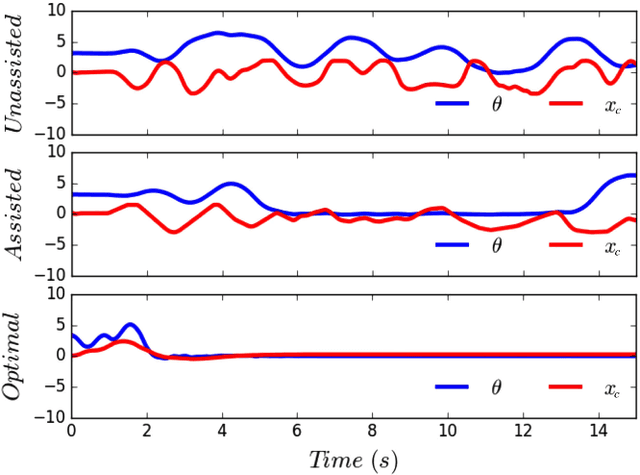

Abstract: Motions carry information about the underlying task being executed. Previous work in human motion analysis suggests that complex motions may result from the composition of fundamental submovements called movemes. The existence of finite structure in motion motivates information-theoretic approaches to motion analysis and robotic assistance. We define task embodiment as the amount of task information encoded in an agent's motions. By decoding task-specific information embedded in motion, we can use task embodiment to create detailed performance assessments. We extract an alphabet of behaviors comprising a motion without a priori knowledge using a novel algorithm, which we call dynamical system segmentation. For a given task, we specify an optimal agent, and compute an alphabet of behaviors representative of the task. We identify these behaviors in data from agent executions, and compare their relative frequencies against those of the optimal agent using the Kullback-Leibler divergence. We validate this approach using a dataset of human subjects (n=53) performing a dynamic task, and under this measure find that individuals receiving assistance better embody the task. Moreover, we find that task embodiment is a better predictor of assistance than integrated mean-squared-error.

* 8 pages
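
The comparison step described in the abstract above (relative frequencies of behaviors scored against an optimal agent with the Kullback-Leibler divergence) can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the additive smoothing, the example label sequences, and the choice of direction, D_KL(agent || optimal), are all assumptions made for illustration. The segmentation that produces the behavior labels is not shown.

```python
import math
from collections import Counter


def behavior_frequencies(labels, alphabet, smoothing=1.0):
    """Relative frequency of each behavior symbol in a label sequence.

    Assumes every label belongs to `alphabet`. Additive smoothing (an
    assumption, not from the abstract) keeps every probability strictly
    positive so the divergence below stays finite.
    """
    counts = Counter(labels)
    total = len(labels) + smoothing * len(alphabet)
    return {s: (counts[s] + smoothing) / total for s in alphabet}


def kl_divergence(p, q):
    """D_KL(p || q) in nats for two distributions over the same symbols."""
    return sum(p[s] * math.log(p[s] / q[s]) for s in p if p[s] > 0.0)


if __name__ == "__main__":
    # Hypothetical behavior labels; real labels would come from segmentation.
    alphabet = ["A", "B", "C"]
    optimal = behavior_frequencies(list("AABAABAAC"), alphabet)
    subject = behavior_frequencies(list("ABCCBCACB"), alphabet)
    # A smaller divergence means the subject's behavior mix is closer
    # to that of the optimal agent.
    print(kl_divergence(subject, optimal))
```

Because the divergence is asymmetric, swapping the arguments generally gives a different score, so the direction should be fixed once and used consistently across subjects.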

Information Requirements of Collision-Based Micromanipulation

Jul 17, 2020

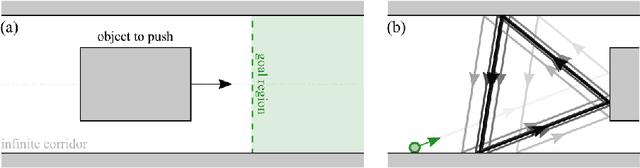

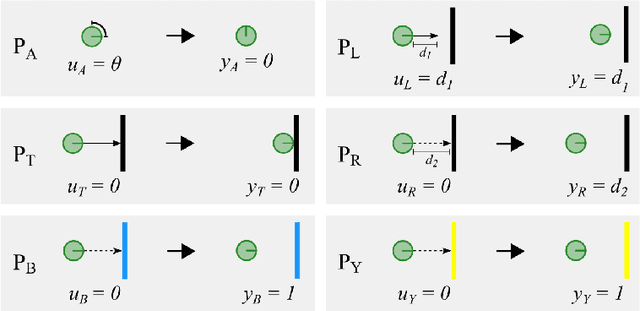

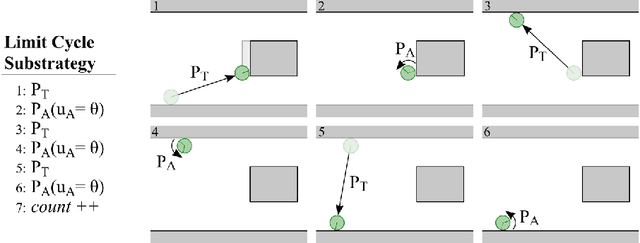

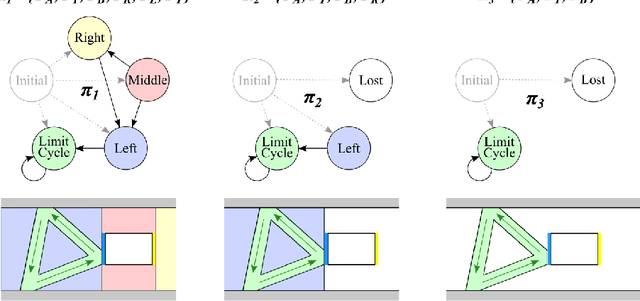

Abstract: We present a task-centered formal analysis of the relative power of several robot designs, inspired by the unique properties and constraints of micro-scale robotic systems. Our task of interest is object manipulation because it is a fundamental prerequisite for more complex applications such as micro-scale assembly or cell manipulation. Motivated by the difficulty in observing and controlling agents at the micro-scale, we focus on the design of boundary interactions: the robot's motion strategy when it collides with objects or the environment boundary, otherwise known as a bounce rule. We present minimal conditions on the sensing, memory, and actuation requirements of periodic "bouncing" robot trajectories that move an object in a desired direction through the incidental forces arising from robot-object collisions. Using an information space framework and a hierarchical controller, we compare several robot designs, emphasizing the information requirements of goal completion under different initial conditions, as well as what is required to recognize irreparable task failure. Finally, we present a physically-motivated model of boundary interactions, and analyze the robustness and dynamical properties of resulting trajectories.

Data-Driven Gait Segmentation for Walking Assistance in a Lower-Limb Assistive Device

Feb 28, 2019

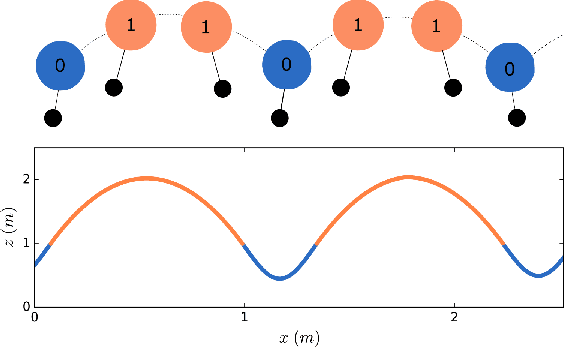

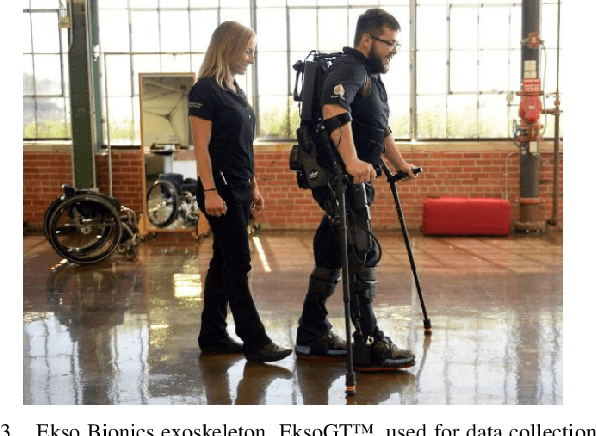

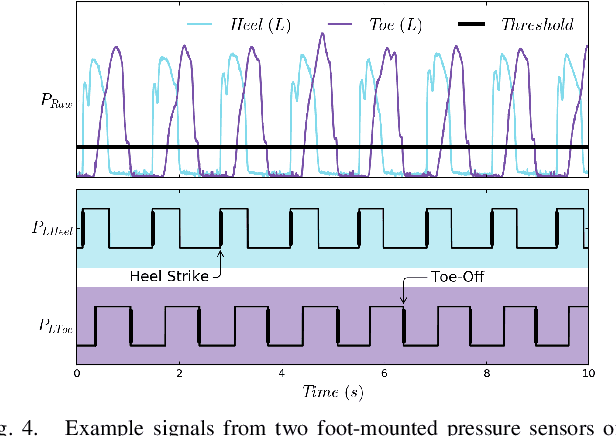

Abstract: Hybrid systems, such as bipedal walkers, are challenging to control because of discontinuities in their nonlinear dynamics. Little can be predicted about the systems' evolution without modeling the guard conditions that govern transitions between hybrid modes, so even systems with reliable state sensing can be difficult to control. We propose an algorithm that allows for determining the hybrid mode of a system in real time using data-driven analysis. The algorithm is used with data-driven dynamics identification to enable model predictive control based entirely on data. Two examples are used: a simulated hopper and experimental data from a bipedal walker. In the context of the first example, we are able to closely approximate the dynamics of a hybrid SLIP model and then successfully use them for control in simulation. In the second example, we demonstrate gait partitioning of human walking data, accurately differentiating between stance and swing, as well as selected subphases of swing. We identify contact events, such as heel strike and toe-off, without a contact sensor using only kinematics data from the knee and hip joints, which could be particularly useful in providing online assistance during walking. Our algorithm does not assume a predefined gait structure or gait phase transitions, lending itself to segmentation of both healthy and pathological gaits. With this flexibility, impairment-specific rehabilitation strategies or assistance could be designed.

* 7 pages
