Kathleen Fitzsimons

Ergodic imitation: Learning from what to do and what not to do

Mar 31, 2021

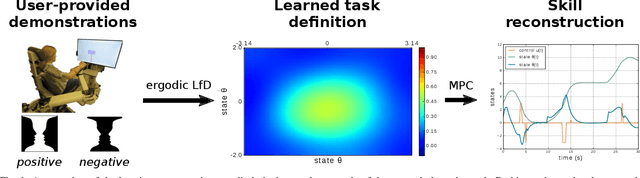

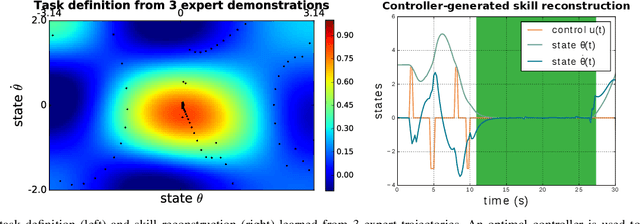

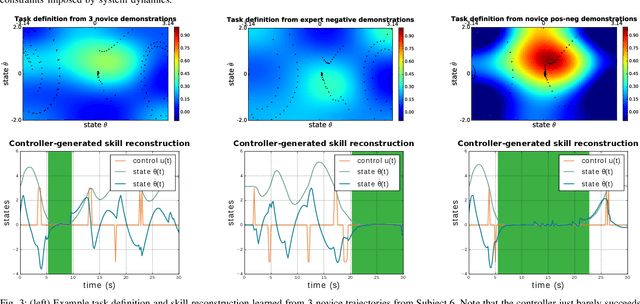

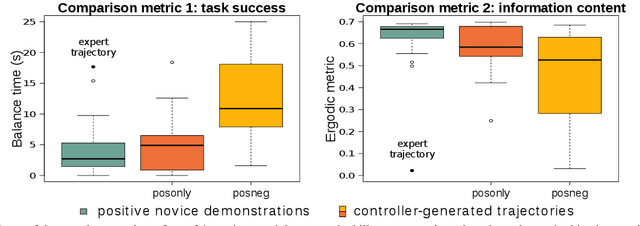

Abstract: With growing access to versatile robotics, it is beneficial for end users to be able to teach robots tasks without needing to code a control policy. One possibility is to teach the robot through successful task executions. However, near-optimal demonstrations of a task can be difficult to provide, and even successful demonstrations can fail to capture task aspects key to robust skill replication. Here, we propose a learning from demonstration (LfD) approach that enables learning of robust task definitions without the need for near-optimal demonstrations. We present a novel algorithmic framework for learning tasks based on the ergodic metric, a measure of information content in motion. Moreover, we make use of negative demonstrations (demonstrations of what not to do) and show that they can help compensate for imperfect demonstrations, reduce the number of demonstrations needed, and highlight crucial task elements, improving robot performance. In a proof-of-concept example of cart-pole inversion, we show that negative demonstrations alone can be sufficient to successfully learn and recreate a skill. Through a human subject study with 24 participants, we show that consistently more information about a task can be captured from combined positive and negative (posneg) demonstrations than from the same number of positive-only demonstrations. Finally, we demonstrate our learning approach on simulated target-reaching and table-cleaning tasks with a 7-DoF Franka arm. Our results point towards a future with robust, data-efficient LfD for novice users.
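To make "ergodic metric" concrete, here is a minimal sketch of the widely used Fourier-based form of the metric (Mathew and Mezić), restricted to one spatial dimension for brevity. It compares the time-averaged basis coefficients of a trajectory with those of a target density, weighting low frequencies more heavily. The function names, the cosine basis on [0, L], the number of coefficients `K`, and the quadrature are illustrative assumptions; how the paper builds the target from positive and negative demonstrations is not shown here.

```python
import numpy as np

def cosine_basis(k, x, L):
    """Normalized cosine basis function F_k on the 1D domain [0, L]."""
    # h_k makes each F_k unit-norm in L2([0, L]).
    h_k = np.sqrt(L) if k == 0 else np.sqrt(L / 2.0)
    return np.cos(k * np.pi * x / L) / h_k

def trajectory_coefficients(traj, K, L):
    """c_k: time average of F_k along a trajectory sampled at uniform time steps."""
    return np.array([cosine_basis(k, traj, L).mean() for k in range(K)])

def distribution_coefficients(pdf, K, L, n_grid=1000):
    """phi_k: projection of the target density onto F_k (midpoint-rule quadrature)."""
    dx = L / n_grid
    x = (np.arange(n_grid) + 0.5) * dx
    p = pdf(x)
    p = p / (p.sum() * dx)  # normalize so the density integrates to 1
    return np.array([(p * cosine_basis(k, x, L)).sum() * dx for k in range(K)])

def ergodic_metric(traj, pdf, K=20, L=1.0):
    """Weighted squared distance between trajectory and target Fourier coefficients."""
    c = trajectory_coefficients(np.asarray(traj, dtype=float), K, L)
    phi = distribution_coefficients(pdf, K, L)
    n_dims = 1
    # Sobolev-type weights (1 + |k|^2)^(-(n+1)/2) emphasize large-scale mismatch.
    lam = (1.0 + np.arange(K) ** 2) ** (-(n_dims + 1) / 2.0)
    return float(np.sum(lam * (c - phi) ** 2))
```

With a uniform target on [0, 1], a trajectory that sweeps the whole interval scores lower than one that lingers at x = 0.1, which is the sense in which a lower metric means the motion "covers" the target distribution better.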

* Kalinowska and Prabhakar contributed equally to this work

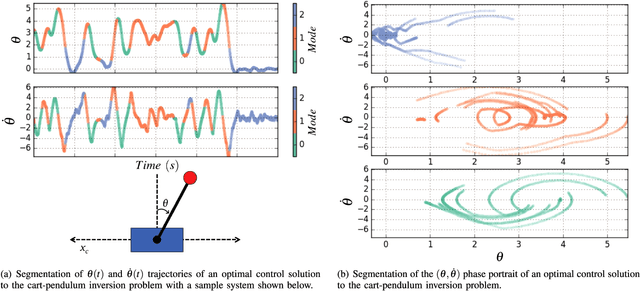

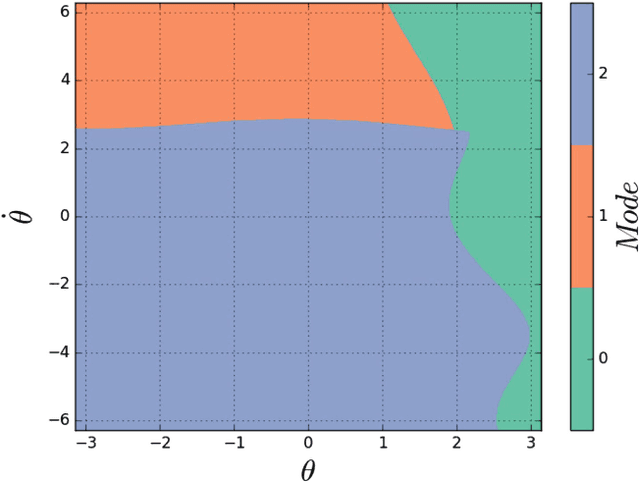

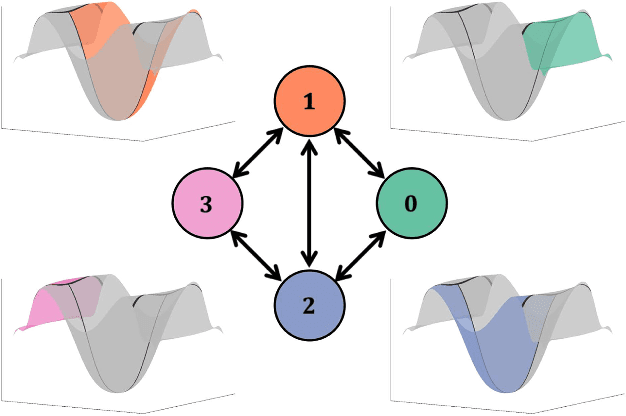

Dynamical System Segmentation for Information Measures in Motion

Dec 09, 2020

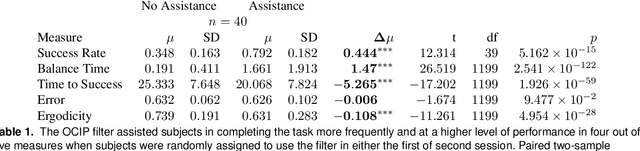

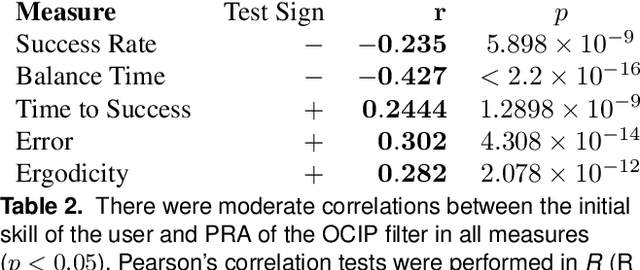

Abstract: Motions carry information about the underlying task being executed. Previous work in human motion analysis suggests that complex motions may result from the composition of fundamental submovements called movemes. The existence of finite structure in motion motivates information-theoretic approaches to motion analysis and robotic assistance. We define task embodiment as the amount of task information encoded in an agent's motions. By decoding task-specific information embedded in motion, we can use task embodiment to create detailed performance assessments. Using a novel algorithm, which we call dynamical system segmentation, we extract an alphabet of behaviors that make up a motion without *a priori* knowledge. For a given task, we specify an optimal agent and compute an alphabet of behaviors representative of the task. We identify these behaviors in data from agent executions and compare their relative frequencies against those of the optimal agent using the Kullback-Leibler divergence. We validate this approach using a dataset of human subjects (n=53) performing a dynamic task and, under this measure, find that individuals receiving assistance better embody the task. Moreover, we find that task embodiment is a better predictor of assistance than integrated mean-squared error.
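The comparison step (relative behavior frequencies scored with the Kullback-Leibler divergence) can be sketched as follows. This assumes each execution has already been segmented and labeled with discrete behavior symbols; dynamical system segmentation itself is not reproduced. The helper `embodiment_gap`, the direction D_KL(agent || optimal), the additive smoothing, and the example labels are all hypothetical choices for illustration.

```python
import math
from collections import Counter

def behavior_frequencies(labels, alphabet, eps=1e-6):
    """Relative frequency of each behavior symbol, with small additive smoothing
    so that a symbol unseen in one execution does not make the divergence infinite."""
    counts = Counter(labels)
    total = len(labels) + eps * len(alphabet)
    return {a: (counts[a] + eps) / total for a in alphabet}

def kl_divergence(p, q):
    """D_KL(p || q) in nats for two distributions over the same alphabet."""
    return sum(p[a] * math.log(p[a] / q[a]) for a in p)

def embodiment_gap(agent_labels, optimal_labels):
    """Hypothetical helper: divergence of an agent's behavior frequencies from the
    optimal agent's. Lower values mean the agent's behavior mix is closer to optimal."""
    alphabet = sorted(set(agent_labels) | set(optimal_labels))
    p = behavior_frequencies(agent_labels, alphabet)
    q = behavior_frequencies(optimal_labels, alphabet)
    return kl_divergence(p, q)

# Hypothetical behavior labels for a swing-up-and-balance style task.
optimal = ["swing", "swing", "balance", "balance", "balance"]
agent = ["swing", "swing", "swing", "swing", "balance"]
print(embodiment_gap(optimal, optimal))    # 0.0
print(embodiment_gap(agent, optimal) > 0)  # True
```

KL divergence is asymmetric, so the choice of which distribution is the reference matters; the abstract does not state it, so treat the direction above as an assumption.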

* 8 pages

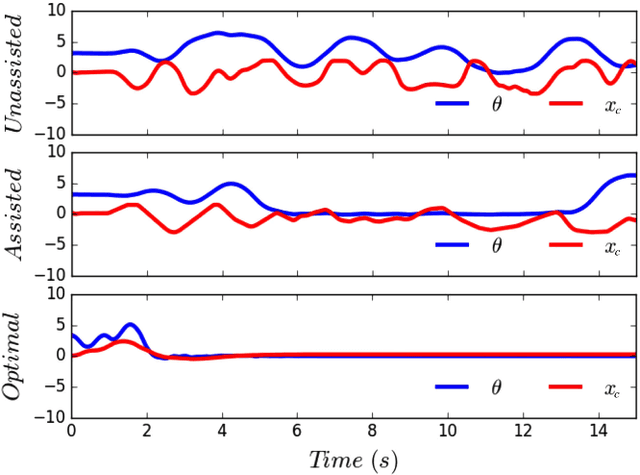

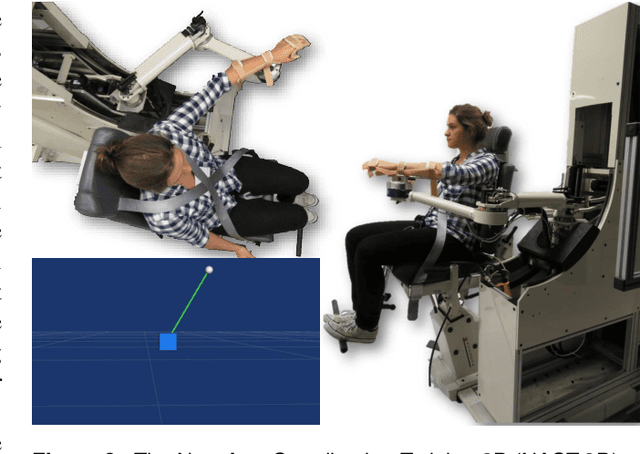

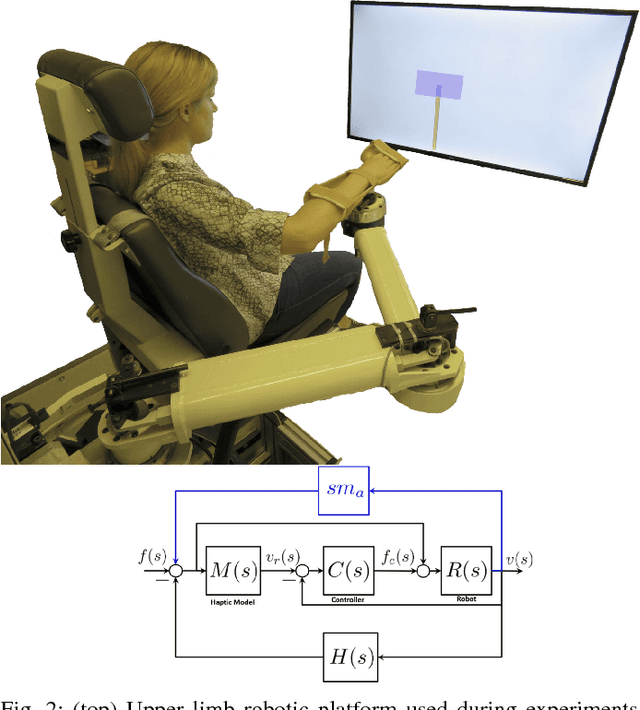

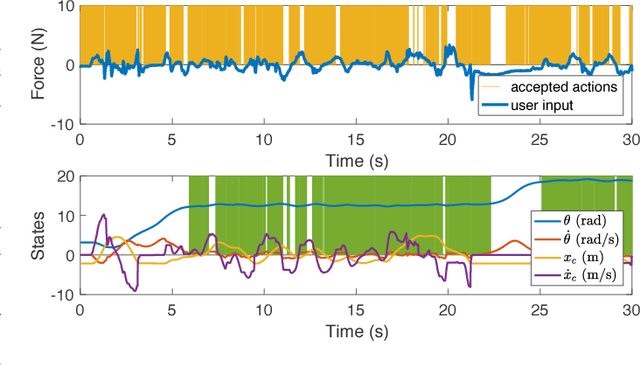

Task-Based Hybrid Shared Control for Training Through Forceful Interaction

Nov 18, 2019

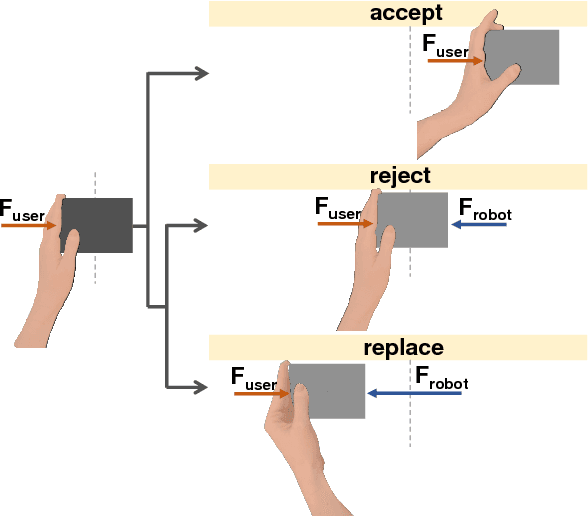

Abstract: Although robotic platforms can provide both consistent practice and objective assessments of users over the course of their training, there are relatively few instances where physical human-robot interaction has been significantly more effective than unassisted practice or human-mediated training. This paper describes a hybrid shared control robot that enhances task learning through kinesthetic feedback. The assistance assesses user actions using a task-specific evaluation criterion and selectively accepts or rejects them at each time instant. Through two human subject studies (total n=68), we show that this hybrid approach of switching between full transparency and full rejection of user inputs leads to increased skill acquisition and short-term retention compared to unassisted practice. Moreover, we show that the shared control paradigm exhibits features previously shown to promote successful training. It avoids user passivity by only rejecting user actions and allowing failure at the task. It improves performance during assistance, providing meaningful task-specific feedback. It is sensitive to the initial skill of the user and behaves as an "assist-as-needed" control scheme, adapting its engagement in real time based on the performance and needs of the user. Unlike other successful algorithms, it does not require explicit modulation of the level of impedance or error amplification during training, and its evaluation criterion makes it permissive of a range of strategies. We demonstrate that the proposed hybrid shared control paradigm with a task-based minimal intervention criterion significantly enhances task-specific training.
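The accept/reject switching can be sketched as a small wrapper around a task-specific criterion. The controller also counts how often it intervenes, which is one way to observe assist-as-needed behavior: a more skilled user should be rejected less often. The class and parameter names are hypothetical, the toy criterion is illustrative only, and representing "rejection" as substituting a zero action is an assumption; the abstract does not say how the robot physically renders a rejection.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class HybridSharedController:
    """Switches between full transparency and full rejection of user input.

    `accept` is a hypothetical task-specific criterion: accept(state, user_action) -> bool.
    """
    accept: Callable[[float, float], bool]
    rejected_output: float = 0.0  # stand-in for a rejected user action (assumption)
    steps: int = 0
    rejections: int = 0

    def __call__(self, state: float, user_action: float) -> float:
        self.steps += 1
        if self.accept(state, user_action):
            return user_action          # transparent: the user's action passes through
        self.rejections += 1
        return self.rejected_output     # rejected: the user's action is blocked

    @property
    def intervention_rate(self) -> float:
        """Fraction of time steps at which the controller intervened."""
        return self.rejections / self.steps if self.steps else 0.0

# Toy criterion (hypothetical): accept only actions that push a 1D state toward zero.
ctrl = HybridSharedController(accept=lambda x, u: x * u <= 0.0)
print(ctrl(1.0, -0.5))          # -0.5 (accepted)
print(ctrl(1.0, 0.5))           # 0.0 (rejected)
print(ctrl.intervention_rate)   # 0.5
```

Because the controller never adds its own corrective action, the user can still fail at the task, which matches the "only rejecting user actions" property described above.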

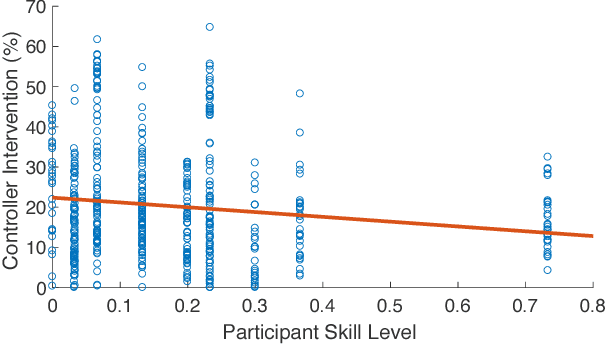

Online User Assessment for Minimal Intervention During Task-Based Robotic Assistance

Jun 06, 2018

Abstract: We propose a novel criterion for evaluating user input to human-robot interfaces for known tasks. We use the mode insertion gradient (MIG), a tool from hybrid control theory, as a filtering criterion that instantaneously assesses the impact of user actions on a dynamic system over a time window into the future. As a result, the filter is permissive of many user-chosen strategies, minimally engaging, and skill-sensitive, all qualities desired when evaluating human actions. Through a human study with 28 healthy volunteers, we show that the criterion exhibits a low but significant negative correlation between skill level, as estimated from task-specific measures in unassisted trials, and the rate of controller intervention during assistance. Moreover, a MIG-based filter can be used to create a shared control scheme for training or assistance. In the human study, we observe a substantial training effect when using a MIG-based filter to perform cart-pendulum inversion, particularly when comparing improvement via the RMS error measure. Using simulation of a controlled spring-loaded inverted pendulum (SLIP) as a test case, we observe that the MIG criterion could be used for assistance to guarantee either task completion or safety of a joint human-robot system, while maintaining the system's flexibility with respect to user-chosen strategies.
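The MIG filtering idea can be illustrated with a small sketch based on the standard first-order expression for the mode insertion gradient, dJ/dλ = ρ(t₀)ᵀ[f₂(x(t₀)) − f₁(x(t₀))], where f₁ is the nominal (default-input) dynamics, f₂ the dynamics under the user's input, and ρ the adjoint of the nominal trajectory over the look-ahead window. The linear dynamics, quadratic state cost (symmetric `Q` and `P_T`), zero default input, horizon, Euler integration, and the `MIG <= 0` acceptance rule are all assumptions for illustration; the study itself uses cart-pendulum inversion and a SLIP model with their own costs.

```python
import numpy as np

def mode_insertion_gradient(x0, u_user, u_default, A, B, Q, P_T, horizon=1.0, dt=0.01):
    """MIG at t0 for briefly switching from the default input to the user's input.

    Assumes f(x, u) = A x + B u, running cost x^T Q x, and terminal cost x^T P_T x
    (Q and P_T symmetric). Negative values mean the user's action would, to first
    order, lower the cost over the look-ahead window.
    """
    x0 = np.asarray(x0, dtype=float)
    u_user = np.asarray(u_user, dtype=float)
    u_default = np.asarray(u_default, dtype=float)
    n_steps = int(round(horizon / dt))

    # Forward-simulate the nominal (default-input) trajectory with explicit Euler.
    xs = [x0]
    for _ in range(n_steps):
        x = xs[-1]
        xs.append(x + dt * (A @ x + B @ u_default))

    # Integrate the adjoint backward in time: rho_dot = -dl/dx - A^T rho, rho(T) = dm/dx.
    rho = 2.0 * P_T @ xs[-1]
    for x in reversed(xs[1:]):
        rho = rho + dt * (2.0 * Q @ x + A.T @ rho)

    # MIG = rho(t0)^T [f(x0, u_user) - f(x0, u_default)]
    f_user = A @ x0 + B @ u_user
    f_default = A @ x0 + B @ u_default
    return float(rho @ (f_user - f_default))

def accept_user_action(*args, **kwargs):
    """Assumed filter rule: accept the user's action if it does not increase the cost."""
    return mode_insertion_gradient(*args, **kwargs) <= 0.0

# Double integrator (position, velocity) at rest 1 unit away from the target at 0.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
Q = np.eye(2)
P_T = np.eye(2)
x0 = [1.0, 0.0]
print(accept_user_action(x0, [-1.0], [0.0], A, B, Q, P_T))  # True: pushes toward 0
print(accept_user_action(x0, [1.0], [0.0], A, B, Q, P_T))   # False: pushes away
```

Because the test only asks whether the user's action helps relative to the default over the window, many different user strategies pass, which is the permissiveness the abstract describes.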

* 10 pages
