Ana Pervan

Fauna Sprout: A lightweight, approachable, developer-ready humanoid robot

Jan 26, 2026

Abstract: Recent advances in learned control, large-scale simulation, and generative models have accelerated progress toward general-purpose robotic controllers, yet the field still lacks platforms suitable for safe, expressive, long-term deployment in human environments. Most existing humanoids are either closed industrial systems or academic prototypes that are difficult to deploy and operate around people, limiting progress in robotics. We introduce Sprout, a developer platform designed to address these limitations through an emphasis on safety, expressivity, and developer accessibility. Sprout adopts a lightweight form factor with compliant control, limited joint torques, and soft exteriors to support safe operation in shared human spaces. The platform integrates whole-body control, manipulation with integrated grippers, and virtual-reality-based teleoperation within a unified hardware-software stack. An expressive head further enables social interaction, a domain that remains underexplored on most utilitarian humanoids. By lowering physical and technical barriers to deployment, Sprout expands access to capable humanoid platforms and provides a practical basis for developing embodied intelligence in real human environments.

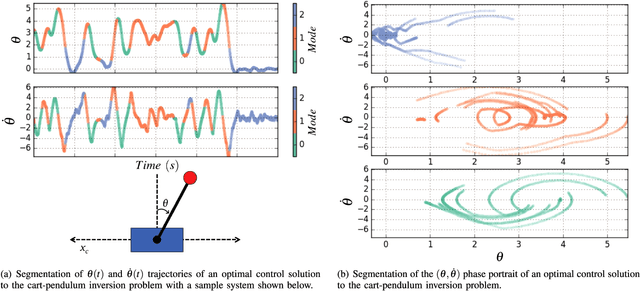

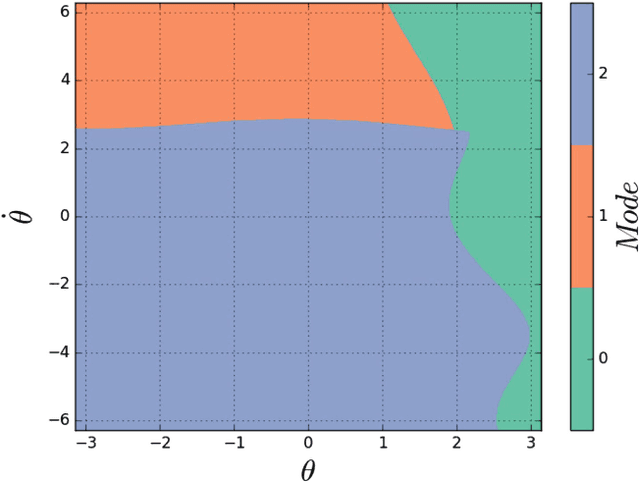

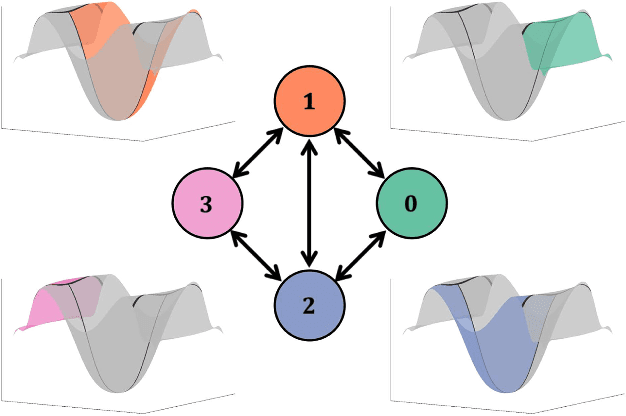

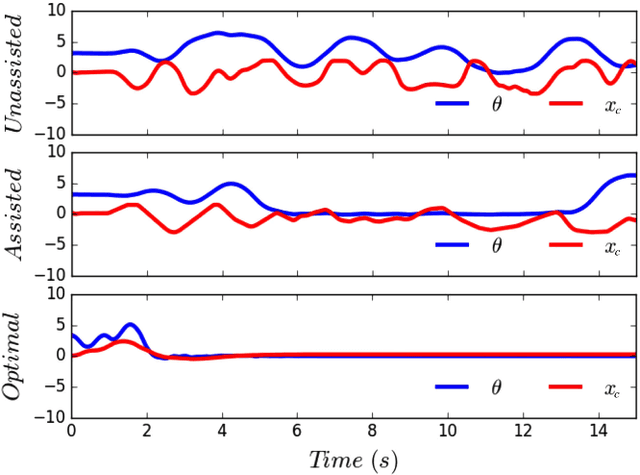

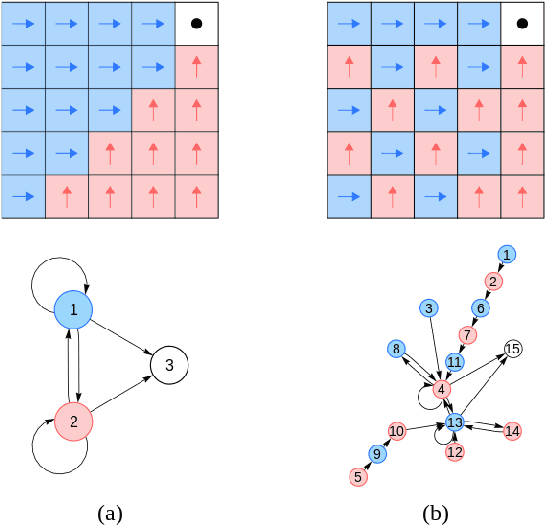

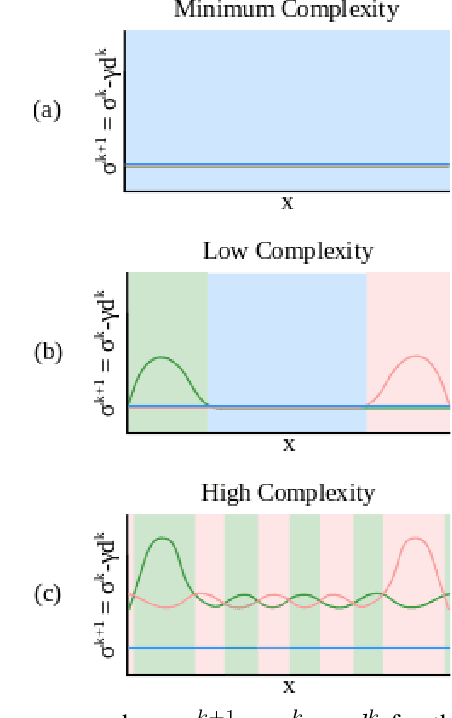

Dynamical System Segmentation for Information Measures in Motion

Dec 09, 2020

Abstract: Motions carry information about the underlying task being executed. Previous work in human motion analysis suggests that complex motions may result from the composition of fundamental submovements called movemes. The existence of finite structure in motion motivates information-theoretic approaches to motion analysis and robotic assistance. We define task embodiment as the amount of task information encoded in an agent's motions. By decoding task-specific information embedded in motion, we can use task embodiment to create detailed performance assessments. We extract an alphabet of behaviors comprising a motion without *a priori* knowledge using a novel algorithm, which we call dynamical system segmentation. For a given task, we specify an optimal agent and compute an alphabet of behaviors representative of the task. We identify these behaviors in data from agent executions and compare their relative frequencies against those of the optimal agent using the Kullback-Leibler divergence. We validate this approach using a dataset of human subjects (n=53) performing a dynamic task, and under this measure find that individuals receiving assistance better embody the task. Moreover, we find that task embodiment is a better predictor of assistance than integrated mean-squared-error.

* 8 pages
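The frequency comparison described in the abstract above can be illustrated with a minimal sketch. It assumes each trajectory has already been segmented into a sequence of behavior labels; the segmentation algorithm itself is not shown. The function names, the smoothing constant `eps`, and the direction of the divergence (agent relative to optimal) are assumptions made for illustration, not details taken from the paper.

```python
import math
from collections import Counter


def behavior_frequencies(labels, alphabet, eps=1e-9):
    """Relative frequency of each behavior in `alphabet` within `labels`.

    `eps` is a hypothetical smoothing constant so that behaviors absent
    from one sequence do not produce a division by zero in the KL term.
    """
    counts = Counter(labels)
    total = len(labels) + eps * len(alphabet)
    return {b: (counts.get(b, 0) + eps) / total for b in alphabet}


def kl_divergence(p, q):
    """Discrete Kullback-Leibler divergence D_KL(p || q) in nats."""
    return sum(p[b] * math.log(p[b] / q[b]) for b in p if p[b] > 0)


# Hypothetical behavior alphabet and label sequences.
alphabet = ["a", "b", "c"]
optimal_labels = ["a", "a", "b", "c"]
agent_labels = ["a", "b", "b", "b"]

p_optimal = behavior_frequencies(optimal_labels, alphabet)
p_agent = behavior_frequencies(agent_labels, alphabet)

# Lower divergence means the agent's behavior mix is closer to the optimal one.
print(kl_divergence(p_agent, p_optimal))
```

Under this reading, an agent whose behavior frequencies match the optimal agent's exactly scores zero, and the score grows as the two distributions diverge.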

Information Requirements of Collision-Based Micromanipulation

Jul 17, 2020

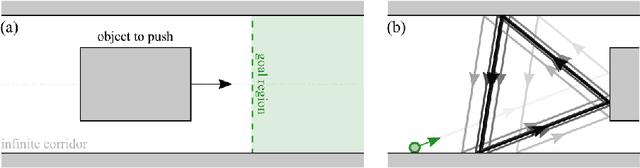

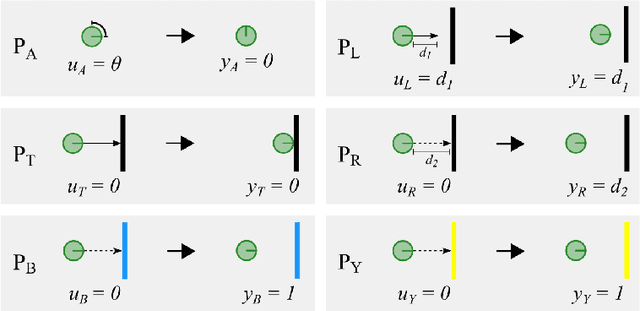

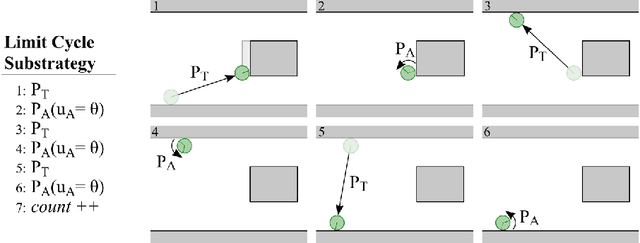

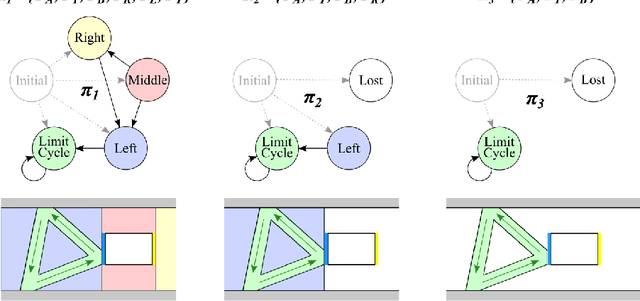

Abstract: We present a task-centered formal analysis of the relative power of several robot designs, inspired by the unique properties and constraints of micro-scale robotic systems. Our task of interest is object manipulation because it is a fundamental prerequisite for more complex applications such as micro-scale assembly or cell manipulation. Motivated by the difficulty in observing and controlling agents at the micro-scale, we focus on the design of boundary interactions: the robot's motion strategy when it collides with objects or the environment boundary, otherwise known as a bounce rule. We present minimal conditions on the sensing, memory, and actuation requirements of periodic "bouncing" robot trajectories that move an object in a desired direction through the incidental forces arising from robot-object collisions. Using an information space framework and a hierarchical controller, we compare several robot designs, emphasizing the information requirements of goal completion under different initial conditions, as well as what is required to recognize irreparable task failure. Finally, we present a physically-motivated model of boundary interactions, and analyze the robustness and dynamical properties of resulting trajectories.

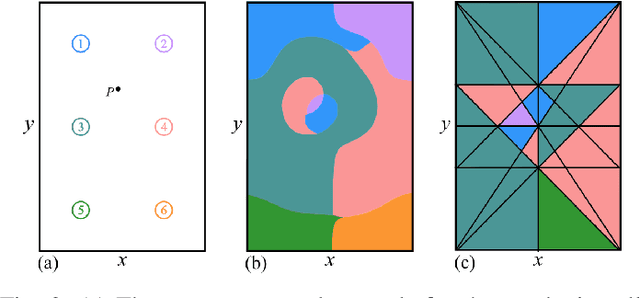

Algorithmic Design for Embodied Intelligence in Synthetic Cells

Jun 12, 2020

Abstract: In nature, biological organisms jointly evolve both their morphology and their neurological capabilities to improve their chances for survival. Consequently, task information is encoded in both their brains and their bodies. In robotics, the development of complex control and planning algorithms often bears sole responsibility for improving task performance. This dependence on centralized control can be problematic for systems with computational limitations, such as mechanical systems and robots on the microscale. In these cases we need to be able to offload complex computation onto the physical morphology of the system. To this end, we introduce a methodology for algorithmically arranging sensing and actuation components into a robot design while maintaining a low level of design complexity (quantified using a measure of graph entropy), and a high level of task embodiment (evaluated by analyzing the Kullback-Leibler divergence between physical executions of the robot and those of an idealized system). This approach computes an idealized, unconstrained control policy which is projected onto a limited selection of sensors and actuators in a given library, resulting in intelligence that is distributed away from a central processor and instead embodied in the physical body of a robot. The method is demonstrated by computationally optimizing a simulated synthetic cell.

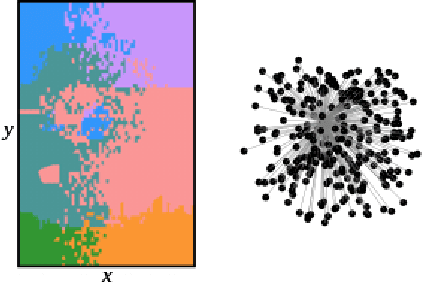

Bayesian Particles on Cyclic Graphs

Mar 08, 2020

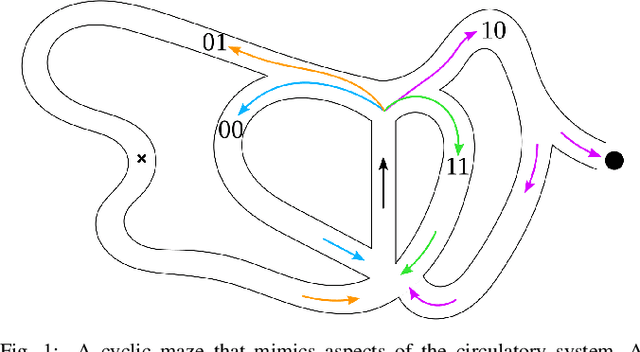

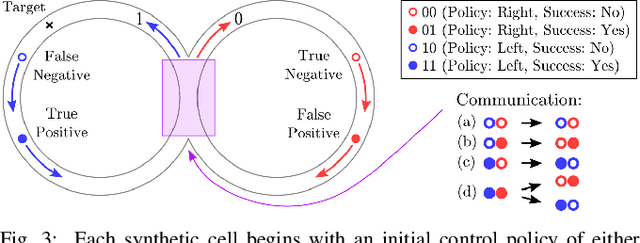

Abstract: We consider the problem of designing synthetic cells to achieve a complex goal (e.g., mimicking the immune system by seeking invaders) in a complex environment (e.g., the circulatory system), where they might have to change their control policy, communicate with each other, and deal with stochasticity including false positives and negatives, all with minimal capabilities and only a few bits of memory. We simulate the immune response using cyclic, maze-like environments and use targets at unknown locations to represent invading cells. Using only a few bits of memory, the synthetic cells are programmed to perform a reinforcement learning-type algorithm with which they update their control policy based on randomized encounters with other cells. As the synthetic cells work together to find the target, their interactions as an ensemble function as a physical implementation of a Bayesian update. That is, the particles act as a particle filter. This result provides formal properties about the behavior of the synthetic cell ensemble that can be used to ensure robustness and safety. This method of simplified reinforcement learning is evaluated in simulations, and applied to an actual model of the human circulatory system.
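For readers unfamiliar with the Bayesian update that the ensemble is said to implement, the following is a minimal textbook sketch, not the paper's few-bit algorithm. It assumes a belief over which node of a graph holds the target and a noisy detector at one node; the function name and the false-positive and false-negative rates are hypothetical values chosen for illustration.

```python
def bayes_update(belief, node, detected, p_false_pos=0.1, p_false_neg=0.2):
    """Update a belief over target locations after one noisy observation.

    belief[i] is the prior probability that the target is at node i.
    `node` is where the observation was made and `detected` is the
    (possibly wrong) sensor reading. The error rates are assumed values.
    """
    posterior = []
    for i, prior in enumerate(belief):
        if i == node:
            # Target really is here: a detection is a true positive.
            likelihood = (1 - p_false_neg) if detected else p_false_neg
        else:
            # Target is elsewhere: a detection is a false positive.
            likelihood = p_false_pos if detected else (1 - p_false_pos)
        posterior.append(likelihood * prior)
    total = sum(posterior)
    return [p / total for p in posterior]


# Uniform prior over four nodes, then a detection reported at node 2.
belief = [0.25, 0.25, 0.25, 0.25]
belief = bayes_update(belief, node=2, detected=True)
print(belief)
```

A particle filter approximates this same update by representing the belief with a population of samples, which is the role the abstract attributes to the synthetic cell ensemble.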
