Bayesian Particles on Cyclic Graphs

Paper and Code

Mar 08, 2020

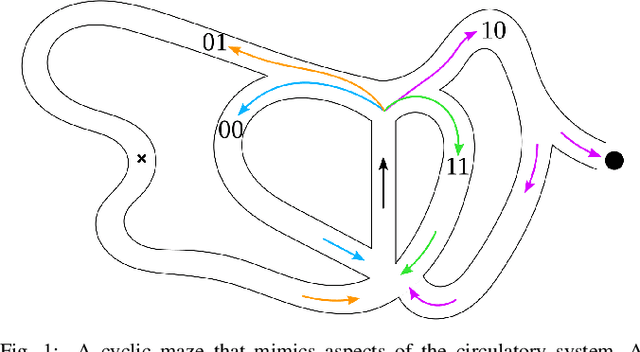

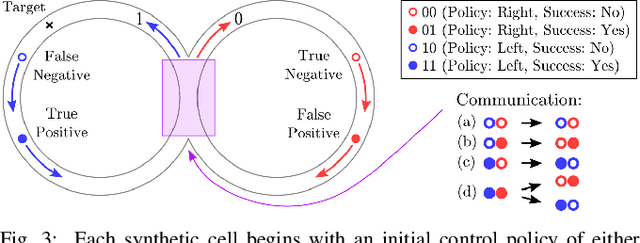

We consider the problem of designing synthetic cells to achieve a complex goal (e.g., mimicking the immune system by seeking invaders) in a complex environment (e.g., the circulatory system). In such a setting the cells might have to change their control policy, communicate with each other, and deal with stochasticity, including false positives and false negatives, all with minimal capabilities and only a few bits of memory. We simulate the immune response using cyclic, maze-like environments, with targets at unknown locations representing invading cells. The synthetic cells are programmed to perform a reinforcement learning-type algorithm in which they update their control policy based on randomized encounters with other cells. As the synthetic cells work together to find the target, their interactions as an ensemble function as a physical implementation of a Bayesian update; that is, the ensemble acts as a particle filter. This result provides formal properties of the ensemble's behavior that can be used to ensure robustness and safety. This method of simplified reinforcement learning is evaluated in simulations and applied to an actual model of the human circulatory system.
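To make the idea of a Bayesian update under false positives and false negatives concrete, here is a minimal sketch of an exact belief update over a target's location on a ring of nodes. It is not the paper's algorithm: the ring topology, the function names `bayes_update` and `patrol`, and the error rates `p_fp` and `p_fn` are all assumptions chosen for illustration, and a particle ensemble would only approximate this computation.

```python
import random


def bayes_update(belief, node, detected, p_fp=0.1, p_fn=0.2):
    """One Bayesian update of the belief over the target's location.

    belief   : list of probabilities, one per node of the ring
    node     : node at which the (noisy) sensor reading was taken
    detected : True if the sensor reported a target at `node`
    p_fp     : assumed false-positive rate (hypothetical value)
    p_fn     : assumed false-negative rate (hypothetical value)
    """
    posterior = []
    for loc, prior in enumerate(belief):
        if loc == node:
            # Target really is here: a detection is a true positive.
            likelihood = (1 - p_fn) if detected else p_fn
        else:
            # Target is elsewhere: a detection is a false positive.
            likelihood = p_fp if detected else (1 - p_fp)
        posterior.append(likelihood * prior)
    total = sum(posterior)
    return [p / total for p in posterior]


def patrol(n_nodes, target, steps, p_fp=0.1, p_fn=0.2, seed=0):
    """Walk around the ring, sensing at each node and updating the belief."""
    rng = random.Random(seed)
    belief = [1.0 / n_nodes] * n_nodes  # uniform prior over locations
    node = 0
    for _ in range(steps):
        hit_prob = (1 - p_fn) if node == target else p_fp
        detected = rng.random() < hit_prob
        belief = bayes_update(belief, node, detected, p_fp, p_fn)
        node = (node + 1) % n_nodes  # move to the next node on the cycle
    return belief
```

Calling `patrol(8, target=3, steps=80)` returns a normalized belief over the eight nodes after ten noisy laps of the ring. In a particle-filter setting, each synthetic cell would stand in for one sample from such a belief rather than storing the whole distribution.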
