Algorithmic Design for Embodied Intelligence in Synthetic Cells


Jun 12, 2020

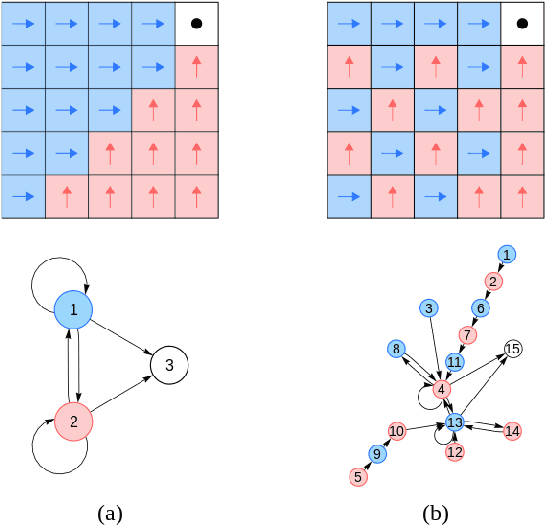

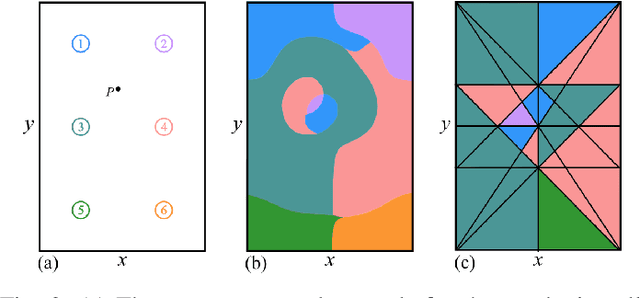

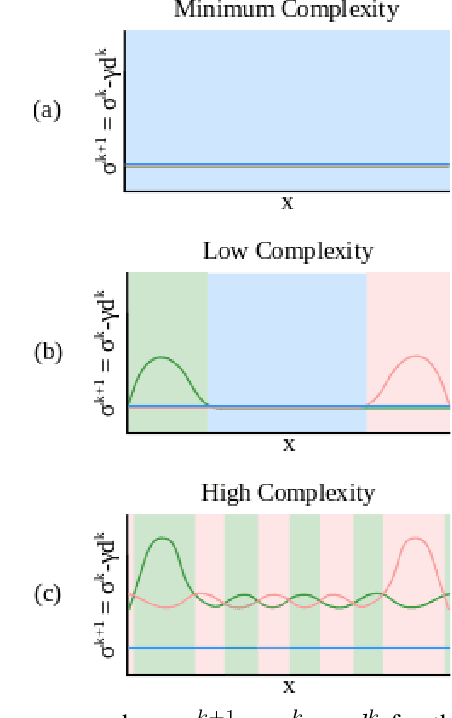

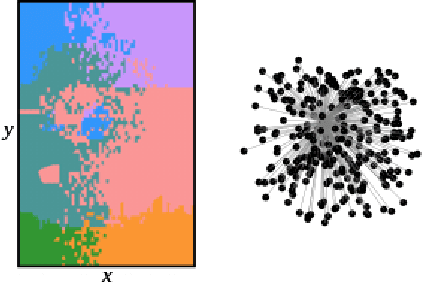

In nature, biological organisms jointly evolve both their morphology and their neurological capabilities to improve their chances for survival. Consequently, task information is encoded in both their brains and their bodies. In robotics, the development of complex control and planning algorithms often bears sole responsibility for improving task performance. This dependence on centralized control can be problematic for systems with computational limitations, such as mechanical systems and robots on the microscale. In these cases we need to be able to offload complex computation onto the physical morphology of the system. To this end, we introduce a methodology for algorithmically arranging sensing and actuation components into a robot design while maintaining a low level of design complexity (quantified using a measure of graph entropy), and a high level of task embodiment (evaluated by analyzing the Kullback-Leibler divergence between physical executions of the robot and those of an idealized system). This approach computes an idealized, unconstrained control policy which is projected onto a limited selection of sensors and actuators in a given library, resulting in intelligence that is distributed away from a central processor and instead embodied in the physical body of a robot. The method is demonstrated by computationally optimizing a simulated synthetic cell.
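The two quantities named in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the function names are hypothetical, executions are summarized as histograms over a small discrete state space, the divergence is taken from the idealized system to the embodied one, and the entropy of the normalized degree sequence is only a stand-in for whichever graph entropy measure the authors use.

```python
import math
from collections import Counter


def state_histogram(trajectory, n_states):
    """Empirical distribution over discrete states visited in one execution."""
    counts = Counter(trajectory)
    total = len(trajectory)
    return [counts.get(s, 0) / total for s in range(n_states)]


def kl_divergence(p, q, eps=1e-12):
    """D_KL(p || q) in nats for two discrete distributions on the same support.

    q is floored at eps so that states the embodied design never visits
    produce a large but finite penalty (an illustrative choice).
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def degree_sequence_entropy(edges, n_nodes):
    """Shannon entropy (nats) of the normalized degree sequence of a design graph.

    Assumption: nodes are sensing/actuation components and edges are
    connections between them; this is a simple proxy for design complexity.
    """
    degree = [0] * n_nodes
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    total = sum(degree)
    if total == 0:
        return 0.0
    return -sum((d / total) * math.log(d / total) for d in degree if d > 0)


if __name__ == "__main__":
    # Hypothetical executions over 3 discrete states.
    ideal = state_histogram([0, 0, 1, 2, 2, 2], n_states=3)
    embodied = state_histogram([0, 1, 1, 2, 2, 2], n_states=3)
    print("task embodiment gap (KL):", kl_divergence(ideal, embodied))

    # Hypothetical design: one sensor (0) wired to two actuators (1, 2).
    print("design complexity proxy:", degree_sequence_entropy([(0, 1), (0, 2)], 3))
```

Under these assumptions, a lower divergence means the constrained design behaves more like the idealized system, and a lower entropy means a simpler arrangement of components; a design search would trade one off against the other.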
