Tarik Tosun

A Low-Cost, Highly Customizable Solution for Position Estimation in Modular Robots

Jan 11, 2022

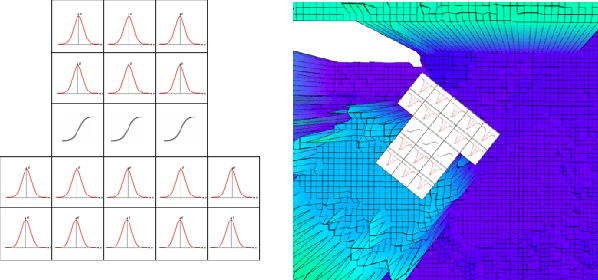

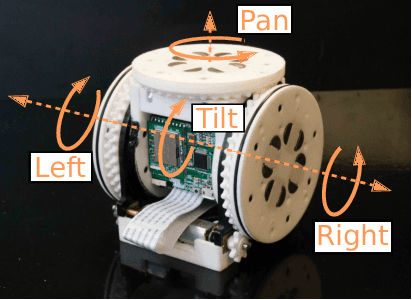

Abstract: Accurate position sensing is important for state estimation and control in robotics. Reliable and accurate position sensors are usually expensive and difficult to customize, and incorporating them into systems with very tight volume constraints, such as modular robots, is particularly difficult. PaintPots are low-cost, reliable, and highly customizable position sensors, but their performance depends heavily on the manufacturing and calibration process. This paper presents a Kalman filter with a simplified observation model, developed to deal with the non-linearity issues that arise from the use of low-cost microcontrollers. In addition, a complete solution for using PaintPots in a variety of sensing modalities, spanning manufacturing, characterization, and estimation, is presented for an example modular robot, SMORES-EP. This solution can be easily adapted to a wide range of applications.

* 10 pages, 28 figures
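The abstract does not spell out the filter's equations, so the following is only a minimal sketch of the general idea: a scalar Kalman filter that tracks one joint angle from a resistive position sensor such as a PaintPot. It assumes, hypothetically, that the angle evolves as a random walk driven by a commanded velocity and that the paper's "simplified observation model" can be stood in for by a calibrated linear map from angle to ADC counts (`z = a * x + b`). The class name, the calibration constants `a` and `b`, and the noise values are illustrative assumptions, not the authors' model.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalmanFilter:
    """1-D Kalman filter for a joint angle read through a resistive sensor.

    Hypothetical assumptions, for illustration only:
    - state x: joint angle (rad), propagated with a commanded velocity u (rad/s);
    - observation z: raw ADC reading, linearized as z = a * x + b, where a and b
      would come from a per-sensor calibration.
    """
    a: float        # assumed calibrated gain (ADC counts per radian)
    b: float        # assumed calibrated offset (ADC counts)
    q: float        # process noise variance added per prediction step (rad^2)
    r: float        # measurement noise variance (counts^2)
    x: float = 0.0  # current angle estimate (rad)
    p: float = 1.0  # current estimate variance (rad^2)

    def predict(self, u: float, dt: float) -> None:
        # Move the estimate with the commanded velocity; uncertainty grows by q.
        self.x += u * dt
        self.p += self.q

    def update(self, z: float) -> float:
        # Innovation: measured counts minus counts predicted by the linear model.
        y = z - (self.a * self.x + self.b)
        s = self.a * self.p * self.a + self.r  # innovation variance
        k = self.p * self.a / s                # Kalman gain (rad per count)
        self.x += k * y
        self.p *= 1.0 - k * self.a
        return self.x


if __name__ == "__main__":
    kf = ScalarKalmanFilter(a=500.0, b=100.0, q=1e-6, r=4.0)
    for _ in range(50):
        kf.predict(u=0.0, dt=0.01)
        kf.update(z=600.0)  # 600 counts corresponds to 1.0 rad under the assumed model
    print(round(kf.x, 3))  # prints 1.0
```

Keeping the observation model linear keeps each update to a handful of scalar multiplications and one division, which is the kind of cost a low-cost microcontroller can afford; a real PaintPot calibration could replace `a * x + b` with a piecewise or lookup-table model.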

Robotic Grasping through Combined Image-Based Grasp Proposal and 3D Reconstruction

Mar 03, 2020

Abstract: We present a novel approach to robotic grasp planning using both a learned grasp proposal network and a learned 3D shape reconstruction network. Our system generates 6-DOF grasps from a single RGB-D image of the target object, which is provided as input to both networks. By using the geometric reconstruction to refine the candidate grasp produced by the grasp proposal network, our system is able to accurately grasp both known and unknown objects, even when the grasp location on the object is not visible in the input image. This paper presents the network architectures, training procedures, and grasp refinement method that comprise our system. Hardware experiments demonstrate the efficacy of our system at grasping both known and unknown objects (91% success rate). We additionally perform ablation studies that show the benefits of combining a learned grasp proposal with geometric reconstruction for grasping, and also show that our system outperforms several baselines in a grasping task.

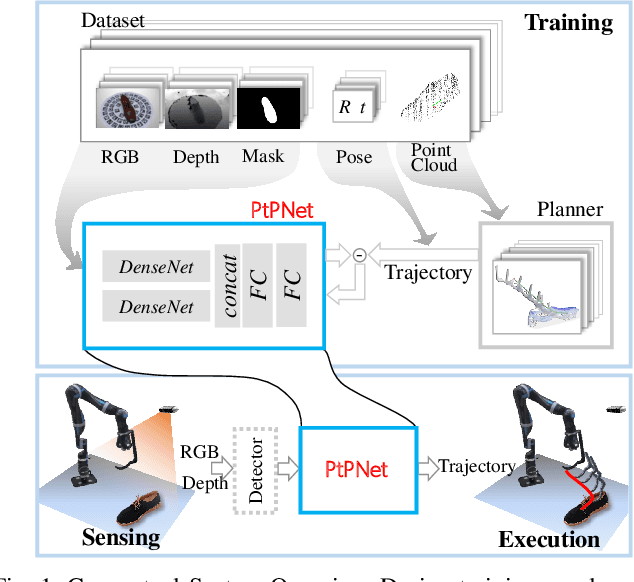

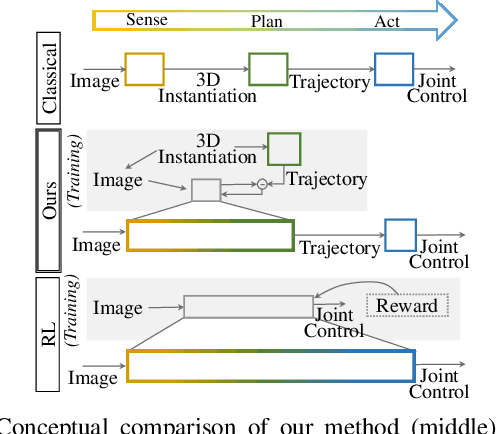

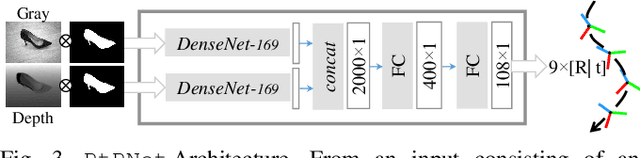

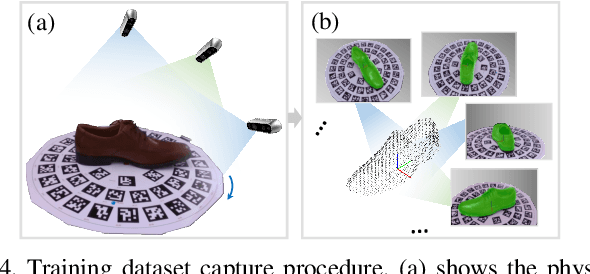

Pixels to Plans: Learning Non-Prehensile Manipulation by Imitating a Planner

Apr 05, 2019

Abstract: We present a novel method enabling robots to quickly learn to manipulate objects by leveraging a motion planner to generate "expert" training trajectories from a small amount of human-labeled data. In contrast to the traditional sense-plan-act cycle, we propose a deep learning architecture and training regimen called PtPNet that can estimate effective end-effector trajectories for manipulation directly from a single RGB-D image of an object. Additionally, we present a data collection and augmentation pipeline that enables the automatic generation of large numbers (millions) of training image and trajectory examples with almost no human labeling effort. We demonstrate our approach in a non-prehensile tool-based manipulation task, specifically picking up shoes with a hook. In hardware experiments, PtPNet generates motion plans (open-loop trajectories) that reliably (89% success over 189 trials) pick up four very different shoes from a range of positions and orientations, and reliably picks up a shoe it has never seen before. Compared with a traditional sense-plan-act paradigm, our system has the advantages of operating on sparse information (single RGB-D frame), producing high-quality trajectories much faster than the "expert" planner (300 ms versus several seconds), and generalizing effectively to previously unseen shoes.

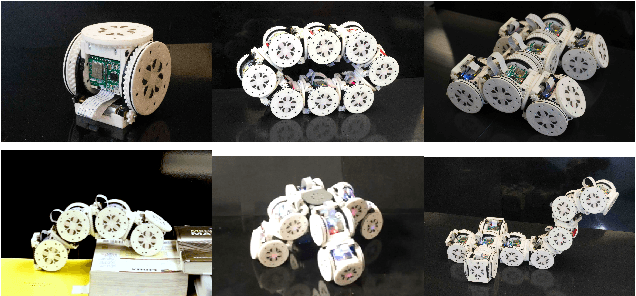

An Integrated System for Perception-Driven Autonomy with Modular Robots

Dec 13, 2018

Abstract: The theoretical ability of modular robots to reconfigure in response to complex tasks in a priori unknown environments has frequently been cited as an advantage and remains a major motivator for work in the field. We present a modular robot system capable of autonomously completing high-level tasks by reactively reconfiguring to meet the needs of a perceived, a priori unknown environment. The system integrates perception, high-level planning, and modular hardware, and is validated in three hardware demonstrations. Given a high-level task specification, a modular robot autonomously explores an unknown environment, decides when and how to reconfigure, and manipulates objects to complete its task. The system architecture balances distributed mechanical elements with centralized perception, planning, and control. By providing an example of how a modular robot system can be designed to leverage reactive reconfigurability in unknown environments, we have begun to lay the groundwork for modular self-reconfigurable robots to address tasks in the real world.

* Published article available at: http://robotics.sciencemag.org/cgi/content/full/3/23/eaat4983?ijkey=iBq7yW7Z8vmjE&keytype=ref&siteid=robotics

Optimal Structure Synthesis for Environment Augmenting Robots

Dec 11, 2018

Abstract: Building structures can allow a robot to surmount large obstacles, expanding the set of areas it can reach. This paper presents a planning algorithm to automatically determine what structures a construction-capable robot must build in order to traverse its entire environment. Given an environment, a set of building blocks, and a robot capable of building structures, we seek an optimal set of structures (using a minimum number of building blocks) that could be built to make the entire environment traversable with respect to the robot's movement capabilities. We show that this problem is NP-hard, and present a complete, optimal algorithm that solves it using a branch-and-bound strategy. The algorithm runs in exponential time in the worst case, but solves typical problems with practical speed. In hardware experiments, we show that the algorithm solves 3D maps of real indoor environments in about one minute, and that the structures selected by the algorithm allow a robot to traverse the entire environment. An accompanying video is available online at https://youtu.be/B9WM557NP44.
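The paper's formulation (3D maps, the robot's movement capabilities, block geometry) is not reproduced here. As a hedged illustration of the branch-and-bound strategy only, the sketch below assumes a simplified, hypothetical model: the environment is a set of numbered regions, some pairs are already traversable, and each candidate structure has a block cost and a set of region pairs it would make traversable. Traversability is treated as symmetric for simplicity, and block costs are assumed non-negative. `min_block_structures` and its inputs are hypothetical names.

```python
from typing import List, Optional, Sequence, Tuple

Edge = Tuple[int, int]


def _connected(n: int, edges: Sequence[Edge]) -> bool:
    # Union-find over n regions; True if every region ends up in one component.
    parent = list(range(n))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for u, v in edges:
        parent[find(u)] = find(v)
    return len({find(i) for i in range(n)}) == 1


def min_block_structures(
    n_regions: int,
    free_edges: Sequence[Edge],
    candidates: Sequence[Tuple[int, Sequence[Edge]]],
) -> Tuple[Optional[int], List[int]]:
    """Return (minimum block count, chosen candidate indices), or (None, []) if infeasible.

    candidates[i] = (block_cost, region pairs the structure would make traversable).
    """
    best_cost: Optional[int] = None
    best_choice: List[int] = []

    def search(i: int, chosen: List[int], cost: int) -> None:
        nonlocal best_cost, best_choice
        # Cost bound: a partial selection at least as expensive as the incumbent cannot win.
        if best_cost is not None and cost >= best_cost:
            return
        edges = list(free_edges) + [e for j in chosen for e in candidates[j][1]]
        if _connected(n_regions, edges):
            # Feasible and cheaper; adding more structures can only raise the cost.
            best_cost, best_choice = cost, list(chosen)
            return
        if i == len(candidates):
            return
        # Feasibility bound: prune if even every remaining candidate cannot connect the map.
        optimistic = edges + [e for _, es in candidates[i:] for e in es]
        if not _connected(n_regions, optimistic):
            return
        # Branch: include candidate i, then exclude it.
        chosen.append(i)
        search(i + 1, chosen, cost + candidates[i][0])
        chosen.pop()
        search(i + 1, chosen, cost)

    search(0, [], 0)
    return best_cost, best_choice


if __name__ == "__main__":
    cost, chosen = min_block_structures(
        n_regions=4,
        free_edges=[(0, 1)],
        candidates=[(3, [(1, 2)]), (5, [(2, 3)]), (2, [(1, 3)]), (4, [(0, 2), (0, 3)])],
    )
    print(cost, chosen)  # prints: 4 [3]
```

The include/exclude tree has up to 2^n leaves, consistent with the exponential worst case stated in the abstract; the two bounds are what keep typical instances fast. A faithful version would instead derive candidates and directional traversability from the robot's actual movement capabilities.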

Accomplishing High-Level Tasks with Modular Robots

May 01, 2018

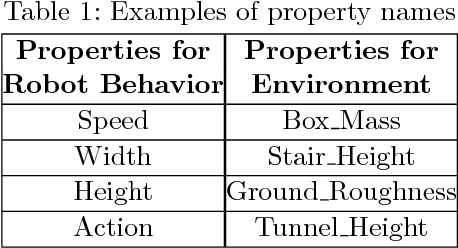

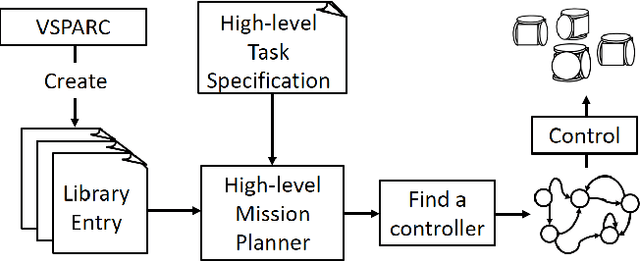

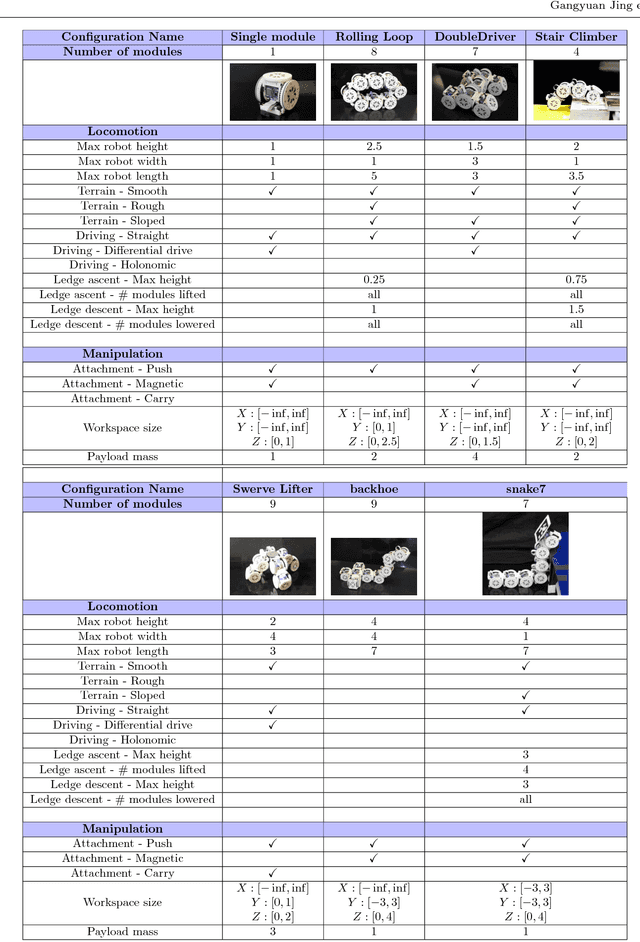

Abstract: The advantage of modular self-reconfigurable robot systems is their flexibility, but this advantage can only be realized if appropriate configurations (shapes) and behaviors (controlling programs) can be selected for a given task. In this paper, we present an integrated system for addressing high-level tasks with modular robots, and demonstrate that it is capable of accomplishing challenging, multi-part tasks in hardware experiments. The system consists of four tightly integrated components: (1) a high-level mission planner, (2) a large design library spanning a wide range of functionality, (3) a design and simulation tool for populating the library with new configurations and behaviors, and (4) modular robot hardware. This paper builds on earlier work by the authors, extending the original system to include environmentally adaptive parametric behaviors, which integrate motion planners and feedback controllers with the system.

Perception-Informed Autonomous Environment Augmentation With Modular Robots

Mar 01, 2018

Abstract: We present a system enabling a modular robot to autonomously build structures in order to accomplish high-level tasks. Building structures allows the robot to surmount large obstacles, expanding the set of tasks it can perform. This addresses a common weakness of modular robot systems, which often struggle to traverse large obstacles. This paper presents the hardware, perception, and planning tools that comprise our system. An environment characterization algorithm identifies features in the environment that can be augmented to create a path between two disconnected regions of the environment. Specially designed building blocks enable the robot to create structures that can augment the environment to make obstacles traversable. A high-level planner reasons about the task, robot locomotion capabilities, and environment to decide if and where to augment the environment in order to perform the desired task. We validate our system in hardware experiments.
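The abstract does not describe the planner's internals. As a hypothetical illustration of deciding "if and where" to augment, the sketch assumes the environment characterization step yields a directed graph of regions in which each transition is either directly traversable or traversable only after building a structure. It then finds a route that needs the fewest structures using a 0-1 BFS, a standard shortest-path technique swapped in here for illustration, not the paper's planner. `plan_augmentations` and the region names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple


def plan_augmentations(
    graph: Dict[str, List[Tuple[str, bool]]],
    start: str,
    goal: str,
) -> Optional[List[Tuple[str, str]]]:
    """Return the transitions to augment on a fewest-structures route, or None if unreachable.

    graph[u] = [(v, needs_structure), ...]; needs_structure is True when the move
    u -> v is only possible after building a structure (hypothetical model).
    An empty list means the goal is reachable without any augmentation.
    """
    inf = float("inf")
    dist: Dict[str, float] = {start: 0}
    prev: Dict[str, Tuple[str, bool]] = {}
    dq = deque([start])
    while dq:
        u = dq.popleft()
        for v, needs_structure in graph.get(u, []):
            w = 1 if needs_structure else 0  # cost = number of structures built
            if dist[u] + w < dist.get(v, inf):
                dist[v] = dist[u] + w
                prev[v] = (u, needs_structure)
                # 0-1 BFS: free moves go to the front, augmented moves to the back.
                if w == 0:
                    dq.appendleft(v)
                else:
                    dq.append(v)
    if goal not in dist:
        return None
    # Walk predecessors back from the goal, collecting the transitions that need structures.
    augment: List[Tuple[str, str]] = []
    node = goal
    while node != start:
        u, needs_structure = prev[node]
        if needs_structure:
            augment.append((u, node))
        node = u
    return list(reversed(augment))


if __name__ == "__main__":
    env = {
        "floor": [("ledge", True), ("ramp_base", False)],
        "ramp_base": [("floor", False)],
        "ledge": [("floor", False), ("table", False)],
        "table": [],
    }
    print(plan_augmentations(env, "floor", "table"))  # prints [('floor', 'ledge')]
```

Returning an empty list versus a list of transitions mirrors the "if and where" decision. Directed edges let the model express moves such as dropping off a ledge that the robot can make unaided but cannot reverse.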
