Subodh Mishra

Look Both Ways: Bidirectional Visual Sensing for Automatic Multi-Camera Registration

Aug 15, 2022

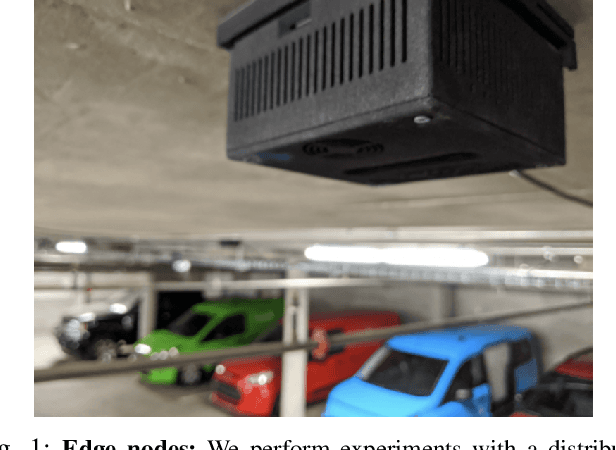

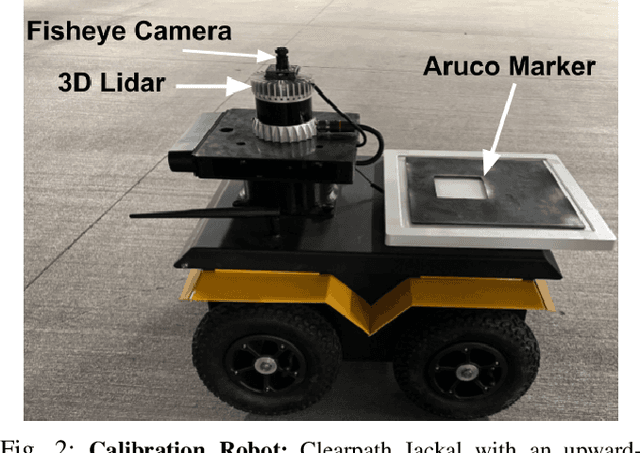

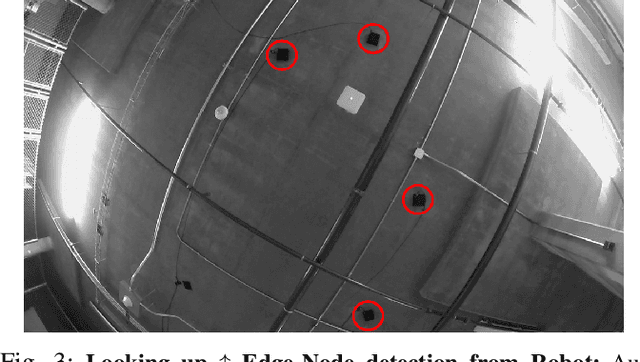

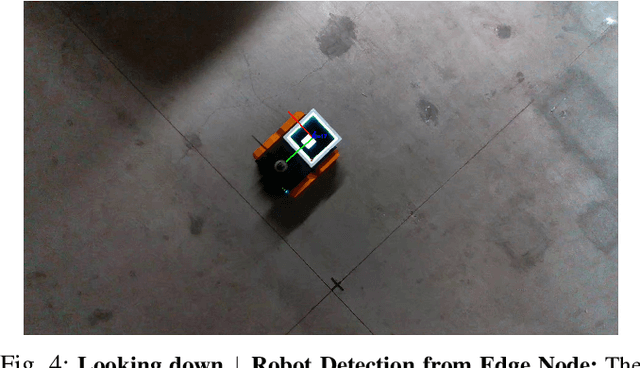

Abstract: This work describes the automatic registration of a large network (approximately 40) of fixed, ceiling-mounted environment cameras spread over a large area (approximately 800 square meters) using a mobile calibration robot equipped with a single upward-facing fisheye camera and a backlit ArUco marker. The fisheye camera is used for visual odometry (VO), and the backlit ArUco marker makes the calibration robot easy to detect in the environment cameras. In addition, the fisheye camera can itself detect the environment cameras. This two-way, bidirectional detection constrains the poses of the environment cameras, which are then estimated by solving an optimization problem. Such an approach can be used to automatically register a large-scale multi-camera system used for surveillance, automated parking, or robotic applications. This VO-based multi-camera registration method is extensively validated using real-world experiments and is also compared against a similar approach that uses a LiDAR, which is a more expensive, heavier, and more power-hungry sensor.
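A minimal sketch of how the two detection directions could enter such an optimization, assuming 4x4 homogeneous transforms, a robot pose from VO, a known marker mounting on the robot, and a marker pose returned by the environment camera's detector. The function names (`make_pose`, `camera_pose_from_marker`, `bearing_residual`) are hypothetical and this is an illustration, not the authors' cost function: one marker detection gives an initial camera pose by composing transforms, and each fisheye detection of an environment camera adds a bearing residual that a solver would drive toward zero.

```python
import numpy as np

def make_pose(yaw, t):
    """Hypothetical helper: 4x4 transform rotating by `yaw` (rad) about z, then translating by `t`."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T

def camera_pose_from_marker(T_w_r, T_r_m, T_c_m):
    """World pose of an environment camera from one marker detection.

    T_w_r: robot pose in the world (assumed to come from visual odometry)
    T_r_m: marker pose in the robot frame (assumed known from the mounting)
    T_c_m: marker pose in the environment-camera frame (from marker detection)
    """
    # T_w_c = T_w_m @ T_m_c = (T_w_r @ T_r_m) @ inv(T_c_m)
    return T_w_r @ T_r_m @ np.linalg.inv(T_c_m)

def bearing_residual(T_w_f, cam_center_w, observed_bearing_f):
    """Reverse-direction constraint: the fisheye camera at pose T_w_f observes the
    environment camera along the unit vector `observed_bearing_f` (fisheye frame)."""
    R, t = T_w_f[:3, :3], T_w_f[:3, 3]
    v = R.T @ (cam_center_w - t)  # environment-camera center in the fisheye frame
    predicted = v / np.linalg.norm(v)
    return observed_bearing_f - predicted
```

With noise-free inputs the composed pose reproduces the true camera pose and the bearing residual is zero; with real detections, many such terms over the robot's trajectory would be stacked into one least-squares problem.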

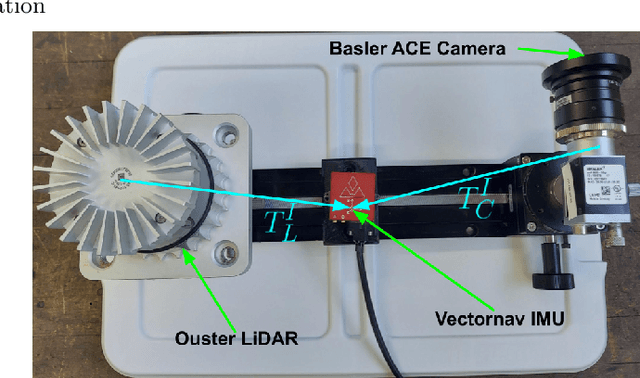

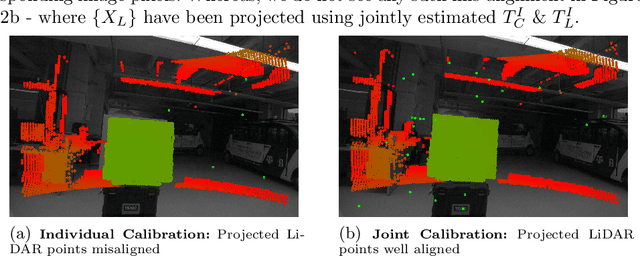

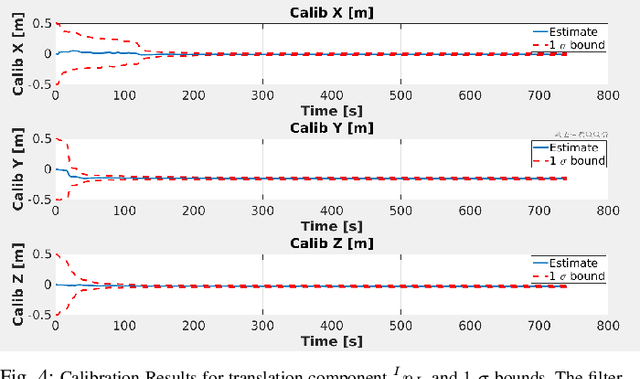

Extrinsic Calibration of LiDAR, IMU and Camera

May 18, 2022

Abstract: In this work we present a novel method to jointly calibrate a sensor suite consisting of a 3D LiDAR, an Inertial Measurement Unit (IMU), and a camera within an Extended Kalman Filter (EKF) framework. We exploit pairwise constraints between the three sensor pairs to perform the EKF update and experimentally demonstrate that joint calibration outperforms calibrating each sensor pair individually.
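To illustrate how pairwise constraints can update one shared state, here is a toy EKF measurement update. It assumes a translation-only state (the LiDAR and camera offsets from the IMU) and three hypothetical pairwise measurement functions; the actual method also estimates rotations and uses its own constraints. Because the LiDAR-camera measurement depends on both offsets, it couples the two estimates, which is the intuition behind calibrating jointly rather than pair by pair.

```python
import numpy as np

def numerical_jacobian(h, x, eps=1e-6):
    """Forward-difference Jacobian of the measurement function h at x."""
    hx = h(x)
    J = np.zeros((hx.size, x.size))
    for i in range(x.size):
        dx = np.zeros_like(x)
        dx[i] = eps
        J[:, i] = (h(x + dx) - hx) / eps
    return J

def ekf_update(x, P, z, h, R):
    """One EKF measurement update for z = h(x) + noise with covariance R."""
    H = numerical_jacobian(h, x)
    S = H @ P @ H.T + R                # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)     # Kalman gain
    x_new = x + K @ (z - h(x))
    P_new = (np.eye(x.size) - K @ H) @ P
    return x_new, P_new

# Hypothetical toy state: x[0:3] = LiDAR offset from IMU, x[3:6] = camera offset from IMU.
def h_lidar_imu(x):
    """LiDAR-IMU pair observes the LiDAR offset."""
    return x[0:3]

def h_cam_imu(x):
    """Camera-IMU pair observes the camera offset."""
    return x[3:6]

def h_lidar_cam(x):
    """LiDAR-camera pair observes the offset between the two sensors."""
    return x[3:6] - x[0:3]
```

In use, each incoming pairwise observation calls `ekf_update` with its own measurement function, so all three constraints refine the same state vector and covariance.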

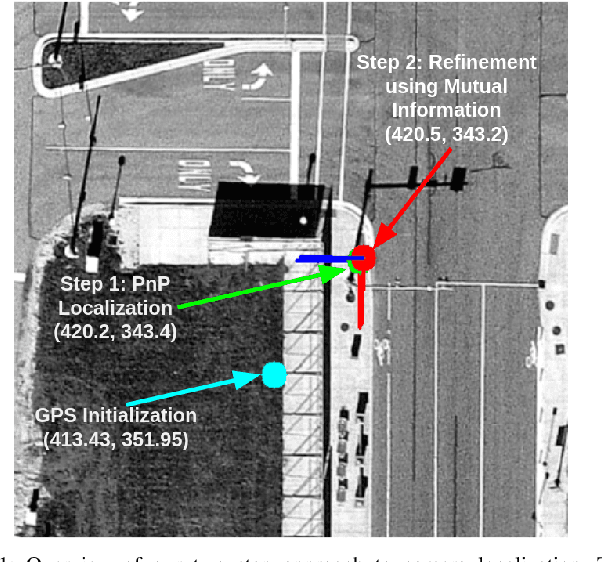

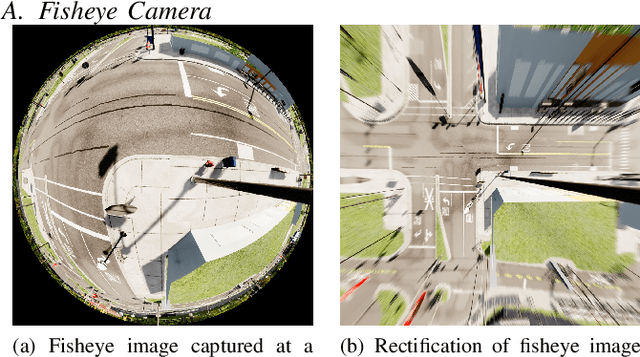

Localization of a Smart Infrastructure Fisheye Camera in a Prior Map for Autonomous Vehicles

Sep 28, 2021

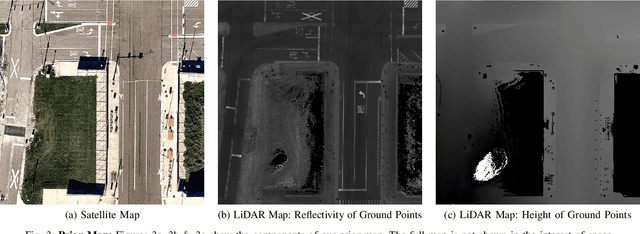

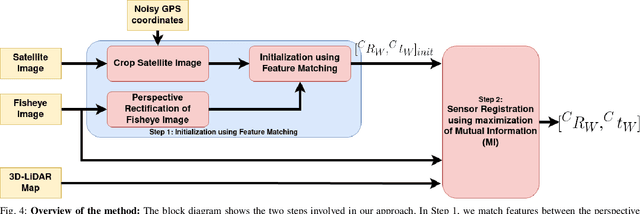

Abstract: This work presents a technique for localizing a smart infrastructure node, consisting of a fisheye camera, in a prior map. These cameras can detect objects that are outside the line of sight of autonomous vehicles (AVs) and send that information to the AVs using V2X technology. However, for this information to be of any use to an AV, the detected objects must be expressed in the reference frame of the prior map that the AV uses for its own navigation. It is therefore important to know the accurate pose of the infrastructure camera with respect to the prior map. We propose to solve this localization problem in two steps: (i) we perform feature matching between a perspective projection of the fisheye image and bird's-eye-view (BEV) satellite imagery from the prior map to estimate an initial camera pose, and (ii) we refine this initialization by maximizing the Mutual Information (MI) between the pixel intensities of the fisheye image and the reflectivity of the 3D LiDAR points in the map data. We validate our method on simulated data and also present results with real-world data.
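The refinement step scores a candidate camera pose by how strongly image intensities and LiDAR reflectivities agree. A minimal sketch of a histogram-based Mutual Information estimate, assuming the LiDAR points have already been projected into the fisheye image under the candidate pose so that each point is paired with a pixel intensity; the bin count and the function name are assumptions, and the pose search that maximizes this score is not shown.

```python
import numpy as np

def mutual_information(a, b, bins=32):
    """Plug-in MI estimate (in nats) between two paired samples via a joint histogram.

    a: grayscale intensities of the pixels the LiDAR points project onto
    b: reflectivity values of those same LiDAR points
    """
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    p_ab = joint / joint.sum()                # joint distribution
    p_a = p_ab.sum(axis=1, keepdims=True)     # marginal of a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)     # marginal of b, shape (1, bins)
    nz = p_ab > 0                             # empty cells contribute 0 (0 * log 0 = 0)
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / (p_a * p_b)[nz])))
```

A well-aligned pose pairs each point with the pixel that actually imaged it, so intensity and reflectivity become statistically dependent and the score rises; a misaligned pose pairs them nearly at random and the score drops toward zero.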

Motion based Extrinsic Calibration of a 3D Lidar and an IMU

Apr 25, 2021

Abstract: This work presents a novel algorithm for estimating the extrinsic calibration between a 3D Lidar and an IMU using an Extended Kalman Filter (EKF) that exploits the motion-based calibration constraint for the state update. The steps are: data collection by moving the Lidar-inertial sensor suite randomly along all degrees of freedom; determination of the inter-sensor rotation using the rotational component of the aforementioned motion-based calibration constraint in a least-squares optimization framework; and, finally, determination of the inter-sensor translation using the motion-based calibration constraint in the EKF framework. We experimentally validate our method on data collected in our lab.
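For the rotation step, a motion-based constraint between a Lidar motion A and the corresponding IMU motion B is commonly written as A X = X B. Its rotational part, R_A R_X = R_X R_B, implies that the rotation axes satisfy log(R_A) = R_X log(R_B). The sketch below solves this axis-alignment form in closed form with an SVD (the Kabsch solution). It is one common least-squares formulation, assumed here for illustration and not necessarily the one used in this work; which motion plays A and which plays B depends on the frame convention, and the EKF translation step is not shown. All function names are hypothetical.

```python
import numpy as np

def rodrigues(w):
    """Rotation matrix from an axis-angle vector w (rotation angle = |w|)."""
    w = np.asarray(w, dtype=float)
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K

def log_rotation(R):
    """Axis-angle vector of R (assumes the angle is strictly between 0 and pi)."""
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    v = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta * v / (2.0 * np.sin(theta))

def solve_rotation(RA_list, RB_list):
    """Least-squares R_X from R_A R_X = R_X R_B, by aligning log(R_A) with R_X log(R_B)."""
    H = sum(np.outer(log_rotation(RB), log_rotation(RA))
            for RA, RB in zip(RA_list, RB_list))
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against returning a reflection
    return Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
```

At least two motions with non-parallel rotation axes are needed; this is one reason the data collection excites all degrees of freedom.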

Experimental Evaluation of 3D-LIDAR Camera Extrinsic Calibration

Jul 03, 2020

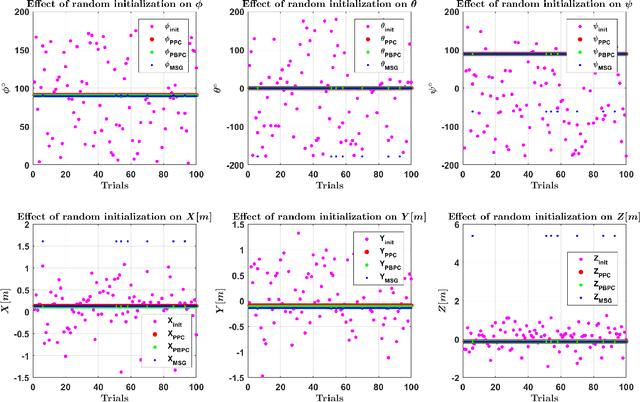

Abstract: In this paper we perform an experimental comparison of three different target-based 3D-LIDAR camera calibration algorithms. We briefly elucidate the mathematical background behind each method and provide insights into practical aspects such as the ease of data collection for each of them. We extensively evaluate these algorithms on a sensor suite consisting of multiple cameras and LIDARs, assessing their robustness to random initialization and using metrics such as the Mean Line Re-projection Error (MLRE) and the Factory Stereo Calibration Error. We also show the effect of sensor noise on the calibration results of all the algorithms and conclude with a note on which calibration algorithm should be used under which circumstances.
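One plausible way to compute a line re-projection error, assuming a pinhole camera with intrinsics K, a LiDAR-to-camera extrinsic (R, t), and LiDAR points sampled on the board edges, each set paired with its image line in homogeneous form (a, b, c). The exact MLRE definition used in the paper may differ, and the function names are hypothetical. Each projected edge point contributes its perpendicular pixel distance to its image line, and the mean over all points is reported.

```python
import numpy as np

def project(K, R, t, pts_lidar):
    """Pinhole projection of Nx3 LiDAR points to pixel coordinates using extrinsic (R, t)."""
    pc = pts_lidar @ R.T + t          # LiDAR frame -> camera frame
    uvw = pc @ K.T                    # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]

def mean_line_reprojection_error(K, R, t, edges):
    """edges: list of (Nx3 LiDAR edge points, image line (a, b, c) with a*u + b*v + c = 0)."""
    dists = []
    for pts, (a, b, c) in edges:
        uv = project(K, R, t, pts)
        # perpendicular distance from each projected point to the image line
        dists.append(np.abs(a * uv[:, 0] + b * uv[:, 1] + c) / np.hypot(a, b))
    return float(np.mean(np.concatenate(dists)))
```

For example, with a focal length of 500 px and board edge points 5 m away, a 1 cm lateral error in t moves the projections by 500 × 0.01 / 5 = 1 px, so the metric reads 1.0.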

Extrinsic Calibration of a 3D-LIDAR and a Camera

Mar 02, 2020

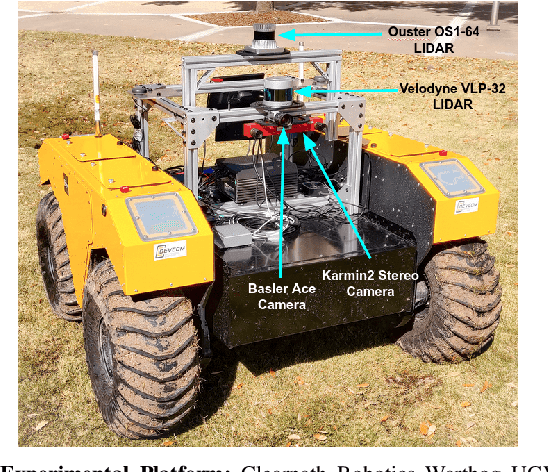

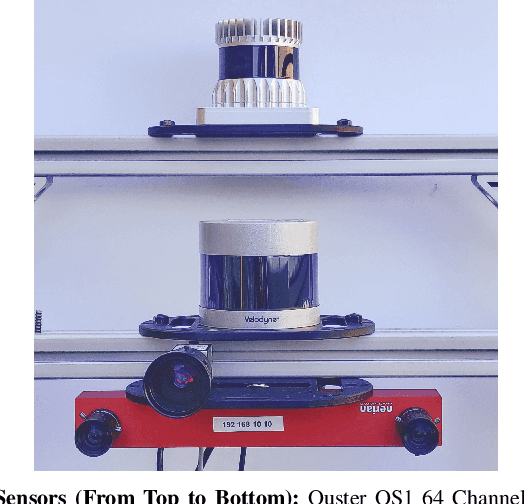

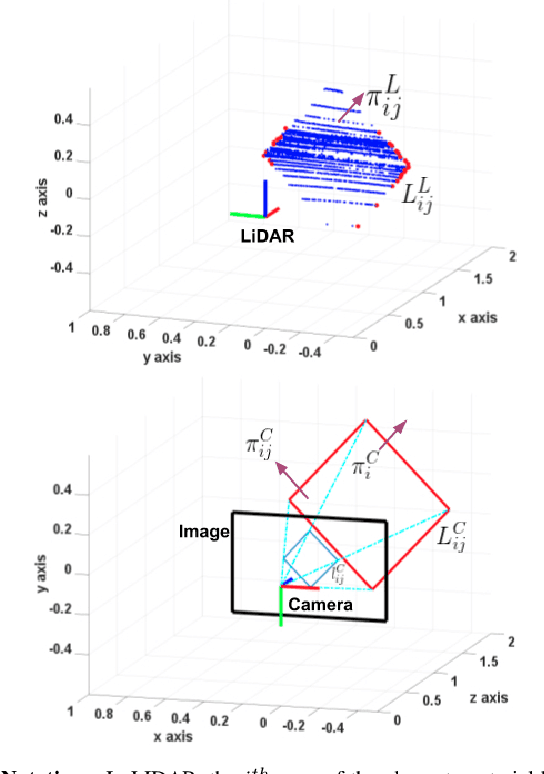

Abstract: This work presents an algorithm for estimating the extrinsic parameters between a 3D LIDAR and a projective camera using a marker-less planar target, by exploiting two geometric constraints: planar-surface-point-to-plane and planar-edge-point-to-back-projected-plane. The proposed method uses data collected by placing the planar board at different poses in the common field of view of the LIDAR and the camera. The steps are: detection of the target and its edges in the LIDAR and camera frames; matching of the detected planes and lines across the two sensing modalities; and, finally, solving a cost function formed by the aforementioned geometric constraints, which link the features detected by the LIDAR and the camera, using non-linear least squares. We have extensively validated our algorithm using two Basler cameras and Velodyne VLP-32 and Ouster OS1 LIDARs.
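The two constraints can be written as residuals on the LiDAR-to-camera extrinsic (R, t). A minimal sketch, assuming the board plane is given in the camera frame as a unit normal n and offset d, and each image edge as a homogeneous line l = (a, b, c). A pixel x on the line satisfies l^T x = 0, and x is proportional to K P for a camera-frame point P, so an edge point must satisfy (K^T l) · P = 0, i.e. it lies on the plane back-projected from the line. Function names are hypothetical; in practice these residuals would be stacked over all board poses and minimized with a non-linear least-squares solver (for example `scipy.optimize.least_squares`) over a parameterization of (R, t), which is not shown.

```python
import numpy as np

def plane_residuals(R, t, pts_lidar, n, d):
    """Point-to-plane: board points from the LiDAR, moved into the camera frame,
    should satisfy n . p + d = 0 for the board plane (unit normal n, camera frame)."""
    pc = pts_lidar @ R.T + t
    return pc @ n + d

def edge_residuals(R, t, edge_pts_lidar, K, line):
    """Edge point to back-projected plane: the image line l = (a, b, c) back-projects
    to a plane through the camera center with normal K^T l; edge points must lie on it."""
    normal = K.T @ np.asarray(line, dtype=float)
    normal /= np.linalg.norm(normal)   # residuals become metric distances to the plane
    pc = edge_pts_lidar @ R.T + t
    return pc @ normal
```

Normalizing the back-projected normal makes both residual types distances in meters, so they can be combined in one cost without an ad hoc scale factor.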
