Steven Marty

DTR: Delaunay Triangulation-based Racing for Scaled Autonomous Racing

May 30, 2025

Abstract: Reactive controllers for autonomous racing avoid the computational overhead of full See-Think-Act autonomy stacks by directly mapping sensor input to control actions, eliminating the need for localization and planning. A widely used reactive strategy is FTG, which identifies gaps in LiDAR range measurements and steers toward a chosen one. While effective on fully bounded circuits, FTG fails in scenarios with incomplete boundaries and is prone to driving into dead-ends, known as FTG-traps. This work presents DTR, a reactive controller that combines Delaunay triangulation of raw LiDAR readings with track boundary segmentation to extract a centerline while systematically avoiding FTG-traps. Compared to FTG, the proposed method achieves lap times that are 70% faster and approaches the performance of map-dependent methods. With a latency of 8.95 ms and CPU usage of only 38.85% on the robot's OBC, DTR is real-time capable and has been successfully deployed and evaluated in field experiments.
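To make the FTG baseline mentioned in this abstract concrete, here is a minimal sketch of the gap-selection step. It is not the paper's code and it is not DTR: the function name `follow_the_gap`, its parameters, and the "widest gap" selection rule are assumptions for illustration, and practical FTG variants usually add steps such as a safety bubble around the nearest obstacle.

```python
def follow_the_gap(ranges, angle_min, angle_increment, free_threshold):
    """Return the steering angle (rad) toward the middle of the widest gap.

    Hypothetical helper: a beam counts as "free" when its range exceeds
    free_threshold. Returns None when no beam is free.
    """
    best_start, best_len = None, 0
    start = None
    # A -inf sentinel is never "free", so it closes a gap that reaches the last beam.
    for i, r in enumerate(list(ranges) + [float("-inf")]):
        if r > free_threshold:
            if start is None:
                start = i  # a new gap begins at this beam
        elif start is not None:
            if i - start > best_len:
                best_start, best_len = start, i - start
            start = None  # the current gap has ended
    if best_start is None:
        return None
    # Index of the gap's middle beam (fractional for even-length gaps).
    mid = best_start + (best_len - 1) / 2.0
    return angle_min + mid * angle_increment
```

With a scan such as `[0.5, 3.0, 3.0, 3.0, 0.5, 3.0, 0.4]` and a threshold of 1.0, the sketch picks the three-beam gap and steers toward its middle beam. Because it only looks at free beams, it has no notion of whether a gap leads anywhere, which is the kind of dead-end behavior the abstract calls an FTG-trap.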

Burst Image Super-Resolution with Mamba

Mar 25, 2025

Abstract: Burst image super-resolution (BISR) aims to enhance the resolution of a keyframe by leveraging information from multiple low-resolution images captured in quick succession. In the deep learning era, BISR methods have evolved from fully convolutional networks to transformer-based architectures, which, despite their effectiveness, suffer from the quadratic complexity of self-attention. We see Mamba as the next natural step in the evolution of this field, offering a comparable global receptive field and selective information routing with only linear time complexity. In this work, we introduce BurstMamba, a Mamba-based architecture for BISR. Our approach decouples the task into two specialized branches: a spatial module for keyframe super-resolution and a temporal module for subpixel prior extraction, striking a balance between computational efficiency and burst information integration. To further enhance burst processing with Mamba, we propose two novel strategies: (i) optical flow-based serialization, which aligns burst sequences only during state updates to preserve subpixel details, and (ii) a wavelet-based reparameterization of the state-space update rules, prioritizing high-frequency features for improved burst-to-keyframe information passing. Our framework achieves SOTA performance on public benchmarks of SyntheticSR, RealBSR-RGB, and RealBSR-RAW.

Noise Analysis and Modeling of the PMD Flexx2 Depth Camera for Robotic Applications

Dec 19, 2024

Abstract: Time-of-Flight (ToF) cameras, renowned for their ability to capture real-time 3D information, have become indispensable for agile mobile robotics. These cameras utilize light signals to accurately measure distances, enabling robots to navigate complex environments with precision. Innovative depth cameras characterized by their compact size and lightweight design, such as the recently released PMD Flexx2, are particularly suited for mobile robots. Capable of achieving high frame rates while capturing depth information, this innovative sensor is suitable for tasks such as robot navigation and terrain mapping. Operating on the ToF measurement principle, the sensor offers multiple benefits over classic stereo-based depth cameras. However, the depth images produced by the camera are subject to noise from multiple sources, complicating their simulation. This paper proposes an accurate quantification and modeling of the non-systematic noise of the PMD Flexx2. We propose models for both axial and lateral noise across various camera modes, assuming Gaussian distributions. Axial noise, modeled as a function of distance and incidence angle, demonstrated a low average Kullback-Leibler (KL) divergence of 0.015 nats, reflecting precise noise characterization. Lateral noise, deviating from a Gaussian distribution, was modeled conservatively, yielding a satisfactory KL divergence of 0.868 nats. These results validate our noise models, crucial for accurately simulating sensor behavior in virtual environments and reducing the sim-to-real gap in learning-based control approaches.

* Accepted by COINS 2024
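The abstract above reports model fit as a KL divergence in nats but does not say how it was computed. As a hedged illustration only, the sketch below uses the standard closed form for the divergence between two univariate Gaussians. Treating both the measured noise and the model as Gaussian is an assumption made here, and the function name `kl_gaussian` is hypothetical.

```python
import math


def kl_gaussian(mu_p, sigma_p, mu_q, sigma_q):
    """KL(P || Q) in nats for P = N(mu_p, sigma_p^2) and Q = N(mu_q, sigma_q^2).

    Assumption for illustration: P is a Gaussian fitted to measured noise and
    Q is the Gaussian predicted by a noise model.
    """
    if sigma_p <= 0 or sigma_q <= 0:
        raise ValueError("standard deviations must be positive")
    return (
        math.log(sigma_q / sigma_p)
        + (sigma_p ** 2 + (mu_p - mu_q) ** 2) / (2.0 * sigma_q ** 2)
        - 0.5
    )
```

The value is zero when the two distributions coincide and grows as the means or spreads drift apart. The divergence is not symmetric, so it matters which distribution is taken as the reference. A natural logarithm gives nats, the unit used in the abstract.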

Autonomous Navigation in Dynamic Human Environments with an Embedded 2D LiDAR-based Person Tracker

Dec 19, 2024

Abstract: In the rapidly evolving landscape of autonomous mobile robots, the emphasis on seamless human-robot interactions has shifted towards autonomous decision-making. This paper delves into the intricate challenges associated with robotic autonomy, focusing on navigation in dynamic environments shared with humans. It introduces an embedded real-time tracking pipeline, integrated into a navigation planning framework for effective person tracking and avoidance, adapting a state-of-the-art 2D LiDAR-based human detection network and an efficient multi-object tracker. By addressing the key components of detection, tracking, and planning separately, the proposed approach highlights the modularity and transferability of each component to other applications. Our tracking approach is validated on a quadruped robot equipped with a 270° 2D LiDAR against motion capture system data, with the preferred configuration achieving an average MOTA of 85.45% in three newly recorded datasets, while reliably running in real-time at 20 Hz on the NVIDIA Jetson Xavier NX embedded GPU-accelerated platform. Furthermore, the integrated tracking and avoidance system is evaluated in real-world navigation experiments, demonstrating how accurate person tracking benefits the planner in optimizing the generated trajectories, enhancing its collision avoidance capabilities. This paper contributes to safer human-robot cohabitation, blending recent advances in human detection with responsive planning to navigate shared spaces effectively and securely.

* Accepted by SAS 2024
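The tracker above is scored with MOTA (Multiple Object Tracking Accuracy). For readers unfamiliar with the metric, the sketch below applies the standard CLEAR MOT definition to error counts summed over all frames. The function name `mota` and the example counts are hypothetical and are not taken from the paper's datasets.

```python
def mota(false_negatives, false_positives, id_switches, num_ground_truth):
    """Multiple Object Tracking Accuracy from counts summed over all frames.

    MOTA = 1 - (FN + FP + IDSW) / GT, where GT is the total number of
    ground-truth object instances across the sequence.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / num_ground_truth
```

For example, 10 misses, 4 false alarms, and 1 identity switch over 100 ground-truth instances give a MOTA of 0.85. The score can be negative when the tracker makes more errors than there are ground-truth instances.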

TinyBird-ML: An ultra-low Power Smart Sensor Node for Bird Vocalization Analysis and Syllable Classification

Jul 31, 2024

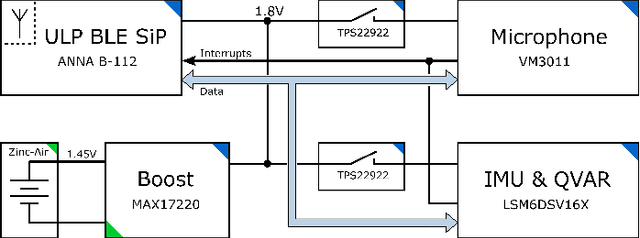

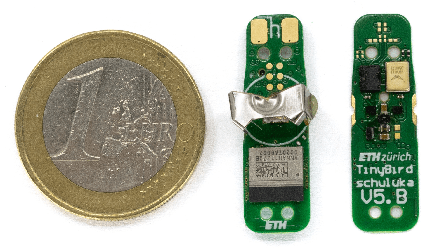

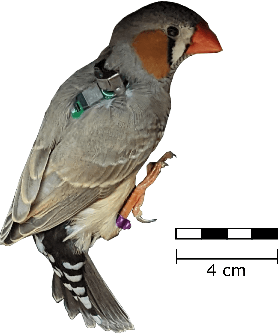

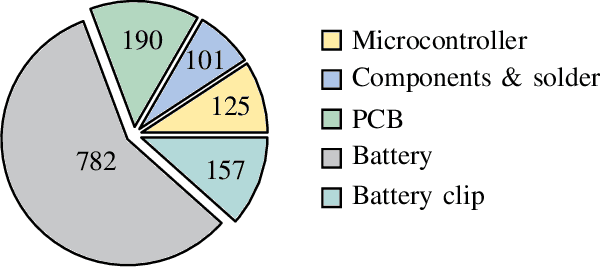

Abstract: Animal vocalisations serve a wide range of vital functions. Although it is possible to record animal vocalisations with external microphones, more insights are gained from miniature sensors mounted directly on animals' backs. We present TinyBird-ML, a wearable sensor node weighing only 1.4 g for acquiring, processing, and wirelessly transmitting acoustic signals to a host system using Bluetooth Low Energy. TinyBird-ML embeds low-latency tiny machine learning algorithms for song syllable classification. To optimize battery lifetime of TinyBird-ML during fault-tolerant continuous recordings, we present an efficient firmware and hardware design. We make use of standard lossy compression schemes to reduce the amount of data sent over the Bluetooth antenna, which increases battery lifetime by 70% without negative impact on offline sound analysis. Furthermore, by not transmitting signals during silent periods, we further increase battery lifetime. One advantage of our sensor is that it allows for closed-loop experiments in the microsecond range by processing sounds directly on the device instead of streaming them to a computer. We demonstrate this capability by detecting and classifying song syllables with minimal latency and a syllable error rate of 7%, using a lightweight neural network that runs directly on the sensor node itself. Thanks to our power-saving hardware and software design, during continuous operation at a sampling rate of 16 kHz, the sensor node achieves a lifetime of 25 hours on a single size 13 zinc-air battery.

Investigation of mmWave Radar Technology For Non-contact Vital Sign Monitoring

Sep 15, 2023

Abstract: Non-contact vital sign monitoring has many advantages over conventional methods in being comfortable, unobtrusive and without any risk of spreading infection. The use of millimeter-wave (mmWave) radars is one of the most promising approaches that enable contact-less monitoring of vital signs. Novel low-power implementations of this technology promise to enable vital sign sensing in embedded, battery-operated devices. The nature of these new low-power sensors exacerbates the challenges of accurate and robust vital sign monitoring and especially the problem of heart-rate tracking. This work focuses on the investigation and characterization of three Frequency Modulated Continuous Wave (FMCW) low-power radars with different carrier frequencies of 24 GHz, 60 GHz and 120 GHz. The evaluation platforms were first tested on phantom models that emulated human bodies to accurately evaluate the baseline noise, error in range estimation, and error in displacement estimation. Additionally, the systems were also used to collect data from three human subjects to gauge the feasibility of identifying heartbeat peaks and breathing peaks with simple and lightweight algorithms that could potentially run in low-power embedded processors. The investigation revealed that the 24 GHz radar has the highest baseline noise level, 0.04 mm at 0° angle of incidence, and an error in range estimation of 3.45 ± 1.88 cm at a distance of 60 cm. At the same distance, the 60 GHz and the 120 GHz radar systems show the lowest noise level, 0.01 mm at 0° angle of incidence, and errors in range estimation of 0.64 ± 0.01 cm and 0.04 ± 0.0 cm, respectively. Additionally, tests on humans showed that all three radar systems were able to identify heart and breathing activity but the 120 GHz radar system outperformed the other two.

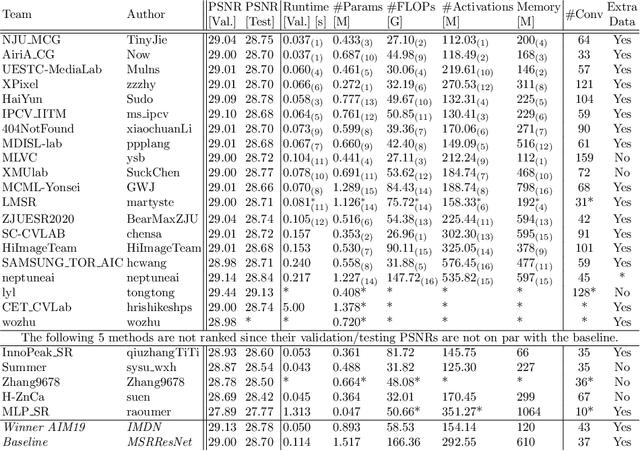

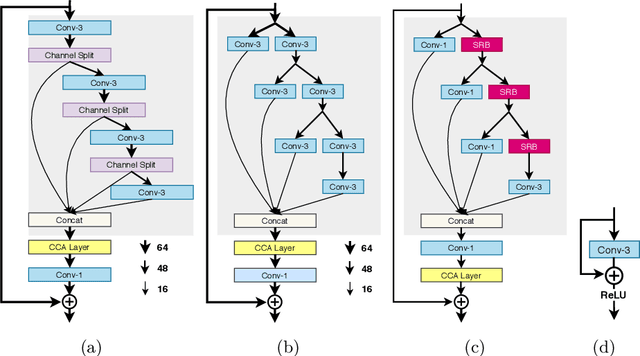

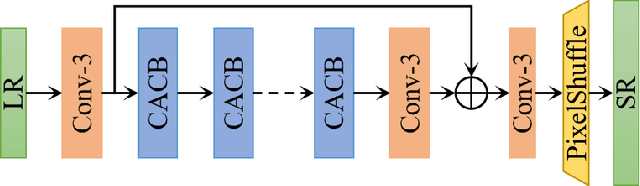

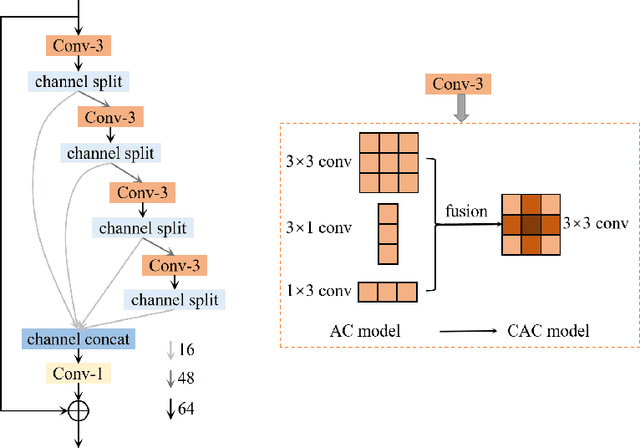

AIM 2020 Challenge on Efficient Super-Resolution: Methods and Results

Sep 15, 2020

Abstract: This paper reviews the AIM 2020 challenge on efficient single image super-resolution with focus on the proposed solutions and results. The challenge task was to super-resolve an input image with a magnification factor ×4 based on a set of prior examples of low and corresponding high resolution images. The goal is to devise a network that reduces one or several aspects such as runtime, parameter count, FLOPs, activations, and memory consumption while at least maintaining PSNR of MSRResNet. The track had 150 registered participants, and 25 teams submitted the final results. They gauge the state-of-the-art in efficient single image super-resolution.
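The challenge above uses PSNR as the fidelity constraint. As a reminder of what that metric measures, here is a minimal sketch over flat pixel sequences. The function name `psnr` and the 8-bit peak value of 255 are assumptions for illustration; the challenge's exact evaluation protocol (color space, border cropping) is not described in this abstract.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences.

    PSNR = 10 * log10(max_value^2 / MSE). Returns infinity for identical inputs.
    """
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return float("inf")
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the super-resolved image is closer to the ground truth in the mean-squared-error sense, so "at least maintaining PSNR" acts as a quality floor while the efficiency measures are reduced.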
