Andrea Ronco

Machine Learning In-Sensors: Computation-enabled Intelligent Sensors For Next Generation of IoT

Jul 31, 2024

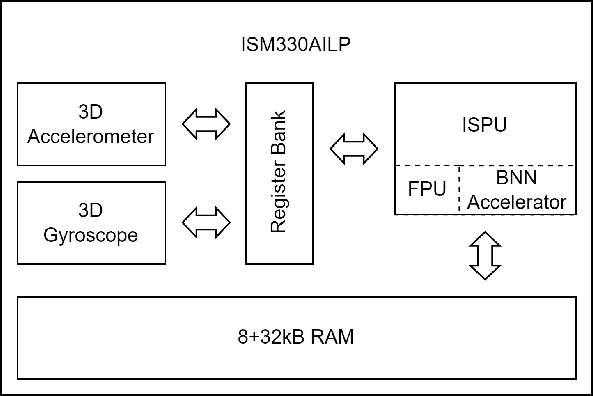

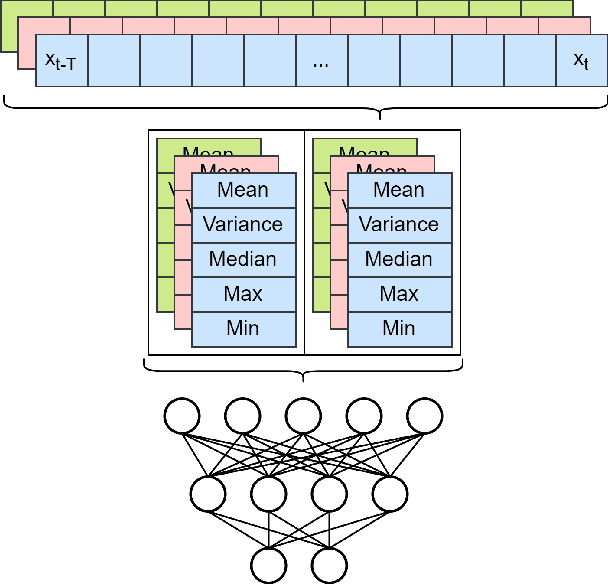

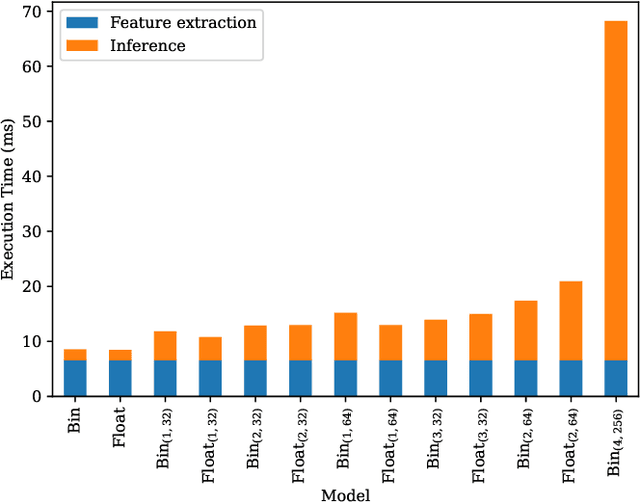

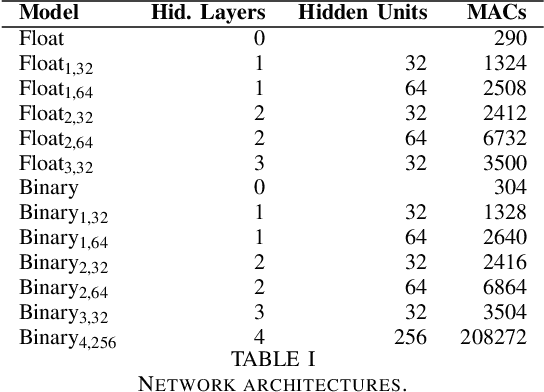

Abstract: Smart sensors are an emerging technology that combines data acquisition with processing directly on the edge device, very close to the sensors. To push this concept to the extreme, technology companies are proposing a new generation of sensors that move the intelligence from the edge host device, typically a microcontroller, directly into the ultra-low-power sensor itself, in order to further improve miniaturization, cost and energy efficiency. This paper evaluates the capabilities of a novel and promising solution from STMicroelectronics. The presence of a floating point unit and an accelerator for binary neural networks provides capabilities for in-sensor feature extraction and machine learning. We propose a comparison of full-precision and binary neural networks for activity recognition with accelerometer data generated by the sensor itself. Experimental results demonstrate that the sensor can achieve an inference performance of 10.7 cycles/MAC, comparable to a Cortex-M4-based microcontroller, with full-precision networks, and up to 1.5 cycles/MAC with large binary models for low latency inference, with an average energy consumption of only 90 $\mu$J/inference with the core running at 5 MHz.
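The cycles/MAC figures above translate into latency once a model size is fixed. The following is a minimal sketch of that arithmetic, not the authors' measurement method: the 10,000-MAC model size is a hypothetical value chosen for illustration, and applying the 1.5 cycles/MAC figure to a model of that size is also an assumption, since the abstract reports it only for large binary models.

```python
def inference_latency_s(num_macs: int, cycles_per_mac: float, clock_hz: float) -> float:
    """Estimate latency as total cycles divided by the core clock frequency."""
    return num_macs * cycles_per_mac / clock_hz


CLOCK_HZ = 5e6              # core clock stated in the abstract (5 MHz)
HYPOTHETICAL_MACS = 10_000  # assumption: illustrative model size, not from the paper

# 10.7 cycles/MAC (full precision) and 1.5 cycles/MAC (binary) are from the abstract.
full_precision_s = inference_latency_s(HYPOTHETICAL_MACS, 10.7, CLOCK_HZ)
binary_s = inference_latency_s(HYPOTHETICAL_MACS, 1.5, CLOCK_HZ)

print(f"full precision: {full_precision_s * 1e3:.1f} ms")
print(f"binary:         {binary_s * 1e3:.1f} ms")
```

Under these assumptions the full-precision network takes about 21.4 ms and the binary network about 3.0 ms per inference.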

Assessing the Robustness of LiDAR, Radar and Depth Cameras Against Ill-Reflecting Surfaces in Autonomous Vehicles: An Experimental Study

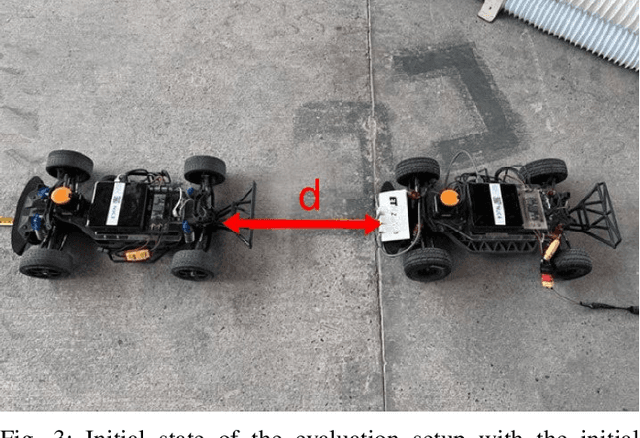

Sep 19, 2023

Abstract: Range-measuring sensors play a critical role in autonomous driving systems. While LiDAR technology has been dominant, its vulnerability to adverse weather conditions is well-documented. This paper focuses on secondary adverse conditions and the implications of ill-reflective surfaces on range measurement sensors. We assess the influence of this condition on the three primary ranging modalities used in autonomous mobile robotics: LiDAR, RADAR, and Depth-Camera. Based on accurate experimental evaluation, the paper's findings reveal that under ill-reflectivity, LiDAR ranging performance drops significantly to 33% of its nominal operating conditions, whereas RADAR and Depth-Cameras maintain up to 100% of their nominal distance ranging capabilities. Additionally, we demonstrate on a 1:10 scaled autonomous racecar how ill-reflectivity adversely impacts downstream robotics tasks, highlighting the necessity for robust range sensing in autonomous driving.

Investigation of mmWave Radar Technology For Non-contact Vital Sign Monitoring

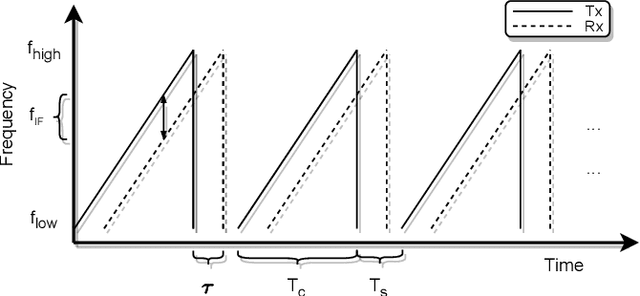

Sep 15, 2023

Abstract: Non-contact vital sign monitoring has many advantages over conventional methods in being comfortable, unobtrusive and without any risk of spreading infection. The use of millimeter-wave (mmWave) radars is one of the most promising approaches that enable contact-less monitoring of vital signs. Novel low-power implementations of this technology promise to enable vital sign sensing in embedded, battery-operated devices. The nature of these new low-power sensors exacerbates the challenges of accurate and robust vital sign monitoring and especially the problem of heart-rate tracking. This work focuses on the investigation and characterization of three Frequency Modulated Continuous Wave (FMCW) low-power radars with different carrier frequencies of 24 GHz, 60 GHz and 120 GHz. The evaluation platforms were first tested on phantom models that emulated human bodies to accurately evaluate the baseline noise, error in range estimation, and error in displacement estimation. Additionally, the systems were used to collect data from three human subjects to gauge the feasibility of identifying heartbeat peaks and breathing peaks with simple and lightweight algorithms that could potentially run in low-power embedded processors. The investigation revealed that the 24 GHz radar has the highest baseline noise level, 0.04 mm at 0° angle of incidence, and an error in range estimation of 3.45 ± 1.88 cm at a distance of 60 cm. At the same distance, the 60 GHz and the 120 GHz radar systems show the lowest noise level, 0.01 mm at 0° angle of incidence, and errors in range estimation of 0.64 ± 0.01 cm and 0.04 ± 0.0 cm respectively. Additionally, tests on humans showed that all three radar systems were able to identify heart and breathing activity, but the 120 GHz radar system outperformed the other two.
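The abstract does not describe how displacement is estimated. A common approach with FMCW radar is to track the phase of the reflected signal, where a displacement $\Delta d$ produces a round-trip phase shift $\Delta\varphi = 4\pi\,\Delta d / \lambda$. The sketch below uses that textbook relation to illustrate why a higher carrier frequency yields a larger phase change for the same chest movement. The 0.5 mm displacement is a hypothetical value, and the function names are illustrative only.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(carrier_hz: float) -> float:
    """Free-space wavelength of the radar carrier."""
    return SPEED_OF_LIGHT_M_S / carrier_hz


def phase_shift_rad(displacement_m: float, carrier_hz: float) -> float:
    """Round-trip phase shift caused by a target displacement."""
    return 4.0 * math.pi * displacement_m / wavelength_m(carrier_hz)


def displacement_from_phase_m(phase_rad: float, carrier_hz: float) -> float:
    """Inverse relation: recover displacement from a measured phase shift."""
    return phase_rad * wavelength_m(carrier_hz) / (4.0 * math.pi)


HYPOTHETICAL_DISPLACEMENT_M = 0.5e-3  # assumption: 0.5 mm movement, for illustration

for carrier_ghz in (24, 60, 120):  # carrier frequencies named in the abstract
    carrier_hz = carrier_ghz * 1e9
    phase = phase_shift_rad(HYPOTHETICAL_DISPLACEMENT_M, carrier_hz)
    print(f"{carrier_ghz:>3} GHz: wavelength {wavelength_m(carrier_hz) * 1e3:.2f} mm, "
          f"phase shift {phase:.3f} rad")
```

Because the phase shift scales linearly with carrier frequency, the 120 GHz radar sees five times the phase change of the 24 GHz radar for the same movement in this model.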

In-Ear-Voice: Towards Milli-Watt Audio Enhancement With Bone-Conduction Microphones for In-Ear Sensing Platforms

Sep 05, 2023

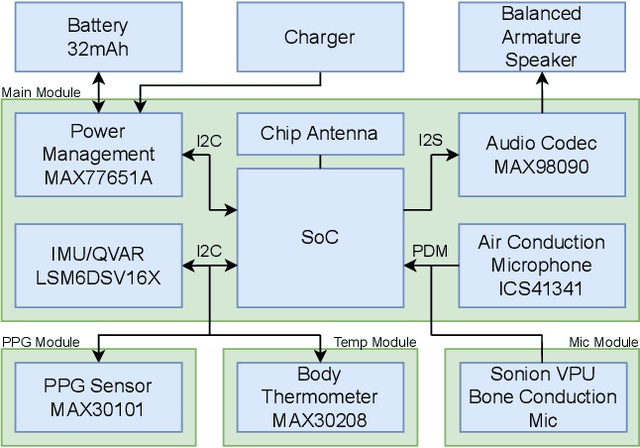

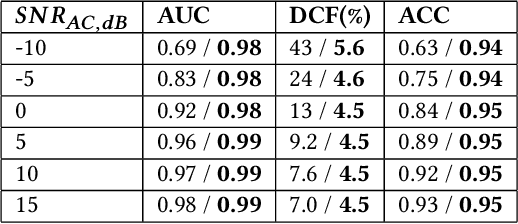

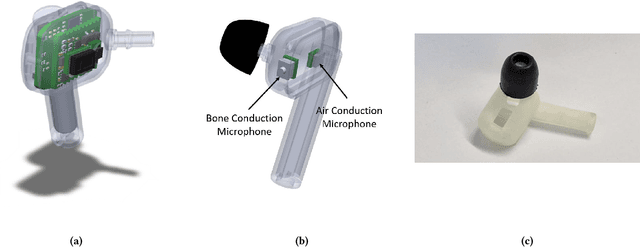

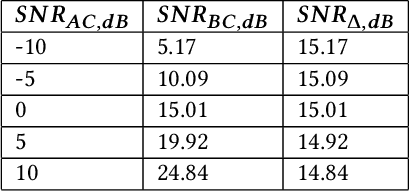

Abstract: The recent ubiquitous adoption of remote conferencing has been accompanied by omnipresent frustration with distorted or otherwise unclear voice communication. Audio enhancement can compensate for low-quality input signals from, for example, small true wireless earbuds, by applying noise suppression techniques. Such processing relies on voice activity detection (VAD) with low latency and the added capability of discriminating the wearer's voice from others, a task of significant computational complexity. The tight energy budget of devices as small as modern earphones, however, requires any system attempting to tackle this problem to do so with minimal power and processing overhead, while not relying on speaker-specific voice samples and training due to usability concerns. This paper presents the design and implementation of a custom research platform for low-power wireless earbuds based on novel, commercial, MEMS bone-conduction microphones. Such microphones can record the wearer's speech with much greater isolation, enabling personalized voice activity detection and further audio enhancement applications. Furthermore, the paper accurately evaluates a proposed low-power personalized speech detection algorithm based on bone conduction data and a recurrent neural network running on the implemented research platform. This algorithm is compared to an approach based on traditional microphone input. The performance of the bone conduction system, achieving detection of speech within 12.8 ms at an accuracy of 95%, is evaluated. Different SoC choices are contrasted, with the final implementation based on the cutting-edge Ambiq Apollo 4 Blue SoC achieving 2.64 mW average power consumption at 14 µJ per inference, reaching 43 h of battery life on a miniature 32 mAh Li-ion cell without duty cycling.
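The reported battery life can be roughly sanity-checked from the cell capacity and the average power. The sketch below is a back-of-the-envelope estimate only: the 3.6 V nominal cell voltage is an assumption (the abstract does not state it), and regulator losses and battery derating are ignored.

```python
def battery_life_hours(capacity_mah: float, nominal_voltage_v: float,
                       average_power_mw: float) -> float:
    """Estimate runtime as stored energy (mWh) divided by average power (mW)."""
    energy_mwh = capacity_mah * nominal_voltage_v
    return energy_mwh / average_power_mw


CAPACITY_MAH = 32.0              # from the abstract
AVERAGE_POWER_MW = 2.64          # from the abstract
ASSUMED_NOMINAL_VOLTAGE_V = 3.6  # assumption: nominal Li-ion voltage, not from the paper

hours = battery_life_hours(CAPACITY_MAH, ASSUMED_NOMINAL_VOLTAGE_V, AVERAGE_POWER_MW)
print(f"estimated battery life: {hours:.1f} h")
```

With the assumed 3.6 V this gives about 43.6 h, in line with the 43 h reported in the abstract.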

Towards Robust Velocity and Position Estimation of Opponents for Autonomous Racing Using Low-Power Radar

Sep 04, 2023

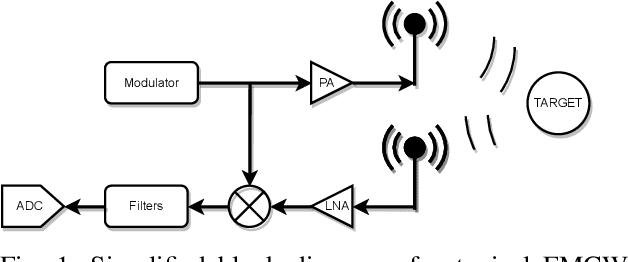

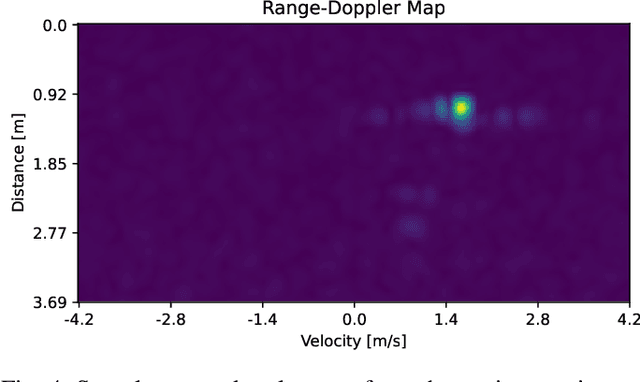

Abstract: This paper presents the design and development of an intelligent subsystem that includes a novel low-power radar sensor integrated into an autonomous racing perception pipeline to robustly estimate the position and velocity of dynamic obstacles. The proposed system, based on the Infineon BGT60TR13D radar, is evaluated in a real-world scenario with scaled race cars. The paper explores the benefits and limitations of using such a sensor subsystem and draws conclusions based on field-collected data. The results demonstrate a tracking error of up to 0.21 ± 0.29 m in distance estimation and 0.39 ± 0.19 m/s in velocity estimation, despite a power consumption in the range of tens of milliwatts. The presented system provides complementary information to other sensors such as LiDAR and camera, and can be used in a wide range of applications beyond autonomous racing.

Gaze Estimation on Spresense

Aug 23, 2023

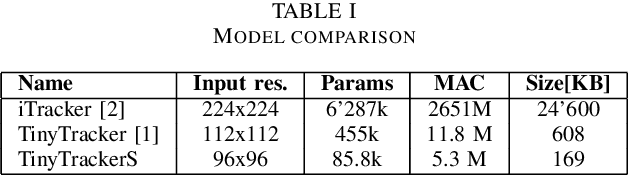

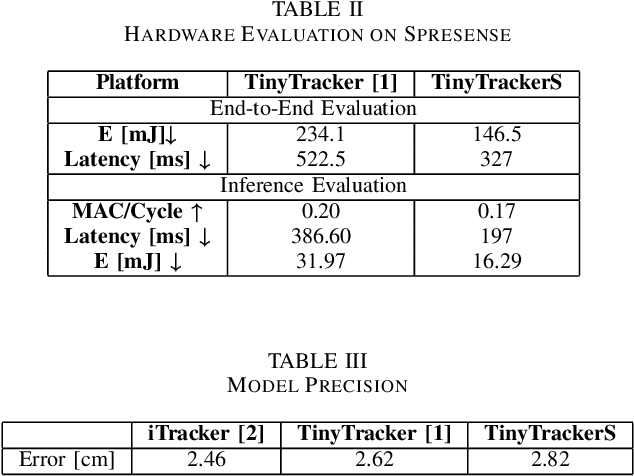

Abstract: Gaze estimation is a valuable technology with numerous applications in fields such as human-computer interaction, virtual reality, and medicine. This report presents the implementation of a gaze estimation system using the Sony Spresense microcontroller board and explores its performance in latency, MAC/cycle, and power consumption. The report also provides insights into the system's architecture, including the gaze estimation model used. Additionally, a demonstration of the system is presented, showcasing its functionality and performance. Our lightweight model TinyTrackerS is a mere 169Kb in size, uses 85.8k parameters, and runs on the Spresense platform at 3 FPS.
