Milos Cernak

DeepFilterGAN: A Full-band Real-time Speech Enhancement System with GAN-based Stochastic Regeneration

May 29, 2025

Abstract:In this work, we propose a full-band real-time speech enhancement system with GAN-based stochastic regeneration. Predictive models focus on estimating the mean of the target distribution, whereas generative models aim to learn the full distribution. This behavior of predictive models may lead to over-suppression, i.e. the removal of speech content. In the literature, it was shown that combining a predictive model with a generative one within the stochastic regeneration framework can reduce the distortion in the output. We use this framework to obtain a real-time speech enhancement system. With 3.58M parameters and a low latency, our system is designed for real-time streaming with a lightweight architecture. Experiments show that our system improves over the first stage in terms of the NISQA-MOS metric. Finally, through an ablation study, we show the importance of noisy conditioning in our system. We participated in the 2025 Urgent Challenge with our model and later made further improvements.

Model as Loss: A Self-Consistent Training Paradigm

May 27, 2025

Abstract:Conventional methods for speech enhancement rely on handcrafted loss functions (e.g., time or frequency domain losses) or deep feature losses (e.g., using WavLM or wav2vec), which often fail to capture subtle signal properties essential for optimal performance. To address this, we propose Model as Loss, a novel training paradigm that utilizes the encoder from the same model as a loss function to guide the training. The Model as Loss paradigm leverages the encoder's task-specific feature space, optimizing the decoder to produce output consistent with perceptual and task-relevant characteristics of the clean signal. By using the encoder's learned features as a loss function, this framework enforces self-consistency between the clean reference speech and the enhanced model output. Our approach outperforms pre-trained deep feature losses on standard speech enhancement benchmarks, offering better perceptual quality and robust generalization to both in-domain and out-of-domain datasets.
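The idea in the Model as Loss abstract, comparing the enhanced output and the clean reference in the encoder's own feature space, can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the `encode` function is a hypothetical one-layer stand-in for the model's encoder, and the mean squared feature distance is only one possible choice, since the abstract does not state which distance or which encoder layers are used.

```python
import math
import random

def encode(signal, weights):
    """Hypothetical toy encoder: one tanh feature per weight row."""
    return [math.tanh(sum(w * x for w, x in zip(row, signal))) for row in weights]

def model_as_loss(enhanced, clean, weights):
    """Mean squared distance between encoder features of the two signals.

    Assumption: an MSE feature distance; the abstract does not specify it.
    """
    feats_enhanced = encode(enhanced, weights)
    feats_clean = encode(clean, weights)
    return sum((a - b) ** 2 for a, b in zip(feats_enhanced, feats_clean)) / len(feats_clean)

random.seed(0)
frame_len, n_features = 8, 4
# Stand-in for encoder parameters; in the paradigm these belong to the model being trained.
encoder_weights = [[random.uniform(-1, 1) for _ in range(frame_len)]
                   for _ in range(n_features)]
clean = [random.uniform(-1, 1) for _ in range(frame_len)]
degraded = [c + random.gauss(0, 0.3) for c in clean]

loss_identical = model_as_loss(clean, clean, encoder_weights)
loss_degraded = model_as_loss(degraded, clean, encoder_weights)
print(loss_identical, loss_degraded)
```

The loss is zero when the output matches the reference and grows as their encoder features diverge, which is the self-consistency signal the abstract describes.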

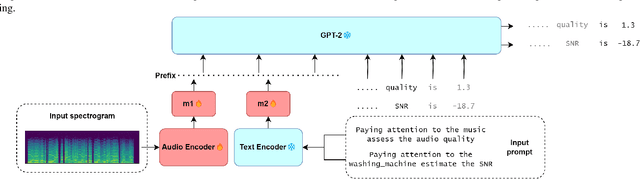

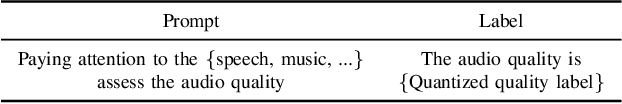

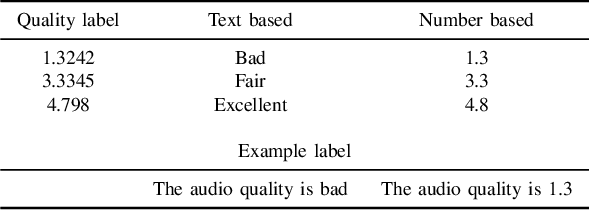

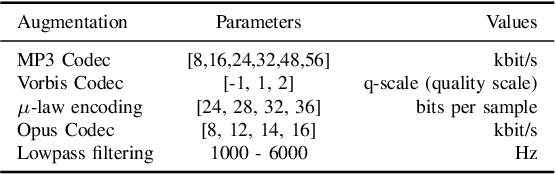

Semi-intrusive audio evaluation: Casting non-intrusive assessment as a multi-modal text prediction task

Sep 21, 2024

Abstract:Humans assessing audio possess the unique ability to attend to specific sources in a mixture of signals. Mimicking this human ability, we propose a semi-intrusive assessment where we frame the audio assessment task as a text prediction task with audio-text input. To this end we leverage instruction fine-tuning of the multi-modal PENGI model. Our experiments on MOS prediction for speech and music using both real and simulated data show that the proposed method, on average, outperforms baselines that operate on a single task. To demonstrate the model's generalizability, we propose a new semi-intrusive SNR estimator that is able to estimate the SNR of arbitrary signal classes in a mixture of signals with different classes.

OpenACE: An Open Benchmark for Evaluating Audio Coding Performance

Sep 12, 2024

Abstract:Audio and speech coding lack unified evaluation and open-source testing. Many candidate systems were evaluated on proprietary, non-reproducible, or small data, and machine learning-based codecs are often tested on datasets with distributions similar to their training data, which makes for an unfair comparison with digital signal processing-based codecs that usually work well with unseen data. This paper presents a full-band audio and speech coding quality benchmark with more variable content types, including traditional open test vectors. An example use case of audio coding quality assessment is presented with open-source Opus, 3GPP's EVS, and ETSI's recent LC3 with LC3+ used in Bluetooth LE Audio profiles. In addition, quality variations of emotional speech encoding at 16 kbps are shown. The proposed open-source benchmark contributes to audio and speech coding democratization and is available at https://github.com/JozefColdenhoff/OpenACE.

Diffusion-based Speech Enhancement with Schrödinger Bridge and Symmetric Noise Schedule

Sep 08, 2024

Abstract:Recently, diffusion-based generative models have demonstrated remarkable performance in speech enhancement tasks. However, these methods still encounter challenges, including the lack of structural information and poor performance in low Signal-to-Noise Ratio (SNR) scenarios. To overcome these challenges, we propose the Schrödinger Bridge-based Speech Enhancement (SBSE) method, which learns the diffusion processes directly between the noisy input and the clean distribution, unlike conventional diffusion-based speech enhancement systems that learn data to Gaussian distributions. To enhance performance in extremely noisy conditions, we introduce a two-stage system incorporating ratio mask information into the diffusion-based generative model. Our experimental results show that our proposed SBSE method outperforms all the baseline models and achieves state-of-the-art performance, especially in low SNR conditions. Importantly, only a few inference steps are required to achieve the best result.

On real-time multi-stage speech enhancement systems

Dec 19, 2023

Abstract:Recently, multi-stage systems have stood out among deep learning-based speech enhancement methods. However, these systems are always high in complexity, requiring millions of parameters and powerful computational resources, which limits their application for real-time processing in low-power devices. Besides, the contribution of various influencing factors to the success of multi-stage systems remains unclear, which makes it challenging to reduce the size of these systems. In this paper, we extensively investigate a lightweight two-stage network with only 560k total parameters. It consists of a Mel-scale magnitude masking model in the first stage and a complex spectrum mapping model in the second stage. We first provide a consolidated view of the roles of gain power factor, post-filter, and training labels for the Mel-scale masking model. Then, we explore several training schemes for the two-stage network and provide some insights into the superiority of the two-stage network. We show that the proposed two-stage network trained by an optimal scheme achieves a performance similar to that of the open-source model DeepFilterNet2, which is four times larger.

Cluster-based pruning techniques for audio data

Sep 21, 2023

Abstract:Deep learning models have become widely adopted in various domains, but their performance heavily relies on a vast amount of data. Datasets often contain a large number of irrelevant or redundant samples, which can lead to computational inefficiencies during training. In this work, we introduce, for the first time in the context of the audio domain, k-means clustering as a method for efficient data pruning. K-means clustering provides a way to group similar samples together, allowing the reduction of the size of the dataset while preserving its representative characteristics. As an example, we perform clustering analysis on the keyword spotting (KWS) dataset. We discuss how k-means clustering can significantly reduce the size of audio datasets while maintaining the classification performance across neural networks (NNs) with different architectures. We further comment on the role of scaling analysis in identifying the optimal pruning strategies for a large number of samples. Our studies serve as a proof-of-principle, demonstrating the potential of data selection with distance-based clustering algorithms for the audio domain and highlighting promising research avenues.
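As a rough illustration of the pruning idea in the abstract above, the following sketch clusters feature vectors with a small k-means routine and keeps only part of each cluster. The selection rule (keep the members closest to each centroid), the `keep_fraction` parameter, and the synthetic 2-D features are all assumptions made for this example; the abstract does not say which samples are retained or which features are clustered.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda c: squared_distance(p, centroids[c]))
            for p in points]

def kmeans(points, k, iterations=20, seed=0):
    """Plain Lloyd's algorithm with centroids initialised from random points."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iterations):
        labels = assign(points, centroids)
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # leave an empty cluster's centroid where it is
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assign(points, centroids)

def prune(points, k, keep_fraction):
    """Keep the keep_fraction of each cluster closest to its centroid (assumed rule)."""
    centroids, labels = kmeans(points, k)
    kept = []
    for c in range(k):
        members = [i for i, label in enumerate(labels) if label == c]
        members.sort(key=lambda i: squared_distance(points[i], centroids[c]))
        if members:
            kept.extend(members[:max(1, round(keep_fraction * len(members)))])
    return sorted(kept)

# Synthetic stand-in for audio feature vectors: two 2-D blobs of 50 points each.
rng = random.Random(1)
features = ([[rng.gauss(0, 0.5), rng.gauss(0, 0.5)] for _ in range(50)]
            + [[rng.gauss(5, 0.5), rng.gauss(5, 0.5)] for _ in range(50)])
kept_indices = prune(features, k=2, keep_fraction=0.5)
print(len(kept_indices), "of", len(features), "samples kept")
```

Because selection happens per cluster, every cluster stays represented in the pruned set, which is one way to shrink a dataset while preserving its overall structure.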

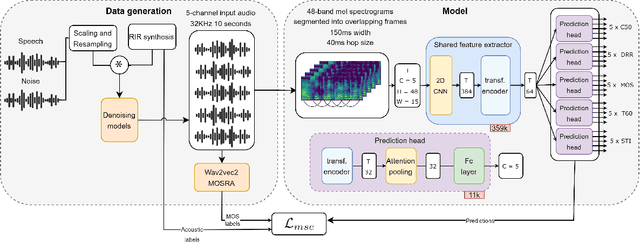

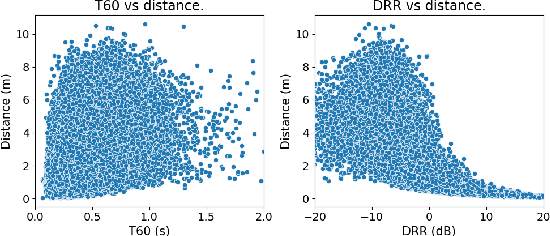

Multi-Channel MOSRA: Mean Opinion Score and Room Acoustics Estimation Using Simulated Data and a Teacher Model

Sep 21, 2023

Abstract:Previous methods for predicting room acoustic parameters and speech quality metrics have focused on the single-channel case, where room acoustics and Mean Opinion Score (MOS) are predicted for a single recording device. However, quality-based device selection for rooms with multiple recording devices may benefit from a multi-channel approach where the descriptive metrics are predicted for multiple devices in parallel. Following our hypothesis that a model may benefit from multi-channel training, we develop a multi-channel model for joint MOS and room acoustics prediction (MOSRA) for five channels in parallel. The lack of multi-channel audio data with ground truth labels necessitated the creation of simulated data using an acoustic simulator with room acoustic labels extracted from the generated impulse responses and labels for MOS generated in a student-teacher setup using a wav2vec2-based MOS prediction model. Our experiments show that the multi-channel model improves the prediction of the direct-to-reverberation ratio, clarity, and speech transmission index over the single-channel model with roughly 5× less computation while suffering minimal losses in the performance of the other metrics.

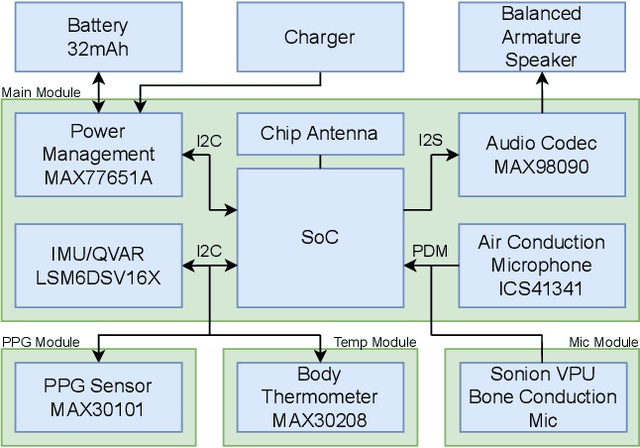

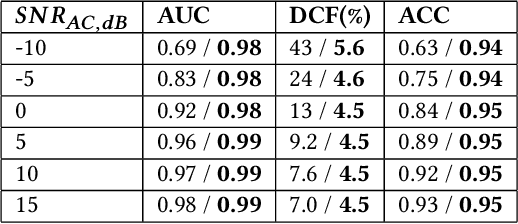

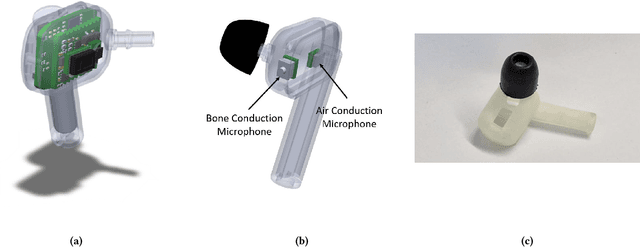

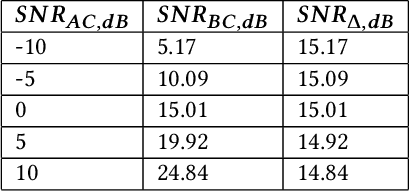

In-Ear-Voice: Towards Milli-Watt Audio Enhancement With Bone-Conduction Microphones for In-Ear Sensing Platforms

Sep 05, 2023

Abstract:The recent ubiquitous adoption of remote conferencing has been accompanied by omnipresent frustration with distorted or otherwise unclear voice communication. Audio enhancement can compensate for low-quality input signals from, for example, small true wireless earbuds, by applying noise suppression techniques. Such processing relies on voice activity detection (VAD) with low latency and the added capability of discriminating the wearer's voice from others, a task of significant computational complexity. The tight energy budget of devices as small as modern earphones, however, requires any system attempting to tackle this problem to do so with minimal power and processing overhead, while not relying on speaker-specific voice samples and training due to usability concerns. This paper presents the design and implementation of a custom research platform for low-power wireless earbuds based on novel, commercial, MEMS bone-conduction microphones. Such microphones can record the wearer's speech with much greater isolation, enabling personalized voice activity detection and further audio enhancement applications. Furthermore, the paper accurately evaluates a proposed low-power personalized speech detection algorithm based on bone conduction data and a recurrent neural network running on the implemented research platform. This algorithm is compared to an approach based on traditional microphone input. The performance of the bone conduction system, which detects speech within 12.8 ms at an accuracy of 95%, is evaluated. Different SoC choices are contrasted, with the final implementation based on the cutting-edge Ambiq Apollo 4 Blue SoC achieving 2.64 mW average power consumption at 14 µJ per inference, reaching 43 h of battery life on a miniature 32 mAh Li-ion cell and without duty cycling.
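The battery-life figure at the end of the abstract above can be sanity-checked with a back-of-the-envelope calculation. The capacity and average power come from the abstract; the 3.7 V nominal cell voltage is an assumption (a typical value for Li-ion cells) that the abstract does not state, so the result is only an order-of-magnitude check, not the authors' derivation.

```python
CAPACITY_MAH = 32.0        # cell capacity, from the abstract
AVERAGE_POWER_MW = 2.64    # average power consumption, from the abstract
NOMINAL_VOLTAGE_V = 3.7    # ASSUMPTION: typical Li-ion nominal voltage, not given in the abstract

# Energy stored in the cell (mWh) and the ideal runtime at constant average power.
energy_mwh = CAPACITY_MAH * NOMINAL_VOLTAGE_V
runtime_hours = energy_mwh / AVERAGE_POWER_MW

print(f"{energy_mwh:.1f} mWh -> {runtime_hours:.1f} h")  # prints "118.4 mWh -> 44.8 h"
```

Under this assumption the ideal runtime is slightly above the reported 43 h, which is plausible given that a real device may not use the full nominal capacity and may incur conversion losses.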

Speaker Embeddings as Individuality Proxy for Voice Stress Detection

Jun 09, 2023

Abstract:Since the mental states of the speaker modulate speech, stress introduced by cognitive or physical loads could be detected in the voice. The existing voice stress detection benchmark has shown that the audio embeddings extracted from the Hybrid BYOL-S self-supervised model perform well. However, the benchmark only evaluates performance separately on each dataset and does not evaluate performance across the different types of stress and different languages. Moreover, previous studies found strong individual differences in stress susceptibility. This paper presents the design and development of voice stress detection, trained on more than 100 speakers from nine language groups and five different types of stress. We address individual variabilities in voice stress analysis by adding speaker embeddings to the Hybrid BYOL-S features. The proposed method significantly improves voice stress detection performance with an input audio length of only 3-5 seconds.
