Marco Giordano

ETH Zurich

Real-Time Emergency Vehicle Siren Detection with Efficient CNNs on Embedded Hardware

Jul 02, 2025

Abstract:We present a full-stack emergency vehicle (EV) siren detection system designed for real-time deployment on embedded hardware. The proposed approach is based on E2PANNs, a fine-tuned convolutional neural network derived from EPANNs and optimized for binary sound event detection (SED) under urban acoustic conditions. A key contribution is the creation of curated and semantically structured datasets (AudioSet-EV, AudioSet-EV Augmented, and Unified-EV), developed using a custom AudioSet-Tools framework to overcome the low reliability of standard AudioSet annotations. The system is deployed on a Raspberry Pi 5 equipped with a high-fidelity DAC+microphone board and implements a multithreaded inference engine with adaptive frame sizing, probability smoothing, and a decision-state machine to control false positive activations. A remote WebSocket interface provides real-time monitoring and supports live demonstrations. Performance is evaluated using both framewise and event-based metrics across multiple configurations. Results show that the system achieves low-latency detection with improved robustness under realistic audio conditions. This work demonstrates the feasibility of deploying Internet of Sounds (IoS)-compatible SED solutions that can form distributed acoustic monitoring networks, enabling collaborative emergency vehicle tracking across smart city infrastructures through WebSocket connectivity on low-cost edge devices.
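The abstract mentions probability smoothing followed by a decision-state machine to limit false positives, without giving details. The sketch below shows one common way to combine the two ideas: a moving average over recent frame probabilities feeding a two-threshold (hysteresis) state machine. The class name `SirenDecision`, the window length, and both thresholds are illustrative assumptions, not values or code from the paper.

```python
from collections import deque


class SirenDecision:
    """Moving-average smoothing followed by a two-state hysteresis machine.

    All parameters are hypothetical defaults chosen for illustration.
    """

    def __init__(self, window=5, on_threshold=0.7, off_threshold=0.4):
        self.history = deque(maxlen=window)  # most recent frame probabilities
        self.on_threshold = on_threshold     # smoothed value needed to switch on
        self.off_threshold = off_threshold   # smoothed value needed to switch off
        self.active = False

    def update(self, probability):
        """Feed one frame probability and return the current detection state."""
        self.history.append(probability)
        smoothed = sum(self.history) / len(self.history)
        if not self.active and smoothed >= self.on_threshold:
            self.active = True
        elif self.active and smoothed <= self.off_threshold:
            self.active = False
        return self.active


def run(probabilities, **kwargs):
    """Apply the detector to a sequence of frame probabilities."""
    detector = SirenDecision(**kwargs)
    return [detector.update(p) for p in probabilities]
```

With a window of three frames, an isolated high-probability frame never raises the smoothed value above the on-threshold, while a sustained run of high probabilities does; the gap between the two thresholds keeps the state from flickering when the smoothed value hovers near a single cut-off.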

A Survey on Spoken Italian Datasets and Corpora

Jan 11, 2025

Abstract:Spoken language datasets are vital for advancing linguistic research, Natural Language Processing, and speech technology. However, resources dedicated to Italian, a linguistically rich and diverse Romance language, remain underexplored compared to major languages like English or Mandarin. This survey provides a comprehensive analysis of 66 spoken Italian datasets, highlighting their characteristics, methodologies, and applications. The datasets are categorized by speech type, source and context, and demographic and linguistic features, with a focus on their utility in fields such as Automatic Speech Recognition, emotion detection, and education. Challenges related to dataset scarcity, representativeness, and accessibility are discussed alongside recommendations for enhancing dataset creation and utilization. The full dataset inventory is publicly accessible via GitHub and archived on Zenodo, serving as a valuable resource for researchers and developers. By addressing current gaps and proposing future directions, this work aims to support the advancement of Italian speech technologies and linguistic research.

PuLsE: Accurate and Robust Ultrasound-based Continuous Heart-Rate Monitoring on a Wrist-Worn IoT Device

Oct 21, 2024

Abstract:This work explores the feasibility of employing ultrasound (US) technology in a wrist-worn IoT device for low-power, high-fidelity heart-rate (HR) extraction. US offers deep tissue penetration and can monitor pulsatile arterial blood flow in large vessels and the surrounding tissue, potentially improving robustness and accuracy compared to PPG. We present an IoT wearable system prototype built around a commercial microcontroller (MCU), which uses the onboard ADC to capture high-frequency US signals, and an innovative low-power US pulser. An envelope filter lowers the bandwidth of the US signal by a factor of >5x, reducing the system's acquisition requirements without compromising accuracy (correlation coefficient between HR extracted from enveloped and raw signals, r(92)=0.99, p<0.001). The full signal processing pipeline is ported to fixed-point arithmetic for increased energy efficiency and runs entirely onboard. The system has an average power consumption of 5.8 mW, competitive with PPG-based systems, and the HR extraction algorithm requires only 68 kB of RAM and 71 ms of processing time on an ARM Cortex-M4 MCU. The system is estimated to run continuously for more than 7 days on a smartwatch battery. To accurately evaluate the proposed circuit and algorithm and to identify the anatomical location on the wrist with the highest accuracy for HR extraction, we collected a dataset from 10 healthy adults at three different wrist positions. The dataset comprises roughly 5 hours of HR data with an average of 80.6 ± 16.3 bpm. During recording, we synchronized the established ECG gold standard with our US-based method. The comparison yields a Pearson correlation coefficient of r(92)=0.99, p<0.001 and a mean error of 0.69 ± 1.99 bpm in the lateral wrist position near the radial artery. The dataset and code have been open-sourced at https://github.com/mgiordy/Ultrasound-Heart-Rate

The OCON model: an old but green solution for distributable supervised classification for acoustic monitoring in smart cities

Oct 05, 2024

Abstract:This paper explores a structured application of the One-Class approach and the One-Class-One-Network model for supervised classification tasks, focusing on vowel phoneme classification and speaker recognition in the Automatic Speech Recognition (ASR) domain. For our case study, the ASR model runs on a proprietary sensing and lighting system used to monitor acoustic and air pollution on urban streets. We formalize combinations of pseudo-Neural Architecture Search and Hyper-Parameters Tuning experiments, using an informed grid-search methodology, to achieve classification accuracy comparable to today's most complex architectures, and we examine the speaker recognition and energy efficiency aspects. Despite its simplicity, the proposed model has a very good chance of generalizing across language and speaker-gender contexts for widespread applicability in computationally constrained settings, as supported by relevant statistical and performance metrics. Our experiment code is openly accessible on our GitHub.

* Accepted at "IEEE 5th International Symposium on the Internet of Sounds, 30 Sep / 2 Oct 2024, Erlangen, Germany"

The OCON model: an old but gold solution for distributable supervised classification

Oct 05, 2024

Abstract:This paper introduces a structured application of the One-Class approach and the One-Class-One-Network model for supervised classification tasks, specifically addressing a vowel phoneme classification case study within the Automatic Speech Recognition research field. Through pseudo-Neural Architecture Search and Hyper-Parameters Tuning experiments conducted with an informed grid-search methodology, we achieve classification accuracy comparable to today's complex architectures (90.0 - 93.7%). Despite its simplicity, our model prioritizes generalization of language context and distributed applicability, supported by relevant statistical and performance metrics. The experiment code is openly available on our GitHub.

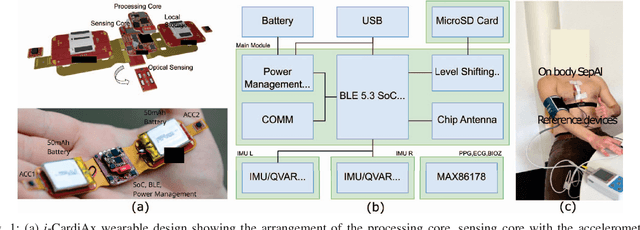

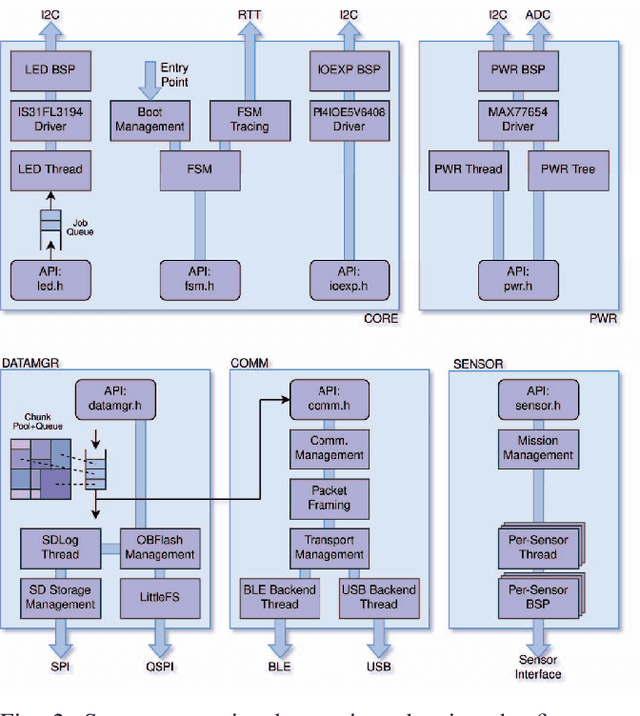

i-CardiAx: Wearable IoT-Driven System for Early Sepsis Detection Through Long-Term Vital Sign Monitoring

Jul 31, 2024

Abstract:Sepsis is a significant cause of early mortality, high healthcare costs, and disability-adjusted life years. Digital interventions like continuous cardiac monitoring can help detect early warning signs and facilitate effective interventions. This paper introduces i-CardiAx, a wearable sensor utilizing low-power high-sensitivity accelerometers to measure vital signs crucial for cardiovascular health: heart rate (HR), blood pressure (BP), and respiratory rate (RR). Data collected from 10 healthy subjects using the i-CardiAx chest patch were used to develop and evaluate lightweight vital sign measurement algorithms. The algorithms demonstrated high performance: RR (-0.11 ± 0.77 breaths/min), HR (0.82 ± 2.85 beats/min), and systolic BP (-0.08 ± 6.245 mmHg). These algorithms are embedded in an ARM Cortex-M33 processor with Bluetooth Low Energy (BLE) support, achieving inference times of 4.2 ms for HR and RR, and 8.5 ms for BP. Additionally, a multi-channel quantized Temporal Convolutional Network (TCN), trained on the open-source HiRID dataset, was developed to detect sepsis onset using digitally acquired vital signs from i-CardiAx. The quantized TCN, deployed on i-CardiAx, predicted sepsis with a median time of 8.2 hours and an energy per inference of 1.29 mJ. The i-CardiAx wearable has a sleep power of 0.152 mW and an average power consumption of 0.77 mW, enabling a 100 mAh battery to last approximately two weeks (432 hours) with continuous monitoring of HR, BP, and RR at 30 measurements per hour and running inference every 30 minutes. In conclusion, i-CardiAx offers an energy-efficient, high-sensitivity method for long-term cardiovascular monitoring, providing predictive alerts for sepsis and other life-threatening events.

Energy-Aware Adaptive Sampling for Self-Sustainability in Resource-Constrained IoT Devices

Oct 31, 2023

Abstract:In the ever-growing Internet of Things (IoT) landscape, smart power management algorithms combined with energy harvesting solutions are crucial to obtain self-sustainability. This paper presents an energy-aware adaptive sampling rate algorithm designed for embedded deployment in resource-constrained, battery-powered IoT devices. The algorithm, based on a finite state machine (FSM) and inspired by Transmission Control Protocol (TCP) Reno's additive increase and multiplicative decrease, maximizes sensor sampling rates, ensuring power self-sustainability without risking battery depletion. Moreover, we characterized our solar cell with data acquired over 48 days and used the model created to obtain energy data from an open-source world-wide dataset. To validate our approach, we introduce the EcoTrack device, a versatile device with global navigation satellite system (GNSS) capabilities and Long-Term Evolution Machine Type Communication (LTE-M) connectivity, supporting MQTT protocol for cloud data relay. This multi-purpose device can be used, for instance, as a health and safety wearable, remote hazard monitoring system, or as a global asset tracker. The results, validated on data from three different European cities, show that the proposed algorithm enables self-sustainability while maximizing sampled locations per day. In experiments conducted with a 3000 mAh battery capacity, the algorithm consistently maintained a minimum of 24 localizations per day and achieved peaks of up to 3000.
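The additive-increase, multiplicative-decrease (AIMD) idea that the abstract borrows from TCP Reno can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's algorithm: the finite state machine is omitted, the function names, step size, and back-off factor are hypothetical, and the bounds of 24 and 3000 samples per day are simply taken from the range reported in the abstract rather than from any known configuration.

```python
def aimd_update(rate_per_day, energy_surplus, *, increase=10.0, decrease=0.5,
                min_rate=24.0, max_rate=3000.0):
    """Return the next sampling rate (samples per day).

    Additive increase while the energy budget holds, multiplicative
    decrease as soon as it does not. All defaults are illustrative.
    """
    if energy_surplus:
        rate = rate_per_day + increase   # probe gently for more samples
    else:
        rate = rate_per_day * decrease   # back off quickly to protect the battery
    return max(min_rate, min(max_rate, rate))


def simulate(harvested_j, cost_per_sample_j, start_rate=24.0):
    """Run the update once per day over a list of harvested energies (joules)."""
    rate = start_rate
    trace = []
    for harvested in harvested_j:
        consumed = rate * cost_per_sample_j
        rate = aimd_update(rate, harvested >= consumed)
        trace.append(rate)
    return trace
```

For example, `simulate([100, 100, 10], 1.0)` raises the rate on the two sunny days and halves it on the third, where the clamp keeps it at the assumed floor of 24 samples per day.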

Optimizing IoT-Based Asset and Utilization Tracking: Efficient Activity Classification with MiniRocket on Resource-Constrained Devices

Oct 23, 2023

Abstract:This paper introduces an effective solution for retrofitting construction power tools with low-power IoT to enable accurate activity classification. We address the challenge of distinguishing between when a power tool is being moved and when it is actually being used. To achieve both high classification accuracy and low power consumption, we employ MiniRocket, a recently released algorithm. Known for its accuracy, scalability, and fast training in time-series classification, MiniRocket is proposed here as a TinyML algorithm for inference on resource-constrained IoT devices. The paper demonstrates the portability and performance of MiniRocket on a resource-constrained, ultra-low-power sensor node for both floating-point and fixed-point arithmetic, with the fixed-point version matching the floating-point accuracy to within 1%. The hyperparameters of the algorithm have been optimized for the task at hand to find a Pareto point that balances memory usage, accuracy, and energy consumption. For the classification problem, we rely on an accelerometer as the sole sensor source and on BLE for data transmission. Extensive real-world construction data from 16 different power tools were collected, labeled, and used to validate the algorithm's performance directly embedded in the IoT device. Experimental results demonstrate that the proposed solution achieves an accuracy of 96.9% in distinguishing between real usage status and other motion statuses while consuming only 7 kB of flash and 3 kB of RAM. The final application exhibits an average power consumption of less than 15 µW for the whole system, resulting in a battery life ranging from 3 to 9 years depending on the battery capacity (250-500 mAh) and the number of power tool usage hours (100-1500 h).

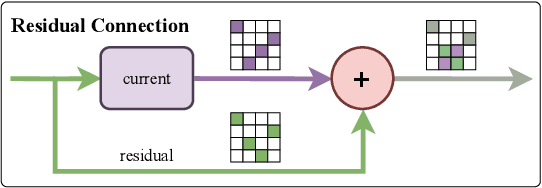

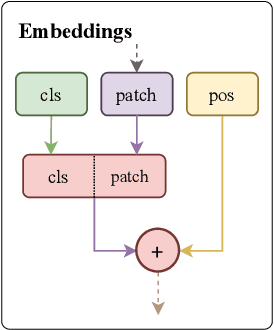

Transformer Fusion with Optimal Transport

Oct 15, 2023

Abstract:Fusion is a technique for merging multiple independently-trained neural networks in order to combine their capabilities. Past attempts have been restricted to the case of fully-connected, convolutional, and residual networks. In this paper, we present a systematic approach for fusing two or more transformer-based networks exploiting Optimal Transport to (soft-)align the various architectural components. We flesh out an abstraction for layer alignment that can, in principle, generalize to arbitrary architectures. We apply it to the key ingredients of Transformers, such as multi-head self-attention, layer-normalization, and residual connections, and we discuss how to handle them via various ablation studies. Furthermore, our method allows the fusion of models of different sizes (heterogeneous fusion), providing a new and efficient way to compress Transformers. The proposed approach is evaluated on both image classification tasks via Vision Transformer and natural language modeling tasks using BERT. Our approach consistently outperforms vanilla fusion and, after a surprisingly short finetuning, also outperforms the individual converged parent models. In our analysis, we uncover intriguing insights about the significant role of soft alignment in the case of Transformers. Our results showcase the potential of fusing multiple Transformers, thus compounding their expertise, in the budding paradigm of model fusion and recombination.

Towards a Novel Ultrasound System Based on Low-Frequency Feature Extraction From a Fully-Printed Flexible Transducer

Sep 25, 2023

Abstract:Ultrasound is a key technology in healthcare, and it is being explored for non-invasive, wearable, continuous monitoring of vital signs. However, its widespread adoption in this scenario is still hindered by the size, complexity, and power consumption of current devices. Moreover, such an application demands adaptability to human anatomy, which is hard to achieve with current transducer technology. This paper presents a novel ultrasound system prototype based on a fully printed, lead-free, and flexible polymer ultrasound transducer, whose bending radius promises good adaptability to the human anatomy. Our application scenario focuses on continuous blood flow monitoring. We implemented a hardware envelope filter to efficiently transpose high-frequency ultrasound signals to a lower-frequency spectrum. This reduces computational and power demands with little to no degradation in the task proposed for this work. We validated our method on a setup that mimics human blood flow by using a flow phantom and a peristaltic pump simulating 3 different heartbeat rhythms: 60, 90, and 120 beats per minute. Our ultrasound setup reconstructs peristaltic pump frequencies with errors of less than 0.05 Hz (3 bpm) from the set pump frequency, both for the raw echo and the enveloped echo. The analog pre-processing showed a promising reduction of signal bandwidth of more than 6x: pulse-echo signals of transducers excited at 12.5 MHz were reduced to about 2 MHz. This allows consumer MCUs to acquire and process signals within the mW power range inexpensively.
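The envelope filter in this work is an analog hardware stage, but its effect can be illustrated in software. The sketch below is a digital analogy under stated assumptions: it full-wave rectifies a signal and smooths it with a one-pole low-pass filter, so that a slowly varying amplitude survives while the fast carrier is suppressed. The function names, the smoothing factor, and the synthetic test signal (a 100 Hz carrier modulated at 2 Hz) are all hypothetical and much lower in frequency than the MHz-range signals described above.

```python
import math


def envelope(samples, alpha=0.05):
    """Full-wave rectify, then smooth with a one-pole low-pass filter.

    `alpha` is an illustrative smoothing factor; smaller values smooth more.
    """
    out = []
    state = 0.0
    for x in samples:
        state += alpha * (abs(x) - state)  # move a fraction toward |x|
        out.append(state)
    return out


def am_burst(fs=1000, carrier_hz=100.0, pulse_hz=2.0, seconds=1.0):
    """Synthetic carrier whose amplitude varies slowly, standing in for an echo."""
    n = int(fs * seconds)
    return [
        (1.0 + 0.5 * math.sin(2 * math.pi * pulse_hz * i / fs))
        * math.sin(2 * math.pi * carrier_hz * i / fs)
        for i in range(n)
    ]
```

Calling `envelope(am_burst())` yields a slowly varying trace that follows the 2 Hz amplitude change, which could then be sampled at a far lower rate than the carrier would require.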
