Tommaso Bendinelli

Exploring LLM Agents for Cleaning Tabular Machine Learning Datasets

Mar 09, 2025

Abstract:High-quality, error-free datasets are a key ingredient in building reliable, accurate, and unbiased machine learning (ML) models. However, real-world datasets often suffer from errors due to sensor malfunctions, data entry mistakes, or improper data integration across multiple sources that can severely degrade model performance. Detecting and correcting these issues typically require tailor-made solutions and demand extensive domain expertise. Consequently, automation is challenging, rendering the process labor-intensive and tedious. In this study, we investigate whether Large Language Models (LLMs) can help alleviate the burden of manual data cleaning. We set up an experiment in which an LLM, paired with Python, is tasked with cleaning the training dataset to improve the performance of a learning algorithm without having the ability to modify the training pipeline or perform any feature engineering. We run this experiment on multiple Kaggle datasets that have been intentionally corrupted with errors. Our results show that LLMs can identify and correct erroneous entries, such as illogical values or outliers, by leveraging contextual information from other features within the same row, as well as feedback from previous iterations. However, they struggle to detect more complex errors that require understanding data distribution across multiple rows, such as trends and biases.
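The kind of row-level correction described above, where one feature is repaired using other features in the same row, can be sketched as follows. This is a minimal illustration and not the paper's pipeline: the column names `birth_year` and `age`, the reference year, and the one-year tolerance are all assumptions made for the example.

```python
REFERENCE_YEAR = 2024  # hypothetical year in which the data was collected


def repair_age(row):
    """Return a copy of row with age recomputed when it contradicts birth_year."""
    expected = REFERENCE_YEAR - row["birth_year"]
    fixed = dict(row)
    # Allow a one-year offset for birthdays that have not yet occurred.
    if abs(row["age"] - expected) > 1:
        fixed["age"] = expected
    return fixed


# Hypothetical rows: one clean, two with illogical ages.
rows = [
    {"birth_year": 1990, "age": 34},
    {"birth_year": 1985, "age": 390},  # data-entry error
    {"birth_year": 2000, "age": -24},  # sign error
]
cleaned = [repair_age(r) for r in rows]
```

A rule like this only uses information inside a single row. Errors that show up only in the distribution across rows, such as trends or biases, would need a different kind of check.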

Optimizing IoT-Based Asset and Utilization Tracking: Efficient Activity Classification with MiniRocket on Resource-Constrained Devices

Oct 23, 2023

Abstract:This paper introduces an effective solution for retrofitting construction power tools with low-power IoT to enable accurate activity classification. We address the challenge of distinguishing between when a power tool is being moved and when it is actually being used. To achieve high classification accuracy while preserving low power consumption, we employ MiniRocket, a recently released algorithm. Known for its accuracy, scalability, and fast training for time-series classification, MiniRocket is proposed here as a TinyML algorithm for inference on resource-constrained IoT devices. The paper demonstrates the portability and performance of MiniRocket on a resource-constrained, ultra-low power sensor node for floating-point and fixed-point arithmetic, with the fixed-point version matching the floating-point accuracy to within 1%. The hyperparameters of the algorithm have been optimized for the task at hand to find a Pareto point that balances memory usage, accuracy and energy consumption. For the classification problem, we rely on an accelerometer as the sole sensor source, and BLE for data transmission. Extensive real-world construction data, using 16 different power tools, were collected, labeled, and used to validate the algorithm's performance directly embedded in the IoT device. Experimental results demonstrate that the proposed solution achieves an accuracy of 96.9% in distinguishing between real usage status and other motion statuses while consuming only 7 kB of flash and 3 kB of RAM. The final application exhibits an average current consumption of less than 15 µW for the whole system, resulting in battery life performance ranging from 3 to 9 years depending on the battery capacity (250-500 mAh) and the number of power tool usage hours (100-1500 h).
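To make the floating-point versus fixed-point comparison concrete, here is a rough sketch of one MiniRocket-style feature: a dilated convolution followed by proportion-of-positive-values (PPV) pooling, computed once on floats and once on quantized integers. The kernel weights, dilation, bias, synthetic signal, and the 8-bit fractional format are illustrative assumptions, not the configuration used in the paper.

```python
import math


def dilated_conv(series, weights, dilation):
    """Unpadded dilated convolution of a 1-D series with a short kernel."""
    span = (len(weights) - 1) * dilation
    return [
        sum(w * series[t + k * dilation] for k, w in enumerate(weights))
        for t in range(len(series) - span)
    ]


def ppv(series, weights, dilation, bias):
    """Proportion of convolution outputs that exceed the bias."""
    out = dilated_conv(series, weights, dilation)
    return sum(v > bias for v in out) / len(out)


def to_fixed(value, frac_bits=8):
    """Quantize a float to a signed integer with frac_bits fractional bits."""
    return int(round(value * (1 << frac_bits)))


# Integer kernel weights, so the fixed-point path needs only integer arithmetic.
WEIGHTS = [-1, -1, 2, -1, -1, 2, -1, -1, 2]

# Synthetic stand-in for one accelerometer axis.
signal = [math.sin(0.3 * t) for t in range(64)]

ppv_float = ppv(signal, WEIGHTS, dilation=2, bias=0.1)
# Signal and bias share the same scale, so the comparison stays consistent.
ppv_fixed = ppv([to_fixed(v) for v in signal], WEIGHTS, dilation=2, bias=to_fixed(0.1))
```

Because the weights are small integers, quantizing the input and the bias with the same scale keeps the comparison `v > bias` consistent, and the two PPV values differ only for samples that sit very close to the threshold.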

Gemtelligence: Accelerating Gemstone classification with Deep Learning

May 31, 2023

Abstract:The value of luxury goods, particularly investment-grade gemstones, is greatly influenced by their origin and authenticity, sometimes resulting in differences worth millions of dollars. Traditionally, human experts have determined the origin and detected treatments on gemstones through visual inspections and a range of analytical methods. However, the interpretation of the data can be subjective and time-consuming, resulting in inconsistencies. In this study, we propose Gemtelligence, a novel approach based on deep learning that enables accurate and consistent origin determination and treatment detection. Gemtelligence comprises convolutional and attention-based neural networks that process heterogeneous data types collected by multiple instruments. Notably, the algorithm demonstrated comparable predictive performance to expensive laser-ablation inductively-coupled-plasma mass-spectrometry (ICP-MS) analysis and visual examination by human experts, despite using input data from relatively inexpensive analytical methods. Our innovative methodology represents a major breakthrough in the field of gemstone analysis by significantly improving the automation and robustness of the entire analytical process pipeline.

Controllable Neural Symbolic Regression

Apr 20, 2023

Abstract:In symbolic regression, the goal is to find an analytical expression that accurately fits experimental data with the minimal use of mathematical symbols such as operators, variables, and constants. However, the combinatorial space of possible expressions can make it challenging for traditional evolutionary algorithms to find the correct expression in a reasonable amount of time. To address this issue, Neural Symbolic Regression (NSR) algorithms have been developed that can quickly identify patterns in the data and generate analytical expressions. However, these methods, in their current form, lack the capability to incorporate user-defined prior knowledge, which is often required in natural sciences and engineering fields. To overcome this limitation, we propose a novel neural symbolic regression method, named Neural Symbolic Regression with Hypothesis (NSRwH) that enables the explicit incorporation of assumptions about the expected structure of the ground-truth expression into the prediction process. Our experiments demonstrate that the proposed conditioned deep learning model outperforms its unconditioned counterparts in terms of accuracy while also providing control over the predicted expression structure.
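The idea of steering the search with a user hypothesis can be illustrated without any neural network. The sketch below is a brute-force stand-in, not NSRwH: it picks the best-fitting expression from a small, hypothetical candidate pool, optionally requiring or excluding certain operators. The pool, the operator tags, and the function names are all assumptions made for the example.

```python
import math

# Hypothetical candidate pool: (expression string, callable, operators used).
CANDIDATES = [
    ("x**2", lambda x: x ** 2, {"pow"}),
    ("sin(x)", lambda x: math.sin(x), {"sin"}),
    ("x + sin(x)", lambda x: x + math.sin(x), {"add", "sin"}),
    ("exp(x)", lambda x: math.exp(x), {"exp"}),
]


def mse(f, xs, ys):
    """Mean squared error of f on the data points."""
    return sum((f(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def best_expression(xs, ys, required=frozenset(), excluded=frozenset()):
    """Best-fitting candidate that satisfies the operator hypothesis."""
    allowed = [
        c for c in CANDIDATES
        if set(required) <= c[2] and not (set(excluded) & c[2])
    ]
    return min(allowed, key=lambda c: mse(c[1], xs, ys))[0]


# Data generated from x + sin(x) on a grid over [-2, 2].
xs = [0.1 * i for i in range(-20, 21)]
ys = [x + math.sin(x) for x in xs]

unconstrained = best_expression(xs, ys)
without_add = best_expression(xs, ys, excluded={"add"})
```

With no constraint the generating expression is recovered. Excluding addition forces the selection to the closest remaining structure, which shows how a hypothesis shapes the result independently of fit quality.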

Dynaformer: A Deep Learning Model for Ageing-aware Battery Discharge Prediction

Jun 01, 2022

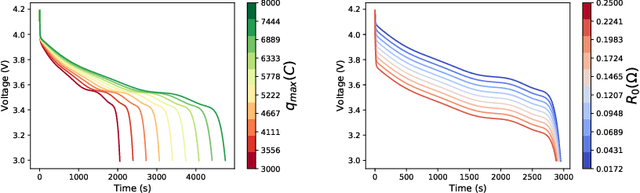

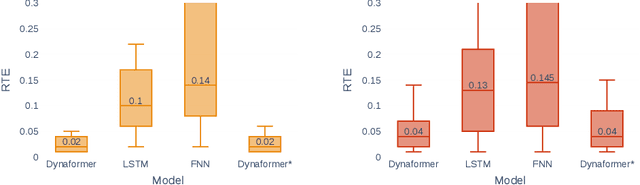

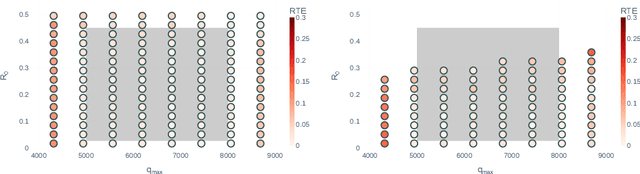

Abstract:Electrochemical batteries are ubiquitous devices in our society. When they are employed in mission-critical applications, the ability to precisely predict the end of discharge under highly variable environmental and operating conditions is of paramount importance in order to support operational decision-making. While there are accurate predictive models of the processes underlying the charge and discharge phases of batteries, the modelling of ageing and its effect on performance remains poorly understood. Such a lack of understanding often leads to inaccurate models or the need for time-consuming calibration procedures whenever the battery ages or its conditions change significantly. This represents a major obstacle to the real-world deployment of efficient and robust battery management systems. In this paper, we propose for the first time an approach that can predict the voltage discharge curve for batteries of any degradation level without the need for calibration. In particular, we introduce Dynaformer, a novel Transformer-based deep learning architecture which is able to simultaneously infer the ageing state from a limited number of voltage/current samples and predict the full voltage discharge curve for real batteries with high precision. Our experiments show that the trained model is effective for input current profiles of different complexities and is robust to a wide range of degradation levels. In addition to evaluating the performance of the proposed framework on simulated data, we demonstrate that a minimal amount of fine-tuning allows the model to bridge the simulation-to-real gap between simulations and real data collected from a set of batteries. The proposed methodology enables the utilization of battery-powered systems until the end of discharge in a controlled and predictable way, thereby significantly prolonging the operating cycles and reducing costs.

Neural Symbolic Regression that Scales

Jun 11, 2021

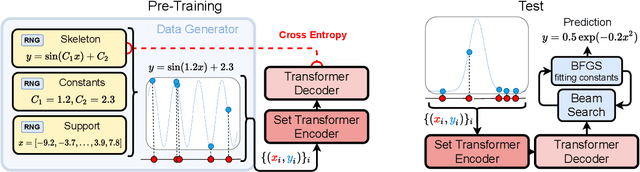

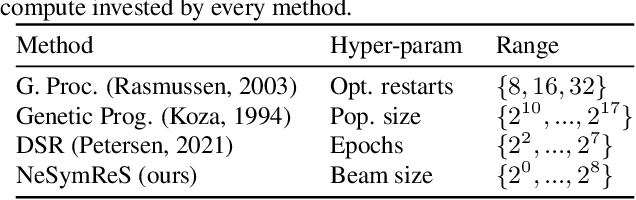

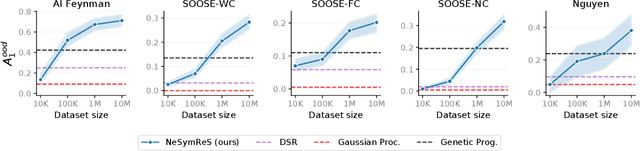

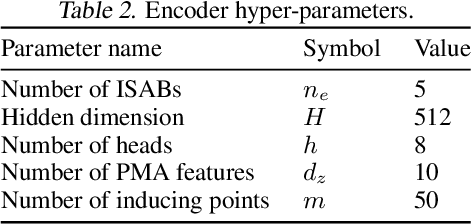

Abstract:Symbolic equations are at the core of scientific discovery. The task of discovering the underlying equation from a set of input-output pairs is called symbolic regression. Traditionally, symbolic regression methods use hand-designed strategies that do not improve with experience. In this paper, we introduce the first symbolic regression method that leverages large-scale pre-training. We procedurally generate an unbounded set of equations, and simultaneously pre-train a Transformer to predict the symbolic equation from a corresponding set of input-output pairs. At test time, we query the model on a new set of points and use its output to guide the search for the equation. We show empirically that this approach can re-discover a set of well-known physical equations, and that it improves over time with more data and compute.
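The data-generation step, in which equations are produced procedurally and paired with sampled input-output points, might look roughly like the sketch below. The operator set, tree depth, sampling range, and tuple-based tree encoding are assumptions chosen for brevity. The paper's actual generator is not reproduced here.

```python
import math
import random

UNARY = {"sin": math.sin, "cos": math.cos}
BINARY = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


def random_expr(rng, depth):
    """Return a random expression tree encoded as nested tuples."""
    if depth == 0 or rng.random() < 0.3:
        return ("x",) if rng.random() < 0.7 else ("const", rng.randint(1, 5))
    if rng.random() < 0.5:
        return (rng.choice(sorted(UNARY)), random_expr(rng, depth - 1))
    op = rng.choice(sorted(BINARY))
    return (op, random_expr(rng, depth - 1), random_expr(rng, depth - 1))


def evaluate(expr, x):
    """Evaluate an expression tree at the point x."""
    head = expr[0]
    if head == "x":
        return x
    if head == "const":
        return expr[1]
    if head in UNARY:
        return UNARY[head](evaluate(expr[1], x))
    return BINARY[head](evaluate(expr[1], x), evaluate(expr[2], x))


def to_string(expr):
    """Render an expression tree as a string (the prediction target)."""
    head = expr[0]
    if head == "x":
        return "x"
    if head == "const":
        return str(expr[1])
    if head in UNARY:
        return f"{head}({to_string(expr[1])})"
    return f"({to_string(expr[1])} {head} {to_string(expr[2])})"


def make_example(rng, n_points=16, depth=3):
    """One training example: an equation string and its input-output pairs."""
    expr = random_expr(rng, depth)
    xs = [rng.uniform(-3.0, 3.0) for _ in range(n_points)]
    return to_string(expr), [(x, evaluate(expr, x)) for x in xs]


rng = random.Random(0)
equation, pairs = make_example(rng)
```

Calling `make_example` repeatedly yields a stream of fresh (equation, points) examples, which is what makes the training set effectively unbounded.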
