Stephan Hasler

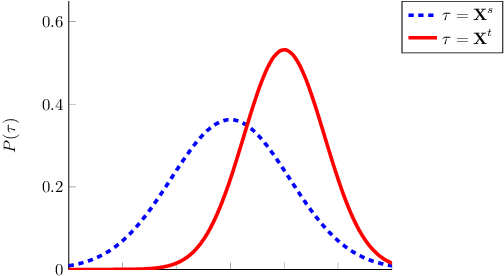

Stacked Confusion Reject Plots (SCORE)

Jun 25, 2024

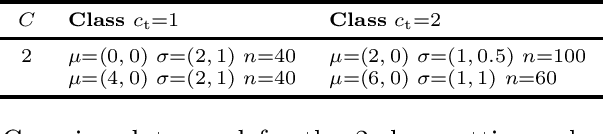

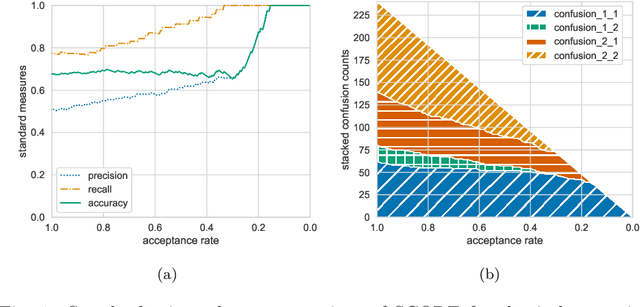

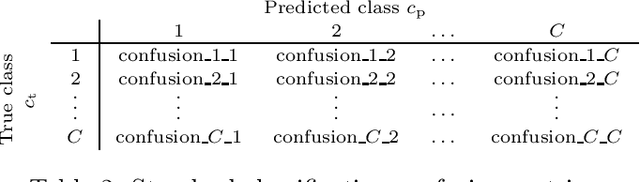

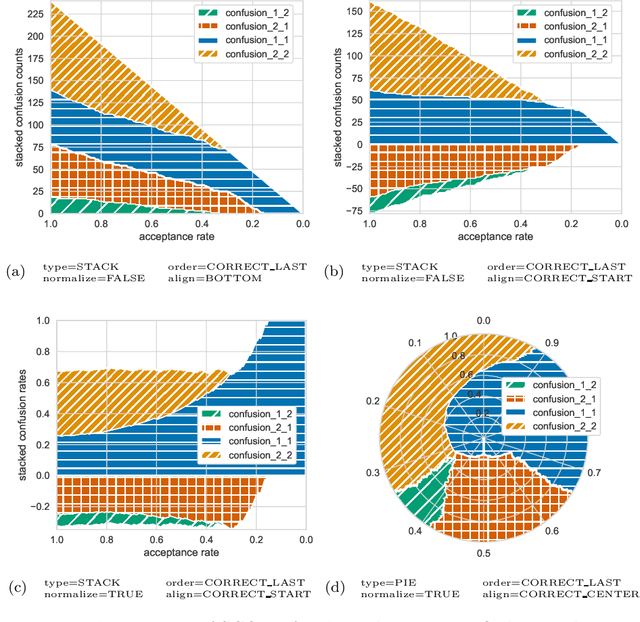

Abstract: Machine learning is increasingly applied in critical application areas such as health and driver assistance. To minimize the risk of wrong decisions in such applications, the certainty of a classification must be considered so that uncertain samples can be rejected. An established tool for this is the reject curve, which visualizes the trade-off between the number of rejected samples and classification performance metrics. We argue that common reject curves are too abstract and hard for non-experts to interpret. We propose Stacked Confusion Reject Plots (SCORE), which offer a more intuitive understanding of the data used and the classifier's behavior. We present example plots on artificial Gaussian data to document the different options of SCORE and provide the code as a Python package.
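To make the idea of a reject trade-off concrete, here is a minimal sketch in plain Python. It is not the SCORE package and does not use its API; the function names (`make_gaussian_data`, `stacked_reject_counts`), the two-class 1-D Gaussian data, the sign-based classifier, and the use of the distance to the decision boundary as a certainty score are all assumptions made for illustration. For each reject threshold it counts how many samples are accepted and correct, accepted and wrong, or rejected; these are the kinds of counts a stacked plot could display.

```python
import random


def make_gaussian_data(n_per_class=500, seed=0):
    """Hypothetical toy data: two 1-D Gaussian classes centred at -1 and +1."""
    rng = random.Random(seed)
    samples = []
    for label, mean in ((0, -1.0), (1, 1.0)):
        for _ in range(n_per_class):
            samples.append((rng.gauss(mean, 1.0), label))
    return samples


def stacked_reject_counts(y_true, y_pred, certainty, thresholds):
    """Return one (threshold, correct, wrong, rejected) tuple per threshold.

    A sample is rejected when its certainty is below the threshold;
    otherwise it is counted as correct or wrong.
    """
    rows = []
    for t in thresholds:
        correct = wrong = rejected = 0
        for yt, yp, c in zip(y_true, y_pred, certainty):
            if c < t:
                rejected += 1
            elif yp == yt:
                correct += 1
            else:
                wrong += 1
        rows.append((t, correct, wrong, rejected))
    return rows


samples = make_gaussian_data()
xs = [x for x, _ in samples]
y_true = [y for _, y in samples]

# Assumed toy classifier: predict class 1 for positive inputs.
y_pred = [1 if x > 0 else 0 for x in xs]
# Assumed certainty score: distance to the decision boundary at 0.
certainty = [abs(x) for x in xs]

thresholds = [0.0, 0.5, 1.0, 1.5, 2.0]
rows = stacked_reject_counts(y_true, y_pred, certainty, thresholds)

for t, correct, wrong, rejected in rows:
    print(f"threshold={t:.1f}  correct={correct}  wrong={wrong}  rejected={rejected}")
```

At every threshold the three counts sum to the total number of samples, so they can be drawn as stacked areas over the threshold axis. As the threshold rises, the number of wrong accepted samples can only fall, at the cost of more rejections.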

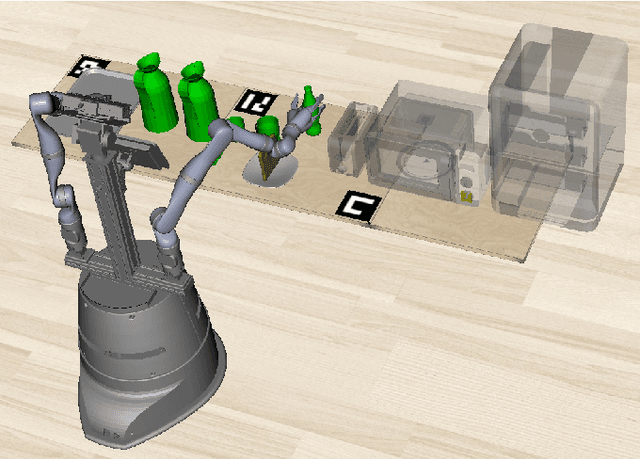

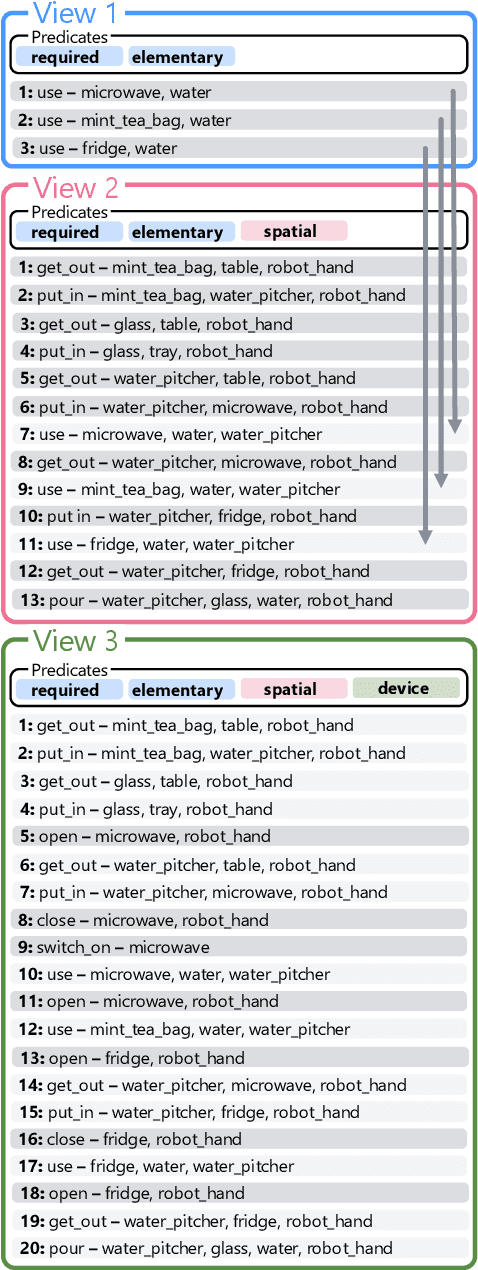

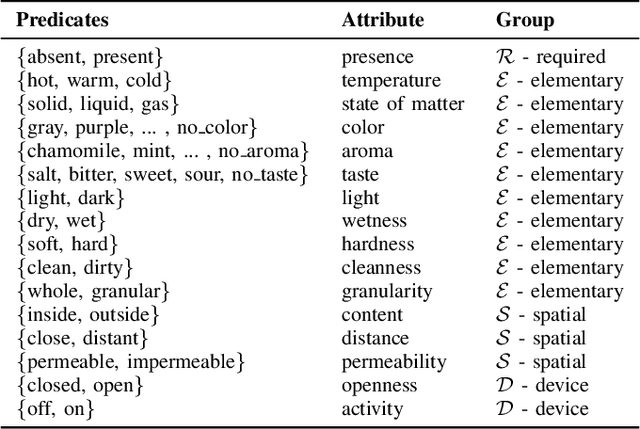

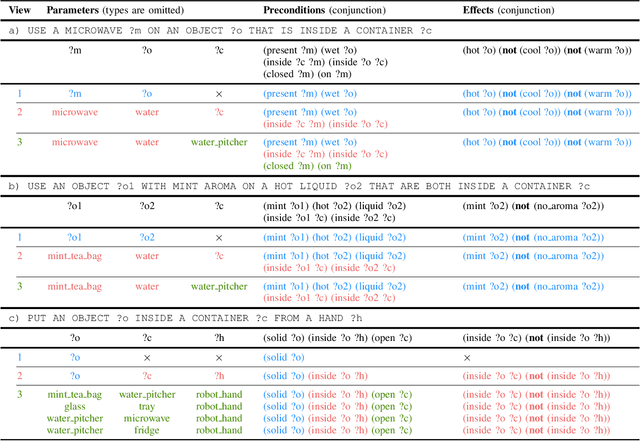

Efficient Symbolic Planning with Views

May 06, 2024

Abstract: Robotic planning systems model spatial relations in detail, as these are needed for manipulation tasks. In contrast, other physical attributes of objects and the effects of devices are usually oversimplified and expressed by abstract compound attributes. This limits the ability of planners to find alternative solutions. We propose to break these compound attributes down into a shared set of elementary attributes. This strongly facilitates generalization between different tasks and environments and thus helps to find innovative solutions. On the downside, this generalization comes with an increased complexity of the solution space. Therefore, as the main contribution of the paper, we propose a method that splits the planning problem into a sequence of views, where each view considers only an increasing subset of attributes. We show that this view-based strategy offers a good compromise between planning speed and quality of the found plan, and discuss its general applicability and limitations.

To Help or Not to Help: LLM-based Attentive Support for Human-Robot Group Interactions

Mar 19, 2024

Abstract: How can a robot provide unobtrusive physical support within a group of humans? We present Attentive Support, a novel interaction concept for robots to support a group of humans. It combines scene perception, dialogue acquisition, situation understanding, and behavior generation with the common-sense reasoning capabilities of Large Language Models (LLMs). In addition to following user instructions, Attentive Support is capable of deciding when and how to support the humans, and when to remain silent to not disturb the group. With a diverse set of scenarios, we show and evaluate the robot's attentive behavior, which supports and helps the humans when required, while not disturbing if no help is needed.

Large Language Models for Multi-Modal Human-Robot Interaction

Jan 26, 2024

Abstract: This paper presents an innovative large language model (LLM)-based robotic system for enhancing multi-modal human-robot interaction (HRI). Traditional HRI systems relied on complex designs for intent estimation, reasoning, and behavior generation, which were resource-intensive. In contrast, our system empowers researchers and practitioners to regulate robot behavior through three key aspects: providing high-level linguistic guidance, creating "atomics" for actions and expressions the robot can use, and offering a set of examples. Implemented on a physical robot, it demonstrates proficiency in adapting to multi-modal inputs and determining the appropriate manner of action to assist humans with its arms, following researchers' defined guidelines. Simultaneously, it coordinates the robot's lid, neck, and ear movements with speech output to produce dynamic, multi-modal expressions. This showcases the system's potential to revolutionize HRI by shifting from conventional, manual state-and-flow design methods to an intuitive, guidance-based, and example-driven approach.

CoPAL: Corrective Planning of Robot Actions with Large Language Models

Oct 11, 2023

Abstract: In the pursuit of fully autonomous robotic systems capable of taking over tasks traditionally performed by humans, the complexity of open-world environments poses a considerable challenge. Addressing this imperative, this study contributes to the field of Large Language Models (LLMs) applied to task and motion planning for robots. We propose a system architecture that orchestrates a seamless interplay between multiple cognitive levels, encompassing reasoning, planning, and motion generation. At its core lies a novel replanning strategy that handles physically grounded, logical, and semantic errors in the generated plans. We demonstrate the efficacy of the proposed feedback architecture, particularly its impact on executability, correctness, and time complexity via empirical evaluation in the context of a simulation and two intricate real-world scenarios: blocks world, barman and pizza preparation.

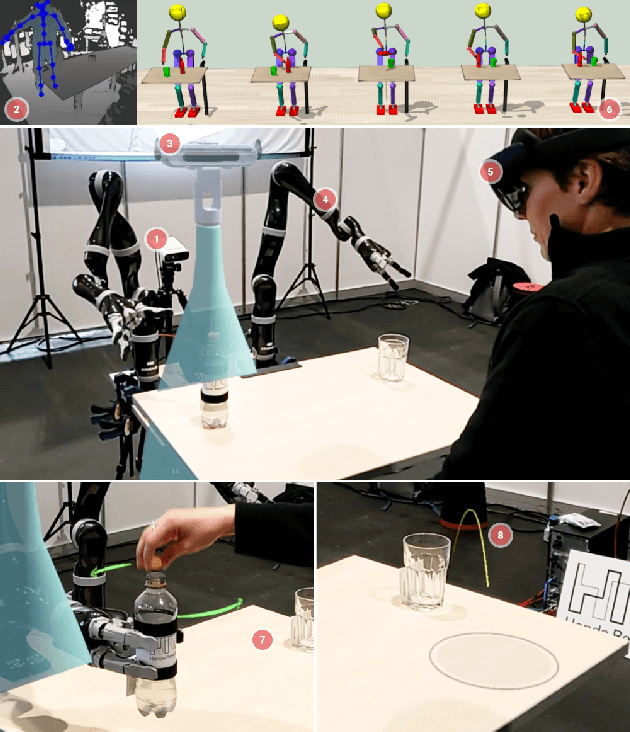

Communicating Robot's Intentions while Assisting Users via Augmented Reality

Aug 21, 2023

Abstract: This paper explores the challenges faced by assistive robots in effectively cooperating with humans, requiring them to anticipate human behavior, predict their actions' impact, and generate understandable robot actions. The study focuses on a use-case involving a user with limited mobility needing assistance with pouring a beverage, where tasks like unscrewing a cap or reaching for objects demand coordinated support from the robot. Yet, anticipating the robot's intentions can be challenging for the user, which can hinder effective collaboration. To address this issue, we propose an innovative solution that utilizes Augmented Reality (AR) to communicate the robot's intentions and expected movements to the user, fostering a seamless and intuitive interaction.

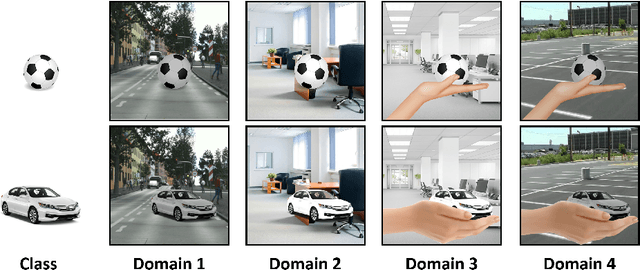

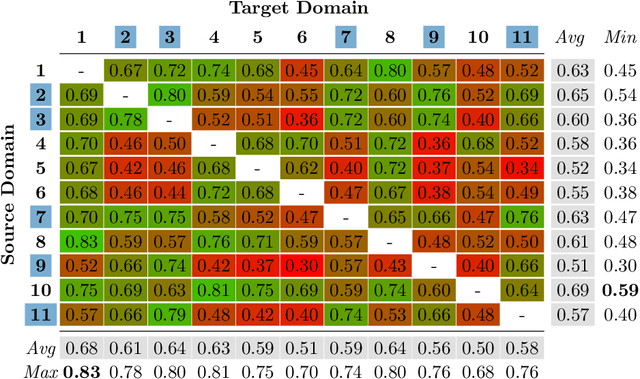

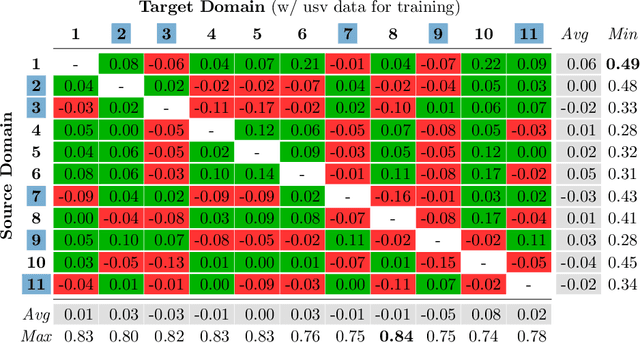

Improved Multi-Source Domain Adaptation by Preservation of Factors

Oct 16, 2020

Abstract: Domain Adaptation (DA) is a highly relevant research topic when it comes to image classification with deep neural networks. Combining multiple source domains in a sophisticated way to optimize a classification model can improve the generalization to a target domain. Here, the difference in data distributions of source and target image datasets plays a major role. In this paper, we describe, based on a theory of visual factors, how real-world scenes appear in images in general and how recent DA datasets are composed of such. We show that different domains can be described by a set of so-called domain factors, whose values are consistent within a domain but can change across domains. Many DA approaches try to remove all domain factors from the feature representation to be domain invariant. In this paper, we show that this can lead to negative transfer, since task-informative factors can get lost as well. To address this, we propose Factor-Preserving DA (FP-DA), a method to train a deep adversarial unsupervised DA model that is able to preserve specific task-relevant factors in a multi-domain scenario. We demonstrate on CORe50, a dataset with many domains, how such factors can be identified by standard one-to-one transfer experiments between single domains combined with PCA. By applying FP-DA, we show that the highest average and minimum performance can be achieved.

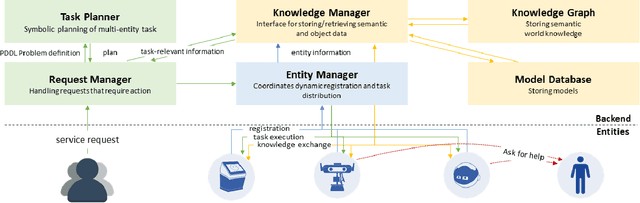

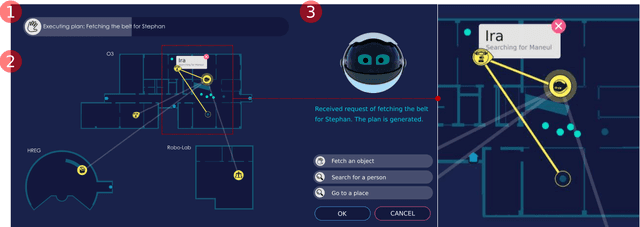

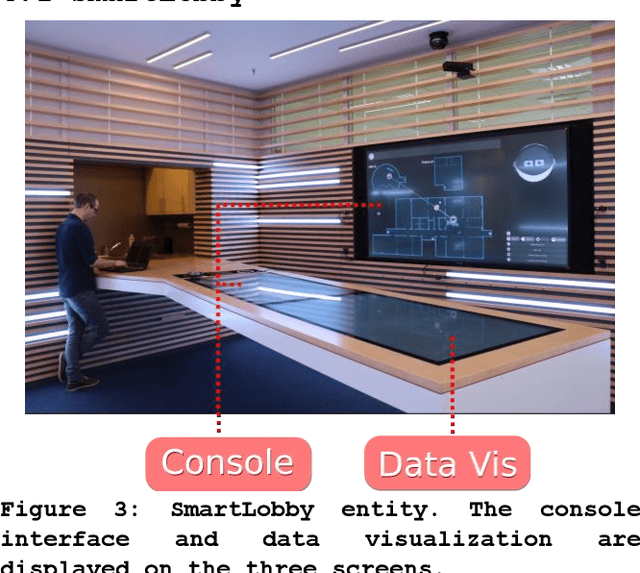

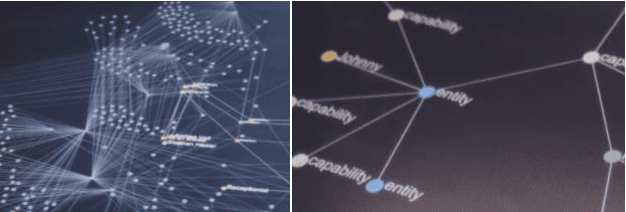

Designing Interaction for Multi-agent Cooperative System in an Office Environment

Feb 15, 2020

Abstract: Future intelligent systems will involve various types of artificial agents, such as mobile robots, smart home infrastructure or personal devices, which share data and collaborate with each other to execute certain tasks. Designing an efficient human-machine interface that supports users in expressing needs to the system, supervising the collaboration progress of different entities, and evaluating the result will be challenging. This paper presents the design and implementation of the human-machine interface of the Intelligent Cyber-Physical System (ICPS), which is a multi-entity coordination system of robots and other smart devices in a working environment. ICPS gathers sensory data from entities, receives users' commands, and then optimizes plans to utilize the capabilities of different entities to serve people. Using multi-modal interaction methods, e.g. graphical interfaces, speech interaction, gestures and facial expressions, ICPS is able to receive inputs from users through different entities, keep users aware of the progress, and accomplish the task efficiently.
