Stacked Confusion Reject Plots (SCORE)


Jun 25, 2024

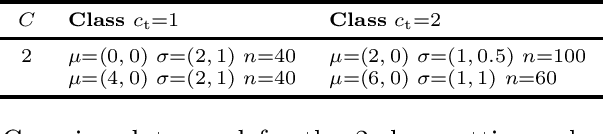

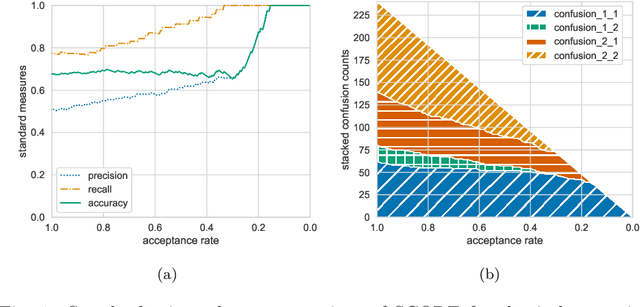

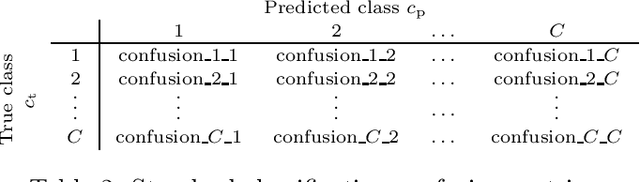

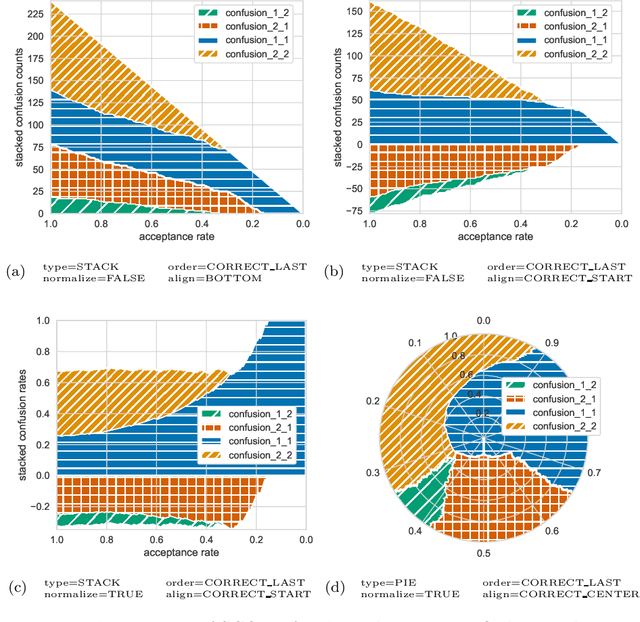

Machine learning is increasingly applied in critical areas such as healthcare and driver assistance. To minimize the risk of wrong decisions in such applications, the certainty of a classification must be taken into account so that uncertain samples can be rejected. Reject curves, which visualize the trade-off between the number of rejected samples and classification performance metrics, are an established tool for this. We argue that common reject curves are too abstract and hard for non-experts to interpret. We propose Stacked Confusion Reject Plots (SCORE), which offer a more intuitive understanding of the data used and of the classifier's behavior. We present example plots on artificial Gaussian data to document the different options of SCORE and provide the code as a Python package.
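To make the trade-off behind a reject curve concrete, the following is a minimal sketch using NumPy only. It is not the SCORE package's API: the function names `accuracy_reject_curve` and `confusion_counts_per_threshold`, the one-dimensional two-class Gaussian data, the decision boundary at zero, and the use of distance to that boundary as the certainty measure are all assumptions made for illustration. The second function tallies confusion-matrix cells among the accepted samples at each threshold, which is the kind of per-cell count a stacked plot could display.

```python
import numpy as np


def accuracy_reject_curve(y_true, y_pred, certainty, thresholds):
    """Return (reject_rates, accuracies), one entry per threshold.

    A sample is rejected when its certainty is below the threshold.
    Accuracy is computed on accepted samples only (NaN if none remain).
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    certainty = np.asarray(certainty, dtype=float)
    reject_rates, accuracies = [], []
    for t in thresholds:
        accepted = certainty >= t
        reject_rates.append(1.0 - accepted.mean())
        if accepted.any():
            accuracies.append(float((y_true[accepted] == y_pred[accepted]).mean()))
        else:
            accuracies.append(float("nan"))
    return np.array(reject_rates), np.array(accuracies)


def confusion_counts_per_threshold(y_true, y_pred, certainty, thresholds, n_classes):
    """Count accepted samples per (true class, predicted class) cell.

    Returns an integer array of shape (len(thresholds), n_classes, n_classes).
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    certainty = np.asarray(certainty, dtype=float)
    counts = np.zeros((len(thresholds), n_classes, n_classes), dtype=int)
    for i, t in enumerate(thresholds):
        accepted = certainty >= t
        # Unbuffered add so repeated (true, pred) pairs are all counted.
        np.add.at(counts[i], (y_true[accepted], y_pred[accepted]), 1)
    return counts


# Illustrative data (assumed, not taken from the paper): two 1-D Gaussians.
rng = np.random.default_rng(0)
x = np.concatenate([rng.normal(-1.0, 1.0, 500), rng.normal(1.0, 1.0, 500)])
y = np.concatenate([np.zeros(500, dtype=int), np.ones(500, dtype=int)])
pred = (x > 0).astype(int)   # hypothetical classifier: boundary at zero
cert = np.abs(x)             # hypothetical certainty: distance to the boundary
thr = np.linspace(0.0, 2.0, 5)

rates, accs = accuracy_reject_curve(y, pred, cert, thr)
counts = confusion_counts_per_threshold(y, pred, cert, thr, n_classes=2)
for t, r, a in zip(thr, rates, accs):
    print(f"threshold={t:.1f}  rejected={r:.2%}  accuracy on accepted={a:.3f}")
```

Plotting `rates` against `accs` gives a conventional accuracy-reject curve, while stacking the entries of `counts` along the threshold axis shows how many samples remain in each confusion-matrix cell as the threshold rises.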
