Stefan Mengel

Learning Model Agnostic Explanations via Constraint Programming

Nov 13, 2024Abstract:Interpretable Machine Learning faces a recurring challenge of explaining the predictions made by opaque classifiers such as ensemble models, kernel methods, or neural networks in terms that are understandable to humans. When the model is viewed as a black box, the objective is to identify a small set of features that jointly determine the black box response with minimal error. However, finding such model-agnostic explanations is computationally demanding, as the problem is intractable even for binary classifiers. In this paper, the task is framed as a Constraint Optimization Problem, where the constraint solver seeks an explanation of minimum error and bounded size for an input data instance and a set of samples generated by the black box. From a theoretical perspective, this constraint programming approach offers PAC-style guarantees for the output explanation. We evaluate the approach empirically on various datasets and show that it statistically outperforms the state-of-the-art heuristic Anchors method.

Sum of Squares Circuits

Aug 21, 2024

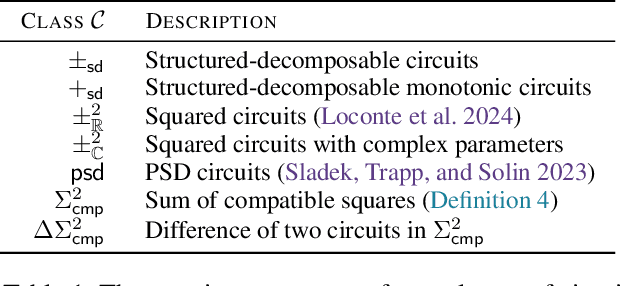

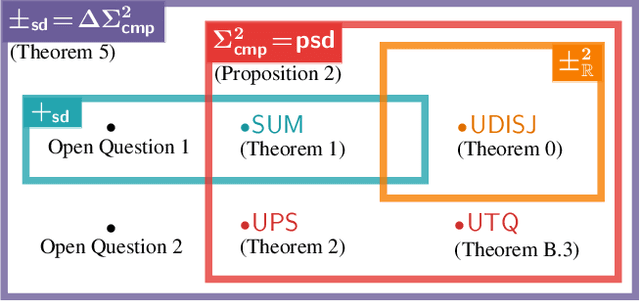

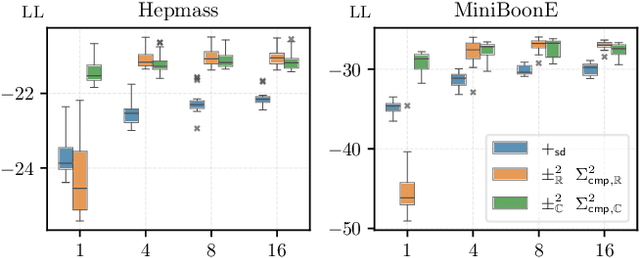

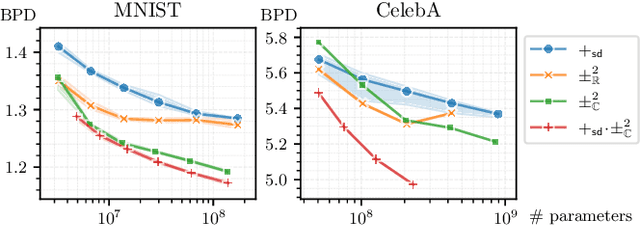

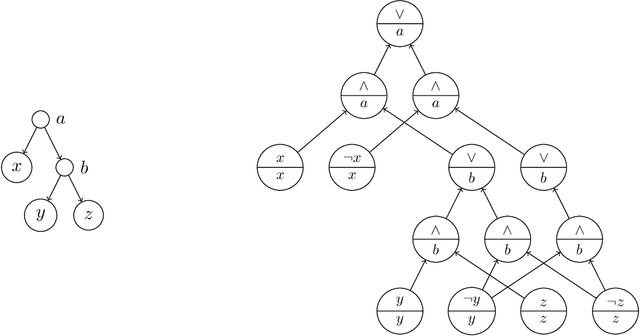

Abstract:Designing expressive generative models that support exact and efficient inference is a core question in probabilistic ML. Probabilistic circuits (PCs) offer a framework where this tractability-vs-expressiveness trade-off can be analyzed theoretically. Recently, squared PCs encoding subtractive mixtures via negative parameters have emerged as tractable models that can be exponentially more expressive than monotonic PCs, i.e., PCs with positive parameters only. In this paper, we provide a more precise theoretical characterization of the expressiveness relationships among these models. First, we prove that squared PCs can be less expressive than monotonic ones. Second, we formalize a novel class of PCs -- sum of squares PCs -- that can be exponentially more expressive than both squared and monotonic PCs. Around sum of squares PCs, we build an expressiveness hierarchy that allows us to precisely unify and separate different tractable model classes such as Born Machines and PSD models, and other recently introduced tractable probabilistic models by using complex parameters. Finally, we empirically show the effectiveness of sum of squares circuits in performing distribution estimation.

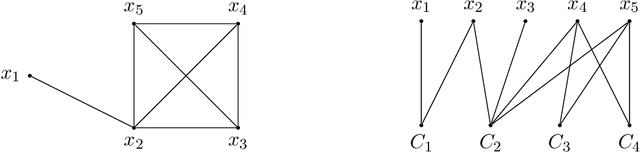

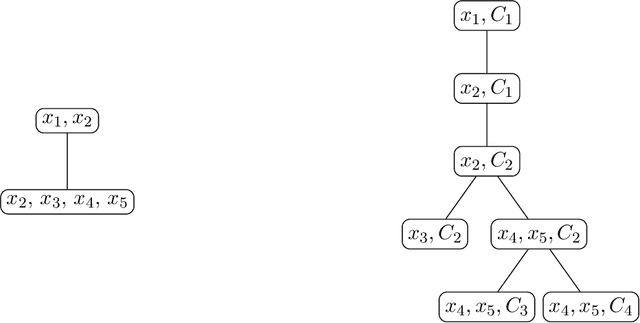

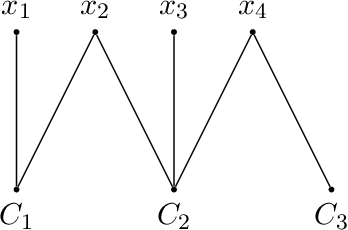

Counting Solutions to Conjunctive Queries: Structural and Hybrid Tractability

Nov 24, 2023Abstract:Counting the number of answers to conjunctive queries is a fundamental problem in databases that, under standard assumptions, does not have an efficient solution. The issue is inherently #P-hard, extending even to classes of acyclic instances. To address this, we pinpoint tractable classes by examining the structural properties of instances and introducing the novel concept of #-hypertree decomposition. We establish the feasibility of counting answers in polynomial time for classes of queries featuring bounded #-hypertree width. Additionally, employing novel techniques from the realm of fixed-parameter computational complexity, we prove that, for bounded arity queries, the bounded #-hypertree width property precisely delineates the frontier of tractability for the counting problem. This result closes an important gap in our understanding of the complexity of such a basic problem for conjunctive queries and, equivalently, for constraint satisfaction problems (CSPs). Drawing upon #-hypertree decompositions, a ''hybrid'' decomposition method emerges. This approach leverages both the structural characteristics of the query and properties intrinsic to the input database, including keys or other (weaker) degree constraints that limit the permissible combinations of values. Intuitively, these features may introduce distinct structural properties that elude identification through the ''worst-possible database'' perspective inherent in purely structural methods.

Subtractive Mixture Models via Squaring: Representation and Learning

Oct 01, 2023

Abstract:Mixture models are traditionally represented and learned by adding several distributions as components. Allowing mixtures to subtract probability mass or density can drastically reduce the number of components needed to model complex distributions. However, learning such subtractive mixtures while ensuring they still encode a non-negative function is challenging. We investigate how to learn and perform inference on deep subtractive mixtures by squaring them. We do this in the framework of probabilistic circuits, which enable us to represent tensorized mixtures and generalize several other subtractive models. We theoretically prove that the class of squared circuits allowing subtractions can be exponentially more expressive than traditional additive mixtures; and, we empirically show this increased expressiveness on a series of real-world distribution estimation tasks.

Bounds on BDD-Based Bucket Elimination

Jun 01, 2023Abstract:We study BDD-based bucket elimination, an approach to satisfiability testing using variable elimination which has seen several practical implementations in the past. We prove that it allows solving the standard pigeonhole principle formulas efficiently, when allowing different orders for variable elimination and BDD-representations, a variant of bucket elimination that was recently introduced. Furthermore, we show that this upper bound is somewhat brittle as for formulas which we get from the pigeonhole principle by restriction, i.e., fixing some of the variables, the same approach with the same variable orders has exponential runtime. We also show that the more common implementation of bucket elimination using the same order for variable elimination and the BDDs has exponential runtime for the pigeonhole principle when using either of the two orders from our upper bound, which suggests that the combination of both is the key to efficiency in the setting.

No Efficient Disjunction or Conjunction of Switch-Lists

Mar 09, 2022Abstract:It is shown that disjunction of two switch-lists can blow up the representation size exponentially. Since switch-lists can be negated without any increase in size, this shows that conjunction of switch-lists also leads to an exponential blow-up in general.

Proof Complexity of Symbolic QBF Reasoning

Apr 06, 2021

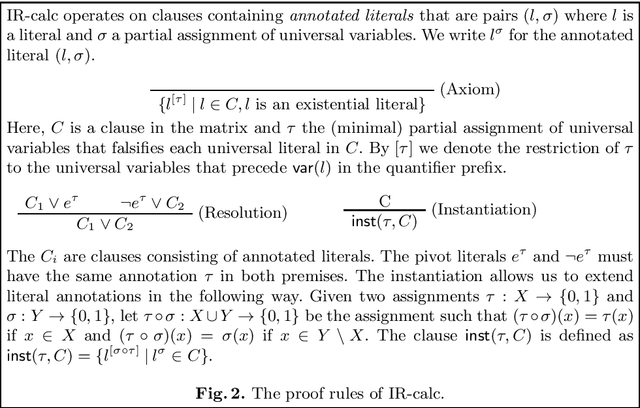

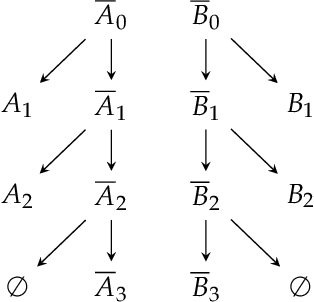

Abstract:We introduce and investigate symbolic proof systems for Quantified Boolean Formulas (QBF) operating on Ordered Binary Decision Diagrams (OBDDs). These systems capture QBF solvers that perform symbolic quantifier elimination, and as such admit short proofs of formulas of bounded path-width and quantifier complexity. As a consequence, we obtain exponential separations from standard clausal proof systems, specifically (long-distance) QU-Resolution and IR-Calc. We further develop a lower bound technique for symbolic QBF proof systems based on strategy extraction that lifts known lower bounds from communication complexity. This allows us to derive strong lower bounds against symbolic QBF proof systems that are independent of the variable ordering of the underlying OBDDs, and that hold even if the proof system is allowed access to an NP-oracle.

On Irrelevant Literals in Pseudo-Boolean Constraint Learning

Dec 08, 2020Abstract:Learning pseudo-Boolean (PB) constraints in PB solvers exploiting cutting planes based inference is not as well understood as clause learning in conflict-driven clause learning solvers. In this paper, we show that PB constraints derived using cutting planes may contain \emph{irrelevant literals}, i.e., literals whose assigned values (whatever they are) never change the truth value of the constraint. Such literals may lead to infer constraints that are weaker than they should be, impacting the size of the proof built by the solver, and thus also affecting its performance. This suggests that current implementations of PB solvers based on cutting planes should be reconsidered to prevent the generation of irrelevant literals. Indeed, detecting and removing irrelevant literals is too expensive in practice to be considered as an option (the associated problem is NP-hard.

Lower Bounds for Approximate Knowledge Compilation

Nov 27, 2020

Abstract:Knowledge compilation studies the trade-off between succinctness and efficiency of different representation languages. For many languages, there are known strong lower bounds on the representation size, but recent work shows that, for some languages, one can bypass these bounds using approximate compilation. The idea is to compile an approximation of the knowledge for which the number of errors can be controlled. We focus on circuits in deterministic decomposable negation normal form (d-DNNF), a compilation language suitable in contexts such as probabilistic reasoning, as it supports efficient model counting and probabilistic inference. Moreover, there are known size lower bounds for d-DNNF which by relaxing to approximation one might be able to avoid. In this paper we formalize two notions of approximation: weak approximation which has been studied before in the decision diagram literature and strong approximation which has been used in recent algorithmic results. We then show lower bounds for approximation by d-DNNF, complementing the positive results from the literature.

Revisiting Graph Width Measures for CNF-Encodings

May 09, 2019

Abstract:We consider bounded width CNF-formulas where the width is measured by popular graph width measures on graphs associated to CNF-formulas. Such restricted graph classes, in particular those of bounded treewidth, have been extensively studied for their uses in the design of algorithms for various computational problems on CNF-formulas. Here we consider the expressivity of these formulas in the model of clausal encodings with auxiliary variables. We first show that bounding the width for many of the measures from the literature leads to a dramatic loss of expressivity, restricting the formulas to such of low communication complexity. We then show that the width of optimal encodings with respect to different measures is strongly linked: there are two classes of width measures, one containing primal treewidth and the other incidence cliquewidth, such that in each class the width of optimal encodings only differs by constant factors. Moreover, between the two classes the width differs at most by a factor logarithmic in the number of variables. Both these results are in stark contrast to the setting without auxiliary variables where all width measures we consider here differ by more than constant factors and in many cases even by linear factors.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge