Stanley Bak

The 6th International Verification of Neural Networks Competition (VNN-COMP 2025): Summary and Results

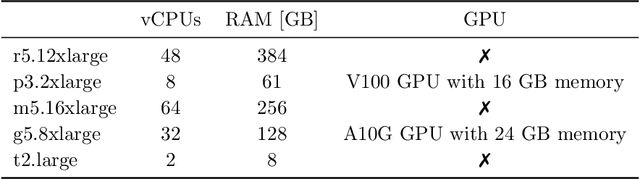

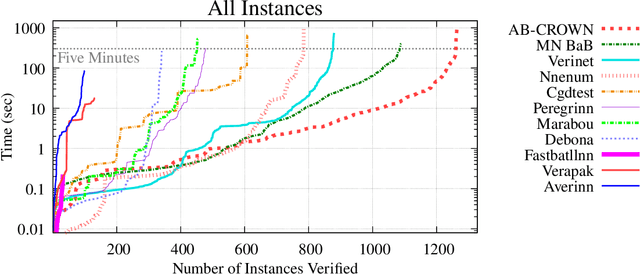

Dec 22, 2025Abstract:This report summarizes the 6th International Verification of Neural Networks Competition (VNN-COMP 2025), held as a part of the 8th International Symposium on AI Verification (SAIV), that was collocated with the 37th International Conference on Computer-Aided Verification (CAV). VNN-COMP is held annually to facilitate the fair and objective comparison of state-of-the-art neural network verification tools, encourage the standardization of tool interfaces, and bring together the neural network verification community. To this end, standardized formats for networks (ONNX) and specification (VNN-LIB) were defined, tools were evaluated on equal-cost hardware (using an automatic evaluation pipeline based on AWS instances), and tool parameters were chosen by the participants before the final test sets were made public. In the 2025 iteration, 8 teams participated on a diverse set of 16 regular and 9 extended benchmarks. This report summarizes the rules, benchmarks, participating tools, results, and lessons learned from this iteration of this competition.

The Fifth International Verification of Neural Networks Competition (VNN-COMP 2024): Summary and Results

Dec 28, 2024

Abstract:This report summarizes the 5th International Verification of Neural Networks Competition (VNN-COMP 2024), held as a part of the 7th International Symposium on AI Verification (SAIV), that was collocated with the 36th International Conference on Computer-Aided Verification (CAV). VNN-COMP is held annually to facilitate the fair and objective comparison of state-of-the-art neural network verification tools, encourage the standardization of tool interfaces, and bring together the neural network verification community. To this end, standardized formats for networks (ONNX) and specification (VNN-LIB) were defined, tools were evaluated on equal-cost hardware (using an automatic evaluation pipeline based on AWS instances), and tool parameters were chosen by the participants before the final test sets were made public. In the 2024 iteration, 8 teams participated on a diverse set of 12 regular and 8 extended benchmarks. This report summarizes the rules, benchmarks, participating tools, results, and lessons learned from this iteration of this competition.

Scalable Surrogate Verification of Image-based Neural Network Control Systems using Composition and Unrolling

May 28, 2024

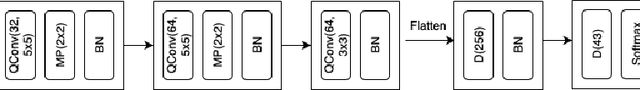

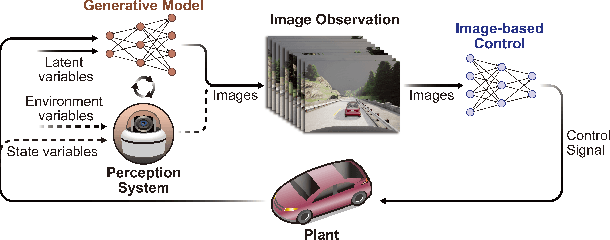

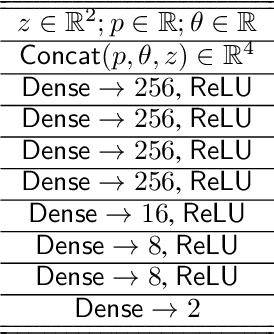

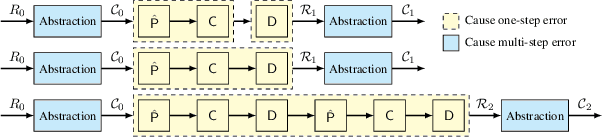

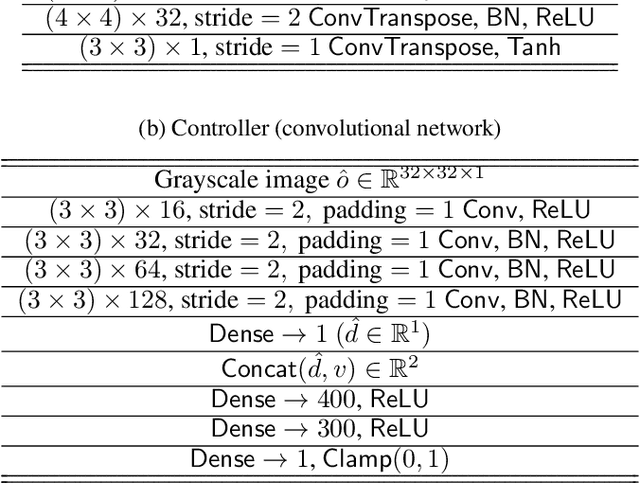

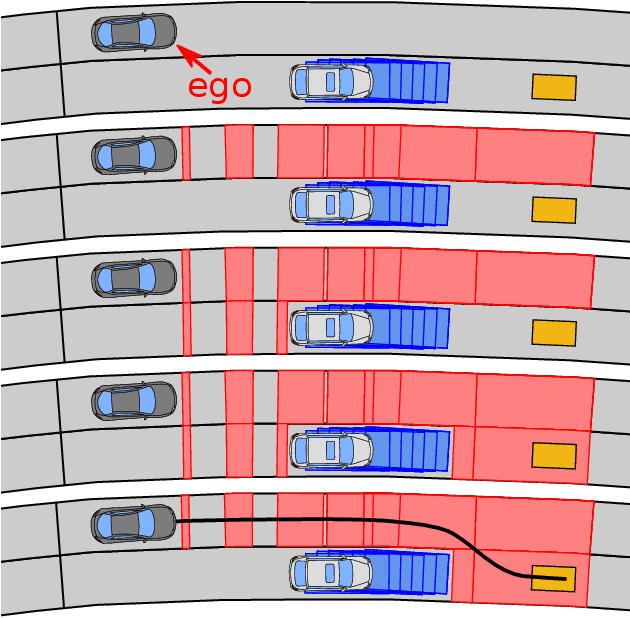

Abstract:Verifying safety of neural network control systems that use images as input is a difficult problem because, from a given system state, there is no known way to mathematically model what images are possible in the real-world. We build on recent work that considers a surrogate verification approach, training a conditional generative adversarial network (cGAN) as an image generator in place of the real world. This enables set-based formal analysis of the closed-loop system, providing analysis beyond simulation and testing. While existing work is effective on small examples, excessive overapproximation both within a single control period and across multiple control periods limits its scalability. We propose approaches to overcome these two sources of error. First, we overcome one-step error by composing the system's dynamics along with the cGAN and neural network controller, without losing the dependencies between input states and the control outputs as in the monotonic analysis of the system dynamics. Second, we reduce multi-step error by repeating the single-step composition, essentially unrolling multiple steps of the control loop into a large neural network. We then leverage existing network verification tools to compute accurate reachable sets for multiple steps, avoiding the accumulation of abstraction error at each step. We demonstrate the effectiveness of our approach in terms of both accuracy and scalability using two case studies: an autonomous aircraft taxiing system and an advanced emergency braking system. On the aircraft taxiing system, the converged reachable set is 175% larger using the prior baseline method compared with our proposed approach. On the emergency braking system, with 24x the number of image output variables from the cGAN, the baseline method fails to prove any states are safe, whereas our improvements enable set-based safety analysis.

The Fourth International Verification of Neural Networks Competition (VNN-COMP 2023): Summary and Results

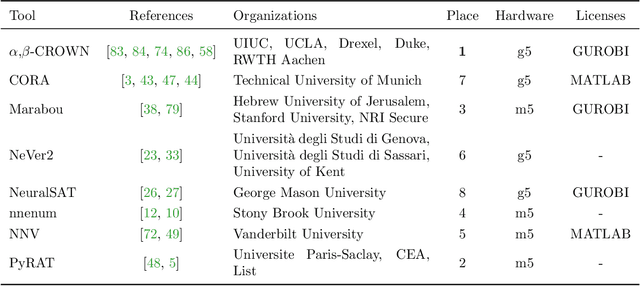

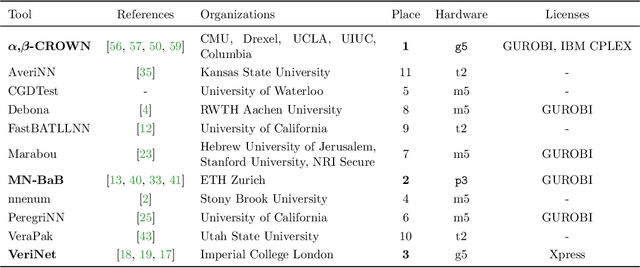

Dec 28, 2023Abstract:This report summarizes the 4th International Verification of Neural Networks Competition (VNN-COMP 2023), held as a part of the 6th Workshop on Formal Methods for ML-Enabled Autonomous Systems (FoMLAS), that was collocated with the 35th International Conference on Computer-Aided Verification (CAV). VNN-COMP is held annually to facilitate the fair and objective comparison of state-of-the-art neural network verification tools, encourage the standardization of tool interfaces, and bring together the neural network verification community. To this end, standardized formats for networks (ONNX) and specification (VNN-LIB) were defined, tools were evaluated on equal-cost hardware (using an automatic evaluation pipeline based on AWS instances), and tool parameters were chosen by the participants before the final test sets were made public. In the 2023 iteration, 7 teams participated on a diverse set of 10 scored and 4 unscored benchmarks. This report summarizes the rules, benchmarks, participating tools, results, and lessons learned from this iteration of this competition.

Real-Time Capable Decision Making for Autonomous Driving Using Reachable Sets

Sep 21, 2023

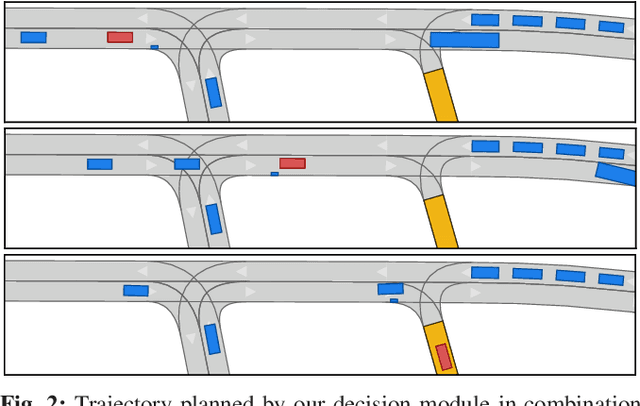

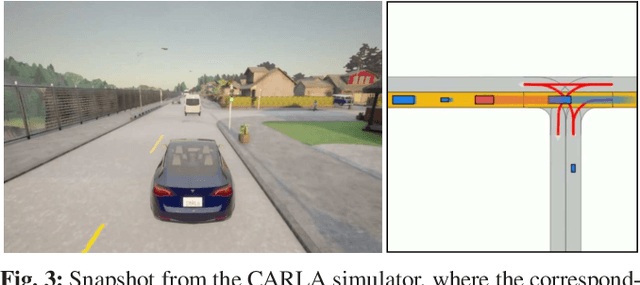

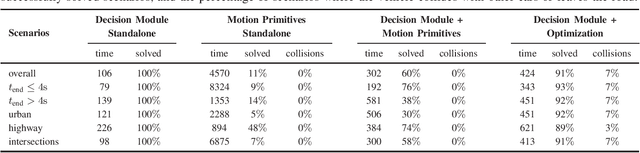

Abstract:Despite large advances in recent years, real-time capable motion planning for autonomous road vehicles remains a huge challenge. In this work, we present a decision module that is based on set-based reachability analysis: First, we identify all possible driving corridors by computing the reachable set for the longitudinal position of the vehicle along the lanelets of the road network, where lane changes are modeled as discrete events. Next, we select the best driving corridor based on a cost function that penalizes lane changes and deviations from a desired velocity profile. Finally, we generate a reference trajectory inside the selected driving corridor, which can be used to guide or warm start low-level trajectory planners. For the numerical evaluation we combine our decision module with a motion-primitive-based and an optimization-based planner and evaluate the performance on 2000 challenging CommonRoad traffic scenarios as well in the realistic CARLA simulator. The results demonstrate that our decision module is real-time capable and yields significant speed-ups compared to executing a motion planner standalone without a decision module.

First Three Years of the International Verification of Neural Networks Competition (VNN-COMP)

Jan 14, 2023Abstract:This paper presents a summary and meta-analysis of the first three iterations of the annual International Verification of Neural Networks Competition (VNN-COMP) held in 2020, 2021, and 2022. In the VNN-COMP, participants submit software tools that analyze whether given neural networks satisfy specifications describing their input-output behavior. These neural networks and specifications cover a variety of problem classes and tasks, corresponding to safety and robustness properties in image classification, neural control, reinforcement learning, and autonomous systems. We summarize the key processes, rules, and results, present trends observed over the last three years, and provide an outlook into possible future developments.

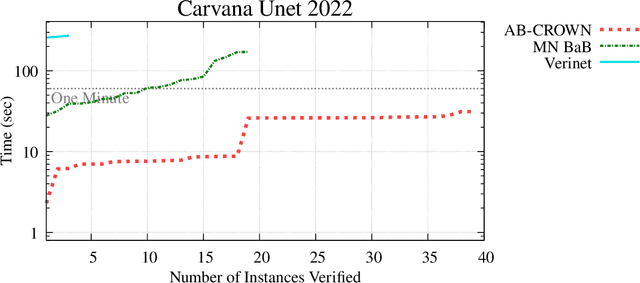

The Third International Verification of Neural Networks Competition (VNN-COMP 2022): Summary and Results

Dec 20, 2022

Abstract:This report summarizes the 3rd International Verification of Neural Networks Competition (VNN-COMP 2022), held as a part of the 5th Workshop on Formal Methods for ML-Enabled Autonomous Systems (FoMLAS), which was collocated with the 34th International Conference on Computer-Aided Verification (CAV). VNN-COMP is held annually to facilitate the fair and objective comparison of state-of-the-art neural network verification tools, encourage the standardization of tool interfaces, and bring together the neural network verification community. To this end, standardized formats for networks (ONNX) and specification (VNN-LIB) were defined, tools were evaluated on equal-cost hardware (using an automatic evaluation pipeline based on AWS instances), and tool parameters were chosen by the participants before the final test sets were made public. In the 2022 iteration, 11 teams participated on a diverse set of 12 scored benchmarks. This report summarizes the rules, benchmarks, participating tools, results, and lessons learned from this iteration of this competition.

Provable Fairness for Neural Network Models using Formal Verification

Dec 16, 2022

Abstract:Machine learning models are increasingly deployed for critical decision-making tasks, making it important to verify that they do not contain gender or racial biases picked up from training data. Typical approaches to achieve fairness revolve around efforts to clean or curate training data, with post-hoc statistical evaluation of the fairness of the model on evaluation data. In contrast, we propose techniques to \emph{prove} fairness using recently developed formal methods that verify properties of neural network models.Beyond the strength of guarantee implied by a formal proof, our methods have the advantage that we do not need explicit training or evaluation data (which is often proprietary) in order to analyze a given trained model. In experiments on two familiar datasets in the fairness literature (COMPAS and ADULTS), we show that through proper training, we can reduce unfairness by an average of 65.4\% at a cost of less than 1\% in AUC score.

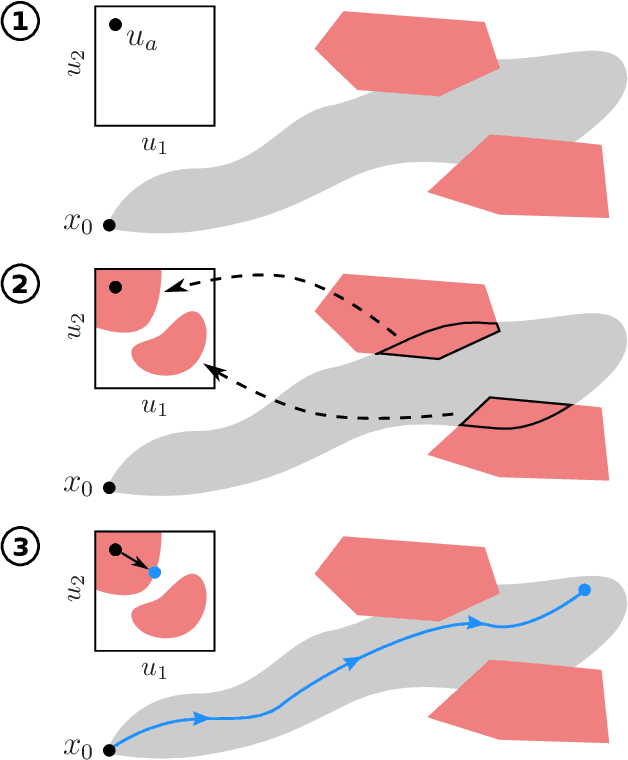

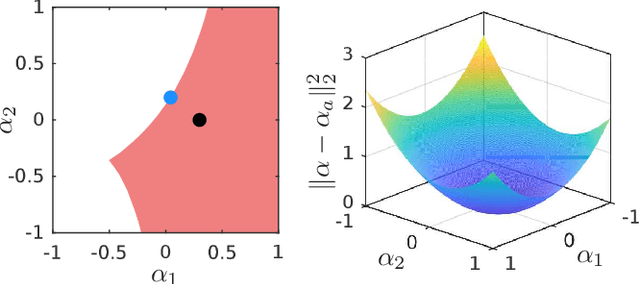

Provably Safe Reinforcement Learning via Action Projection using Reachability Analysis and Polynomial Zonotopes

Oct 19, 2022

Abstract:While reinforcement learning produces very promising results for many applications, its main disadvantage is the lack of safety guarantees, which prevents its use in safety-critical systems. In this work, we address this issue by a safety shield for nonlinear continuous systems that solve reach-avoid tasks. Our safety shield prevents applying potentially unsafe actions from a reinforcement learning agent by projecting the proposed action to the closest safe action. This approach is called action projection and is implemented via mixed-integer optimization. The safety constraints for action projection are obtained by applying parameterized reachability analysis using polynomial zonotopes, which enables to accurately capture the nonlinear effects of the actions on the system. In contrast to other state of the art approaches for action projection, our safety shield can efficiently handle input constraints and dynamic obstacles, eases incorporation of the spatial robot dimensions into the safety constraints, guarantees robust safety despite process noise and measurement errors, and is well suited for high-dimensional systems, as we demonstrate on several challenging benchmark systems.

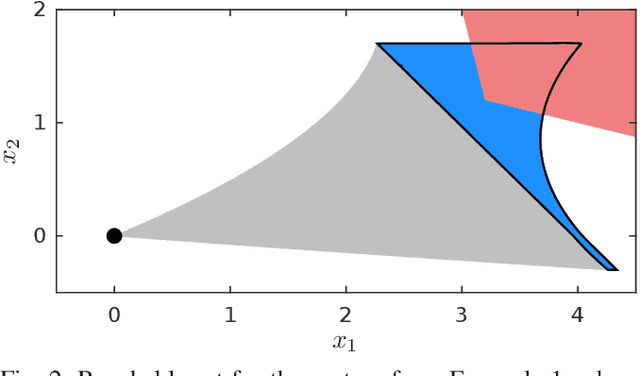

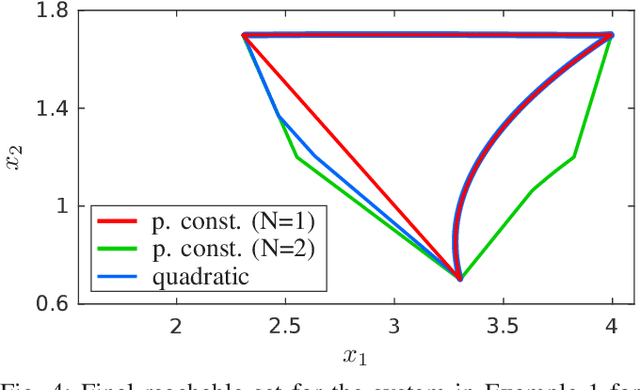

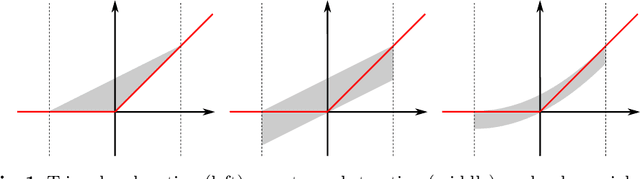

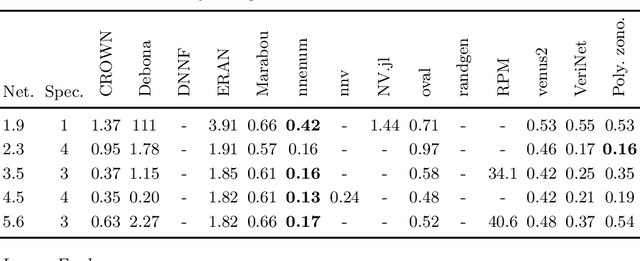

Open- and Closed-Loop Neural Network Verification using Polynomial Zonotopes

Jul 06, 2022

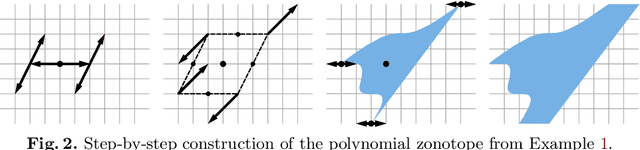

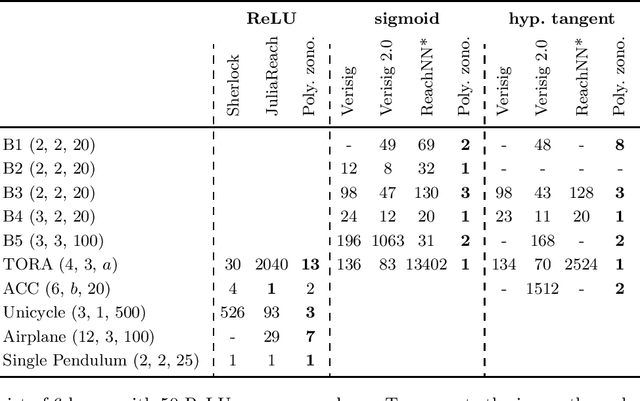

Abstract:We present a novel approach to efficiently compute tight non-convex enclosures of the image through neural networks with ReLU, sigmoid, or hyperbolic tangent activation functions. In particular, we abstract the input-output relation of each neuron by a polynomial approximation, which is evaluated in a set-based manner using polynomial zonotopes. Our proposed method is especially well suited for reachability analysis of neural network controlled systems since polynomial zonotopes are able to capture the non-convexity in both, the image through the neural network as well as the reachable set. We demonstrate the superior performance of our approach compared to other state of the art methods on various benchmark systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge