Hoang-Dung Tran

A Transition System Abstraction Framework for Neural Network Dynamical System Models

Feb 18, 2024

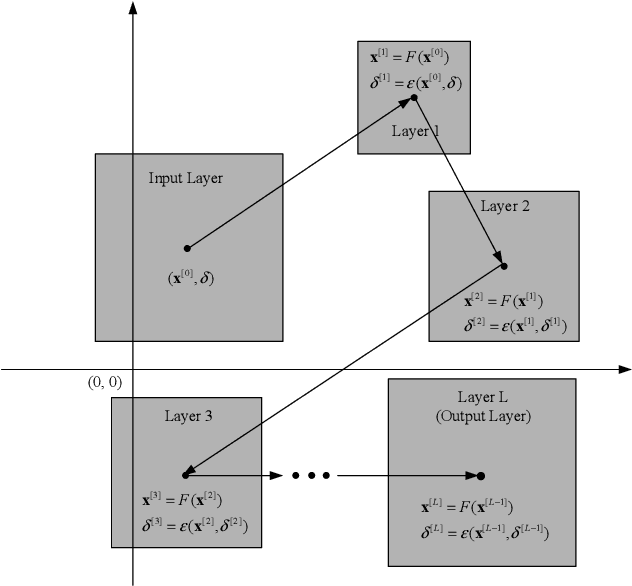

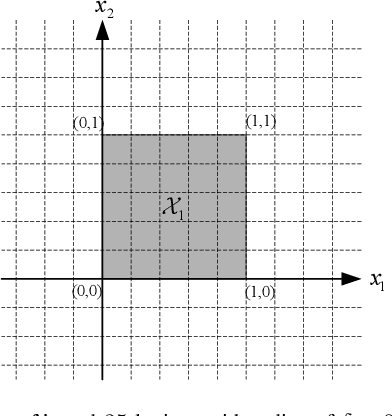

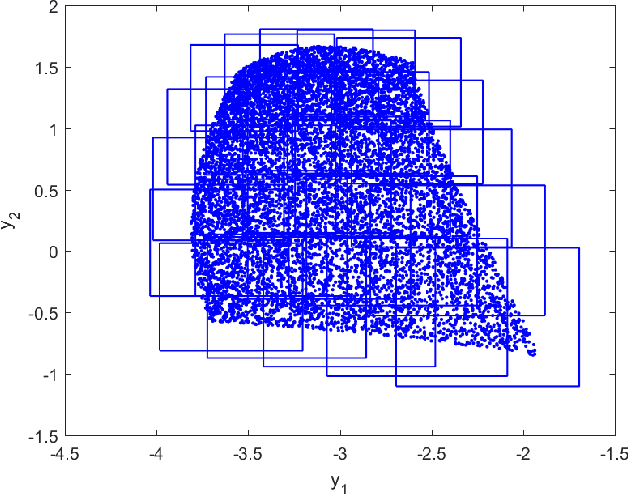

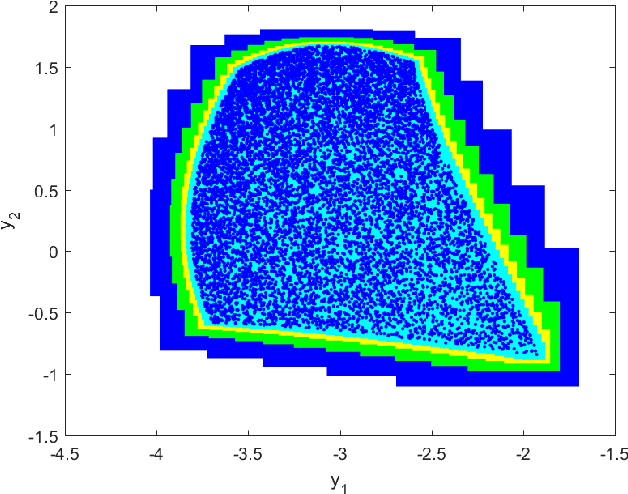

Abstract: This paper proposes a transition system abstraction framework for neural network dynamical system models to enhance model interpretability, with applications to complex dynamical systems such as human behavior learning and verification. First, the localized working zone is segmented into multiple localized partitions using the data-driven Maximum Entropy (ME) partitioning method. Then, the transition matrix is obtained from set-valued reachability analysis of the neural network. Finally, applications to human handwriting dynamics learning and verification validate the proposed abstraction framework and demonstrate that it enhances the interpretability of the black-box model: the framework abstracts a data-driven neural network model into a transition system, making the model interpretable through verification of specifications expressed in Computation Tree Logic (CTL).
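The abstraction pipeline described in this abstract (partition the state space, compute cell-to-cell transitions by reachability, then check CTL properties on the result) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the one-neuron `step` model, the uniform grid standing in for ME partitioning, the Boolean (rather than weighted) transition matrix, and the helper names `reach`, `transition_matrix`, and `ef`.

```python
def step(x):
    """Hypothetical stand-in for a learned one-step model: a single ReLU neuron."""
    return max(0.0, 0.5 * x + 0.1)


def reach(lo, hi):
    """Interval image of [lo, hi]; exact here because step is monotone increasing."""
    return step(lo), step(hi)


def transition_matrix(edges):
    """T[i][j] = 1 if the reachable set of cell i intersects cell j."""
    n = len(edges) - 1
    T = [[0] * n for _ in range(n)]
    for i in range(n):
        img_lo, img_hi = reach(edges[i], edges[i + 1])
        for j in range(n):
            # Closed-interval overlap test between the image and cell j.
            if img_lo <= edges[j + 1] and img_hi >= edges[j]:
                T[i][j] = 1
    return T


def ef(T, target):
    """Cells satisfying the CTL formula EF target (backward least fixed point)."""
    sat = set(target)
    changed = True
    while changed:
        changed = False
        for i, row in enumerate(T):
            if i not in sat and any(row[j] for j in sat):
                sat.add(i)
                changed = True
    return sat


# Uniform partition of [0, 1] into four cells (assumption: ME partitioning
# would choose the cell boundaries from data instead).
edges = [i / 4 for i in range(5)]
T = transition_matrix(edges)

can_reach_cell0 = ef(T, {0})                    # EF cell0
ag_avoid_cell3 = set(range(4)) - ef(T, {3})     # AG (not cell3) = not EF cell3
```

Because each reachable set over-approximates the true image of its cell, a universal property such as `AG (not cell3)` that holds on the abstraction also holds for the underlying model, while existential results such as `EF cell0` may include spurious paths.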

Neural Network Compression of ACAS Xu is Unsafe: Closed-Loop Verification through Quantized State Backreachability

Jan 26, 2022

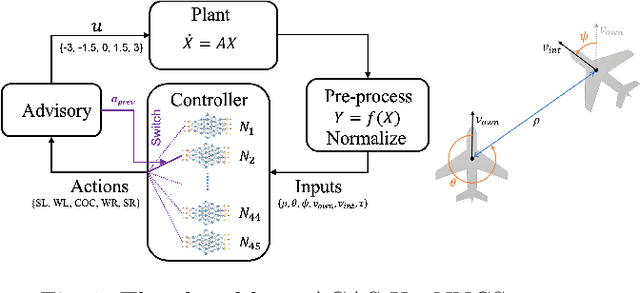

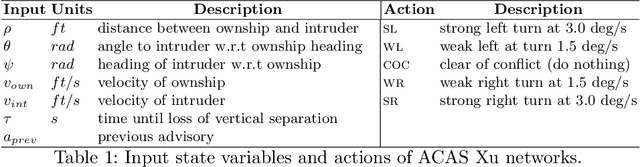

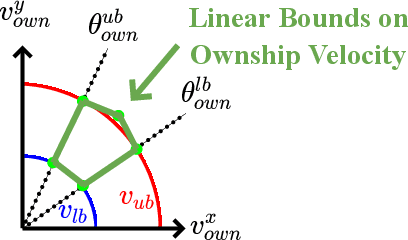

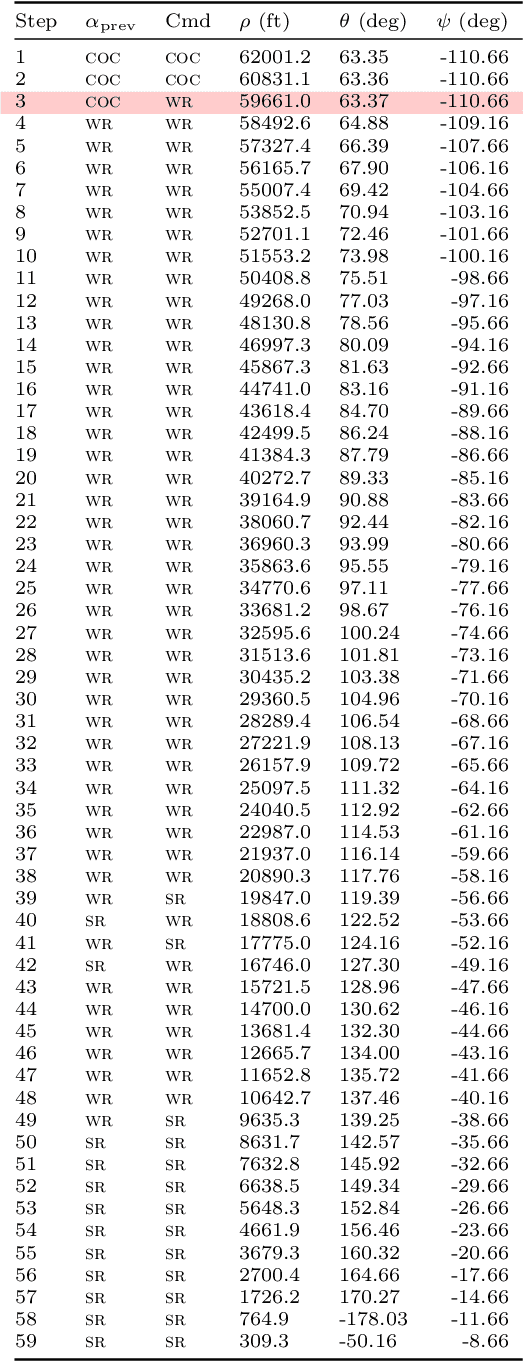

Abstract: ACAS Xu is an air-to-air collision avoidance system designed for unmanned aircraft that issues horizontal turn advisories to avoid an intruder aircraft. Due to the use of a large lookup table in the design, a neural network compression of the policy was proposed. Analysis of this system has spurred a significant body of research in the formal methods community on neural network verification. While many powerful methods have been developed, most work focuses on open-loop properties of the networks, rather than the main point of the system -- collision avoidance -- which requires closed-loop analysis. In this work, we develop a technique to verify a closed-loop approximation of ACAS Xu using state quantization and backreachability. We use favorable assumptions for the analysis -- perfect sensor information, instant following of advisories, ideal aircraft maneuvers and an intruder that only flies straight. When the method fails to prove the system is safe, we refine the quantization parameters until generating counterexamples where the original (non-quantized) system also has collisions.

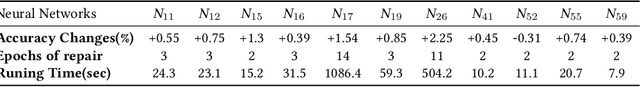

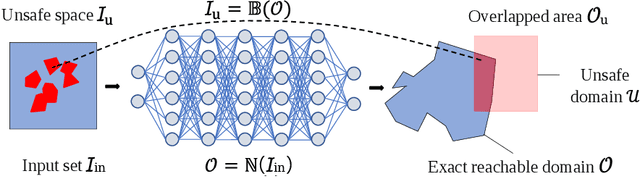

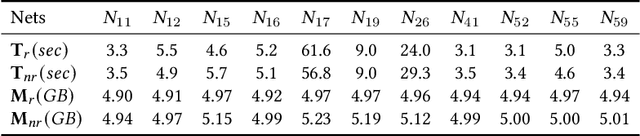

Neural Network Repair with Reachability Analysis

Aug 09, 2021

Abstract: Safety is a critical concern for the next generation of autonomy that is likely to rely heavily on deep neural networks for perception and control. Formally verifying the safety and robustness of well-trained DNNs and learning-enabled systems under attacks, model uncertainties, and sensing errors is essential for safe autonomy. This research proposes a framework to repair unsafe DNNs in safety-critical systems with reachability analysis. The repair process is inspired by adversarial training which has demonstrated high effectiveness in improving the safety and robustness of DNNs. Different from traditional adversarial training approaches where adversarial examples are utilized from random attacks and may not be representative of all unsafe behaviors, our repair process uses reachability analysis to compute the exact unsafe regions and identify sufficiently representative examples to enhance the efficacy and efficiency of the adversarial training. The performance of our framework is evaluated on two types of benchmarks without safe models as references. One is a DNN controller for aircraft collision avoidance with access to training data. The other is a rocket lander where our framework can be seamlessly integrated with the well-known deep deterministic policy gradient (DDPG) reinforcement learning algorithm. The experimental results show that our framework can successfully repair all instances on multiple safety specifications with negligible performance degradation. In addition, to increase the computational and memory efficiency of the reachability analysis algorithm, we propose a depth-first-search algorithm that combines an existing exact analysis method with an over-approximation approach based on a new set representation. Experimental results show that our method achieves a five-fold improvement in runtime and a two-fold improvement in memory usage compared to exact analysis.

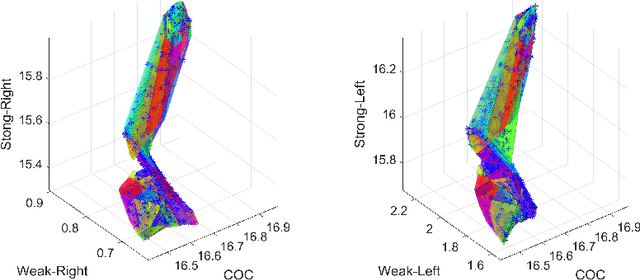

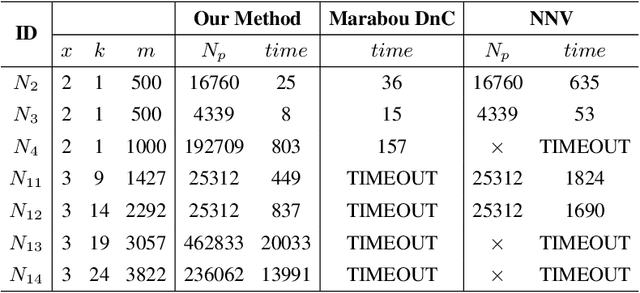

Reachability Analysis of Convolutional Neural Networks

Jun 22, 2021

Abstract: Deep convolutional neural networks have been widely employed as an effective technique to handle complex and practical problems. However, one of the fundamental problems is the lack of formal methods to analyze their behavior. To address this challenge, we propose an approach to compute the exact reachable sets of a network given an input domain, where the reachable set is represented by the face lattice structure. Besides the computation of reachable sets, our approach is also capable of backtracking to the input domain given an output reachable set. Therefore, a full analysis of a network's behavior can be realized. In addition, an approach for fast analysis is also introduced, which conducts fast computation of reachable sets by considering selected sensitive neurons in each layer. The exact pixel-level reachability analysis method is evaluated on a CNN for the CIFAR10 dataset and compared to related works. The fast analysis method is evaluated on a CNN for the CIFAR10 dataset and on the VGG16 architecture for the ImageNet dataset.

Verification of Deep Convolutional Neural Networks Using ImageStars

May 14, 2020

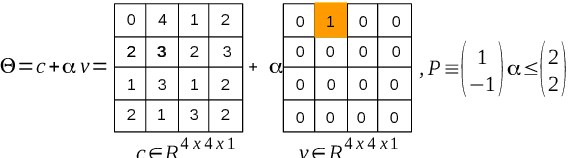

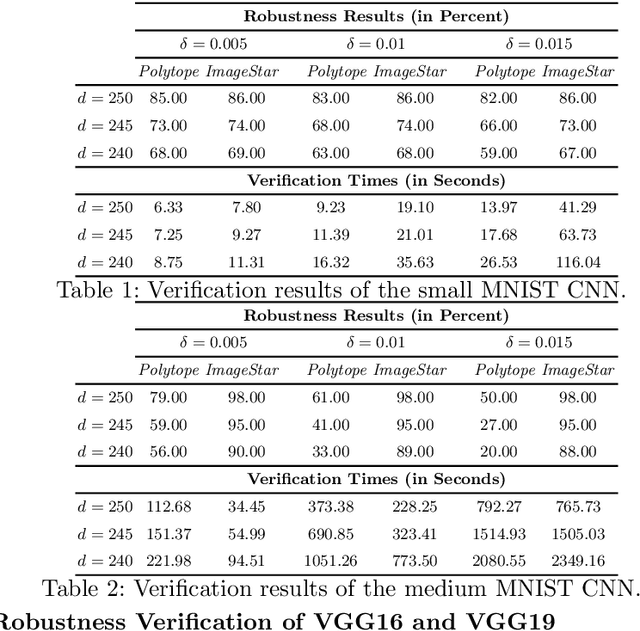

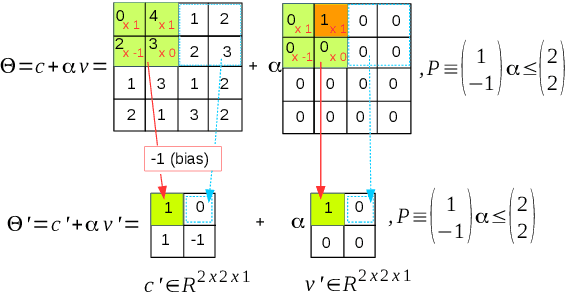

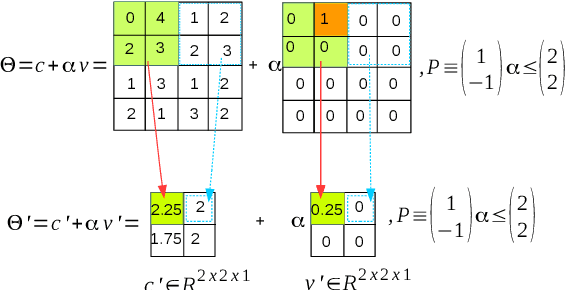

Abstract: Convolutional Neural Networks (CNNs) have redefined the state-of-the-art in many real-world applications, such as facial recognition, image classification, human pose estimation, and semantic segmentation. Despite their success, CNNs are vulnerable to adversarial attacks, where slight changes to their inputs may lead to sharp changes in their output, even in well-trained networks. Set-based analysis methods can detect or prove the absence of bounded adversarial attacks, which can then be used to evaluate the effectiveness of neural network training methodology. Unfortunately, existing verification approaches have limited scalability in terms of the size of networks that can be analyzed. In this paper, we describe a set-based framework that successfully deals with real-world CNNs, such as VGG16 and VGG19, that have high accuracy on ImageNet. Our approach is based on a new set representation called the ImageStar, which enables efficient exact and over-approximative analysis of CNNs. ImageStars perform efficient set-based analysis by combining operations on concrete images with linear programming (LP). Our approach is implemented in a tool called NNV, and can verify the robustness of VGG networks with respect to a small set of input states, derived from adversarial attacks, such as the DeepFool attack. The experimental results show that our approach is less conservative and faster than existing zonotope methods, such as those used in DeepZ, and the polytope method used in DeepPoly.

NNV: The Neural Network Verification Tool for Deep Neural Networks and Learning-Enabled Cyber-Physical Systems

Apr 12, 2020

Abstract: This paper presents the Neural Network Verification (NNV) software tool, a set-based verification framework for deep neural networks (DNNs) and learning-enabled cyber-physical systems (CPS). The crux of NNV is a collection of reachability algorithms that make use of a variety of set representations, such as polyhedra, star sets, zonotopes, and abstract-domain representations. NNV supports both exact (sound and complete) and over-approximate (sound) reachability algorithms for verifying safety and robustness properties of feed-forward neural networks (FFNNs) with various activation functions. For learning-enabled CPS, such as closed-loop control systems incorporating neural networks, NNV provides exact and over-approximate reachability analysis schemes for linear plant models and FFNN controllers with piecewise-linear activation functions, such as ReLUs. For similar neural network control systems (NNCS) that instead have nonlinear plant models, NNV supports over-approximate analysis by combining the star set analysis used for FFNN controllers with zonotope-based analysis for nonlinear plant dynamics building on CORA. We evaluate NNV using two real-world case studies: the first is safety verification of ACAS Xu networks and the second deals with the safety verification of a deep learning-based adaptive cruise control system.

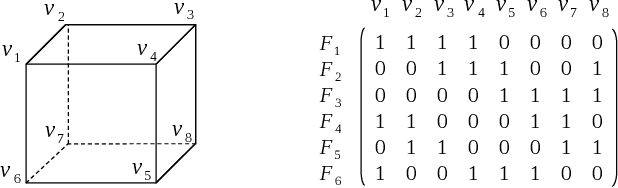

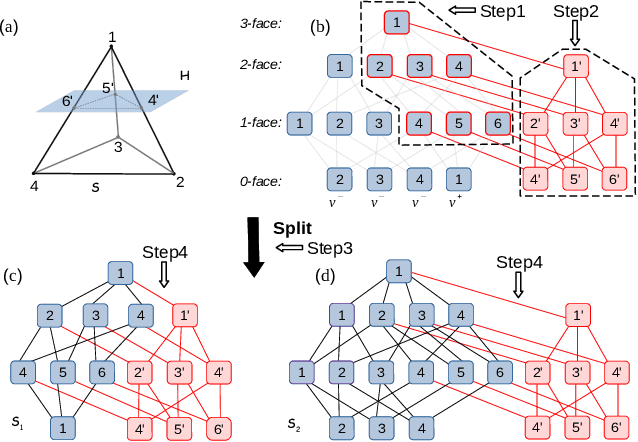

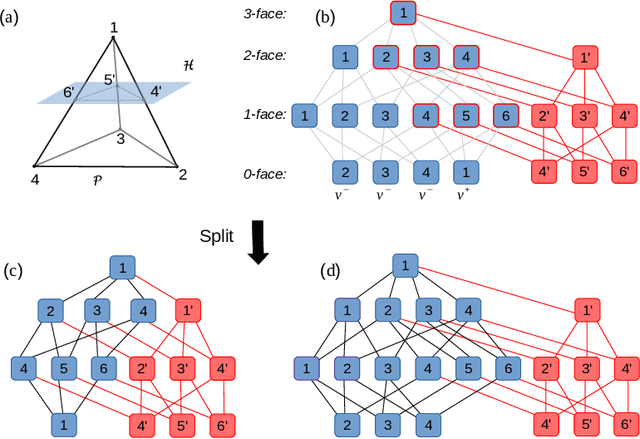

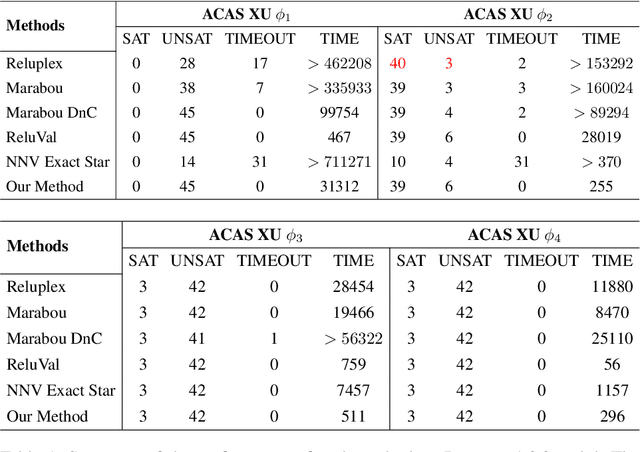

Reachability Analysis for Feed-Forward Neural Networks using Face Lattices

Mar 02, 2020

Abstract: Deep neural networks have been widely applied as an effective approach to handle complex and practical problems. However, one of the most fundamental open problems is the lack of formal methods to analyze the safety of their behaviors. To address this challenge, we propose a parallelizable technique to compute the exact reachable sets of a neural network for a given input set. Our method currently focuses on feed-forward neural networks with ReLU activation functions. One of the primary challenges for polytope-based approaches is identifying the intersection between intermediate polytopes and hyperplanes from neurons. In this regard, we present a new approach to construct the polytopes with the face lattice, a complete combinatorial structure. The correctness and performance of our methodology are evaluated by verifying the safety of ACAS Xu networks and other benchmarks. Compared to state-of-the-art methods such as Reluplex, Marabou, and NNV, our approach exhibits significantly higher efficiency. Additionally, our approach is capable of constructing the complete input set given an output set, so that any input that leads to a safety violation can be tracked.

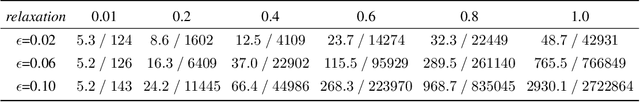

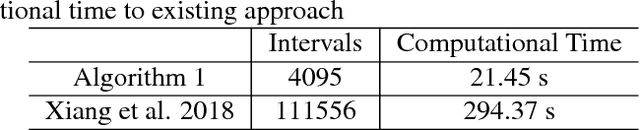

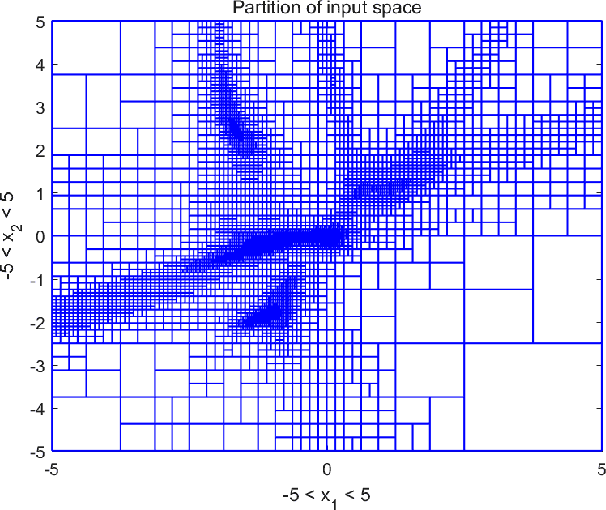

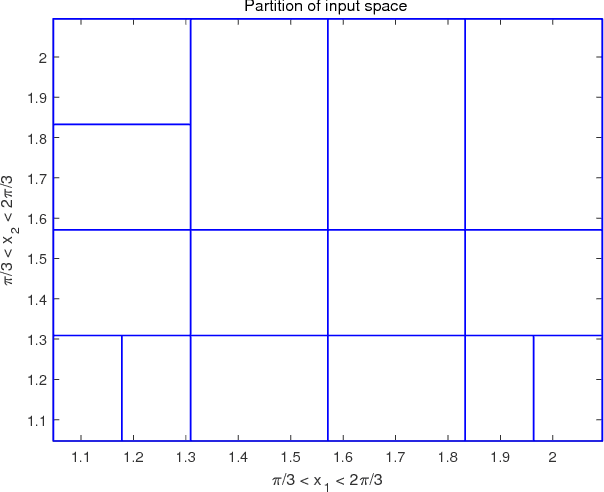

Specification-Guided Safety Verification for Feedforward Neural Networks

Dec 14, 2018

Abstract: This paper presents a specification-guided safety verification method for feedforward neural networks with general activation functions. As such feedforward networks are memoryless, they can be abstractly represented as mathematical functions, and the reachability analysis of the neural network amounts to interval analysis problems. In the framework of interval analysis, a computationally efficient formula that quickly computes the output interval sets of a neural network is developed. Then, a specification-guided reachability algorithm is developed. Specifically, the bisection process in the verification algorithm is completely guided by a given safety specification. Because the safety specification is used in this way, unnecessary computations are avoided and the computational cost can be reduced significantly. Experiments show that the proposed method is much more efficient in safety verification, with significantly lower computational cost.
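The idea of letting the safety specification drive the bisection can be sketched in a few lines. This is only an illustration under stated assumptions, not the paper's algorithm or formula: the two-layer tanh network, the property "first output at most `y_max`", the widest-dimension splitting rule, and the names `affine_bounds`, `network_bounds`, and `verify` are all hypothetical, and the activations are assumed to be monotone increasing.

```python
import math


def identity(v):
    return v


def affine_bounds(W, b, lo, hi):
    """Interval image of x -> W x + b over the box [lo, hi]."""
    out_lo, out_hi = [], []
    for row, bias in zip(W, b):
        l = h = bias
        for w, xl, xh in zip(row, lo, hi):
            # A non-negative weight keeps the bound order; a negative one swaps it.
            l += w * xl if w >= 0 else w * xh
            h += w * xh if w >= 0 else w * xl
        out_lo.append(l)
        out_hi.append(h)
    return out_lo, out_hi


def network_bounds(layers, lo, hi):
    """Propagate a box through (W, b, activation) layers with monotone activations."""
    for W, b, act in layers:
        lo, hi = affine_bounds(W, b, lo, hi)
        lo, hi = [act(v) for v in lo], [act(v) for v in hi]
    return lo, hi


def verify(layers, lo, hi, y_max, min_width=0.01):
    """Check output[0] <= y_max on the box; returns 'safe', 'unsafe' or 'unknown'."""
    out_lo, out_hi = network_bounds(layers, lo, hi)
    if out_hi[0] <= y_max:
        return "safe"      # bound already proves the property: no bisection needed
    if out_lo[0] > y_max:
        return "unsafe"    # every point of this box violates the property
    # Inconclusive: bisect the widest input dimension, unless the box is tiny.
    k = max(range(len(lo)), key=lambda i: hi[i] - lo[i])
    if hi[k] - lo[k] < min_width:
        return "unknown"
    mid = 0.5 * (lo[k] + hi[k])
    left = verify(layers, lo, hi[:k] + [mid] + hi[k + 1:], y_max, min_width)
    if left == "unsafe":
        return "unsafe"
    right = verify(layers, lo[:k] + [mid] + lo[k + 1:], hi, y_max, min_width)
    if right == "unsafe":
        return "unsafe"
    return "safe" if left == right == "safe" else "unknown"


# Hypothetical network: y = tanh(x1 - x2) + tanh(0.5 * (x1 + x2)) on [-1, 1]^2.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], math.tanh),
    ([[1.0, 1.0]], [0.0], identity),
]
box_lo, box_hi = [-1.0, -1.0], [1.0, 1.0]

coarse = network_bounds(layers, box_lo, box_hi)           # one-shot interval bound
result_loose = verify(layers, box_lo, box_hi, y_max=1.5)  # provable after bisection
result_tight = verify(layers, box_lo, box_hi, y_max=1.0)  # violated on a sub-box
```

Sub-boxes whose interval bound already settles the property are never split further, so refinement effort concentrates on the regions where the specification is close to being violated.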

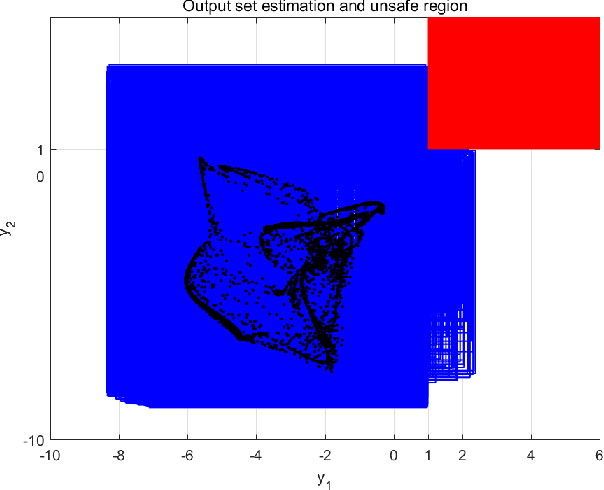

Output Reachable Set Estimation and Verification for Multi-Layer Neural Networks

Feb 20, 2018

Abstract: In this paper, the output reachable set estimation and safety verification problems for multi-layer perceptron neural networks are addressed. First, a concept called maximum sensitivity is introduced and, for a class of multi-layer perceptrons whose activation functions are monotonic functions, the maximum sensitivity can be computed via solving convex optimization problems. Then, using a simulation-based method, the output reachable set estimation problem for neural networks is formulated into a chain of optimization problems. Finally, an automated safety verification is developed based on the output reachable set estimation result. An application to the safety verification for a robotic arm model with two joints is presented to show the effectiveness of the proposed approaches.

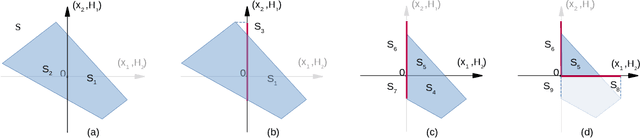

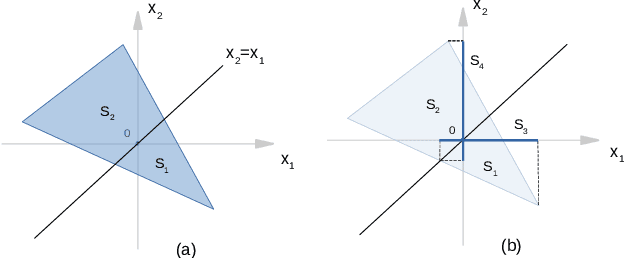

Reachable Set Computation and Safety Verification for Neural Networks with ReLU Activations

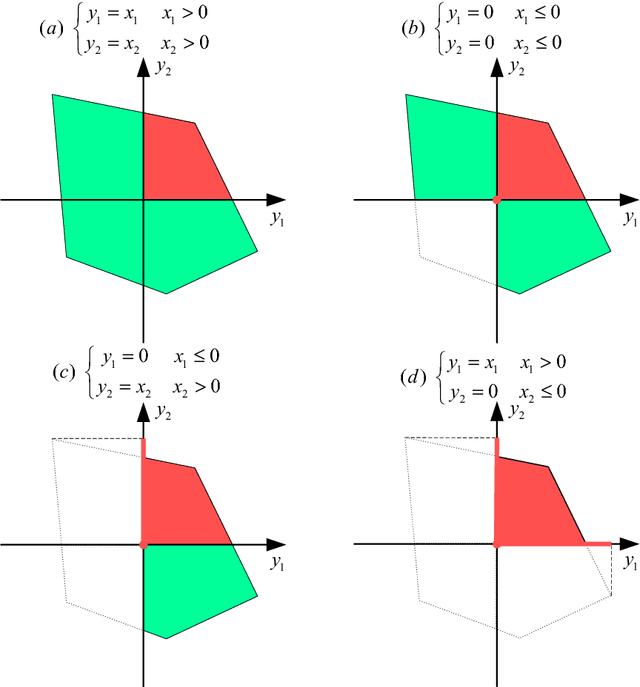

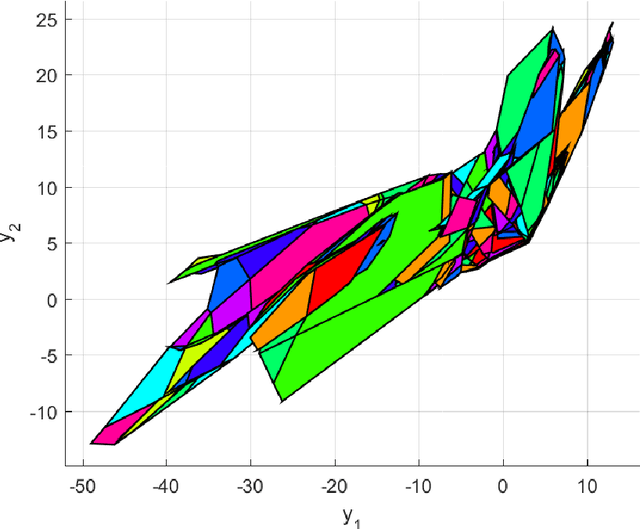

Dec 21, 2017

Abstract: Neural networks have been widely used to solve complex real-world problems. Due to the complicated, nonlinear, non-convex nature of neural networks, formal safety guarantees for the output behaviors of neural networks will be crucial for their applications in safety-critical systems. In this paper, the output reachable set computation and safety verification problems for a class of neural networks consisting of Rectified Linear Unit (ReLU) activation functions are addressed. A layer-by-layer approach is developed to compute the output reachable set. The computation is formulated in the form of a set of manipulations for a union of polyhedra, which can be efficiently applied with the aid of polyhedron computation tools. Based on the output reachable set computation results, the safety verification for a ReLU neural network can be performed by checking the intersections of unsafe regions and the output reachable set described by a union of polyhedra. A numerical example of a randomly generated ReLU neural network is provided to show the effectiveness of the approach developed in this paper.
