Taylor T Johnson

Institute for Software Integrated Systems, Vanderbilt University

EditScout: Locating Forged Regions from Diffusion-based Edited Images with Multimodal LLM

Dec 05, 2024

Abstract: Image editing technologies are tools used to transform, adjust, remove, or otherwise alter images. Recent research has significantly improved the capabilities of image editing tools, enabling the creation of photorealistic and semantically informed forged regions that are nearly indistinguishable from authentic imagery, presenting new challenges in digital forensics and media credibility. While current image forensic techniques are adept at localizing forged regions produced by traditional image manipulation methods, they struggle to localize regions created by diffusion-based techniques. To bridge this gap, we present a novel framework that integrates a multimodal Large Language Model (LLM) for enhanced reasoning capabilities to localize tampered regions in images produced by diffusion model-based editing methods. By leveraging the contextual and semantic strengths of LLMs, our framework achieves promising results on the MagicBrush, AutoSplice, and PerfBrush datasets (the last being a novel diffusion-based dataset), outperforming previous approaches in mIoU and F1-score. Notably, our method excels on PerfBrush, a self-constructed test set featuring previously unseen types of edits: where traditional methods typically falter and achieve markedly low scores, our approach demonstrates promising performance.
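For readers unfamiliar with the two localization metrics named in this abstract, the following is a minimal sketch of how IoU and F1 can be computed between a predicted forgery mask and a ground-truth mask. It assumes binary masks given as nested lists and a mean IoU averaged per image over the forged class; the abstract does not state the exact averaging convention used, and the function names here are illustrative only.

```python
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 1 = forged pixel, 0 = authentic pixel


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Count true positives, false positives and false negatives pixel by pixel."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t and not p:
                fn += 1
    return tp, fp, fn


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of the forged regions."""
    tp, fp, fn = confusion_counts(pred, truth)
    union = tp + fp + fn
    # Assumption: two empty masks count as a perfect match.
    return 1.0 if union == 0 else tp / union


def f1_score(pred: Mask, truth: Mask) -> float:
    """Harmonic mean of pixel-level precision and recall."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom


def mean_iou(preds: Sequence[Mask], truths: Sequence[Mask]) -> float:
    """Average the per-image IoU over a dataset (assumed convention)."""
    scores = [iou(p, t) for p, t in zip(preds, truths)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    predicted = [[1, 1], [0, 0]]
    reference = [[1, 0], [1, 0]]
    print(iou(predicted, reference), f1_score(predicted, reference))
```

Both metrics ignore true negatives, so a large authentic background does not inflate the score of a method that predicts almost nothing as forged.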

Formal Verification of Long Short-Term Memory based Audio Classifiers: A Star based Approach

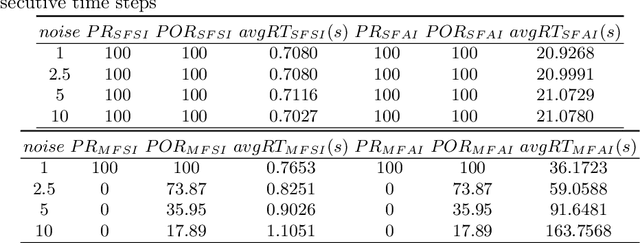

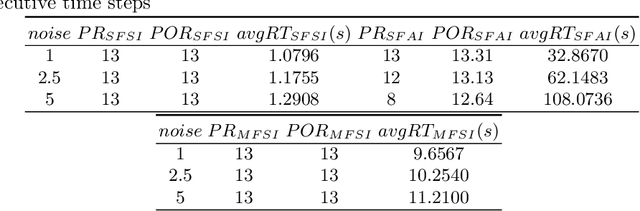

Nov 16, 2023

Abstract: Formally verifying audio classification systems is essential to ensure accurate signal classification across real-world applications like surveillance, automotive voice commands, and multimedia content management, preventing potential errors with serious consequences. Drawing from recent research, this study advances the utilization of star-set-based formal verification, extended through reachability analysis, tailored explicitly for Long Short-Term Memory architectures and their Convolutional variations within the audio classification domain. By conceptualizing the classification process as a sequence of set operations, the star set-based reachability approach streamlines the exploration of potential operational states attainable by the system. The paper serves as an encompassing case study, validating and verifying sequence audio classification analytics within real-world contexts. It accentuates the necessity for robustness verification to ensure precise and dependable predictions, particularly in light of the impact of noise on the accuracy of output classifications.

* In Proceedings FMAS 2023, arXiv:2311.08987

Robustness Verification of Deep Neural Networks using Star-Based Reachability Analysis with Variable-Length Time Series Input

Jul 26, 2023

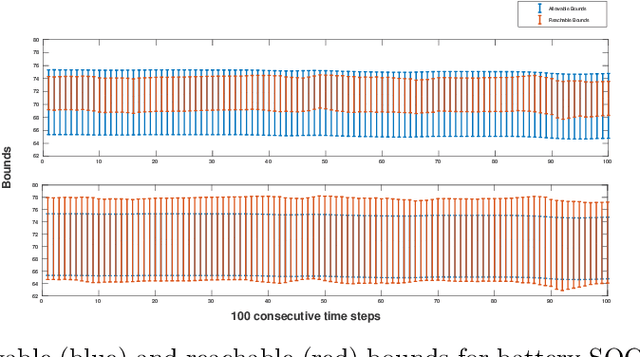

Abstract: Data-driven, neural network (NN) based anomaly detection and predictive maintenance are emerging research areas. NN-based analytics of time-series data offer valuable insights into past behaviors and estimates of critical parameters like remaining useful life (RUL) of equipment and state-of-charge (SOC) of batteries. However, input time series data can be exposed to intentional or unintentional noise when passing through sensors, necessitating robust validation and verification of these NNs. This paper presents a case study of the robustness verification approach for time series regression NNs (TSRegNN) using set-based formal methods. It focuses on utilizing variable-length input data to streamline input manipulation and enhance network architecture generalizability. The method is applied to two data sets in the Prognostics and Health Management (PHM) application areas: (1) SOC estimation of a Lithium-ion battery and (2) RUL estimation of a turbine engine. The NNs' robustness is checked using star-based reachability analysis, and several performance measures evaluate the effect of bounded perturbations in the input on network outputs, i.e., future outcomes. Overall, the paper offers a comprehensive case study for validating and verifying NN-based analytics of time-series data in real-world applications, emphasizing the importance of robustness testing for accurate and reliable predictions, especially considering the impact of noise on future outcomes.
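To make the idea of propagating a bounded input perturbation through a network concrete, here is a minimal sketch of a star set pushed through a single affine layer. It is not the paper's or NNV's implementation: it assumes the simplest predicate (every coefficient lies in [-1, 1], which encodes an L-infinity ball around the nominal input), it omits nonlinear layers such as ReLU or LSTM gates, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


@dataclass
class BoxStar:
    """Star set {c + sum_i a_i * v_i} with the assumed predicate a_i in [-1, 1]."""
    center: Vector   # c
    basis: Matrix    # basis[i] is the generator vector v_i


def from_linf_ball(x: Vector, eps: float) -> BoxStar:
    """Encode all inputs within +/- eps of x (per coordinate) as a star set."""
    n = len(x)
    basis = [[eps if j == i else 0.0 for j in range(n)] for i in range(n)]
    return BoxStar(center=list(x), basis=basis)


def matvec(W: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def affine_map(star: BoxStar, W: Matrix, b: Vector) -> BoxStar:
    """Image of the star under y = W x + b: map the center, map each generator."""
    center = [y + bi for y, bi in zip(matvec(W, star.center), b)]
    basis = [matvec(W, v) for v in star.basis]  # the bias does not affect generators
    return BoxStar(center=center, basis=basis)


def output_bounds(star: BoxStar) -> Tuple[Vector, Vector]:
    """Exact per-coordinate range, valid because each a_i ranges over [-1, 1]."""
    dims = range(len(star.center))
    radius = [sum(abs(v[k]) for v in star.basis) for k in dims]
    lower = [c - r for c, r in zip(star.center, radius)]
    upper = [c + r for c, r in zip(star.center, radius)]
    return lower, upper


if __name__ == "__main__":
    inputs = from_linf_ball([1.0, 2.0], eps=0.1)
    outputs = affine_map(inputs, W=[[1.0, 1.0], [1.0, -1.0]], b=[0.0, 0.5])
    print(output_bounds(outputs))
```

With a general linear predicate on the coefficients, the output range would instead require solving a linear program per coordinate; the box predicate is chosen here only to keep the sketch dependency-free.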

Work In Progress: Safety and Robustness Verification of Autoencoder-Based Regression Models using the NNV Tool

Jul 14, 2022

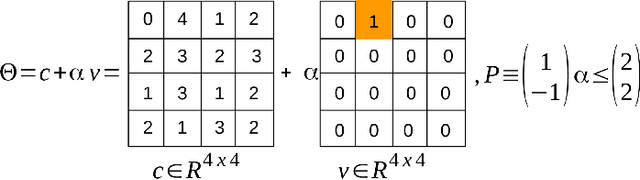

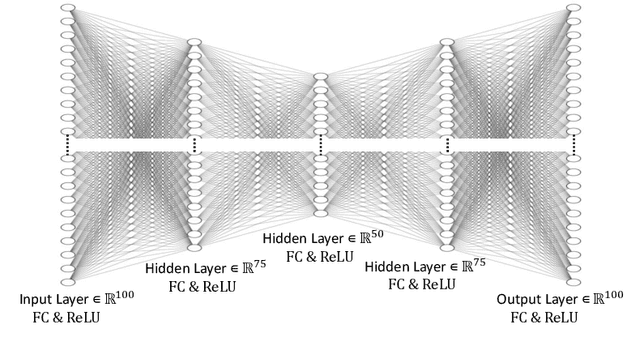

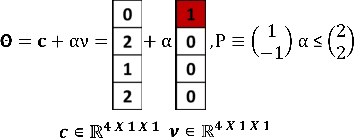

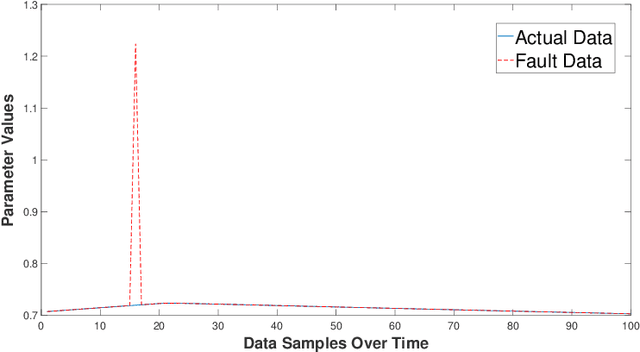

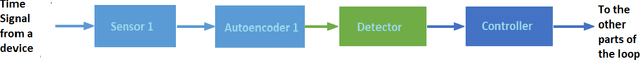

Abstract: This work-in-progress paper introduces robustness verification for autoencoder-based regression neural network (NN) models, following state-of-the-art approaches for robustness verification of image classification NNs. Despite the ongoing progress in developing verification methods for safety and robustness in various deep neural networks (DNNs), robustness checking of autoencoder models has not yet been considered. We explore this open space of research and examine ways to bridge the gap by extending existing DNN robustness analysis methods to such autoencoder networks. While classification models using autoencoders work more or less similarly to image classification NNs, the functionality of regression models is distinctly different. We introduce two robustness evaluation metrics for autoencoder-based regression models, specifically the percentage robustness and the un-robustness grade. We also modify the existing Imagestar approach, adjusting the variables to handle the specific input types of regression networks. The approach is implemented as an extension of NNV, then applied and evaluated on a dataset, with a case study experiment shown using the same dataset. To the authors' knowledge, this work-in-progress paper is the first to show possible reachability analysis of autoencoder-based NNs.

* In Proceedings SNR 2021, arXiv:2207.04391

Ablation Study of How Run Time Assurance Impacts the Training and Performance of Reinforcement Learning Agents

Jul 08, 2022

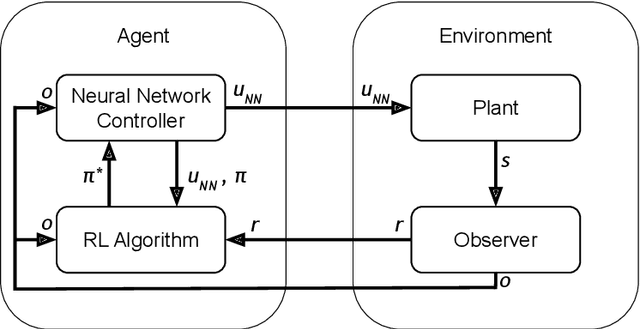

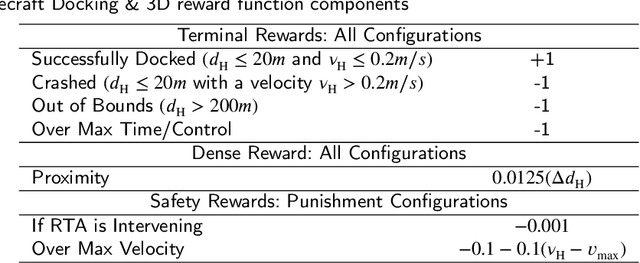

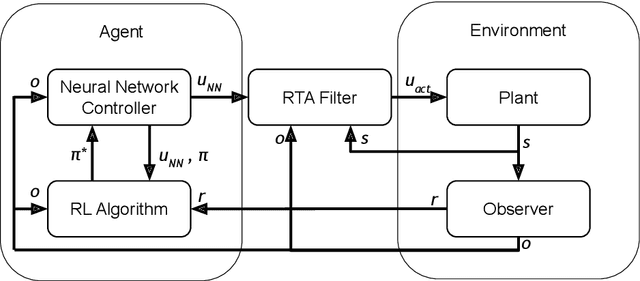

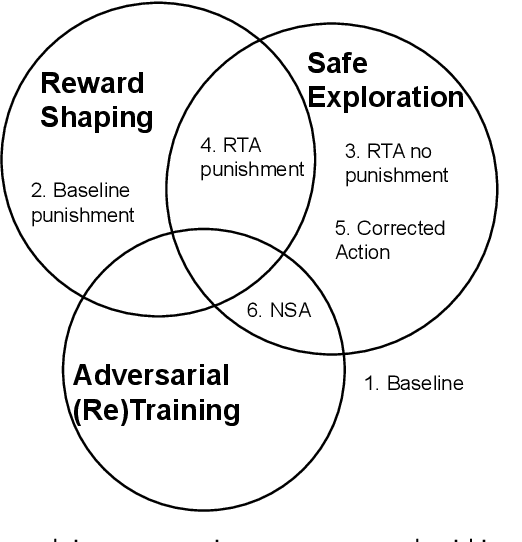

Abstract: Reinforcement Learning (RL) has become an increasingly important research area as the success of machine learning algorithms and methods grows. To combat the safety concerns surrounding the freedom given to RL agents while training, there has been an increase in work concerning Safe Reinforcement Learning (SRL). However, these new and safe methods have been held to less scrutiny than their unsafe counterparts. For instance, comparisons among safe methods often lack fair evaluation across similar initial condition bounds and hyperparameter settings, use poor evaluation metrics, and cherry-pick the best training runs rather than averaging over multiple random seeds. In this work, we conduct an ablation study using evaluation best practices to investigate the impact of run time assurance (RTA), which monitors the system state and intervenes to assure safety, on effective learning. By studying multiple RTA approaches in both on-policy and off-policy RL algorithms, we seek to understand which RTA methods are most effective, whether the agents become dependent on the RTA, and the importance of reward shaping versus safe exploration in RL agent training. Our conclusions shed light on the most promising directions of SRL, and our evaluation methodology lays the groundwork for creating better comparisons in future SRL work.
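The monitor-and-intervene pattern that the abstract attributes to RTA can be illustrated with a minimal sketch. Everything below is a hypothetical toy: a one-dimensional position with a symmetric safety limit, a one-step prediction, and a simple switch to a backup action. It is not one of the RTA approaches studied in the paper, which the abstract does not describe in detail.

```python
from typing import Tuple


def rta_filter(
    position: float,
    desired_velocity: float,
    limit: float = 1.0,
    dt: float = 0.1,
) -> Tuple[float, bool]:
    """Return (velocity to apply, whether the RTA intervened).

    Toy dynamics (an assumption): next_position = position + velocity * dt.
    The state is considered safe while |position| <= limit.
    """
    predicted = position + desired_velocity * dt
    if abs(predicted) <= limit:
        # The agent's action keeps the system safe, so pass it through unchanged.
        return desired_velocity, False
    # Otherwise override with a backup action that moves back toward the origin.
    backup_velocity = -1.0 if position > 0 else 1.0
    return backup_velocity, True


if __name__ == "__main__":
    print(rta_filter(0.0, 0.5))    # safe action is passed through
    print(rta_filter(0.95, 1.0))   # unsafe action is replaced by the backup
```

Returning the intervention flag alongside the action makes it possible to log how often the filter fires during training, or to feed it into reward shaping, which relates to the dependence question raised in the abstract.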

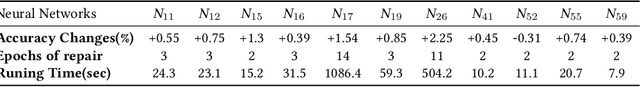

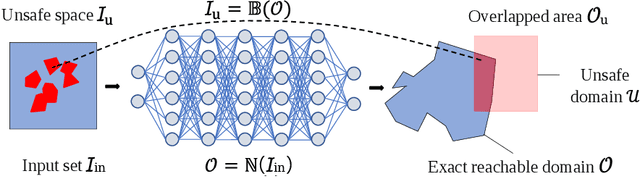

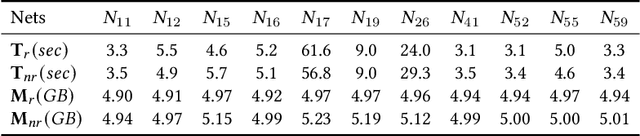

Neural Network Repair with Reachability Analysis

Aug 09, 2021

Abstract: Safety is a critical concern for the next generation of autonomy that is likely to rely heavily on deep neural networks for perception and control. Formally verifying the safety and robustness of well-trained DNNs and learning-enabled systems under attacks, model uncertainties, and sensing errors is essential for safe autonomy. This research proposes a framework to repair unsafe DNNs in safety-critical systems with reachability analysis. The repair process is inspired by adversarial training which has demonstrated high effectiveness in improving the safety and robustness of DNNs. Different from traditional adversarial training approaches where adversarial examples are utilized from random attacks and may not be representative of all unsafe behaviors, our repair process uses reachability analysis to compute the exact unsafe regions and identify sufficiently representative examples to enhance the efficacy and efficiency of the adversarial training. The performance of our framework is evaluated on two types of benchmarks without safe models as references. One is a DNN controller for aircraft collision avoidance with access to training data. The other is a rocket lander where our framework can be seamlessly integrated with the well-known deep deterministic policy gradient (DDPG) reinforcement learning algorithm. The experimental results show that our framework can successfully repair all instances on multiple safety specifications with negligible performance degradation. In addition, to increase the computational and memory efficiency of the reachability analysis algorithm, we propose a depth-first-search algorithm that combines an existing exact analysis method with an over-approximation approach based on a new set representation. Experimental results show that our method achieves a five-fold improvement in runtime and a two-fold improvement in memory usage compared to exact analysis.

Reachability Analysis of Convolutional Neural Networks

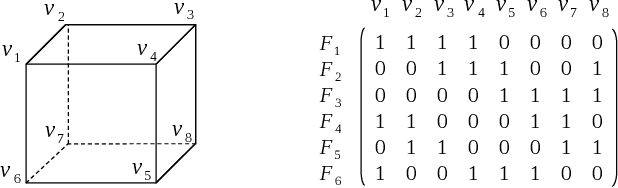

Jun 22, 2021

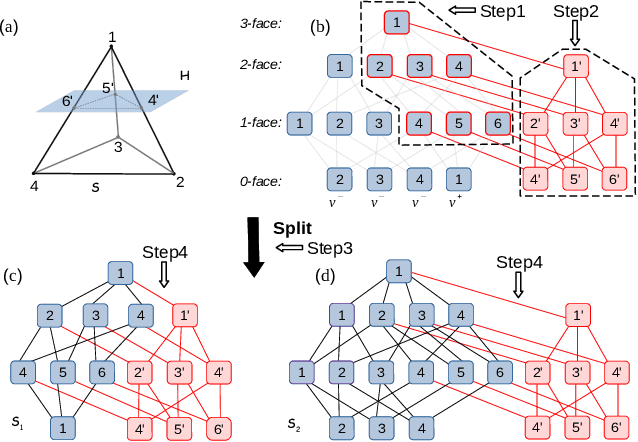

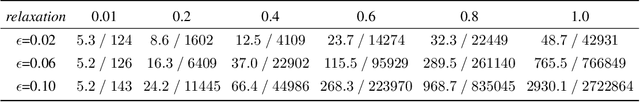

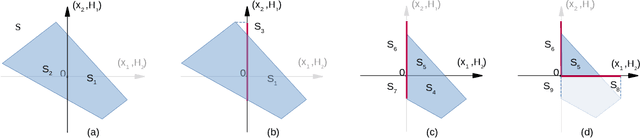

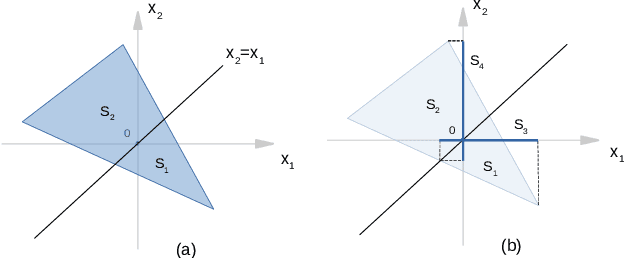

Abstract: Deep convolutional neural networks have been widely employed as an effective technique to handle complex and practical problems. However, one of the fundamental problems is the lack of formal methods to analyze their behavior. To address this challenge, we propose an approach to compute the exact reachable sets of a network given an input domain, where the reachable set is represented by the face lattice structure. Besides the computation of reachable sets, our approach is also capable of backtracking to the input domain given an output reachable set. Therefore, a full analysis of a network's behavior can be realized. In addition, an approach for fast analysis is also introduced, which conducts fast computation of reachable sets by considering selected sensitive neurons in each layer. The exact pixel-level reachability analysis method is evaluated on a CNN for the CIFAR10 dataset and compared to related works. The fast analysis method is evaluated on a CNN for the CIFAR10 dataset and on the VGG16 architecture for the ImageNet dataset.
