Patrick Musau

Reachability Analysis of a General Class of Neural Ordinary Differential Equations

Jul 13, 2022

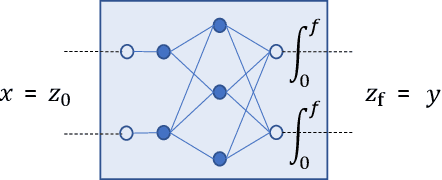

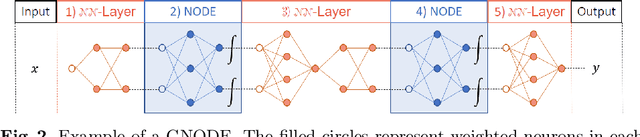

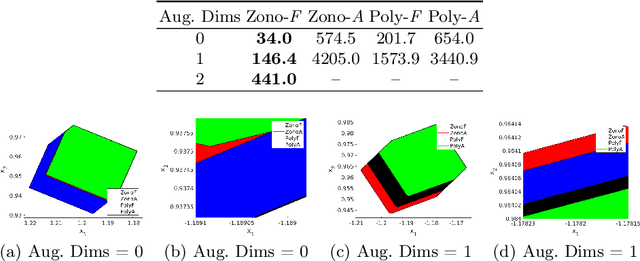

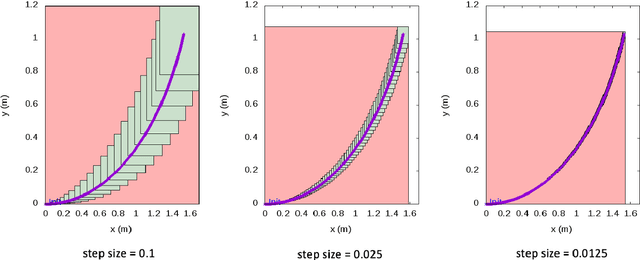

Abstract:Continuous deep learning models, referred to as Neural Ordinary Differential Equations (Neural ODEs), have received considerable attention over the last several years. Despite their burgeoning impact, there is a lack of formal analysis techniques for these systems. In this paper, we consider a general class of neural ODEs with varying architectures and layers, and introduce a novel reachability framework that allows for the formal analysis of their behavior. The methods developed for the reachability analysis of neural ODEs are implemented in a new tool called NNVODE. Specifically, our work extends an existing neural network verification tool to support neural ODEs. We demonstrate the capabilities and efficacy of our methods through the analysis of a set of benchmarks that include neural ODEs used for classification, and in control and dynamical systems, including an evaluation of the efficacy and capabilities of our approach with respect to existing software tools within the continuous-time systems reachability literature, when it is possible to do so.

An Empirical Analysis of the Use of Real-Time Reachability for the Safety Assurance of Autonomous Vehicles

May 03, 2022

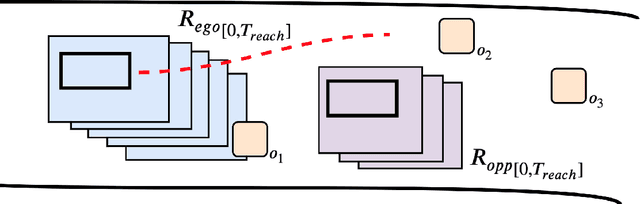

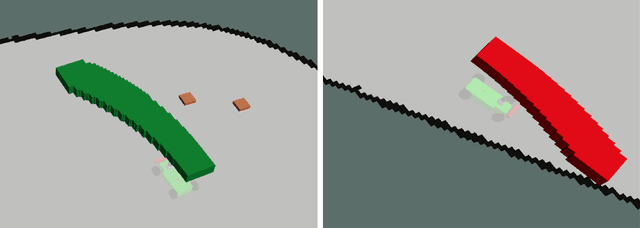

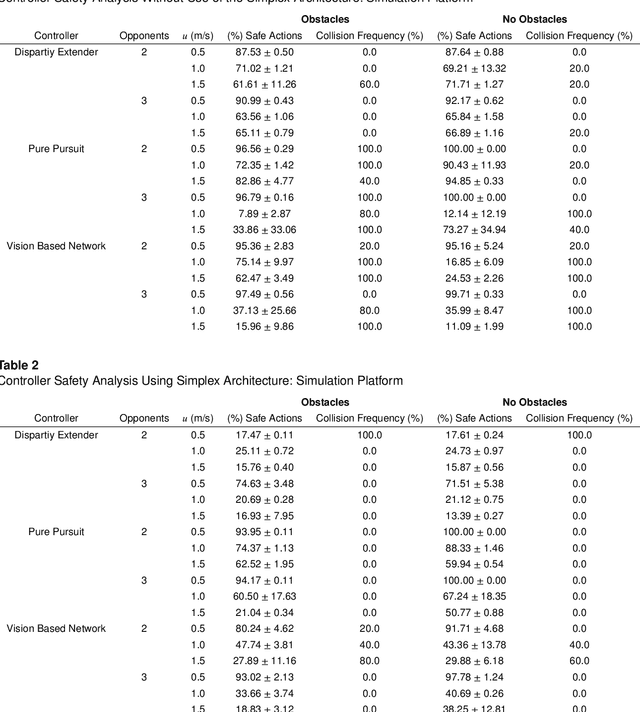

Abstract:Recent advances in machine learning technologies and sensing have paved the way for the belief that safe, accessible, and convenient autonomous vehicles may be realized in the near future. Despite tremendous advances within this context, fundamental challenges around safety and reliability are limiting their arrival and comprehensive adoption. Autonomous vehicles are often tasked with operating in dynamic and uncertain environments. As a result, they often make use of highly complex components, such as machine learning approaches, to handle the nuances of sensing, actuation, and control. While these methods are highly effective, they are notoriously difficult to assure. Moreover, within uncertain and dynamic environments, design time assurance analyses may not be sufficient to guarantee safety. Thus, it is critical to monitor the correctness of these systems at runtime. One approach for providing runtime assurance of systems with components that may not be amenable to formal analysis is the simplex architecture, where an unverified component is wrapped with a safety controller and a switching logic designed to prevent dangerous behavior. In this paper, we propose using a real-time reachability algorithm for the implementation of the simplex architecture to assure the safety of a 1/10 scale open source autonomous vehicle platform known as F1/10. The reachability algorithm that we leverage (a) provides provable guarantees of safety, and (b) is used to detect potentially unsafe scenarios. In our approach, the need to analyze an underlying controller is abstracted away, instead focusing on the effects of the controller's decisions on the system's future states. We demonstrate the efficacy of our architecture through a vast set of experiments conducted both in simulation and on an embedded hardware platform.

NNV: The Neural Network Verification Tool for Deep Neural Networks and Learning-Enabled Cyber-Physical Systems

Apr 12, 2020

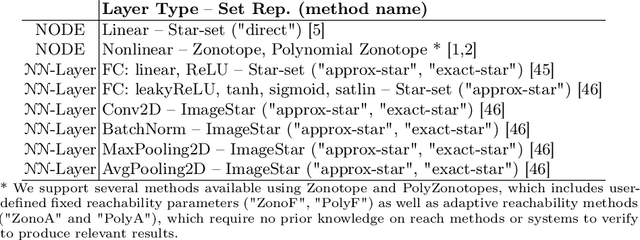

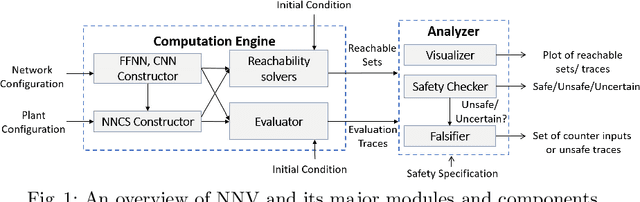

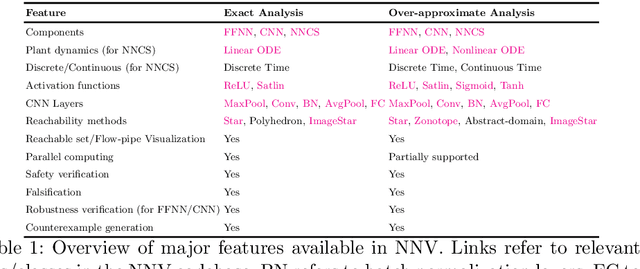

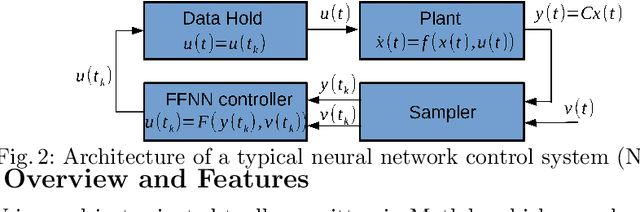

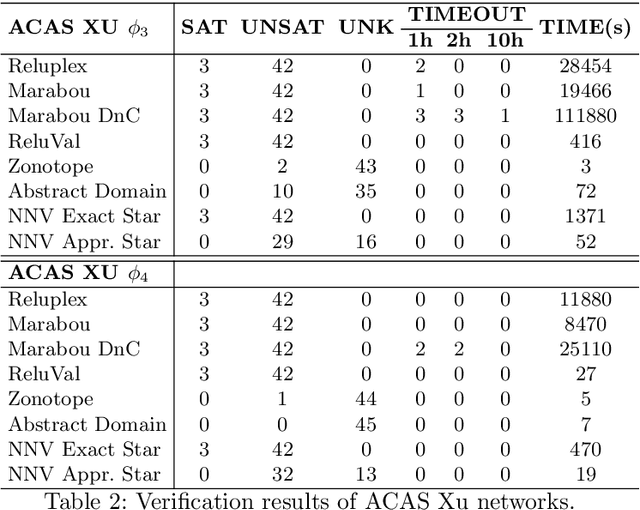

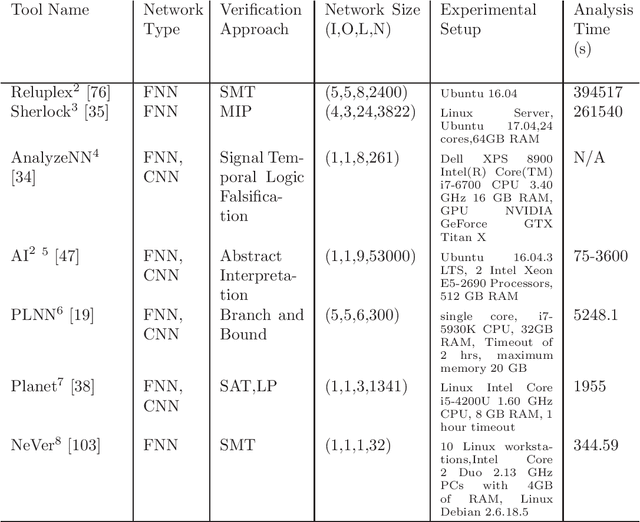

Abstract:This paper presents the Neural Network Verification (NNV) software tool, a set-based verification framework for deep neural networks (DNNs) and learning-enabled cyber-physical systems (CPS). The crux of NNV is a collection of reachability algorithms that make use of a variety of set representations, such as polyhedra, star sets, zonotopes, and abstract-domain representations. NNV supports both exact (sound and complete) and over-approximate (sound) reachability algorithms for verifying safety and robustness properties of feed-forward neural networks (FFNNs) with various activation functions. For learning-enabled CPS, such as closed-loop control systems incorporating neural networks, NNV provides exact and over-approximate reachability analysis schemes for linear plant models and FFNN controllers with piecewise-linear activation functions, such as ReLUs. For similar neural network control systems (NNCS) that instead have nonlinear plant models, NNV supports over-approximate analysis by combining the star set analysis used for FFNN controllers with zonotope-based analysis for nonlinear plant dynamics building on CORA. We evaluate NNV using two real-world case studies: the first is safety verification of ACAS Xu networks and the second deals with the safety verification of a deep learning-based adaptive cruise control system.

Verification for Machine Learning, Autonomy, and Neural Networks Survey

Oct 03, 2018

Abstract:This survey presents an overview of verification techniques for autonomous systems, with a focus on safety-critical autonomous cyber-physical systems (CPS) and subcomponents thereof. Autonomy in CPS is enabling by recent advances in artificial intelligence (AI) and machine learning (ML) through approaches such as deep neural networks (DNNs), embedded in so-called learning enabled components (LECs) that accomplish tasks from classification to control. Recently, the formal methods and formal verification community has developed methods to characterize behaviors in these LECs with eventual goals of formally verifying specifications for LECs, and this article presents a survey of many of these recent approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge