Souhaib Ben Taieb

Calibrated Multivariate Distributional Regression with Pre-Rank Regularization

Jan 30, 2026

Abstract:The goal of probabilistic prediction is to issue predictive distributions that are as informative as possible, subject to being calibrated. Despite substantial progress in the univariate setting, achieving multivariate calibration remains challenging. Recent work has introduced pre-rank functions, scalar projections of multivariate forecasts and observations, as flexible diagnostics for assessing specific aspects of multivariate calibration, but their use has largely been limited to post-hoc evaluation. We propose a regularization-based calibration method that enforces multivariate calibration during training of multivariate distributional regression models using pre-rank functions. We further introduce a novel PCA-based pre-rank that projects predictions onto principal directions of the predictive distribution. Through simulation studies and experiments on 18 real-world multi-output regression datasets, we show that the proposed approach substantially improves multivariate pre-rank calibration without compromising predictive accuracy, and that the PCA pre-rank reveals dependence-structure misspecifications that are not detected by existing pre-ranks.
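To make the idea of a pre-rank concrete, here is a minimal sketch of a rank-based calibration check. It assumes the predictive distribution is available as a finite set of samples and uses the component-wise mean as the pre-rank; the function names `mean_pre_rank` and `pre_rank_rank` are hypothetical, and this is neither the regularizer nor the PCA-based pre-rank proposed in the paper.

```python
def mean_pre_rank(vector):
    """Hypothetical pre-rank: project a multivariate point to its mean."""
    return sum(vector) / len(vector)


def pre_rank_rank(samples, observation, pre_rank=mean_pre_rank):
    """Rank of the observation's pre-rank among the samples' pre-ranks.

    The result lies in {1, ..., len(samples) + 1}. If the forecast is
    calibrated with respect to this pre-rank, the ranks collected over many
    test cases should look roughly uniform on that set.
    Ties are counted naively here (no random tie-breaking).
    """
    obs_value = pre_rank(observation)
    sample_values = [pre_rank(s) for s in samples]
    return 1 + sum(value < obs_value for value in sample_values)


# Three samples from a (toy) bivariate predictive distribution.
samples = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
rank = pre_rank_rank(samples, [1.5, 1.5])
```

Collecting such ranks over a test set and inspecting their histogram is the usual diagnostic; a different choice of `pre_rank` probes a different aspect of the joint distribution.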

RainDiff: End-to-end Precipitation Nowcasting Via Token-wise Attention Diffusion

Oct 16, 2025

Abstract:Precipitation nowcasting, predicting future radar echo sequences from current observations, is a critical yet challenging task due to the inherently chaotic and tightly coupled spatio-temporal dynamics of the atmosphere. While recent advances in diffusion-based models attempt to capture both large-scale motion and fine-grained stochastic variability, they often suffer from scalability issues: latent-space approaches require a separately trained autoencoder, adding complexity and limiting generalization, while pixel-space approaches are computationally intensive and often omit attention mechanisms, reducing their ability to model long-range spatio-temporal dependencies. To address these limitations, we propose Token-wise Attention, integrated into both the U-Net diffusion model and the spatio-temporal encoder, which dynamically captures multi-scale spatial interactions and temporal evolution. Unlike prior approaches, our method natively integrates attention into the architecture without incurring the high resource cost typical of pixel-space diffusion, thereby eliminating the need for separate latent modules. Our extensive experiments and visual evaluations across diverse datasets demonstrate that the proposed method significantly outperforms state-of-the-art approaches, yielding superior local fidelity, generalization, and robustness in complex precipitation forecasting scenarios.

Multivariate Latent Recalibration for Conditional Normalizing Flows

May 22, 2025

Abstract:Reliably characterizing the full conditional distribution of a multivariate response variable given a set of covariates is crucial for trustworthy decision-making. However, misspecified or miscalibrated multivariate models may yield a poor approximation of the joint distribution of the response variables, leading to unreliable predictions and suboptimal decisions. Furthermore, standard recalibration methods are primarily limited to univariate settings, while conformal prediction techniques, despite generating multivariate prediction regions with coverage guarantees, do not provide a full probability density function. We address this gap by first introducing a novel notion of latent calibration, which assesses probabilistic calibration in the latent space of a conditional normalizing flow. Second, we propose latent recalibration (LR), a novel post-hoc model recalibration method that learns a transformation of the latent space with finite-sample bounds on latent calibration. Unlike existing methods, LR produces a recalibrated distribution with an explicit multivariate density function while remaining computationally efficient. Extensive experiments on both tabular and image datasets show that LR consistently improves latent calibration error and the negative log-likelihood of the recalibrated models.

Risk-Based Thresholding for Reliable Anomaly Detection in Concentrated Solar Power Plants

Mar 24, 2025

Abstract:Efficient and reliable operation of Concentrated Solar Power (CSP) plants is essential for meeting the growing demand for sustainable energy. However, high-temperature solar receivers face severe operational risks, such as freezing, deformation, and corrosion, resulting in costly downtime and maintenance. To monitor CSP plants, cameras mounted on solar receivers record infrared images at irregular intervals ranging from one to five minutes throughout the day. Anomalous images can be detected by thresholding an anomaly score, where the threshold is chosen to optimize metrics such as the F1-score on a validation set. First, this work proposes a framework for generating more reliable decision thresholds with finite-sample coverage guarantees on any chosen risk function. Our framework also incorporates an abstention mechanism, allowing high-risk predictions to be deferred to domain experts. Second, we propose a density forecasting method to estimate the likelihood of an observed image given a sequence of previously observed images, using this likelihood as its anomaly score. Third, we analyze the deployment results of our framework across multiple training scenarios over several months for two CSP plants. This analysis provides valuable insights to our industry partner for optimizing maintenance operations. Finally, given the confidential nature of our dataset, we provide an extended simulated dataset, leveraging recent advancements in generative modeling to create diverse thermal images that simulate multiple CSP plants. Our code is publicly available.

Segmentation-Guided CT Synthesis with Pixel-Wise Conformal Uncertainty Bounds

Mar 11, 2025

Abstract:Accurate dose calculations in proton therapy rely on high-quality CT images. While planning CTs (pCTs) serve as a reference for dosimetric planning, Cone Beam CT (CBCT) is used throughout Adaptive Radiotherapy (ART) to generate synthetic CTs (sCTs) for improved dose calculations. Despite its advantages of lower cost and reduced radiation exposure, CBCT suffers from severe artefacts and poor image quality, making it unsuitable for precise dosimetry. Deep learning-based CBCT-to-CT translation has emerged as a promising approach. Still, existing methods often introduce anatomical inconsistencies and lack reliable uncertainty estimates, limiting their clinical adoption. To bridge this gap, we propose STF-RUE, a novel framework integrating two key components. The first, STF, is a segmentation-guided CBCT-to-CT translation method that enhances anatomical consistency by leveraging segmentation priors extracted from pCTs. The second, RUE, is a conformal prediction method that augments predicted CTs with pixel-wise conformal prediction intervals, providing clinicians with a robust reliability indicator. Comprehensive experiments using UNet++ and Fast-DDPM on two benchmark datasets demonstrate that STF-RUE significantly improves translation accuracy, as measured by a novel soft-tissue-focused metric designed for precise dose computation. Additionally, STF-RUE provides better-calibrated uncertainty sets for synthetic CTs, reinforcing trust in them. By addressing both anatomical fidelity and uncertainty quantification, STF-RUE marks a crucial step toward safer and more effective adaptive proton therapy. Code is available at https://anonymous.4open.science/r/cbct2ct_translation-B2D9/.

Rectifying Conformity Scores for Better Conditional Coverage

Feb 22, 2025

Abstract:We present a new method for generating confidence sets within the split conformal prediction framework. Our method performs a trainable transformation of any given conformity score to improve conditional coverage while ensuring exact marginal coverage. The transformation is based on an estimate of the conditional quantile of conformity scores. The resulting method is particularly beneficial for constructing adaptive confidence sets in multi-output problems where standard conformal quantile regression approaches have limited applicability. We develop a theoretical bound that captures the influence of the accuracy of the quantile estimate on the approximate conditional validity, unlike classical bounds for conformal prediction methods that only offer marginal coverage. We experimentally show that our method is highly adaptive to the local data structure and outperforms existing methods in terms of conditional coverage, improving the reliability of statistical inference in various applications.
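For readers unfamiliar with the split conformal framework this abstract builds on, the following is a minimal sketch of the standard calibration step only: it turns held-out conformity scores into a threshold that gives marginal coverage of at least 1 - alpha under exchangeability. The function names and toy scores are illustrative assumptions; the trainable score transformation described in the abstract is not implemented here.

```python
import math


def conformal_quantile(scores, alpha):
    """Split-conformal threshold from calibration conformity scores.

    Uses the finite-sample corrected rank ceil((n + 1) * (1 - alpha)).
    Returns infinity when the calibration set is too small for this alpha.
    """
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf
    return sorted(scores)[k - 1]


def in_prediction_set(score, threshold):
    """A candidate output is kept if its conformity score is small enough."""
    return score <= threshold


# Toy calibration scores (hypothetical values, e.g. absolute residuals).
calibration_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
threshold = conformal_quantile(calibration_scores, alpha=0.2)
```

Any score transformation applied before this step, such as one based on an estimated conditional quantile, leaves the marginal guarantee intact as long as it is fitted on data separate from the calibration set.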

Large Language Models Are Human-Like Internally

Feb 03, 2025

Abstract:Recent cognitive modeling studies have reported that larger language models (LMs) exhibit a poorer fit to human reading behavior, leading to claims of their cognitive implausibility. In this paper, we revisit this argument through the lens of mechanistic interpretability and argue that prior conclusions were skewed by an exclusive focus on the final layers of LMs. Our analysis reveals that next-word probabilities derived from internal layers of larger LMs align with human sentence processing data as well as, or better than, those from smaller LMs. This alignment holds consistently across behavioral (self-paced reading times, gaze durations, MAZE task processing times) and neurophysiological (N400 brain potentials) measures, challenging earlier mixed results and suggesting that the cognitive plausibility of larger LMs has been underestimated. Furthermore, we identify, for the first time, an intriguing relationship between LM layers and human measures: earlier layers correspond more closely with fast gaze durations, while later layers better align with relatively slower signals such as N400 potentials and MAZE processing times. Our work opens new avenues for interdisciplinary research at the intersection of mechanistic interpretability and cognitive modeling.

Multi-Output Conformal Regression: A Unified Comparative Study with New Conformity Scores

Jan 17, 2025

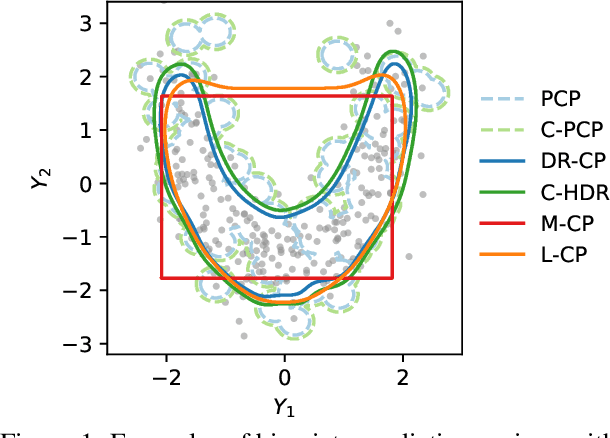

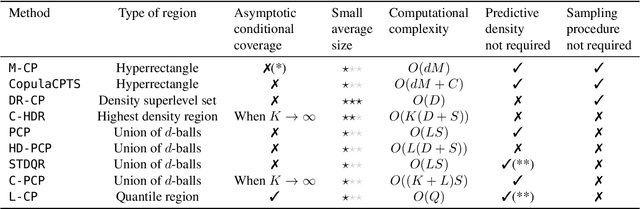

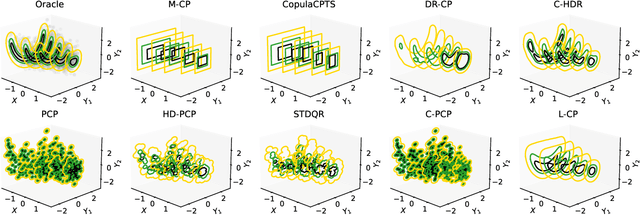

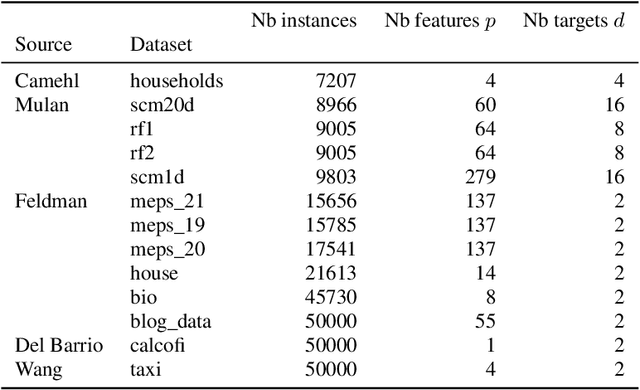

Abstract:Quantifying uncertainty in multivariate regression is essential in many real-world applications, yet existing methods for constructing prediction regions often face limitations such as the inability to capture complex dependencies, lack of coverage guarantees, or high computational cost. Conformal prediction provides a robust framework for producing distribution-free prediction regions with finite-sample coverage guarantees. In this work, we present a unified comparative study of multi-output conformal methods, exploring their properties and interconnections. Based on our findings, we introduce two classes of conformity scores that achieve asymptotic conditional coverage: one is compatible with any generative model, and the other offers low computational cost by leveraging invertible generative models. Finally, we conduct a comprehensive empirical study across 32 tabular datasets to compare all the multi-output conformal methods considered in this work. All methods are implemented within a unified code base to ensure a fair and consistent comparison.

Preventing Conflicting Gradients in Neural Marked Temporal Point Processes

Dec 11, 2024

Abstract:Neural Marked Temporal Point Processes (MTPP) are flexible models to capture complex temporal inter-dependencies between labeled events. These models inherently learn two predictive distributions: one for the arrival times of events and another for the types of events, also known as marks. In this study, we demonstrate that learning an MTPP model can be framed as a two-task learning problem, where both tasks share a common set of trainable parameters that are optimized jointly. We show that this often leads to the emergence of conflicting gradients during training, where task-specific gradients point in opposite directions. When such conflicts arise, following the average gradient can be detrimental to the learning of each individual task, resulting in overall degraded performance. To overcome this issue, we introduce novel parametrizations for neural MTPP models that allow for separate modeling and training of each task, effectively avoiding the problem of conflicting gradients. Through experiments on multiple real-world event sequence datasets, we demonstrate the benefits of our framework compared to the original model formulations.
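The notion of conflicting gradients can be illustrated with a small sketch. It assumes each task's gradient with respect to the shared parameters has been flattened into a plain list of floats, and it flags a conflict when the cosine similarity is negative, which is a common convention rather than necessarily the exact criterion used in the paper. The helper names are hypothetical.

```python
import math


def cosine_similarity(g1, g2):
    """Cosine of the angle between two flattened gradient vectors."""
    dot = sum(a * b for a, b in zip(g1, g2))
    norm1 = math.sqrt(sum(a * a for a in g1))
    norm2 = math.sqrt(sum(b * b for b in g2))
    if norm1 == 0.0 or norm2 == 0.0:
        return 0.0  # a zero gradient cannot conflict with anything
    return dot / (norm1 * norm2)


def gradients_conflict(g_time, g_mark):
    """True when the two task gradients point in opposing directions."""
    return cosine_similarity(g_time, g_mark) < 0.0


def average_gradient(g1, g2):
    """The update direction followed under plain joint training."""
    return [(a + b) / 2.0 for a, b in zip(g1, g2)]


# Toy gradients for the time task and the mark task on shared parameters.
g_time = [1.0, 0.0]
g_mark = [-1.0, 0.5]
conflict = gradients_conflict(g_time, g_mark)
g_avg = average_gradient(g_time, g_mark)
```

In this toy case the averaged direction is orthogonal to the time-task gradient, so a step along it makes no first-order progress on that task, which is the kind of degradation the abstract describes.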

Revisiting Deep Feature Reconstruction for Logical and Structural Industrial Anomaly Detection

Oct 21, 2024

Abstract:Industrial anomaly detection is crucial for quality control and predictive maintenance, but it presents challenges due to limited training data, diverse anomaly types, and external factors that alter object appearances. Existing methods commonly detect structural anomalies, such as dents and scratches, by leveraging multi-scale features from image patches extracted through deep pre-trained networks. However, significant memory and computational demands often limit their practical application. Additionally, detecting logical anomalies, such as images with missing or excess elements, requires an understanding of spatial relationships that traditional patch-based methods fail to capture. In this work, we address these limitations by focusing on Deep Feature Reconstruction (DFR), a memory- and compute-efficient approach for detecting structural anomalies. We further enhance DFR into a unified framework, called ULSAD, which is capable of detecting both structural and logical anomalies. Specifically, we refine the DFR training objective to improve performance in structural anomaly detection, while introducing an attention-based loss mechanism using a global autoencoder-like network to handle logical anomaly detection. Our empirical evaluation across five benchmark datasets demonstrates the performance of ULSAD in detecting and localizing both structural and logical anomalies, outperforming eight state-of-the-art methods. An extensive ablation study further highlights the contribution of each component to the overall performance improvement. Our code is available at https://github.com/sukanyapatra1997/ULSAD-2024.git
