Sneha Das

Who Does Your Algorithm Fail? Investigating Age and Ethnic Bias in the MAMA-MIA Dataset

Oct 31, 2025

Abstract:Deep learning models aim to improve diagnostic workflows, but fairness evaluation remains underexplored beyond classification, e.g., in image segmentation. Unaddressed segmentation bias can lead to disparities in the quality of care for certain populations, potentially compounded across clinical decision points and amplified through iterative model development. Here, we audit the fairness of the automated segmentation labels provided in the breast cancer tumor segmentation dataset MAMA-MIA. We evaluate automated segmentation quality across age, ethnicity, and data source. Our analysis reveals an intrinsic age-related bias against younger patients that persists even after controlling for confounding factors, such as data source. We hypothesize that this bias may be linked to physiological factors, a known challenge for both radiologists and automated systems. Finally, we show how aggregating data from multiple data sources influences site-specific ethnic biases, underscoring the necessity of investigating data at a granular level.
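
A group-wise audit of segmentation quality, as described in the abstract, could be sketched as follows. This is only an illustration: the abstract does not name the quality metric, so the Dice coefficient is an assumption here, and the function names, group labels, and toy masks are hypothetical.

```python
from collections import defaultdict


def dice(pred, ref):
    """Dice coefficient between two binary masks given as flat 0/1 sequences."""
    inter = sum(p & r for p, r in zip(pred, ref))
    total = sum(pred) + sum(ref)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * inter / total


def mean_dice_by_group(cases):
    """cases: iterable of (group_label, predicted_mask, reference_mask)."""
    scores = defaultdict(list)
    for group, pred, ref in cases:
        scores[group].append(dice(pred, ref))
    return {group: sum(vals) / len(vals) for group, vals in scores.items()}


# Toy data: hypothetical age groups and tiny flattened masks.
cases = [
    ("under_40", [1, 1, 0, 0], [1, 0, 0, 0]),
    ("under_40", [0, 1, 1, 0], [0, 1, 1, 1]),
    ("40_plus", [1, 1, 1, 0], [1, 1, 1, 0]),
]
per_group = mean_dice_by_group(cases)
```

Using a tuple such as `(data_source, age_group)` as the group label would give the stratified view needed to check whether a gap remains within each data source.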

EmoTale: An Enacted Speech-emotion Dataset in Danish

Aug 20, 2025

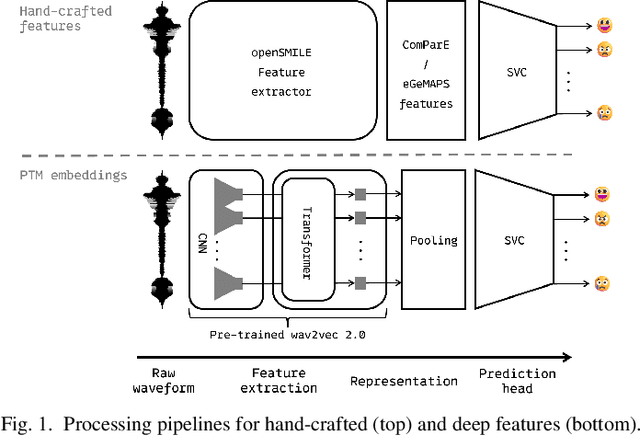

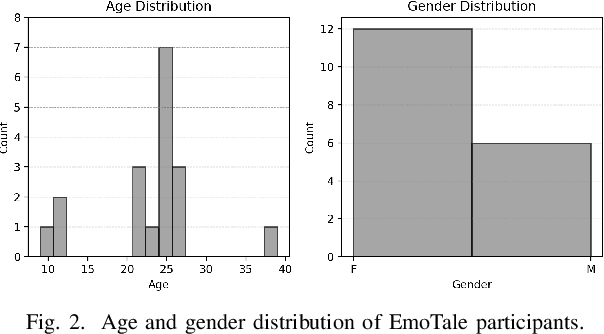

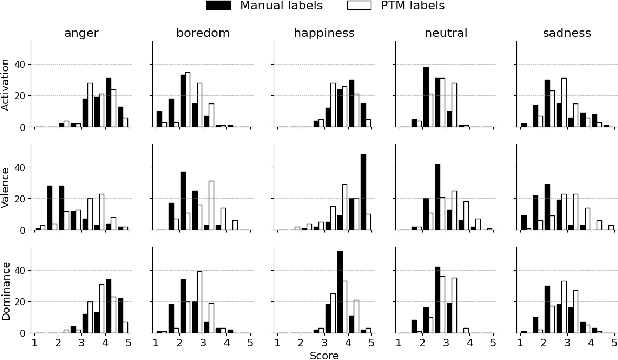

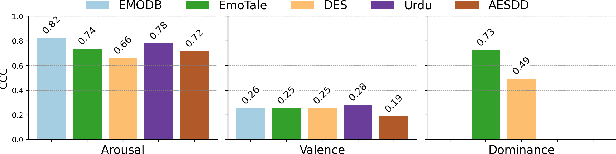

Abstract:While multiple emotional speech corpora exist for commonly spoken languages, there is a lack of functional datasets for smaller (spoken) languages, such as Danish. To our knowledge, Danish Emotional Speech (DES), published in 1997, is the only other database of Danish emotional speech. We present EmoTale, a corpus comprising Danish and English speech recordings with their associated enacted emotion annotations. We demonstrate the validity of the dataset by investigating and presenting its predictive power using speech emotion recognition (SER) models. We develop SER models for EmoTale and the reference datasets using self-supervised speech model (SSLM) embeddings and the openSMILE feature extractor. We find the embeddings superior to the hand-crafted features. The best model achieves an unweighted average recall (UAR) of 64.1% on the EmoTale corpus using leave-one-speaker-out cross-validation, comparable to the performance on DES.
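
For readers unfamiliar with the evaluation protocol, the two ingredients named above can be sketched in a few lines. UAR is the mean of the per-class recalls, and leave-one-speaker-out cross-validation holds out all utterances of one speaker per fold. The function names and toy labels below are hypothetical and are not taken from the paper.

```python
def unweighted_average_recall(y_true, y_pred):
    """Mean of per-class recalls; every class counts equally regardless of size."""
    recalls = []
    for cls in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == cls]
        hits = sum(1 for i in idx if y_pred[i] == cls)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)


def leave_one_speaker_out(speakers):
    """Yield (held_out_speaker, train_indices, test_indices) for each speaker."""
    for spk in sorted(set(speakers)):
        train = [i for i, s in enumerate(speakers) if s != spk]
        test = [i for i, s in enumerate(speakers) if s == spk]
        yield spk, train, test


# Toy example with an imbalanced label set.
y_true = ["angry", "angry", "angry", "happy"]
y_pred = ["angry", "angry", "happy", "happy"]
uar = unweighted_average_recall(y_true, y_pred)  # (2/3 + 1/1) / 2

folds = list(leave_one_speaker_out(["a", "a", "b", "c"]))
```

In the toy example, plain accuracy is 0.75 while UAR is about 0.83, which shows why UAR is preferred when emotion classes are imbalanced.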

Exploring Local Interpretable Model-Agnostic Explanations for Speech Emotion Recognition with Distribution-Shift

Apr 07, 2025

Abstract:We introduce EmoLIME, a version of local interpretable model-agnostic explanations (LIME) for black-box Speech Emotion Recognition (SER) models. To the best of our knowledge, this is the first attempt to apply LIME in SER. EmoLIME generates high-level interpretable explanations and identifies which specific frequency ranges are most influential in determining emotional states. The approach aids in interpreting complex, high-dimensional embeddings such as those generated by end-to-end speech models. We evaluate EmoLIME qualitatively, quantitatively, and statistically across three emotional speech datasets, using classifiers trained on both hand-crafted acoustic features and Wav2Vec 2.0 embeddings. We find that EmoLIME exhibits stronger robustness across different models than across datasets with distribution shifts, highlighting its potential for more consistent explanations in SER tasks within a dataset.

Is it the model or the metric -- On robustness measures of deeplearning models

Dec 13, 2024

Abstract:Determining the robustness of deep learning models is an established and ongoing challenge within automated decision-making systems. With the advent and success of techniques that enable advanced deep learning (DL), these models are being used in widespread applications, including high-stakes ones such as healthcare, education, and border control. Therefore, it is critical to understand the limitations of these models and predict their regions of failure in order to create the necessary guardrails for their successful and safe deployment. In this work, we revisit robustness, specifically investigating the sufficiency of robust accuracy (RA) within the context of deepfake detection. We present robust ratio (RR) as a complementary metric that can quantify the changes to the normalized or probability outcomes under input perturbation. We present a comparison of RA and RR and demonstrate that, despite similar RA between models, the models show varying RR under different tolerance (perturbation) levels.

Examining the Interplay Between Privacy and Fairness for Speech Processing: A Review and Perspective

Sep 05, 2024

Abstract:Speech technology has been increasingly deployed in various areas of daily life including sensitive domains such as healthcare and law enforcement. For these technologies to be effective, they must work reliably for all users while preserving individual privacy. Although tradeoffs between privacy and utility, as well as fairness and utility, have been extensively researched, the specific interplay between privacy and fairness in speech processing remains underexplored. This review and position paper offers an overview of emerging privacy-fairness tradeoffs throughout the entire machine learning lifecycle for speech processing. By drawing on well-established frameworks on fairness and privacy, we examine existing biases and sources of privacy harm that coexist during the development of speech processing models. We then highlight how corresponding privacy-enhancing technologies have the potential to inadvertently increase these biases and how bias mitigation strategies may conversely reduce privacy. By raising open questions, we advocate for a comprehensive evaluation of privacy-fairness tradeoffs for speech technology and the development of privacy-enhancing and fairness-aware algorithms in this domain.

Evaluation of Large Language Models: STEM education and Gender Stereotypes

Jun 14, 2024

Abstract:Large Language Models (LLMs) have an increasing impact on our lives with use cases such as chatbots, study support, coding support, ideation, writing assistance, and more. Previous studies have revealed linguistic biases in pronouns used to describe professions or adjectives used to describe men vs women. These issues have to some degree been addressed in updated LLM versions, at least to pass existing tests. However, biases may still be present in the models, and repeated use of gender-stereotypical language may reinforce the underlying assumptions, so these biases are important to examine further. This paper investigates gender biases in LLMs in relation to educational choices through an open-ended, true to user-case experimental design and a quantitative analysis. We investigate the biases in the context of four different cultures, languages, and educational systems (English/US/UK, Danish/DK, Catalan/ES, and Hindi/IN) for ages ranging from 10 to 16 years, corresponding to important educational transition points in the different countries. We find that there are significant and large differences in the ratio of STEM to non-STEM suggested education paths provided by ChatGPT when using typical girl vs boy names to prompt lists of suggested things to become. There are generally fewer STEM suggestions in the Danish, Spanish, and Indian contexts compared to the English one. We also find subtle differences in the suggested professions, which we categorise and report.

Exploratory Evaluation of Speech Content Masking

Jan 08, 2024

Abstract:Most recent speech privacy efforts have focused on anonymizing acoustic speaker attributes, but there has not been as much research into protecting information from speech content. We introduce a toy problem that explores an emerging type of privacy called "content masking", which conceals selected words and phrases in speech. In our efforts to define this problem space, we evaluate an introductory baseline masking technique based on modifying sequences of discrete phone representations (phone codes) produced from a pre-trained vector-quantized variational autoencoder (VQ-VAE) and re-synthesized using WaveRNN. We investigate three different masking locations and three types of masking strategies: noise substitution, word deletion, and phone sequence reversal. Our work attempts to characterize how masking affects two downstream tasks: automatic speech recognition (ASR) and automatic speaker verification (ASV). We observe how the different mask types and locations impact these downstream tasks and discuss how these issues may influence privacy goals.
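
To make the three masking strategies concrete, the sketch below applies each one to a sequence of discrete phone codes, treating the word to be masked as a half-open index span `[start, end)`. It is a rough illustration under stated assumptions, not the paper's implementation: the function names are hypothetical, and "noise substitution" is interpreted here as replacing codes with random codebook indices, which the abstract does not specify.

```python
import random


def mask_noise(codes, start, end, codebook_size, rng):
    """Replace codes in [start, end) with random codebook indices (assumed reading)."""
    out = list(codes)
    for i in range(start, end):
        out[i] = rng.randrange(codebook_size)
    return out


def mask_delete(codes, start, end):
    """Drop the codes in [start, end), shortening the sequence."""
    return list(codes[:start]) + list(codes[end:])


def mask_reverse(codes, start, end):
    """Reverse the order of the codes in [start, end), keeping the length."""
    return list(codes[:start]) + list(codes[start:end])[::-1] + list(codes[end:])


# Toy phone-code sequence; real codes would come from a VQ-VAE encoder.
codes = [3, 7, 7, 1, 4, 9]
rng = random.Random(0)  # seeded so the example is repeatable

noised = mask_noise(codes, 2, 4, codebook_size=16, rng=rng)
deleted = mask_delete(codes, 2, 4)    # [3, 7, 4, 9]
reversed_ = mask_reverse(codes, 1, 4)  # [3, 1, 7, 7, 4, 9]
```

Deletion changes the sequence length, while noise substitution and reversal preserve it. That difference may matter for the re-synthesized audio and for the downstream ASR and ASV tasks.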

On Crowdsourcing-design with Comparison Category Rating for Evaluating Speech Enhancement Algorithms

Jun 02, 2023

Abstract:Speech enhancement techniques improve the quality or the intelligibility of an audio signal by removing unwanted noise. They are used as a preprocessing step in numerous applications such as speech recognition, hearing aids, broadcasting and telephony. The evaluation of such algorithms often relies on reference-based objective metrics that are shown to correlate poorly with human perception. In order to evaluate audio quality as perceived by human observers it is thus fundamental to resort to subjective quality assessment. In this paper, a user evaluation based on crowdsourcing (subjective) and the Comparison Category Rating (CCR) method is compared against the DNSMOS, ViSQOL and 3QUEST (objective) metrics. The overall quality scores of three speech enhancement algorithms from real time communications (RTC) are used in the comparison using the P.808 toolkit. Results indicate that while the CCR scale allows participants to identify differences between processed and unprocessed audio samples, two groups of preferences emerge: some users rate positively by focusing on noise suppression processing, while others rate negatively by focusing mainly on speech quality. We further present results on the parameters, size considerations and speaker variations that are critical and should be considered when designing the CCR-based crowdsourcing evaluation.

Pre-processing Blood-Volume-Pulse for In-the-wild Applications

Apr 27, 2023

Abstract:Blood-volume-pulse (BVP) is a biosignal commonly used in applications for non-invasive affect recognition and wearable technology. However, its susceptibility to noise limits its application in real-life settings. This paper revisits BVP processing and proposes standard practices for feature extraction from empirical observations of BVP. We propose a method for improving the use of features in the presence of noise and compare it to a standard signal processing approach of a 4th order Butterworth bandpass filter with cut-off frequencies of 1 Hz and 8 Hz. Our method achieves better results for most time features as well as for a subset of the frequency features. We find that all but one time feature and around half of the frequency features perform better when the noisy parts are known (best case). When the noisy parts are unknown and estimated using a metric of skewness, the proposed method in general performs better than or similarly to the Butterworth bandpass filter, but both methods also fail for a subset of features. Our results can be used to select BVP features that are meaningful under different SNR conditions.
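
The abstract mentions estimating noisy segments with a skewness metric but gives no details. As a loose illustration only, sample skewness over non-overlapping windows could be computed as below. The window layout, the function names, and any threshold used to flag a window as noisy are assumptions and are not taken from the paper.

```python
def skewness(x):
    """Sample skewness: third central moment divided by variance ** 1.5."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    # A constant window has zero variance; report zero skewness for it.
    return 0.0 if m2 == 0 else m3 / m2 ** 1.5


def windowed_skewness(signal, window):
    """Skewness of each non-overlapping window of the given length."""
    return [
        skewness(signal[i:i + window])
        for i in range(0, len(signal) - window + 1, window)
    ]


# Toy signal: a symmetric window followed by one with a large spike.
signal = [1.0, 2.0, 3.0, 0.0, 0.0, 9.0]
per_window = windowed_skewness(signal, window=3)
```

Windows whose skewness deviates strongly from that of clean pulses could then be treated as candidate noisy segments. The deviation that should count as "strong" would have to be chosen empirically.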

Interpretability by design using computer vision for behavioral sensing in child and adolescent psychiatry

Jul 11, 2022

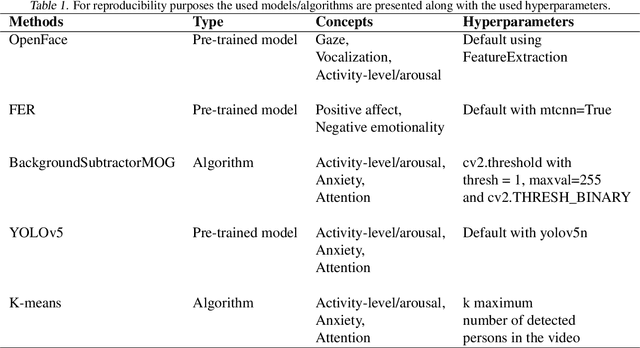

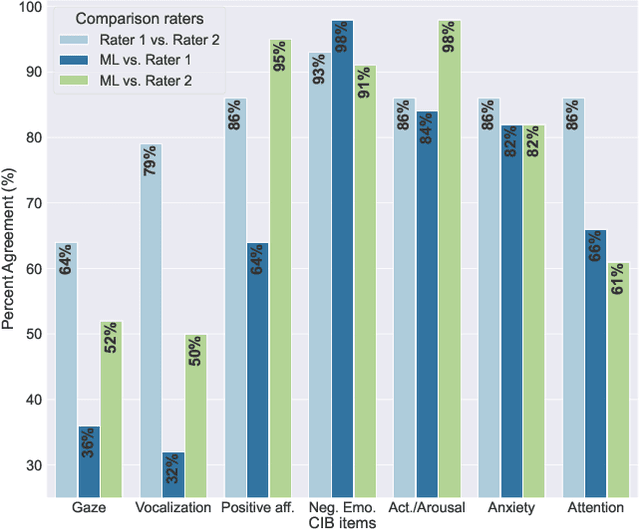

Abstract:Observation is an essential tool for understanding and studying human behavior and mental states. However, coding human behavior is a time-consuming, expensive task, in which reliability can be difficult to achieve and bias is a risk. Machine learning (ML) methods offer ways to improve reliability, decrease cost, and scale up behavioral coding for application in clinical and research settings. Here, we use computer vision to derive behavioral codes or concepts of a gold standard behavioral rating system, offering familiar interpretation for mental health professionals. Features were extracted from videos of clinical diagnostic interviews of children and adolescents with and without obsessive-compulsive disorder. Our computationally-derived ratings were comparable to human expert ratings for negative emotions, activity-level/arousal and anxiety. For the attention and positive affect concepts, our ML ratings performed reasonably. However, results for gaze and vocalization indicate a need for improved data quality or additional data modalities.
